In this post you will discover 3 recipes for penalized regression for the R platform.

You can copy and paste the recipes in this post to get a jump-start on your own problem or to learn and practice with linear regression in R.

Kick-start your project with my new book Machine Learning Mastery With R, including step-by-step tutorials and the R source code files for all examples.

Let’s get started.

Penalized Regression

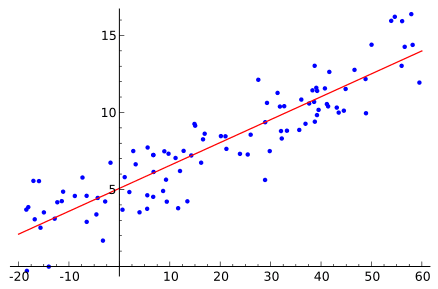

Photo by Bay Area Bias, some rights reserved

Each example in this post uses the longley dataset provided in the datasets package that comes with R. The longley dataset contains 7 economic variables observed yearly from 1947 to 1962. The first six are used as inputs to predict the seventh, the number of people employed.

Ridge Regression

Ridge Regression creates a linear regression model that is penalized with the squared L2-norm, which is the sum of the squared coefficients. This has the effect of shrinking the coefficient values (and the complexity of the model), allowing coefficients with a minor contribution to the response to get close to zero.

```r
# load the package
library(glmnet)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# fit model
fit <- glmnet(x, y, family="gaussian", alpha=0, lambda=0.001)
# summarize the fit
summary(fit)
# make predictions
predictions <- predict(fit, x, type="link")
# summarize accuracy
mse <- mean((y - predictions)^2)
print(mse)
```

Learn about the glmnet function in the glmnet package.


Least Absolute Shrinkage and Selection Operator

Least Absolute Shrinkage and Selection Operator (LASSO) creates a regression model that is penalized with the L1-norm, which is the sum of the absolute values of the coefficients. This has the effect of shrinking coefficient values (and the complexity of the model), allowing some coefficients with a minor contribution to the response to become exactly zero.

```r
# load the package
library(lars)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# fit model
fit <- lars(x, y, type="lasso")
# summarize the fit
summary(fit)
# select a step with a minimum error
best_step <- fit$df[which.min(fit$RSS)]
# make predictions
predictions <- predict(fit, x, s=best_step, type="fit")$fit
# summarize accuracy
mse <- mean((y - predictions)^2)
print(mse)
```

Learn about the lars function in the lars package.

Elastic Net

Elastic Net creates a regression model that is penalized with both the L1-norm and the L2-norm. This has the effect of shrinking coefficients (as in ridge regression) and setting some coefficients to zero (as in LASSO).

```r
# load the package
library(glmnet)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# fit model
fit <- glmnet(x, y, family="gaussian", alpha=0.5, lambda=0.001)
# summarize the fit
summary(fit)
# make predictions
predictions <- predict(fit, x, type="link")
# summarize accuracy
mse <- mean((y - predictions)^2)
print(mse)
```

Learn about the glmnet function in the glmnet package.

Summary

In this post you discovered 3 recipes for penalized regression in R.

Penalization is a powerful method for attribute selection and for improving the accuracy of predictive models. For more information see Chapter 6 of Applied Predictive Modeling by Kuhn and Johnson, which provides an excellent introduction to linear regression with R for beginners.

Nice article, but first and third code are the same 🙂 What’s the difference?

Almost. Note the value of alpha (the elastic net mixing parameter).

A great thing about the glmnet function is that it can do ridge, lasso and a hybrid of both. In the first example, we used glmnet with an alpha of 0, which results in ridge regression (only L2). If alpha were set to 1 it would be lasso (only L1). In the third example, alpha is set to 0.5, which is the elastic net mixture of L1 and L2 at 50% mixing.

I hope that is clearer.
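To make the role of alpha concrete, here is a minimal sketch that fits the same data with alpha set to 0, 0.5 and 1 and prints the coefficients side by side. The names alphas and coefs are just illustrative, and the lambda value is the one used in the recipes above. With a penalty this small the three fits may look very similar; a larger lambda should make the differences easier to see.

```r
# load the package
library(glmnet)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# alpha=0 is ridge, alpha=1 is lasso, values in between are elastic net
alphas <- c(0, 0.5, 1)
# fit one model per alpha and collect the coefficients as columns
coefs <- sapply(alphas, function(a) {
  fit <- glmnet(x, y, family="gaussian", alpha=a, lambda=0.001)
  # coef() returns a sparse one-column matrix, convert it to a named vector
  as.matrix(coef(fit))[, 1]
})
colnames(coefs) <- c("ridge", "elastic_net", "lasso")
# compare the coefficients across the three penalties
print(coefs)
```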

It’s much clearer now. Thanks a lot 🙂

Thanks for the post,

I was wondering if you knew the differences (in computational and statistical performance) between using the lars package and the glmnet package with alpha=1 for performing a LASSO regression?

Thank you for your time and keep up the good work!

Nice article. I want to know how to use R for regression analysis. Could you send the step-by-step method to my e-mail? I already have the R package on my laptop.

Hi Jason, thank you so much for the very clear tutorials,

It may be a silly question to ask, but how do I interpret the goodness-of-fit from the elastic net? I’ve obtained a value after:

```r
# summarize the fit
summary(fit)
# make predictions
predictions <- predict(fit, x, type="link")
# summarize accuracy
rmse <- mean((y - predictions)^2)
print(rmse)
```

but not sure how I should interpret it.

Thanks so much!

I was wondering the same, how do I interpret mse?

Take the square root and the results are in the same units as the original data.
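As a small sketch of that advice, the elastic net recipe can be extended with one extra line that takes the square root of the MSE. The resulting RMSE is in the same units as the Employed column, so it can be read as a typical size of the prediction error on the data the model was fit on.

```r
# load the package
library(glmnet)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# fit model
fit <- glmnet(x, y, family="gaussian", alpha=0.5, lambda=0.001)
# make predictions
predictions <- predict(fit, x, type="link")
# mean squared error, in squared units of the output variable
mse <- mean((y - predictions)^2)
# root mean squared error, in the same units as the output variable
rmse <- sqrt(mse)
print(rmse)
```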

What do ‘longley[,1:6]’ and ‘longley[,7]’ mean?

How can we correctly replace data(longley) with our own CSV data?

It specifies columns in the data.
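For the CSV part of the question, here is a minimal sketch. It assumes your file has the inputs in the first six columns and the output in the seventh, as longley does. The file used here is only a temporary copy of longley so that the example runs on its own; csv_path and dataset are illustrative names, and you would point csv_path at your own file instead.

```r
# load data
data(longley)
# write longley to a temporary CSV file that stands in for your own file
csv_path <- tempfile(fileext=".csv")
write.csv(longley, csv_path, row.names=FALSE)
# load the CSV file into a data frame
dataset <- read.csv(csv_path, header=TRUE)
# inputs: all rows, columns 1 to 6 (adjust to your own column layout)
x <- as.matrix(dataset[,1:6])
# output: all rows, column 7
y <- as.matrix(dataset[,7])
# confirm the shapes before fitting a model
print(dim(x))
print(dim(y))
```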

Which columns are specified with ‘longley[,1:6]’ for example?

When I click ‘x’ in the inspector of R Studio it gives me a table with headers:

GNP.deflator

GNP

Unemployed

Armed.Forces

Population

Year

When I click y it gives me a table with header “V1”

Could it be described in words like ‘longley[datastart,dataend:datarows]’?

Is it a kind of subsetting?

Alternatively if it is meant to be

longley[,1:6] = longley[,col 1 to col 6]

and

longley[,7] = longley[,col 7]

what is the part before the ‘,’ ? Any wildcard?

got it…

dataset[10:12,1:3] = dataset[startRow:endRow,startColumn:endColumn]

dataset[,1:3] = dataset[allRows,startColumn:endColumn]
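The same row and column indexing can be tried directly on longley. This is a small sketch; y_by_name is an illustrative name. Note that longley[,7] returns a plain vector with no column name, which is presumably why the viewer shows the header “V1” once it has been passed through as.matrix.

```r
# load data
data(longley)
# rows 1 to 3, columns 1 to 2
print(longley[1:3, 1:2])
# all rows (nothing before the comma), columns 1 to 6
x <- longley[, 1:6]
# all rows, column 7 only (returns a vector)
y <- longley[, 7]
# the same column selected by name instead of position
y_by_name <- longley[, "Employed"]
print(dim(x))
print(length(y))
```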

Is there a way in R Studio where I can easily see how the original table of ‘data(longley)’ is structured (all headers)?

I do not use RStudio and cannot give you advice about it sorry.

In RStudio you can use View(df) and it shows the dataframe/tibble in the Viewer

View(longley)

Alternatively, with the dplyr package you can use glimpse(df) to get a list of the column names and their data types.

dplyr::glimpse(longley)
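If you prefer not to depend on RStudio or dplyr, base R offers similar views. A short sketch using only functions that ship with R:

```r
# load data
data(longley)
# column names only
print(names(longley))
# column names, types and the first few values of each column
str(longley)
# first rows of the table
print(head(longley))
```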

How to predict one step of unseen data in the above code?

Do we have to use training and testdata to predict unseen data with glmnet?

And if so, should we use the last observations of the prediction with ‘test_data’ as newx for a prediction of new unseen data?

You can use any test harness you like to estimate the skill of the model on unseen data.
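One simple harness is a train/test split. The sketch below holds out the last 4 years as unseen data; the 12/4 split and the names train_idx, test_idx and one_step are arbitrary choices for illustration, not a recommendation. New rows are passed to predict through the newx argument, which must be a matrix with the same columns as the training inputs.

```r
# load the package
library(glmnet)
# load data
data(longley)
x <- as.matrix(longley[,1:6])
y <- as.matrix(longley[,7])
# split into training rows and held-out test rows
train_idx <- 1:12
test_idx <- 13:16
# fit model on the training rows only
fit <- glmnet(x[train_idx,], y[train_idx,], family="gaussian", alpha=0.5, lambda=0.001)
# make predictions on the held-out rows
predictions <- predict(fit, newx=x[test_idx,], type="link")
# summarize accuracy on the held-out rows
mse <- mean((y[test_idx,] - predictions)^2)
print(mse)
# predict a single new row, drop=FALSE keeps it as a 1-row matrix
one_step <- predict(fit, newx=x[16, , drop=FALSE], type="link")
print(one_step)
```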

Internally, what method is used: least squares or maximum likelihood?

I would recommend reading the package documentation for the specific methods.

What if I have to use a proposed penalty rather than the built-in penalty of LASSO or Elastic Net? How can it be used?

Good question, you might need to implement it yourself.

Hi Jason,

I have started your Machine Learning Course, which seems to be very useful. I have a question regarding an analysis I am planning to conduct. I have briefly described my research setting and questions about R packages. If you can help me with this, that would be wonderful.

I have been trying to analyze high-dimensional data (p exceeds n) with a limited number of observations (n=50). I want to use the Lasso method for variable selection and parameter estimation to develop a prediction model. As my data are count observations, the model has to be Poisson or Negative Binomial. I have explored several R packages and papers and finally decided to use Glmnet. Now I have some questions:

I know the “Glmnet” and “Penalized” packages use different algorithms. As I saw, “Mpath” uses coordinate descent like Glmnet. Do “Mpath” and “Glmnet” provide comparable results? The only reason I am interested in “Mpath” is that it allows NB regression, which “Glmnet” doesn’t.

A few of the packages allow post-selection inference (p-values and confidence intervals), for example “selectiveInference” and “hdi”. However, I couldn’t find anything for Poisson or NB models. Is there any package that can help me?

Thanks in advance.

There might be, I’m not sure off the cuff sorry.

Perhaps try posting to the R user list?

As I understand from glmnet package description (https://cran.r-project.org/web/packages/glmnet/glmnet.pdf), it does not fit the ridge regression, only lasso or elastic net – I guess it is because of penalty definition (it never reduces to ridge penalty definition)

With elastic net you can do ridge, lasso and both (i.e. elastic net). In glmnet, setting alpha=0 gives ridge and alpha=1 gives lasso.

is Lasso a good method to use for feature selection for high dimensional dataset for a regression problem ML algorithm?

i.e. i’m trying to determine the best algorithm i can use to select the best features for my output variable, which is continuous, so i’ve been using Lasso, i’m just not sure how effective it is compared to others…any suggestions for feature selection methods for regression-focused ML problems?

thanks,

It can be, try it on your problem and see.

Generally, I recommend testing a suite of “views” of your problem with different algorithms in order to discover the best combination. This post might help:

https://machinelearningmastery.com/how-to-get-the-most-from-your-machine-learning-data/