Not all classification predictive models support multi-class classification.

Algorithms such as the Perceptron, Logistic Regression, and Support Vector Machines were designed for binary classification and do not natively support classification tasks with more than two classes.

One approach for using binary classification algorithms for multi-class classification problems is to split the multi-class classification dataset into multiple binary classification datasets and fit a binary classification model on each. Two different examples of this approach are the One-vs-Rest and One-vs-One strategies.

In this tutorial, you will discover One-vs-Rest and One-vs-One strategies for multi-class classification.

After completing this tutorial, you will know:

- Binary classification models like logistic regression and SVM do not support multi-class classification natively and require meta-strategies.

- The One-vs-Rest strategy splits a multi-class classification into one binary classification problem per class.

- The One-vs-One strategy splits a multi-class classification into one binary classification problem per pair of classes.


Let’s get started.

How to Use One-vs-Rest and One-vs-One for Multi-Class Classification

Photo by Espen Sundve, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Binary Classifiers for Multi-Class Classification

- One-Vs-Rest for Multi-Class Classification

- One-Vs-One for Multi-Class Classification

Binary Classifiers for Multi-Class Classification

Classification is a predictive modeling problem that involves assigning a class label to an example.

Binary classification refers to tasks where each example is assigned exactly one of two classes. Multi-class classification refers to tasks where each example is assigned exactly one of more than two classes.

- Binary Classification: Classification tasks with two classes.

- Multi-class Classification: Classification tasks with more than two classes.

Some algorithms are designed for binary classification problems. Examples include:

- Logistic Regression

- Perceptron

- Support Vector Machines

As such, they cannot be used for multi-class classification tasks, at least not directly.

Instead, heuristic methods can be used to split a multi-class classification problem into multiple binary classification datasets and train a binary classification model on each.

Two examples of these heuristic methods include:

- One-vs-Rest (OvR)

- One-vs-One (OvO)

Let’s take a closer look at each.

One-Vs-Rest for Multi-Class Classification

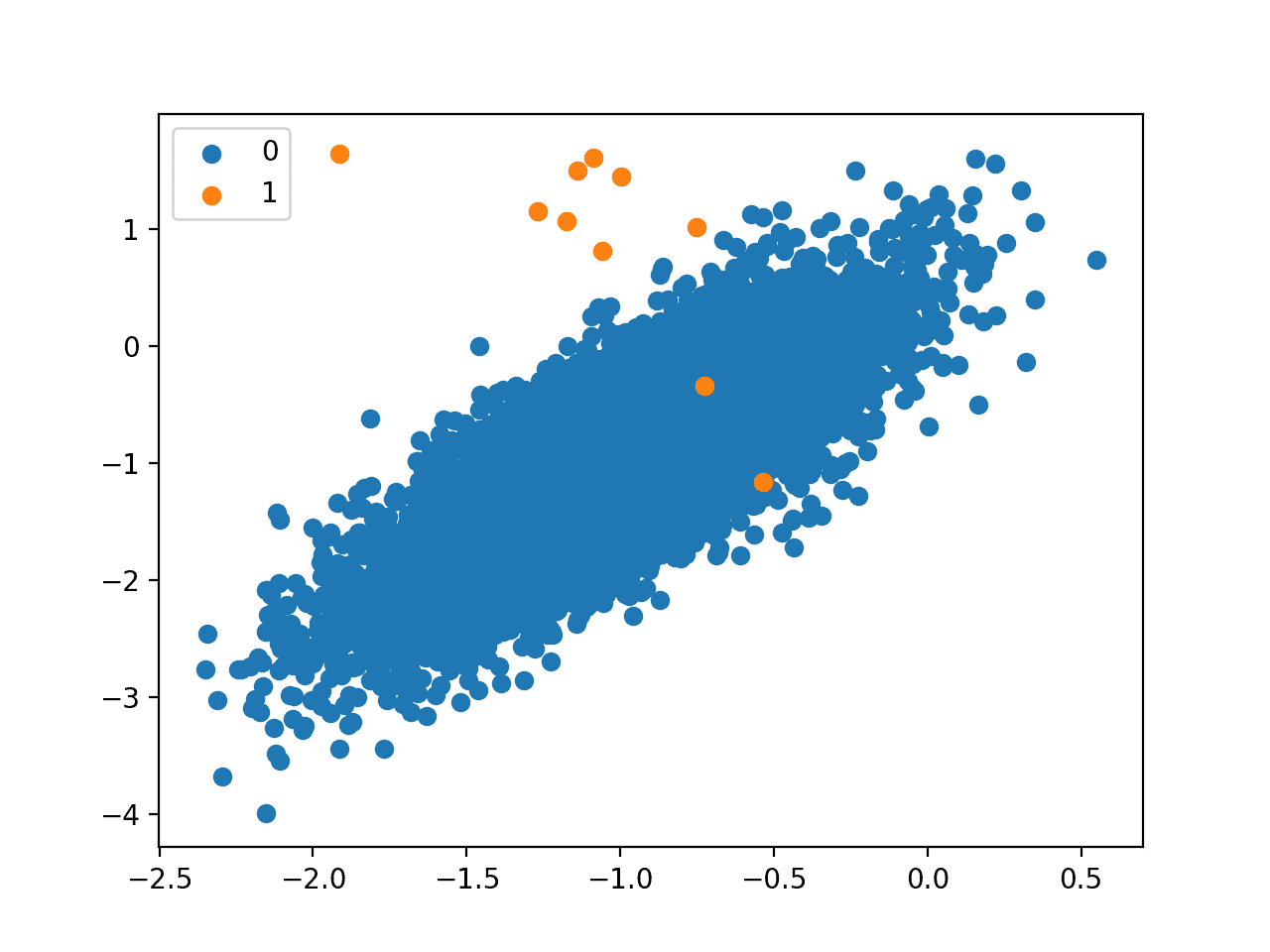

One-vs-rest (OvR for short, also referred to as One-vs-All or OvA) is a heuristic method for using binary classification algorithms for multi-class classification.

It involves splitting the multi-class dataset into multiple binary classification problems. A binary classifier is then trained on each binary classification problem and predictions are made using the model that is the most confident.

For example, consider a multi-class classification problem with examples for each of the classes ‘red,’ ‘blue,’ and ‘green.’ This could be divided into three binary classification datasets as follows:

- Binary Classification Problem 1: red vs [blue, green]

- Binary Classification Problem 2: blue vs [red, green]

- Binary Classification Problem 3: green vs [red, blue]

A possible downside of this approach is that it requires one model to be created for each class. For example, three classes require three models. This could be an issue for large datasets (e.g. millions of rows), slow models (e.g. neural networks), or very large numbers of classes (e.g. hundreds of classes).

The obvious approach is to use a one-versus-the-rest approach (also called one-vs-all), in which we train C binary classifiers, f_c(x), where the data from class c is treated as positive, and the data from all the other classes is treated as negative.

— Page 503, Machine Learning: A Probabilistic Perspective, 2012.

This approach requires that each model predicts a class membership probability or a probability-like score. The argmax of these scores (class index with the largest score) is then used to predict a class.
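To make the mechanics concrete, here is a minimal from-scratch sketch of one-vs-rest, assuming scikit-learn's LogisticRegression as the binary model and the same synthetic 3-class dataset used later in this tutorial. It fits one binary model per class and predicts the argmax of the per-class positive-class probabilities; it illustrates the idea and is not scikit-learn's internal implementation.

```python
# from-scratch one-vs-rest sketch: one binary model per class, argmax over scores
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# define a 3-class dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
classes = np.unique(y)

# fit one binary model per class: class c is positive (1), all others negative (0)
models = []
for c in classes:
    y_binary = (y == c).astype(int)
    models.append(LogisticRegression().fit(X, y_binary))

# column c holds model c's probability that each example belongs to class c
scores = np.column_stack([m.predict_proba(X)[:, 1] for m in models])
print(scores.shape)  # (1000, 3)

# the class index with the largest score is the prediction
yhat = classes[np.argmax(scores, axis=1)]
print('training accuracy: %.3f' % np.mean(yhat == y))
```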

This approach is commonly used for algorithms that naturally predict numerical class membership probability or score, such as:

- Logistic Regression

- Perceptron

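As such, the scikit-learn implementations of these algorithms support the OvR strategy for multi-class classification. The Perceptron class uses it by default; note, however, that LogisticRegression in scikit-learn 0.22 and later defaults to a multinomial (softmax) formulation for multi-class problems unless the liblinear solver is used.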

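We can demonstrate this with an example on a 3-class classification problem using the LogisticRegression algorithm. The strategy for handling multi-class classification can be set via the “multi_class” argument, which can be set to “ovr” for the one-vs-rest strategy. Note that this argument is deprecated as of scikit-learn 1.5; the recommended alternative is to wrap the model in the OneVsRestClassifier class described below.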

The complete example of fitting a logistic regression model for multi-class classification using the built-in one-vs-rest strategy is listed below.

```python
# logistic regression for multi-class classification using built-in one-vs-rest
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, n_classes=3, random_state=1)
# define model
model = LogisticRegression(multi_class='ovr')
# fit model
model.fit(X, y)
# make predictions
yhat = model.predict(X)
```

The scikit-learn library also provides a separate OneVsRestClassifier class that allows the one-vs-rest strategy to be used with any classifier.

This class can be used to apply a binary classifier like Logistic Regression or Perceptron to multi-class classification, or even to wrap other classifiers that natively support multi-class classification.

It is easy to use: the classifier to be used for binary classification is simply provided to the OneVsRestClassifier as an argument.

The example below demonstrates how to use the OneVsRestClassifier class with a LogisticRegression class used as the binary classification model.

```python
# logistic regression for multi-class classification using one-vs-rest
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, n_classes=3, random_state=1)
# define model
model = LogisticRegression()
# define the ovr strategy
ovr = OneVsRestClassifier(model)
# fit model
ovr.fit(X, y)
# make predictions
yhat = ovr.predict(X)
```
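To illustrate wrapping a classifier that already handles multiple classes natively, here is a minimal sketch that passes a DecisionTreeClassifier to OneVsRestClassifier. The choice of decision tree is only for illustration; the wrapper still fits one binary tree per class, which can be inspected through the fitted estimators_ attribute.

```python
# one-vs-rest wrapper around a classifier that is natively multi-class
from sklearn.datasets import make_classification
from sklearn.multiclass import OneVsRestClassifier
from sklearn.tree import DecisionTreeClassifier

# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
# the tree is trained three times, once per "class vs. rest" problem
ovr = OneVsRestClassifier(DecisionTreeClassifier(random_state=1))
ovr.fit(X, y)
print(len(ovr.estimators_))  # 3
yhat = ovr.predict(X)
```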


One-Vs-One for Multi-Class Classification

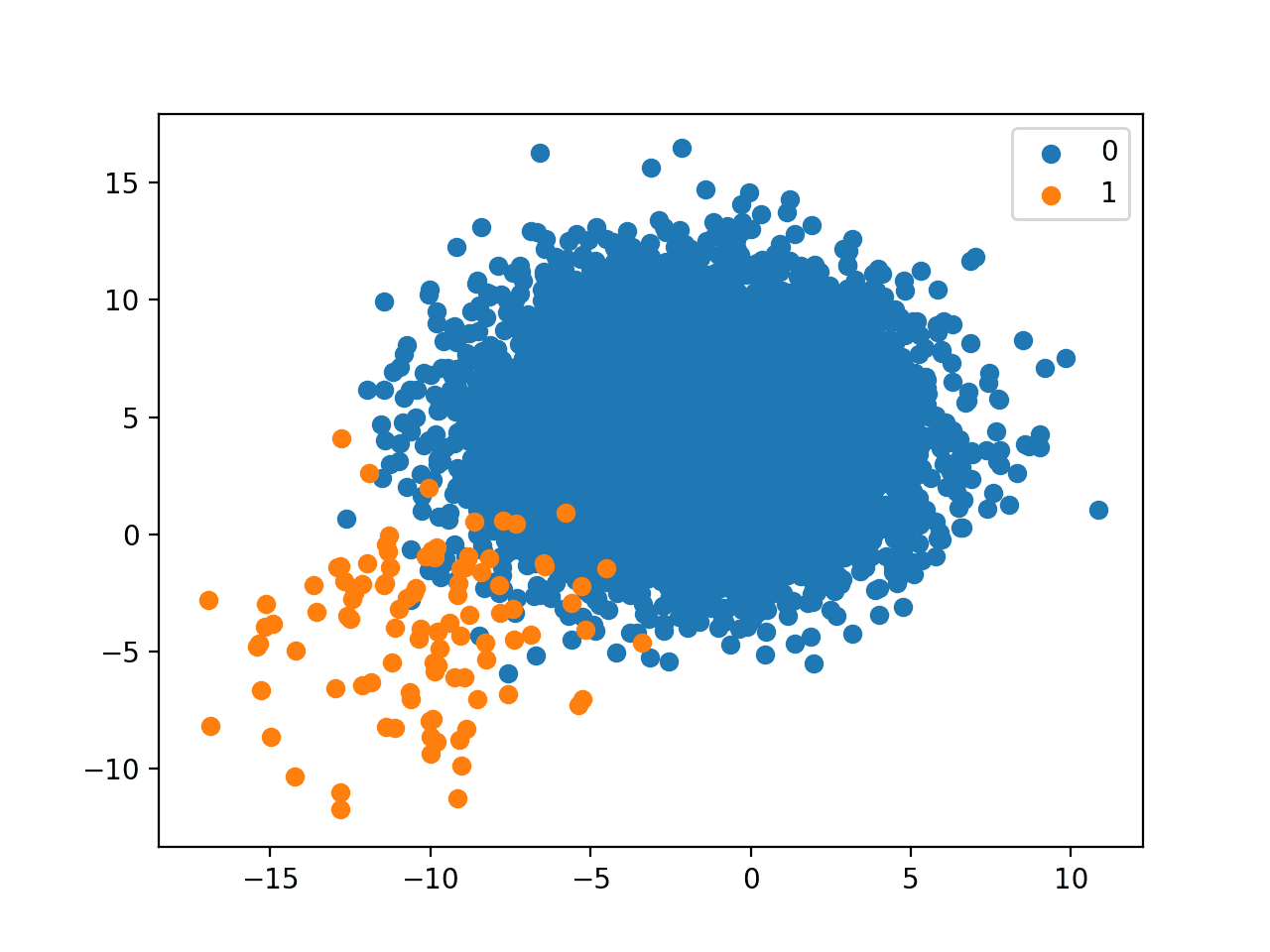

One-vs-One (OvO for short) is another heuristic method for using binary classification algorithms for multi-class classification.

Like one-vs-rest, one-vs-one splits a multi-class classification dataset into binary classification problems. Unlike one-vs-rest, which splits it into one binary dataset for each class, the one-vs-one approach splits the dataset into one binary dataset for each pair of classes.

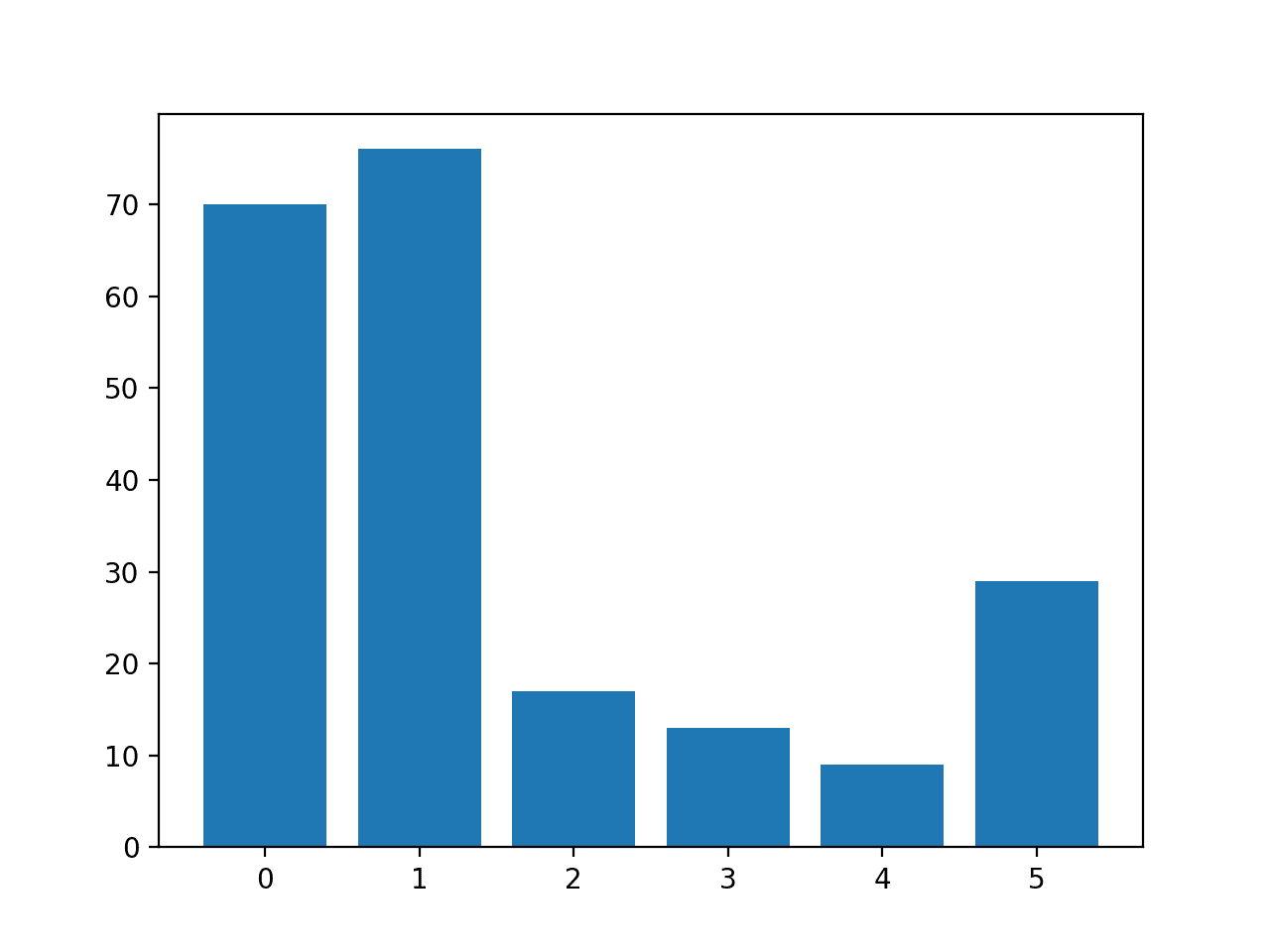

For example, consider a multi-class classification problem with four classes: ‘red,’ ‘blue,’ ‘green,’ and ‘yellow.’ This could be divided into six binary classification datasets as follows:

- Binary Classification Problem 1: red vs. blue

- Binary Classification Problem 2: red vs. green

- Binary Classification Problem 3: red vs. yellow

- Binary Classification Problem 4: blue vs. green

- Binary Classification Problem 5: blue vs. yellow

- Binary Classification Problem 6: green vs. yellow

This yields significantly more datasets, and in turn more models, than the one-vs-rest strategy described in the previous section.

The formula for calculating the number of binary datasets, and in turn, models, is as follows:

- (NumClasses * (NumClasses - 1)) / 2

We can see that for four classes, this gives us the expected value of six binary classification problems:

- (NumClasses * (NumClasses - 1)) / 2

- (4 * (4 - 1)) / 2

- (4 * 3) / 2

- 12 / 2

- 6
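The same count can be checked in code. This small sketch enumerates every unordered pair of classes with itertools.combinations, which happens to reproduce the six problems listed above in the same order, and compares the result with the formula. The helper name num_ovo_models is illustrative only.

```python
# enumerate one-vs-one problems and check the pair-count formula
from itertools import combinations

def num_ovo_models(n_classes):
    # one binary model per unordered pair of classes: n * (n - 1) / 2
    return n_classes * (n_classes - 1) // 2

classes = ['red', 'blue', 'green', 'yellow']
pairs = list(combinations(classes, 2))
for i, (a, b) in enumerate(pairs, start=1):
    print(f'Binary Classification Problem {i}: {a} vs. {b}')

print(num_ovo_models(len(classes)))  # 6 models for one-vs-one
print(len(classes))                  # 4 models for one-vs-rest
```

For comparison, 100 classes would need 4,950 one-vs-one models but only 100 one-vs-rest models.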

Each binary classification model predicts one class label, and the class label with the most predictions or votes across all models is predicted by the one-vs-one strategy.

An alternative is to introduce K(K − 1)/2 binary discriminant functions, one for every possible pair of classes. This is known as a one-versus-one classifier. Each point is then classified according to a majority vote amongst the discriminant functions.

— Page 183, Pattern Recognition and Machine Learning, 2006.
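The majority vote can be sketched from scratch as follows, assuming LogisticRegression as the binary model and the tutorial's synthetic 3-class dataset. Each pairwise model is trained only on the rows of its two classes, every model votes on every example, and ties are broken here by the lowest class index (an arbitrary choice made for this sketch).

```python
# from-scratch one-vs-one sketch: one model per pair of classes, majority vote
from itertools import combinations
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
classes = np.unique(y)

# fit one binary model per pair, using only the rows belonging to that pair
pair_models = {}
for a, b in combinations(classes, 2):
    mask = (y == a) | (y == b)
    pair_models[(a, b)] = LogisticRegression().fit(X[mask], y[mask])

# every pairwise model casts one vote per example for either a or b
n = X.shape[0]
votes = np.zeros((n, len(classes)), dtype=int)
for (a, b), model in pair_models.items():
    winner = model.predict(X)
    votes[np.arange(n), np.searchsorted(classes, winner)] += 1

# the class with the most votes wins (np.argmax picks the lowest index on ties)
yhat = classes[np.argmax(votes, axis=1)]
print('training accuracy: %.3f' % np.mean(yhat == y))
```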

Similarly, if the binary classification models predict a numerical class membership, such as a probability, then the argmax of the sum of the scores (the class with the largest summed score) is predicted as the class label.
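A small worked sketch of the summed-score rule for a single example, using made-up pairwise probabilities (the numbers are hypothetical and only illustrate the arithmetic). Each pairwise model gives its first class a score p and its second class 1 - p; the scores are summed per class and the argmax is taken.

```python
# summed-score one-vs-one decision for one example (hypothetical numbers)
import numpy as np

classes = ['red', 'blue', 'green']
# probability that the example belongs to the FIRST class of each pair
pairwise = {('red', 'blue'): 0.7, ('red', 'green'): 0.4, ('blue', 'green'): 0.45}

totals = np.zeros(len(classes))
for (a, b), p_a in pairwise.items():
    totals[classes.index(a)] += p_a        # score for the first class
    totals[classes.index(b)] += 1.0 - p_a  # complementary score for the second

winner = classes[int(np.argmax(totals))]
print(totals)  # red ~1.10, blue ~0.75, green ~1.15
print(winner)  # green
```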

Classically, this approach is suggested for support vector machines (SVM) and related kernel-based algorithms. This is believed to be because the training cost of kernel methods grows faster than the size of the training dataset, so fitting each model on a smaller subset of the training data may counter this effect.

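The support vector machine implementation in scikit-learn is provided by the SVC class, which always uses the one-vs-one method internally for multi-class classification problems. The “decision_function_shape” argument can be set to ‘ovo’ to make decision_function() return the raw one-vs-one scores (one column per pair of classes); the default, ‘ovr’, aggregates them into one column per class. The argument does not change how the model is trained.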

The example below demonstrates SVM for multi-class classification using the one-vs-one method.

```python
# SVM for multi-class classification using built-in one-vs-one
from sklearn.datasets import make_classification
from sklearn.svm import SVC
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, n_classes=3, random_state=1)
# define model
model = SVC(decision_function_shape='ovo')
# fit model
model.fit(X, y)
# make predictions
yhat = model.predict(X)
```
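The effect of decision_function_shape is easiest to see in the output shapes. This sketch assumes a 4-class variant of the tutorial's dataset, because with 3 classes both shapes happen to have 3 columns. It shows ‘ovo’ returning one column per pair of classes and ‘ovr’ one column per class, while predict() returns the same labels in both cases because training is one-vs-one either way.

```python
# compare SVC decision_function shapes for 'ovo' and 'ovr'
from sklearn.datasets import make_classification
from sklearn.svm import SVC

# 4 classes, so the shapes differ: 6 pairs vs. 4 classes
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=4, random_state=1)

ovo_svc = SVC(decision_function_shape='ovo').fit(X, y)
ovr_svc = SVC(decision_function_shape='ovr').fit(X, y)

print(ovo_svc.decision_function(X).shape)  # (1000, 6)
print(ovr_svc.decision_function(X).shape)  # (1000, 4)
# the underlying pairwise models are identical, so the labels agree
print((ovo_svc.predict(X) == ovr_svc.predict(X)).all())
```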

The scikit-learn library also provides a separate OneVsOneClassifier class that allows the one-vs-one strategy to be used with any classifier.

This class can be used with a binary classifier like SVM, Logistic Regression or Perceptron for multi-class classification, or even other classifiers that natively support multi-class classification.

It is easy to use: the classifier to be used for binary classification is simply provided to the OneVsOneClassifier as an argument.

The example below demonstrates how to use the OneVsOneClassifier class with an SVC class used as the binary classification model.

```python
# SVM for multi-class classification using one-vs-one
from sklearn.datasets import make_classification
from sklearn.svm import SVC
from sklearn.multiclass import OneVsOneClassifier
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, n_classes=3, random_state=1)
# define model
model = SVC()
# define ovo strategy
ovo = OneVsOneClassifier(model)
# fit model
ovo.fit(X, y)
# make predictions
yhat = ovo.predict(X)
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Pattern Recognition and Machine Learning, 2006.

- Machine Learning: A Probabilistic Perspective, 2012.

APIs

- Multiclass and multilabel algorithms, scikit-learn API.

- sklearn.multiclass.OneVsRestClassifier API.

- sklearn.multiclass.OneVsOneClassifier API.


Summary

In this tutorial, you discovered One-vs-Rest and One-vs-One strategies for multi-class classification.

Specifically, you learned:

- Binary classification models like logistic regression and SVM do not support multi-class classification natively and require meta-strategies.

- The One-vs-Rest strategy splits a multi-class classification into one binary classification problem per class.

- The One-vs-One strategy splits a multi-class classification into one binary classification problem per pair of classes.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Is it possible to access each individual classifier that has been created for each case? In some cases, it is necessary to see and understand the results individually.

Yes, I believe they are a property on the fit model.
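A minimal sketch of what that looks like, assuming the tutorial's dataset and LogisticRegression as the binary model: both OneVsRestClassifier and OneVsOneClassifier store their fitted binary models in the estimators_ list, and each one can be inspected or used on its own.

```python
# inspect the individual binary models inside the ovr / ovo wrappers
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)

ovr = OneVsRestClassifier(LogisticRegression()).fit(X, y)
ovo = OneVsOneClassifier(LogisticRegression()).fit(X, y)

# one model per class for OvR, one per pair of classes for OvO
print(len(ovr.estimators_), len(ovo.estimators_))  # 3 3

# the first OvR model on its own: "class 0 vs. rest"
first = ovr.estimators_[0]
print(first.coef_.shape)  # (1, 10)
p_class0 = first.predict_proba(X)[:, 1]  # this model's P(class 0) per row
```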

Knowledgeful Information

Thanks!

Excellent article. My question is how to decide which one to use when we have to build a predictive model with a specific input dataset. Should we try both methods and keep the one with the best results in terms of, e.g., kappa, F1, etc. of the classification table? Does the literature propose a specific choice if we have an imbalanced training dataset?

Thanks!

Generally, I would recommend testing both and other methods and use the technique that performs best on your specific dataset.

Regarding metrics for imbalanced classification, see this tutorial on how to choose:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/
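Testing both strategies can be sketched with repeated stratified k-fold cross-validation. This assumes macro-averaged F1 as the metric (one reasonable choice among several) and LogisticRegression as the binary model; swap in your own dataset, model, and metric.

```python
# compare one-vs-rest and one-vs-one with repeated stratified k-fold CV
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
strategies = {
    'ovr': OneVsRestClassifier(LogisticRegression()),
    'ovo': OneVsOneClassifier(LogisticRegression()),
}
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

results = {}
for name, model in strategies.items():
    scores = cross_val_score(model, X, y, scoring='f1_macro', cv=cv)
    results[name] = scores
    print(f'{name}: mean macro F1 = {np.mean(scores):.3f} ({np.std(scores):.3f})')
```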

I believed multinomial logistic regression would solve multi-label classification.

If we employ one-vs-rest or one-vs-one, wouldn't it take more time in model building?

Agreed on both points.

Thank you very much

Which type of machine learning would you recommend for classifying faults in PV solar systems?

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/what-algorithm-config-should-i-use

Great article as always.

1. How do you choose between setting parameters in the model or using the separate ovr/ovo class?

2. Do you have any links to examples with output using these methods? Would you suggest a real-world dataset to work with to show the value here?

Thanks!

Thanks!

What do you mean model vs separate class? You mean as in logistic regression? In that case, probably better to use the built-in case to avoid introducing bugs.

I’m sure I have examples of LR on multi-class classification. It is not about value, it is about being able to use algorithms on problems where they could not be used before. That is valuable if those algorithms are as skillful as, or more skillful than, other methods tried on the same problem.

Excellent article.

Do you perhaps have a similar article where you use R.

Thanks.

Sorry, I do not.

thanks for the explanation. I do have a question:

XGBoost is internally a binary classifier. Therefore, if we choose “multi:softprob” as the objective function in xgboost, it will create n forests, one for each class, right? So we don’t need to build an xgboost classifier with “binary:logistic” and wrap it with OneVsRestClassifier, right?

Not quite, each tree makes a multi-class prediction directly in xgboost.

Thanks for your answer. I just want to confirm my understanding with you. The author of xgboost says in:

https://github.com/dmlc/xgboost/issues/3655

So, each class has a binary forest; however, they are trained based on a multi-class cross-entropy loss. So it seems that xgboost does build binary classifiers, but does not train them on a binary loss.

Then my question is about logistic regression in sklearn: if we set the multi_class parameter to ovr, which loss function does it use? If it also uses the multi-class cross-entropy loss function, then what is the meaning here? We can train N 1-by-m vectors for N binary classifiers, or we can train an N-by-m matrix to directly get the softmax result.

Fascinating. Thanks.

You can train a multinomial logistic regression, e.g. true multi-class. Or you can use binary cross entropy and fit multiple OvR models, which is what sklearn does I believe.

Very nice article.

1) Is it possible to use this with a multilayer perceptron?

Thanks.

Perhaps. I don’t have an example, sorry.
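A minimal sketch, assuming scikit-learn's MLPClassifier with illustrative, untuned hyperparameters. MLPClassifier already supports multi-class problems natively through a softmax output, so wrapping it is optional; OneVsRestClassifier simply trains one small network per "class vs. rest" problem.

```python
# sketch: a multilayer perceptron inside the one-vs-rest strategy
from sklearn.datasets import make_classification
from sklearn.multiclass import OneVsRestClassifier
from sklearn.neural_network import MLPClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
# illustrative hyperparameters, not tuned for this dataset
mlp = MLPClassifier(hidden_layer_sizes=(20,), max_iter=500, random_state=1)
ovr = OneVsRestClassifier(mlp)
ovr.fit(X, y)
yhat = ovr.predict(X)
print(len(ovr.estimators_))  # 3 binary networks
```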

Hello Jason, thank you for your article.

My name is Isak and I’m from Indonesia. So, sorry for my bad English. I want to ask about the code on these lines:

```python
# define model
model = SVC()
# define ovo strategy
ovo = OneVsOneClassifier(model)
```

I have read sklearn documentation that explains two things below:

=== For SVC() ===

The multiclass support is handled according to a one-vs-one scheme.

=== For LinearSVC() ===

This class supports both dense and sparse input and the multiclass support is handled according to a one-vs-the-rest scheme.

My Question:

1. SVC already supports multi-class classification with the OvO approach. Why use the “OneVsOneClassifier” module for SVC()?

2. I am currently working on my final project on multiclass classification using the SVM method with the One-vs-All approach. Is it possible to replace “OneVsOneClassifier” with “OneVsRestClassifier” on SVC()? Or should I use the “OneVsRestClassifier” module on LinearSVC()?

Thank you…

Correct. I do it explicitly here as a demonstration. You can adapt it to use any method you like.

Yes, try it!

Nice Article! My two cents:

“decision_function_shape{‘ovo’, ‘ovr’}, default=’ovr’

Whether to return a one-vs-rest (‘ovr’) decision function of shape (n_samples, n_classes) as all other classifiers, or the original one-vs-one (‘ovo’) decision function of libsvm which has shape (n_samples, n_classes * (n_classes – 1) / 2). However, one-vs-one (‘ovo’) is always used as multi-class strategy. The parameter is ignored for binary classification.”

from https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html#sklearn.svm.SVC

Thus, for SVM ovo is always used for multi class classfication, and this parameter just decides how the decision function looks like, don’t you agree?

Thanks for the note.

Thanks for this wonderful article, Very well explained different OvO and OvR methods

Thanks!

I presume that one-vs-rest creates, in your case, 3 models: the first where it is either 1 for red and 0 for anything else, then 1 for blue and 0 for anything else, etc. It then predicts by running all three models on a row and taking the highest probability. What if you have a problem involving ‘races’? Here the one is a runner and the rest are the other runners in the race. One could make all races have an equal number of runners by padding, but my question is whether there is a neat library routine or parameter to handle this. Can it be made to perform one-vs-rest if the data has a raceId and a runnerId field?

Yes, padding, but it does not sound like a good fit for that type of problem.

Any tracking of row to a subject (e.g. an id) must happen in the application (code around the model), not in the predictive model.

This may help:

https://machinelearningmastery.com/how-to-connect-model-input-data-with-predictions-for-machine-learning/

Can we use binary classification for multi-class classification?

Yes, the above techniques show how.

This caught my attention:

“This approach requires that each model predicts a class membership probability or a probability-like score. The argmax of these scores (class index with the largest score) is then used to predict a class.”

I’ve implemented a toy AI tool myself, and what I do instead is compare the scores of each model (OvR) that says “yes, that’s the class”. So if:

model “RED” has training score 0.6 and says YES

model “BLUE” has training score 0.7 and says NO

model “GREEN” has training score 0.65 says YES

then I assign GREEN label.

So it’s not directly “class membership probability” but more like “probability that model is correct”.

What do you think about this approach?

Nice. If it works well/better than other approaches then use it.

Hey Jason. Interesting concepts. Does each methodology you described compute a probability score for each class? In other words, does each class in the test dataset get a probability value assigned based on the training data? What I am getting at is the difference between, say, a random forest, where only the highest value is assigned to one of the three classes and the others are 0, and your description, which seems to suggest that not only is the class with the highest probability identified but also the classes with lower probability.

Some models will natively predict a probability, e.g. logistic regression.

This is not related to using binary classification models for multi-class classification problems as described above. The topics are orthogonal.

I think I don’t really understand OvO. If I have three classes A, B, C, then I have pairs:

A vs B, A vs C, B vs C,

Let’s say we want to use the model for class C; then we need to choose between A vs C and B vs C, right? But both of them flag C as the negative observation, so are we choosing the one with the higher number of negatives, not positives (not sure if this vote is just the higher number of positives)?

You predict with all 3 models and the label with the most support wins/is predicted.

Hey Jason, great article. Can you describe how OvO works on a test dataset? How does the voting work?

The label with the most “votes” is predicted as described here:

https://scikit-learn.org/stable/modules/generated/sklearn.multiclass.OneVsOneClassifier.html#sklearn.multiclass.OneVsOneClassifier.predict

Hi Jason,

Why do we even use One vs Rest and why don’t we train our model on k classes simply and what trouble does it cause if we use k-classes instead of creating a binary class out of every class?

And how does One Vs Rest solve the problem?

Please Help.

Thank You

Jimmy

It may work well or best for a specific binary classification model and dataset.

The way it solves the problem is described in the above tutorial.

Hi Jason, very interesting article.

I wanted to ask you a question.

In a multiclass classification problem (3 classes), how do I determine which classifier works best between multiclass classifiers (such as naive bayes or mln) and svm classifier (one vs one) which is binary??

Generally, you would select a metric and evaluate a suite of models and model configurations until you find one that performs best.

Hi Dr Jason, Thank you very much for the interesting topic.

Suppose I need to compare the performance of three classifiers using the one-vs-one method. I chose sensitivity and accuracy as metrics. For each pair of classes, I will produce my metrics (sensitivity and accuracy). How do I estimate the overall metric for the classifier?

For example, I have a three-class problem with balanced classes; for each classifier I will have three values for sensitivity and three values for accuracy, one for each pair of classes. How do I estimate the overall accuracy and overall sensitivity for the classifier?

Can I just average them, or is there another way?

Thanks

Perhaps you can use repeated k-fold cv to evaluate each method and compare the mean score.
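One way to sketch this, assuming OneVsOneClassifier(SVC()) and the tutorial's dataset: rather than averaging the metrics of the individual pairwise models, score the final combined classifier with cross_validate. Overall sensitivity can then be summarized as macro-averaged recall, the unweighted mean of the per-class recall values (an assumption about which summary suits your study).

```python
# overall accuracy and macro-averaged sensitivity for a one-vs-one classifier
from sklearn.datasets import make_classification
from sklearn.model_selection import RepeatedStratifiedKFold, cross_validate
from sklearn.multiclass import OneVsOneClassifier
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
model = OneVsOneClassifier(SVC())
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
# 'recall_macro' averages the per-class recall (sensitivity) with equal weight
results = cross_validate(model, X, y, cv=cv, scoring=['accuracy', 'recall_macro'])
print(f"accuracy: {results['test_accuracy'].mean():.3f}")
print(f"macro sensitivity: {results['test_recall_macro'].mean():.3f}")
```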

Hi Jason, very interesting article. I need your help. I have a dataset which has 11 classes, and I am using an SVM classifier for multiclass classification, but my accuracy is not good. When I perform binary classification, it gives me good accuracy. There is something wrong with the multiclass classifier. Please help me.

Thanks.

Perhaps SVM is no good for your data.

Perhaps try a different model?

Perhaps try a different data preparation?

Hi Dr. Brownlee, I was wondering how “.predict_proba” works with ovr, since ovr uses multiple independent binary classifiers… I actually tried the example above and got 3 probabilities that sum to 1, just like the multi-class model.

Not sure off the cuff, but here is the API:

https://scikit-learn.org/stable/modules/generated/sklearn.multiclass.OneVsRestClassifier.html#sklearn.multiclass.OneVsRestClassifier.predict_proba

Here is the code:

https://github.com/scikit-learn/scikit-learn/blob/95119c13a/sklearn/multiclass.py#L386

I guess the probabilities were normalized in order to achieve a sum of 1.

Thanks a lot for your quick reply and the useful links.

Yes, often softmax is used to achieve this effect:

https://machinelearningmastery.com/softmax-activation-function-with-python/
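The normalization guess above can be checked directly. In the scikit-learn source linked above, OneVsRestClassifier.predict_proba() appears to stack each binary model's positive-class probability and divide each row by its sum (a plain normalization rather than a softmax) for single-label multi-class problems. This sketch tests that assumption on the tutorial's dataset.

```python
# check how OneVsRestClassifier.predict_proba normalizes per-class probabilities
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
ovr = OneVsRestClassifier(LogisticRegression()).fit(X, y)

# raw positive-class probability from each independent binary model
raw = np.column_stack([e.predict_proba(X)[:, 1] for e in ovr.estimators_])
print(raw.sum(axis=1)[:3])  # raw rows generally do not sum to 1

# assumed normalization: divide each row by its sum
manual = raw / raw.sum(axis=1, keepdims=True)
print(np.allclose(ovr.predict_proba(X), manual))
```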

Does RandomForestClassifier work in the same way? I mean, does Sklearn create multiple RandomForestClassifiers for multi-class classification too?

No, I believe random forest directly supports multi-class classification.

Hi,

Since we may encounter the challenge of imbalanced classification in One vs All, how should we handle it?

Thank you.

Regards,

Mary

Perhaps try rebalancing the training dataset.
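Note that each one-vs-rest subproblem is imbalanced even when the original classes are balanced, because the "rest" side pools every other class. As one alternative to resampling (a cost-sensitive option, not the only approach), this sketch passes class_weight='balanced' to the binary LogisticRegression so that the 0/1 labels are reweighted inside each subproblem.

```python
# class weighting inside each one-vs-rest subproblem
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)

# the positive class is only about 1/3 of the rows in each binary problem
for c in np.unique(y):
    print(f'class {c}: positive fraction = {np.mean(y == c):.2f}')

# 'balanced' weights are computed separately for each binary 0/1 problem
ovr = OneVsRestClassifier(LogisticRegression(class_weight='balanced'))
ovr.fit(X, y)
yhat = ovr.predict(X)
```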

Hi Jason Brownlee great work,

Does a stacking ensemble support multi-label classification? Or let me rephrase my question: can I use multi-label classifiers (such as MLKNN, BRKNN, or transformation classifiers: LabelPowerset, BinaryRelevance) at level 0 and 1 for

I’m not sure off the cuff, perhaps try it and see.

Let’s say we have 3 classes: red, green, and blue.

So, in one-vs-one (OVO) we create 3 pairwise classifiers:

– red vs blue

– red vs green

– blue vs green

How if the result for each classifier is like this?

– red vs blue , resulting red

– red vs green , resulting green

– blue vs green , resulting blue

Which class should be the winner?

Or maybe I misunderstood how this OVO concept works?

No, you didn’t misunderstand. That’s correct and we have no concrete answer in this case. I would rather say, “undetermined”.
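One common way to resolve such a cycle is to fall back on the models' confidence scores. The scikit-learn documentation for OneVsOneClassifier.decision_function describes adding a normalized sum of pairwise confidences to the votes to disambiguate ties; the sketch below shows the same idea in simplified form (not the library's exact formula), using hypothetical confidence values for the three contests in the question.

```python
# break a three-way one-vs-one voting tie with summed pairwise confidences
import numpy as np

classes = ['red', 'green', 'blue']
# (class a, class b, winner, hypothetical probability given to the winner)
contests = [
    ('red', 'blue', 'red', 0.55),
    ('red', 'green', 'green', 0.80),
    ('blue', 'green', 'blue', 0.60),
]

votes = np.zeros(len(classes))
confidence = np.zeros(len(classes))
for a, b, winner, p_win in contests:
    loser = b if winner == a else a
    votes[classes.index(winner)] += 1
    confidence[classes.index(winner)] += p_win
    confidence[classes.index(loser)] += 1.0 - p_win

print(votes)  # [1. 1. 1.], a three-way tie on votes
# among the tied classes, pick the one with the largest summed confidence
tied = np.flatnonzero(votes == votes.max())
winner = classes[tied[np.argmax(confidence[tied])]]
print(winner)  # green
```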

Hello, thanks for your post. I have a question, perhaps you can help. Do you happen to know what is the default multi-class strategy in sklearn for DecisionTreeClassifier?

If no indication of OVO or OVR is given.

Thanks greatly!

Neither, because a decision tree is not a model based on binary classification; it supports multi-class classification natively.

Hello, would anyone know how to do this project? It would mean a lot to me!

Multi-class classification (one-versus-one)

The one-versus-one classification is used in situations where we have more than two classes to which data can belong.

The task of each student is to:

1. Study how a given algorithm works.

2. Create an implementation that solves the problem of logistic regression using multi-class classification (it is allowed to use a library with a ready-made implementation of the classification).

3. Provide an input file for training and testing models with at least 100 inputs divided into more than two classes (minimum 3).

4. Analyze the quality of the model using the techniques learned in the exercises, analyze the sensitivity and precision of the entire system.

The one-versus-one algorithm tests the hypothesis that the data belongs to class Ci, i.e. that it does not belong to class Cj. The data class is the class for which the largest number of positive classifications is obtained when tested against all other classes. Hypothesis testing for two classes should be done by logistic regression.

Hello Korisnik…From your post, I am not certain if you asking about a school project or assignment.

Generally, I recommend that you complete homework and assignments yourself.

You have chosen a course and (perhaps) have even paid money to take the course. You have chosen to invest in yourself via self-education.

In order to get the most out of this investment, you must do the work.

Also, you (may) have paid the teachers, lecturers and support staff to teach you. Use that resource and ask them for help and clarification about your homework or assignment. They work for you in some sense, and no one knows more about your homework or assignment and how it will be assessed than them.

Nevertheless, if you are still struggling, perhaps you can boil your difficulty down to one sentence and contact me.

What are the features in this example?

Hi Student…the features are the input variables used to make predictions. In the examples above, they are the 10 columns of X created by make_classification() (n_features=10).

Jason, can you explain how LogisticRegression(multi_class='multinomial') works? Clearly it’s not OvR, as that is a separate option. I would assume it is each of K-1 classes versus a reference class, but if that is so, how does it generate betas for all classes?

Hi James…The following resource may be of interest to you:

https://machinelearningmastery.com/multinomial-logistic-regression-with-python/
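A quick way to see what scikit-learn fits, assuming a recent version in which LogisticRegression with the default lbfgs solver uses the multinomial formulation for 3 or more classes: coef_ holds one coefficient vector per class (rather than K-1 vectors against a reference class, with regularization keeping the solution well defined), and predict_proba() matches a softmax over the per-class linear scores.

```python
# multinomial logistic regression: one coefficient vector per class + softmax
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
model = LogisticRegression().fit(X, y)
print(model.coef_.shape)  # (3, 10): one row of betas per class

# softmax over the linear scores, computed by hand (shifted for stability)
scores = model.decision_function(X)
softmax = np.exp(scores - scores.max(axis=1, keepdims=True))
softmax /= softmax.sum(axis=1, keepdims=True)
print(np.allclose(model.predict_proba(X), softmax))
```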

Your blogs are better than paid blogs- they hit the nail on the head every time – thanks for being a life saver !

Thank you RK for the feedback and support! We greatly appreciate it!

Thank you for this piece, Jason.

I’m using sklearn’s implementation of OneVsOneClassifier with SVM. In sklearn’s documentation of OneVsOneClassifier, this class does provide methods such as fit(), predict() etc.

However, the classifier does not provide the predict_proba(), which is required to calculate roc_auc_score for multiclass classification.

How do I get the prediction probabilities of the classes (which is what predict_proba returns) to calculate the roc_auc_score?

Hi abu_nuwayrah…The following resource will hopefully be of value:

https://scikit-learn.org/stable/modules/generated/sklearn.multiclass.OneVsOneClassifier.html
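One workaround, sketched under the assumption that SVC's built-in probability estimates are acceptable for your purpose: because SVC already uses one-vs-one internally, you can skip the OneVsOneClassifier wrapper, set probability=True, and pass predict_proba() output to roc_auc_score() with multi_class='ovo'. Note that probability=True runs an extra internal cross-validation, so training is slower, and the probabilities may occasionally disagree with predict().

```python
# one-vs-one ROC AUC using SVC's own probability estimates
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=1)

model = SVC(probability=True, random_state=1).fit(X_train, y_train)
proba = model.predict_proba(X_test)  # rows sum to 1, as roc_auc_score requires
auc = roc_auc_score(y_test, proba, multi_class='ovo')
print(f'one-vs-one macro ROC AUC: {auc:.3f}')
```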

Hello, thanks for helpful article.

I need a numeric example of how to use the perceptron for multi-class classification.

Hi Israa…You are very welcome! The following resource may be of interest:

https://machinelearningmastery.com/perceptron-algorithm-for-classification-in-python/
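A short numeric sketch, assuming scikit-learn's Perceptron and the tutorial's 3-class dataset. Perceptron handles multiple classes with a one-vs-all scheme internally, which shows up as one weight vector per class in coef_; the explicit OneVsRestClassifier wrapper produces the same structure as three separate binary models.

```python
# perceptron for 3-class classification: built-in one-vs-all and explicit OvR
from sklearn.datasets import make_classification
from sklearn.linear_model import Perceptron
from sklearn.multiclass import OneVsRestClassifier

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5,
                           n_redundant=5, n_classes=3, random_state=1)

model = Perceptron(random_state=1).fit(X, y)
print(model.coef_.shape)  # (3, 10): one weight vector per class

ovr = OneVsRestClassifier(Perceptron(random_state=1)).fit(X, y)
print(len(ovr.estimators_))  # 3
print(ovr.predict(X[:5]))    # class labels for the first five rows
```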

Hi Jason, I used this method for getting the ROC AUC for my ensemble models: bagging, voting, stacking, and boosting. It worked for all except the voting model. This is the code below:

```python
from yellowbrick.classifier import ROCAUC

visualizer = ROCAUC(voting_model)
visualizer.fit(X_train, y_train)
visualizer.score(X_test, y_test)
visualizer.show()
```

Please, I need your assistance with this.

Hello Sunday…What is the error you encountered?