Gradient boosting is a powerful ensemble machine learning algorithm.

It’s popular for structured predictive modeling problems, such as classification and regression on tabular data, and is often the main algorithm or one of the main algorithms used in winning solutions to machine learning competitions, like those on Kaggle.

There are many implementations of gradient boosting available, including standard implementations in scikit-learn and efficient third-party libraries. Each uses a different interface and even different names for the algorithm.

In this tutorial, you will discover how to use gradient boosting models for classification and regression in Python.

Standardized code examples are provided for the four major implementations of gradient boosting in Python, ready for you to copy-paste and use in your own predictive modeling project.

After completing this tutorial, you will know:

- Gradient boosting is an ensemble algorithm that fits boosted decision trees by minimizing an error gradient.

- How to evaluate and use gradient boosting with scikit-learn, including gradient boosting machines and the histogram-based algorithm.

- How to evaluate and use third-party gradient boosting algorithms, including XGBoost, LightGBM, and CatBoost.


Let’s get started.

Gradient Boosting with Scikit-Learn, XGBoost, LightGBM, and CatBoost

Photo by John, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Gradient Boosting Overview

- Gradient Boosting With Scikit-Learn
    - Library Installation
    - Test Problems
    - Gradient Boosting
    - Histogram-Based Gradient Boosting

- Gradient Boosting With XGBoost
    - Library Installation
    - XGBoost for Classification
    - XGBoost for Regression

- Gradient Boosting With LightGBM
    - Library Installation
    - LightGBM for Classification
    - LightGBM for Regression

- Gradient Boosting With CatBoost
    - Library Installation
    - CatBoost for Classification
    - CatBoost for Regression

Gradient Boosting Overview

Gradient boosting refers to a class of ensemble machine learning algorithms that can be used for classification or regression predictive modeling problems.

Gradient boosting is also known as gradient tree boosting, stochastic gradient boosting (an extension), and gradient boosting machines, or GBM for short.

Ensembles are constructed from decision tree models. Trees are added one at a time to the ensemble and fit to correct the prediction errors made by prior models. This is a type of ensemble machine learning model referred to as boosting.

Models are fit using any arbitrary differentiable loss function and a gradient descent optimization algorithm. This gives the technique its name, “gradient boosting,” as the loss is minimized by following its gradient as the model is fit, much like a neural network.
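To make the idea of adding trees that correct prior errors more concrete, here is a minimal sketch of boosting for regression with a squared-error loss. It is not how any of the libraries in this tutorial are implemented; the learning rate, tree depth, number of trees, and the small synthetic dataset are all illustrative assumptions.

```python
# minimal sketch of gradient boosting for squared-error regression (illustrative only)
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# small synthetic dataset (sizes chosen only to keep the sketch fast)
X, y = make_regression(n_samples=200, n_features=5, n_informative=3, noise=1.0, random_state=1)

learning_rate = 0.1  # assumed value for illustration
n_trees = 50         # assumed value for illustration

# start from a constant prediction; the mean minimizes squared error
prediction = np.full(y.shape, y.mean())
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(n_trees):
    # for squared error, the negative gradient of the loss is the residual
    residuals = y - prediction
    tree = DecisionTreeRegressor(max_depth=3, random_state=1)
    tree.fit(X, residuals)
    # take a small step in the direction suggested by the new tree
    prediction += learning_rate * tree.predict(X)
    trees.append(tree)

final_mse = np.mean((y - prediction) ** 2)
print('Training MSE before: %.3f, after: %.3f' % (initial_mse, final_mse))
```

Each tree is fit to what the current ensemble still gets wrong, and the learning rate controls how much of each correction is applied.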

Gradient boosting is an effective machine learning algorithm and is often the main, or one of the main, algorithms used to win machine learning competitions (like Kaggle) on tabular and similar structured datasets.

Note: We will not be going into the theory behind how the gradient boosting algorithm works in this tutorial.

For more on the gradient boosting algorithm, see the tutorial:

- A Gentle Introduction to the Gradient Boosting Algorithm for Machine Learning

The algorithm provides hyperparameters that should, and perhaps must, be tuned for a specific dataset. Although there are many hyperparameters to tune, perhaps the most important are as follows:

- The number of trees or estimators in the model.

- The learning rate of the model.

- The row and column sampling rate for stochastic models.

- The maximum tree depth.

- The minimum tree weight.

- The regularization terms alpha and lambda.
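As a rough guide, the sketch below shows how most of these hyperparameters might be set on the scikit-learn GradientBoostingClassifier. The values are arbitrary placeholders, not recommendations, and the mapping of “minimum tree weight” to min_samples_leaf is a loose analogy rather than an exact equivalent.

```python
# sketch: setting common gradient boosting hyperparameters in scikit-learn
from sklearn.ensemble import GradientBoostingClassifier

model = GradientBoostingClassifier(
    n_estimators=200,    # number of trees in the model
    learning_rate=0.05,  # learning rate (shrinkage applied to each tree)
    subsample=0.8,       # row sampling rate; values below 1.0 give stochastic gradient boosting
    max_features=0.8,    # fraction of columns considered at each split
    max_depth=3,         # maximum tree depth
    min_samples_leaf=5,  # loose analogue of a minimum tree weight (assumption)
)
# note: this class has no direct L1/L2 regularization terms;
# XGBoost exposes them as reg_alpha and reg_lambda on its scikit-learn wrappers
print(model.get_params()['n_estimators'], model.get_params()['learning_rate'])
```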

Note: We will not be exploring how to configure or tune the configuration of gradient boosting algorithms in this tutorial.

For more on tuning the hyperparameters of gradient boosting algorithms, see the tutorial:

- How to Configure the Gradient Boosting Algorithm

There are many implementations of the gradient boosting algorithm available in Python. Perhaps the most used implementation is the version provided with the scikit-learn library.

Additional third-party libraries are available that provide computationally efficient alternate implementations of the algorithm that often achieve better results in practice. Examples include the XGBoost library, the LightGBM library, and the CatBoost library.

Do you have a different favorite gradient boosting implementation?

Let me know in the comments below.

When using gradient boosting on your predictive modeling project, you may want to test each implementation of the algorithm.

This tutorial provides examples of each implementation of the gradient boosting algorithm on classification and regression predictive modeling problems that you can copy-paste into your project.

Let’s take a look at each in turn.

Note: We are not comparing the performance of the algorithms in this tutorial. Instead, we are providing code examples to demonstrate how to use each different implementation. As such, we are using synthetic test datasets to demonstrate evaluating and making a prediction with each implementation.

This tutorial assumes you have Python and SciPy installed. If you need help, see the tutorial:

- How to Setup Your Python Environment for Machine Learning with Anaconda


Gradient Boosting with Scikit-Learn

In this section, we will review how to use the gradient boosting algorithm implementation in the scikit-learn library.

Library Installation

First, let’s install the library.

Don’t skip this step as you will need to ensure you have the latest version installed.

You can install the scikit-learn library using the pip Python installer, as follows:

```
sudo pip install scikit-learn
```

For additional installation instructions specific to your platform, see the installation guide in the scikit-learn documentation.

Next, let’s confirm that the library is installed and you are using a modern version.

Run the following script to print the library version number.

```python
# check scikit-learn version
import sklearn
print(sklearn.__version__)
```

Running the example, you should see the following version number or higher.

```
0.22.1
```
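Comparing version strings by eye is easy to get wrong once they have several parts. The sketch below shows one simple way to check “this version or higher” in code. The helper names version_tuple and meets_minimum are hypothetical, and the version strings passed in are only examples; in practice you might pass sklearn.__version__ as the installed version.

```python
# sketch: compare a version string against a required minimum
from itertools import takewhile

def version_tuple(version, parts=3):
    # convert a string like '0.22.1' into a tuple of ints, e.g. (0, 22, 1)
    numbers = []
    for piece in version.split('.')[:parts]:
        # keep only the leading digits so suffixes like 'rc1' are ignored
        digits = ''.join(takewhile(str.isdigit, piece))
        numbers.append(int(digits) if digits else 0)
    return tuple(numbers)

def meets_minimum(installed, minimum):
    # tuples compare element by element, so (1, 4, 2) >= (0, 22, 1)
    return version_tuple(installed) >= version_tuple(minimum)

print(meets_minimum('1.4.2', '0.22.1'))
print(meets_minimum('0.21.3', '0.22.1'))
```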

Test Problems

We will demonstrate the gradient boosting algorithm for classification and regression.

As such, we will use synthetic test problems from the scikit-learn library.

Classification Dataset

We will use the make_classification() function to create a test binary classification dataset.

The dataset will have 1,000 examples with 10 input features, five of which will be informative and five of which will be redundant. We will fix the random number seed to ensure we get the same examples each time the code is run.

An example of creating and summarizing the dataset is listed below.

```python
# test classification dataset
from sklearn.datasets import make_classification
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# summarize the dataset
print(X.shape, y.shape)
```

Running the example creates the dataset and confirms the expected number of samples and features.

```
(1000, 10) (1000,)
```

Regression Dataset

We will use the make_regression() function to create a test regression dataset.

Like the classification dataset, the regression dataset will have 1,000 examples with 10 input features. Five of the features will be informative; the remaining five are uninformative and play no part in generating the target.

```python
# test regression dataset
from sklearn.datasets import make_regression
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# summarize the dataset
print(X.shape, y.shape)
```

Running the example creates the dataset and confirms the expected number of samples and features.

```
(1000, 10) (1000,)
```

Next, let’s look at how we can develop gradient boosting models in scikit-learn.

Gradient Boosting

The scikit-learn library provides the GBM algorithm for regression and classification via the GradientBoostingClassifier and GradientBoostingRegressor classes.

Let’s take a closer look at each in turn.

Gradient Boosting Machine for Classification

The example below first evaluates a GradientBoostingClassifier on the test problem using repeated k-fold cross-validation and reports the mean accuracy. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# gradient boosting for classification in scikit-learn
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# evaluate the model
model = GradientBoostingClassifier()
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = GradientBoostingClassifier()
model.fit(X, y)
# make a single prediction
row = [[2.56999479, -0.13019997, 3.16075093, -4.35936352, -1.61271951, -1.39352057, -2.48924933, -1.93094078, 3.26130366, 2.05692145]]
yhat = model.predict(row)
print('Prediction: %d' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
Accuracy: 0.915 (0.025)
Prediction: 1
```
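The reported accuracy is the mean over many separate train/test evaluations, with the standard deviation in brackets. As a small sketch of where that number of evaluations comes from, the code below inspects the same RepeatedStratifiedKFold configuration used above without fitting any model.

```python
# sketch: inspect how many train/test evaluations repeated k-fold produces
from sklearn.datasets import make_classification
from sklearn.model_selection import RepeatedStratifiedKFold

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
# n_splits folds repeated n_repeats times
n_evaluations = cv.get_n_splits(X, y)
print('Number of train/test evaluations: %d' % n_evaluations)
# look at the row indices of the first train/test split
train_ix, test_ix = next(cv.split(X, y))
print('Train rows: %d, Test rows: %d' % (len(train_ix), len(test_ix)))
```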

Gradient Boosting Machine for Regression

The example below first evaluates a GradientBoostingRegressor on the test problem using repeated k-fold cross-validation and reports the mean absolute error. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# gradient boosting for regression in scikit-learn
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# evaluate the model
model = GradientBoostingRegressor()
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = GradientBoostingRegressor()
model.fit(X, y)
# make a single prediction
row = [[2.02220122, 0.31563495, 0.82797464, -0.30620401, 0.16003707, -1.44411381, 0.87616892, -0.50446586, 0.23009474, 0.76201118]]
yhat = model.predict(row)
print('Prediction: %.3f' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
MAE: -11.854 (1.121)
Prediction: -80.661
```
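The MAE is reported as a negative number because the scikit-learn 'neg_mean_absolute_error' scorer negates the error so that larger scores are always better. If you prefer to report a positive error, one option is to take the absolute value of the scores, as in the sketch below. The smaller dataset, the plain KFold, and the reduced number of trees are assumptions made only to keep the sketch quick to run.

```python
# sketch: report cross-validated MAE as a positive value
from numpy import absolute
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import KFold
from sklearn.model_selection import cross_val_score

# small problem and model chosen only for speed
X, y = make_regression(n_samples=200, n_features=10, n_informative=5, random_state=1)
model = GradientBoostingRegressor(n_estimators=20, random_state=1)
cv = KFold(n_splits=5, shuffle=True, random_state=1)
# scores are negated errors, so they are zero or negative
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv)
# flip the sign to recover the usual positive MAE
mae_scores = absolute(n_scores)
print('MAE: %.3f (%.3f)' % (mean(mae_scores), std(mae_scores)))
```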

Histogram-Based Gradient Boosting

The scikit-learn library provides an alternate implementation of the gradient boosting algorithm, referred to as histogram-based gradient boosting.

This is an alternate approach to implement gradient tree boosting inspired by the LightGBM library (described more later). This implementation is provided via the HistGradientBoostingClassifier and HistGradientBoostingRegressor classes.

The primary benefit of the histogram-based approach to gradient boosting is speed. These implementations are designed to be much faster to fit on training data.
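To give a feel for where the speed comes from: histogram-based methods first discretize each continuous input feature into a small number of integer bins, so that candidate split points only need to be considered at bin boundaries. The sketch below illustrates this binning idea with NumPy on a single synthetic feature. The bin count of 16 and the quantile-based bin edges are illustrative assumptions; this is not the internal code of scikit-learn or LightGBM.

```python
# sketch: discretize a continuous feature into integer bins (illustrative only)
import numpy as np

rng = np.random.default_rng(1)
feature = rng.normal(size=1000)

n_bins = 16  # assumed small value for illustration
# interior bin edges at evenly spaced quantiles of the feature
edges = np.quantile(feature, np.linspace(0, 1, n_bins + 1)[1:-1])
# map each value to the index of the bin it falls into
binned = np.searchsorted(edges, feature)

print('Unique raw values: %d' % len(np.unique(feature)))
print('Unique binned values: %d' % len(np.unique(binned)))
```

After binning, a tree only has to evaluate a handful of candidate thresholds per feature rather than one per unique value.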

At the time of writing, this is an experimental implementation and requires that you add the following line to your code to enable access to these classes. From scikit-learn 1.0 onward, these classes are stable and the line is no longer required.

```python
from sklearn.experimental import enable_hist_gradient_boosting
```

Without this line, you will see an error like:

```
ImportError: cannot import name 'HistGradientBoostingClassifier'
```

or

```
ImportError: cannot import name 'HistGradientBoostingRegressor'
```

Let’s take a closer look at how to use this implementation.

Histogram-Based Gradient Boosting Machine for Classification

The example below first evaluates a HistGradientBoostingClassifier on the test problem using repeated k-fold cross-validation and reports the mean accuracy. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# histogram-based gradient boosting for classification in scikit-learn
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from sklearn.experimental import enable_hist_gradient_boosting
from sklearn.ensemble import HistGradientBoostingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# evaluate the model
model = HistGradientBoostingClassifier()
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = HistGradientBoostingClassifier()
model.fit(X, y)
# make a single prediction
row = [[2.56999479, -0.13019997, 3.16075093, -4.35936352, -1.61271951, -1.39352057, -2.48924933, -1.93094078, 3.26130366, 2.05692145]]
yhat = model.predict(row)
print('Prediction: %d' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
Accuracy: 0.935 (0.024)
Prediction: 1
```

Histogram-Based Gradient Boosting Machine for Regression

The example below first evaluates a HistGradientBoostingRegressor on the test problem using repeated k-fold cross-validation and reports the mean absolute error. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# histogram-based gradient boosting for regression in scikit-learn
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from sklearn.experimental import enable_hist_gradient_boosting
from sklearn.ensemble import HistGradientBoostingRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# evaluate the model
model = HistGradientBoostingRegressor()
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = HistGradientBoostingRegressor()
model.fit(X, y)
# make a single prediction
row = [[2.02220122, 0.31563495, 0.82797464, -0.30620401, 0.16003707, -1.44411381, 0.87616892, -0.50446586, 0.23009474, 0.76201118]]
yhat = model.predict(row)
print('Prediction: %.3f' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
MAE: -12.723 (1.540)
Prediction: -77.837
```

Gradient Boosting With XGBoost

XGBoost, which is short for “Extreme Gradient Boosting,” is a library that provides an efficient implementation of the gradient boosting algorithm.

The main benefits of the XGBoost implementation are computational efficiency and, often, better model performance.

For more on the benefits and capability of XGBoost, see the tutorial:

- A Gentle Introduction to XGBoost for Applied Machine Learning

Library Installation

You can install the XGBoost library using the pip Python installer, as follows:

```
sudo pip install xgboost
```

For additional installation instructions specific to your platform, see the installation guide in the XGBoost documentation.

Next, let’s confirm that the library is installed and you are using a modern version.

Run the following script to print the library version number.

```python
# check xgboost version
import xgboost
print(xgboost.__version__)
```

Running the example, you should see the following version number or higher.

```
1.0.1
```

The XGBoost library provides wrapper classes so that the efficient algorithm implementation can be used with the scikit-learn library, specifically via the XGBClassifier and XGBRegressor classes.
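Because the wrapper classes follow the scikit-learn estimator interface, the same evaluation code can be reused for any of the implementations in this tutorial. The sketch below shows the idea with a small helper; the name evaluate_classifier is hypothetical, and a scikit-learn model stands in so that the sketch runs without any third-party library installed. The smaller dataset and fewer folds are assumptions made for speed.

```python
# sketch: one evaluation helper reused for any scikit-learn compatible classifier
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.model_selection import cross_val_score

def evaluate_classifier(model, X, y):
    # hypothetical helper: repeated stratified k-fold accuracy for any estimator
    cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=1)
    scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv)
    return mean(scores), std(scores)

X, y = make_classification(n_samples=300, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# a wrapper such as XGBClassifier() could be passed here in the same way
acc_mean, acc_std = evaluate_classifier(GradientBoostingClassifier(n_estimators=20, random_state=1), X, y)
print('Accuracy: %.3f (%.3f)' % (acc_mean, acc_std))
```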

Let’s take a closer look at each in turn.

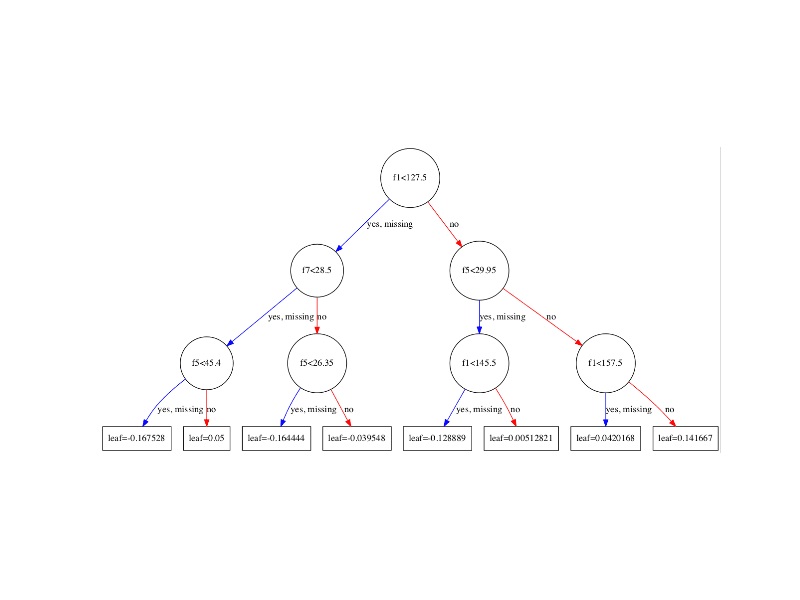

XGBoost for Classification

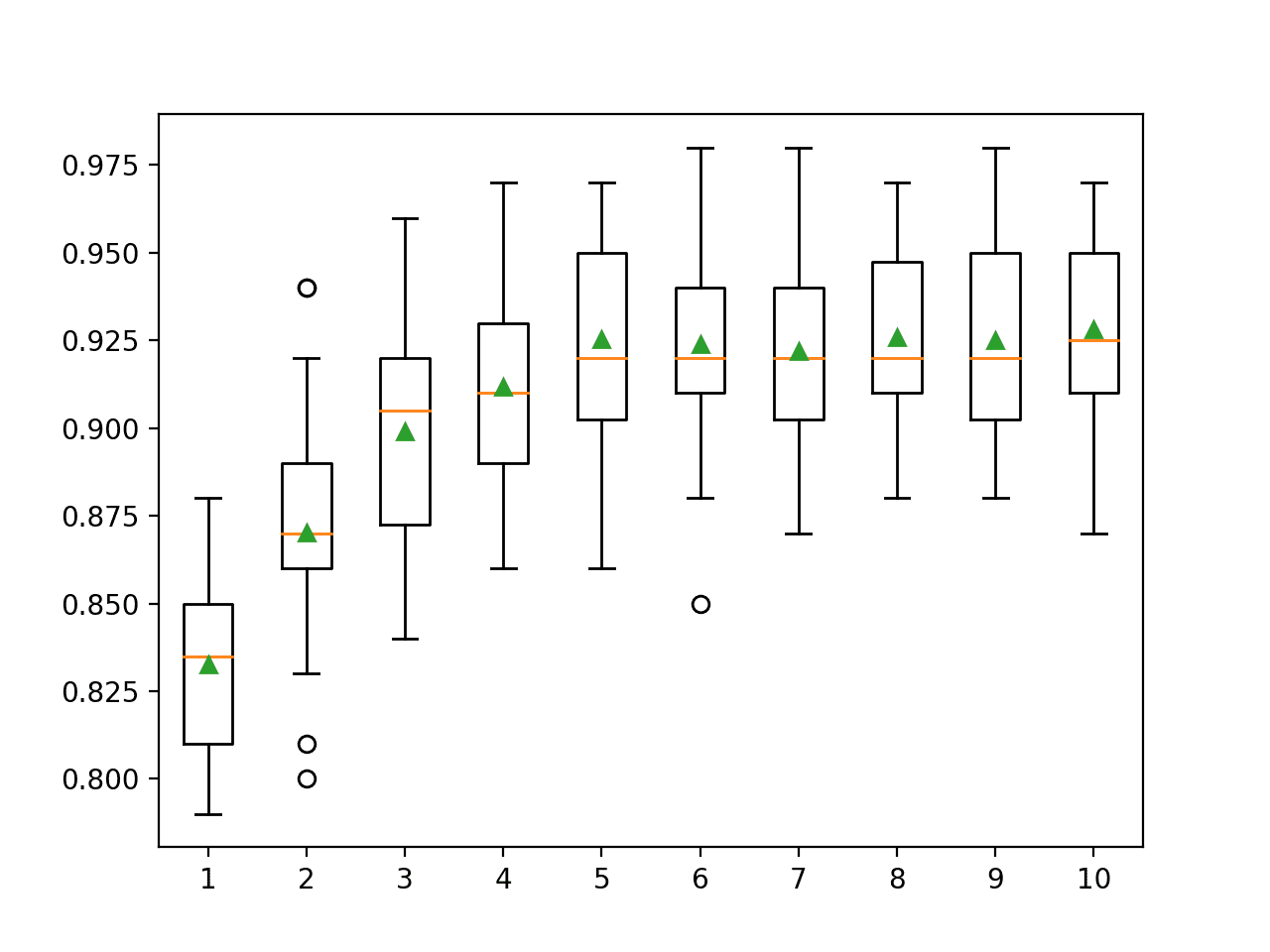

The example below first evaluates an XGBClassifier on the test problem using repeated k-fold cross-validation and reports the mean accuracy. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# xgboost for classification
from numpy import asarray
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from xgboost import XGBClassifier
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# evaluate the model
model = XGBClassifier()
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = XGBClassifier()
model.fit(X, y)
# make a single prediction
row = [2.56999479, -0.13019997, 3.16075093, -4.35936352, -1.61271951, -1.39352057, -2.48924933, -1.93094078, 3.26130366, 2.05692145]
row = asarray(row).reshape((1, len(row)))
yhat = model.predict(row)
print('Prediction: %d' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
Accuracy: 0.936 (0.019)
Prediction: 1
```
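Note that the XGBoost example converts the single row into a two-dimensional NumPy array before calling predict(), because the model expects input with one row per example and one column per feature. The short sketch below shows what that reshape does, using a shortened three-value row purely for illustration.

```python
# sketch: turn a flat list of feature values into a single-row 2D array
from numpy import asarray

row = [2.56999479, -0.13019997, 3.16075093]  # shortened row for illustration
# one row, len(row) columns
row_2d = asarray(row).reshape((1, len(row)))
print(row_2d.shape)
# wrapping the list in another list gives the same shape
alt_2d = asarray([row])
print(alt_2d.shape)
```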

XGBoost for Regression

The example below first evaluates an XGBRegressor on the test problem using repeated k-fold cross-validation and reports the mean absolute error. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# xgboost for regression
from numpy import asarray
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from xgboost import XGBRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# evaluate the model
model = XGBRegressor(objective='reg:squarederror')
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = XGBRegressor(objective='reg:squarederror')
model.fit(X, y)
# make a single prediction
row = [2.02220122, 0.31563495, 0.82797464, -0.30620401, 0.16003707, -1.44411381, 0.87616892, -0.50446586, 0.23009474, 0.76201118]
row = asarray(row).reshape((1, len(row)))
yhat = model.predict(row)
print('Prediction: %.3f' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
MAE: -15.048 (1.316)
Prediction: -93.434
```

Gradient Boosting With LightGBM

LightGBM, short for Light Gradient Boosted Machine, is a library developed at Microsoft that provides an efficient implementation of the gradient boosting algorithm.

The primary benefit of LightGBM is a set of changes to the training algorithm that make the process dramatically faster and, in many cases, result in a more effective model.

For more technical details on the LightGBM algorithm, see the paper:

- LightGBM: A Highly Efficient Gradient Boosting Decision Tree, 2017.

Library Installation

You can install the LightGBM library using the pip Python installer, as follows:

```
sudo pip install lightgbm
```

For additional installation instructions specific to your platform, see the installation guide in the LightGBM documentation.

Next, let’s confirm that the library is installed and you are using a modern version.

Run the following script to print the library version number.

```python
# check lightgbm version
import lightgbm
print(lightgbm.__version__)
```

Running the example, you should see the following version number or higher.

```
2.3.1
```

The LightGBM library provides wrapper classes so that the efficient algorithm implementation can be used with the scikit-learn library, specifically via the LGBMClassifier and LGBMRegressor classes.

Let’s take a closer look at each in turn.

LightGBM for Classification

The example below first evaluates an LGBMClassifier on the test problem using repeated k-fold cross-validation and reports the mean accuracy. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# lightgbm for classification
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from lightgbm import LGBMClassifier
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# evaluate the model
model = LGBMClassifier()
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = LGBMClassifier()
model.fit(X, y)
# make a single prediction
row = [[2.56999479, -0.13019997, 3.16075093, -4.35936352, -1.61271951, -1.39352057, -2.48924933, -1.93094078, 3.26130366, 2.05692145]]
yhat = model.predict(row)
print('Prediction: %d' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
Accuracy: 0.934 (0.021)
Prediction: 1
```

LightGBM for Regression

The example below first evaluates an LGBMRegressor on the test problem using repeated k-fold cross-validation and reports the mean absolute error. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# lightgbm for regression
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from lightgbm import LGBMRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# evaluate the model
model = LGBMRegressor()
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = LGBMRegressor()
model.fit(X, y)
# make a single prediction
row = [[2.02220122, 0.31563495, 0.82797464, -0.30620401, 0.16003707, -1.44411381, 0.87616892, -0.50446586, 0.23009474, 0.76201118]]
yhat = model.predict(row)
print('Prediction: %.3f' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
MAE: -12.739 (1.408)
Prediction: -82.040
```

Gradient Boosting with CatBoost

CatBoost is a third-party library developed at Yandex that provides an efficient implementation of the gradient boosting algorithm.

The primary benefit of CatBoost (in addition to computational speed improvements) is support for categorical input variables. This gives the library its name, CatBoost, for “Category Gradient Boosting.”

For more technical details on the CatBoost algorithm, see the paper:

- CatBoost: gradient boosting with categorical features support, 2017.

Library Installation

You can install the CatBoost library using the pip Python installer, as follows:

```
sudo pip install catboost
```

For additional installation instructions specific to your platform, see the installation guide in the CatBoost documentation.

Next, let’s confirm that the library is installed and you are using a modern version.

Run the following script to print the library version number.

```python
# check catboost version
import catboost
print(catboost.__version__)
```

Running the example, you should see the following version number or higher.

```
0.21
```

The CatBoost library provides wrapper classes so that the efficient algorithm implementation can be used with the scikit-learn library, specifically via the CatBoostClassifier and CatBoostRegressor classes.

Let’s take a closer look at each in turn.

CatBoost for Classification

The example below first evaluates a CatBoostClassifier on the test problem using repeated k-fold cross-validation and reports the mean accuracy. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# catboost for classification
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from catboost import CatBoostClassifier
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
# define dataset
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=5, random_state=1)
# evaluate the model
model = CatBoostClassifier(verbose=0, n_estimators=100)
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = CatBoostClassifier(verbose=0, n_estimators=100)
model.fit(X, y)
# make a single prediction
row = [[2.56999479, -0.13019997, 3.16075093, -4.35936352, -1.61271951, -1.39352057, -2.48924933, -1.93094078, 3.26130366, 2.05692145]]
yhat = model.predict(row)
print('Prediction: %d' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
Accuracy: 0.931 (0.026)
Prediction: 1
```

CatBoost for Regression

The example below first evaluates a CatBoostRegressor on the test problem using repeated k-fold cross-validation and reports the mean absolute error. Then a single model is fit on all available data and a single prediction is made.

The complete example is listed below.

```python
# catboost for regression
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from catboost import CatBoostRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
# define dataset
X, y = make_regression(n_samples=1000, n_features=10, n_informative=5, random_state=1)
# evaluate the model
model = CatBoostRegressor(verbose=0, n_estimators=100)
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit the model on the whole dataset
model = CatBoostRegressor(verbose=0, n_estimators=100)
model.fit(X, y)
# make a single prediction
row = [[2.02220122, 0.31563495, 0.82797464, -0.30620401, 0.16003707, -1.44411381, 0.87616892, -0.50446586, 0.23009474, 0.76201118]]
yhat = model.predict(row)
print('Prediction: %.3f' % yhat[0])
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example first reports the evaluation of the model using repeated k-fold cross-validation, then the result of making a single prediction with a model fit on the entire dataset.

```
MAE: -9.281 (0.951)
Prediction: -74.212
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

- How to Setup Your Python Environment for Machine Learning with Anaconda

- A Gentle Introduction to the Gradient Boosting Algorithm for Machine Learning

- How to Configure the Gradient Boosting Algorithm

- A Gentle Introduction to XGBoost for Applied Machine Learning

Papers

- Stochastic Gradient Boosting, 2002.

- XGBoost: A Scalable Tree Boosting System, 2016.

- LightGBM: A Highly Efficient Gradient Boosting Decision Tree, 2017.

- CatBoost: gradient boosting with categorical features support, 2017.

APIs

- Scikit-Learn Homepage.

- sklearn.ensemble API.

- XGBoost Homepage.

- XGBoost Python API.

- LightGBM Project.

- LightGBM Python API.

- CatBoost Homepage.

- CatBoost API.


Summary

In this tutorial, you discovered how to use gradient boosting models for classification and regression in Python.

Specifically, you learned:

- Gradient boosting is an ensemble algorithm that fits boosted decision trees by minimizing an error gradient.

- How to evaluate and use gradient boosting with scikit-learn, including gradient boosting machines and the histogram-based algorithm.

- How to evaluate and use third-party gradient boosting algorithms including XGBoost, LightGBM and CatBoost.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thank you for your good writing!

I’m happy it helps.

Excellent post, thanks a lot!

Thanks!

Well organized, keep up the good work!

Thanks!

Hi Jason, all of my work is time series regression with utility metering data, and I always just look at RMSE because it’s in units that make sense to me. Basically, when using

from sklearn.metrics import mean_squared_error

I just take the math.sqrt(mse). I notice that you use mean absolute error in the code above… Is there anything wrong with what I am doing to achieve the best model results by only viewing RMSE?

No problem! I used to use RMSE all the time myself.

Recently I prefer MAE – can’t say why. Perhaps taste. Perhaps because no sqrt step is required.
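For readers who prefer RMSE, here is a minimal sketch of two ways to get it from cross-validation. It assumes scikit-learn 0.22 or later, where the 'neg_root_mean_squared_error' scorer is available. It uses scikit-learn's GradientBoostingRegressor on a small synthetic dataset as a stand-in for your own model and data; the dataset sizes are illustrative only.

```python
# compare RMSE from the built-in scorer with the square root of MSE
from numpy import mean, sqrt, std
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import KFold, cross_val_score
# define a small synthetic dataset (illustrative sizes)
X, y = make_regression(n_samples=200, n_features=10, n_informative=5, random_state=1)
model = GradientBoostingRegressor(n_estimators=50, random_state=1)
cv = KFold(n_splits=5, shuffle=True, random_state=1)
# scikit-learn negates error metrics, so flip the sign to recover the error
neg_mse = cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv)
rmse_from_mse = sqrt(-neg_mse)
rmse = -cross_val_score(model, X, y, scoring='neg_root_mean_squared_error', cv=cv)
mae = -cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv)
print('RMSE (sqrt of MSE): %.3f (%.3f)' % (mean(rmse_from_mse), std(rmse_from_mse)))
print('RMSE (scorer): %.3f (%.3f)' % (mean(rmse), std(rmse)))
print('MAE: %.3f (%.3f)' % (mean(mae), std(mae)))
```

Both routes give the same per-fold RMSE. On any given fold RMSE is at least as large as MAE, because squaring weights large errors more heavily.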

The best article. Thanks for such a mindblowing article.

When you use RepeatedStratifiedKFold, mostly the accuracy is calculated to find the best performing model. What if one wants to calculate metrics like recall, precision, sensitivity, and specificity? How do we calculate each of these for the repeated folds, and also the final mean of all of them, like how accuracy is calculated?

Thanks!

You can specify any metric you like for stratified k-fold cross-validation.
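As a rough sketch of how that might look, the example below uses scikit-learn's cross_validate() function, which accepts several metrics at once. Sensitivity is the same as recall for the positive class. Specificity has no built-in scorer name, so it is assumed here to be recall computed for class 0 via make_scorer(). The model and dataset sizes are illustrative only.

```python
# report several metrics across repeated stratified k-fold cross-validation
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import make_scorer, recall_score
from sklearn.model_selection import RepeatedStratifiedKFold, cross_validate
# define a small synthetic binary dataset (illustrative sizes)
X, y = make_classification(n_samples=300, n_features=10, n_informative=5, n_redundant=5, random_state=1)
model = GradientBoostingClassifier(n_estimators=50, random_state=1)
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=1)
# map a display name to a scorer; specificity is recall of the negative class
scoring = {
    'accuracy': 'accuracy',
    'precision': 'precision',
    'recall': 'recall',
    'specificity': make_scorer(recall_score, pos_label=0),
}
results = cross_validate(model, X, y, scoring=scoring, cv=cv)
# each metric has one score per fold, stored under the key 'test_<name>'
for name in scoring:
    scores = results['test_' + name]
    print('%s: %.3f (%.3f)' % (name, mean(scores), std(scores)))
```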

Congratulations on your text.

Excellent.

Thanks!

Do you have an example for the same? Or can you show how to do that?

Same what?

Thanks! Good tutorials

Thanks! You’re welcome.

What a job! Thank you Jason.

Thanks!

Hi Jason, I have a question regarding the generating the dataset.

So if you set informative to 5, does it mean that the classifier will give these 5 attributes high scores during the feature importance calculation, while the other 5 redundant attributes will score low?

If you set informative to 5 and redundant to 2, will the other 3 attributes have random importance?

Thanks

Not really.

We change informative/redundant to make the problem easier/harder – at least in the general sense.

Trees are great at sifting out redundant features automatically.
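One way to check this yourself is sketched below. It relies on the documented behaviour of make_classification(): with shuffle=False, the columns are ordered as informative features first, then redundant features, then noise. The model, the dataset sizes, and the column grouping are illustrative assumptions.

```python
# inspect feature importance by feature type on an unshuffled synthetic dataset
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
# with shuffle=False, columns are ordered: 5 informative, 2 redundant, 3 noise
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, n_redundant=2, shuffle=False, random_state=1)
model = GradientBoostingClassifier(n_estimators=50, random_state=1)
model.fit(X, y)
importances = model.feature_importances_
# column ranges for each feature type (valid only because shuffle=False)
groups = {'informative': slice(0, 5), 'redundant': slice(5, 7), 'noise': slice(7, 10)}
for name, cols in groups.items():
    print('%s: %.3f' % (name, importances[cols].sum()))
```

Redundant features are linear combinations of the informative ones, so the importance may be shared between those two groups rather than split cleanly.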

Can any of the gradient boosting methods work with multi-dimensional arrays for target values (y)?

I believe the sklearn gradient boosting implementation supports multi-output regression directly.

Perhaps test and confirm?

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingRegressor.html#sklearn.ensemble.GradientBoostingRegressor.fit

y array-like of shape (n_samples,)

Target values (strings or integers in classification, real numbers in regression) For classification, labels must correspond to classes.

Different from one that supports multi-output regression directly:

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html#sklearn.ensemble.RandomForestRegressor.fit

y array-like of shape (n_samples,) or (n_samples, n_outputs)

The target values (class labels in classification, real numbers in regression).

Nice.

Perhaps try this:

https://machinelearningmastery.com/multi-output-regression-models-with-python/
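Since GradientBoostingRegressor expects a one-dimensional target, one common workaround is to wrap it in scikit-learn's MultiOutputRegressor, which fits one independent model per target column. The sketch below assumes a small synthetic dataset with two targets; the sizes are illustrative only.

```python
# multi-output regression by wrapping gradient boosting, one model per target
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.multioutput import MultiOutputRegressor
# define a synthetic dataset with two target columns (illustrative sizes)
X, y = make_regression(n_samples=200, n_features=10, n_informative=5, n_targets=2, random_state=1)
# the wrapper clones the base model and fits one copy per target column
model = MultiOutputRegressor(GradientBoostingRegressor(n_estimators=50, random_state=1))
model.fit(X, y)
# predict both targets for a single row
yhat = model.predict(X[:1])
print('Target shape: %s, prediction shape: %s' % (y.shape, yhat.shape))
```

The wrapper treats each target separately, so it does not model any relationship between the outputs.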

Hi Jason,

I am confused how a light gradient boosting model works, since in the API they use “num_round = 10

bst = lgb.train(param, train_data, num_round, valid_sets=[validation_data])” to fit the model with the training data.

Why is it that the .fit method works in your code? Is it just because you imported the LGBMRegressor model?

Thanks,

Yes, I recommend using the scikit-learn wrapper classes – it makes using the model much simpler.

Hello Jason – I am not quite happy with the regression results of my LSTM neural network. In particular, the far ends of the y-distribution are not predicted very well.

I am wondering if I could use the principle of gradient boosting to train successive networks to correct the remaining error the previous ones have made.

What do you think of this idea? What would the risks be?

Try it!
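For anyone who wants to experiment, the core loop is sketched below. Each new model is fit on the residuals left by the ensemble so far, which is what gradient boosting does under squared error loss. Shallow scikit-learn decision trees stand in for the networks here, purely to keep the example short and fast. The data, learning rate, and number of stages are illustrative assumptions.

```python
# boosting by hand: each stage is fit on the residuals of the previous stages
import numpy as np
from sklearn.tree import DecisionTreeRegressor
# synthetic one-dimensional regression problem (illustrative only)
rng = np.random.default_rng(1)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)
learning_rate = 0.5
# start from a constant prediction: the mean of the target
prediction = np.full_like(y, y.mean())
stages = []
train_mse = [np.mean((y - prediction) ** 2)]
for _ in range(10):
    # the residuals are the errors the ensemble still makes
    residuals = y - prediction
    # a shallow tree stands in for whatever base model you want to boost
    stage = DecisionTreeRegressor(max_depth=2, random_state=1).fit(X, residuals)
    # add a damped correction to the running prediction
    prediction = prediction + learning_rate * stage.predict(X)
    stages.append(stage)
    train_mse.append(np.mean((y - prediction) ** 2))
print('Training MSE: %.4f at start, %.4f after %d stages' % (train_mse[0], train_mse[-1], len(stages)))
```

The training error can only go down from stage to stage here, so one risk is overfitting. Monitoring the error on a hold-out set after each stage would be a sensible check.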

hello

I have used XGBoost and tuned its parameters with grid search (even though I know that Bayesian optimization is better, I was obliged to use grid search).

I must answer this question: “robustness of the system is not clear, you have to specify it.” But I have no idea how to estimate robustness or what I should read to answer it.

any help, please

One estimate of model robustness is the variance or standard deviation of the performance metric from repeated evaluation on the same test harness.

Jason,

One more blog of yours published by Johar Ashfaque. https://medium.com/ai-in-plain-english/gradient-boosting-with-scikit-learn-xgboost-lightgbm-and-catboost-58e372d0d34b.

I did not find any reference to your article. This is the second one I know of. He seems to have omitted Histogram Based Gradient Boosting in here.

Ron

Thanks for letting me know, very disappointing that people rip me off so blatantly.

I also had to comment on his post because it is really shameful. He could have cited you and added his own comments or changes to the code. But really, that was a sad copy-paste.

Thank you!!!

Yes, people have no shame and I see it more and more – direct copy-paste of my tutorials on medium or similar.

I believe google can detect the duplicate content and punishes the copy cats with low rankings.

How about a gradient boosting classifier using NumPy alone?

Great suggestion, thanks!

Hi

Is CatBoost compatible with the scikit-learn API?

CatBoost can be used via the scikit-learn wrapper class, as in the above example.

thanks

You’re welcome.

Hey Jason, just wondering how you can incorporate early stopping with catboost and lightgbm? I’m getting an error which is asking for a validation set to be generated. I’m wondering if cross_val_score isn’t compatible with early stopping.

Cheers!

Sorry, I don’t have an example. I recommend checking the API documentation.

Yes, CV + early stopping don’t mix well, this may give you ideas:

https://machinelearningmastery.com/faq/single-faq/how-do-i-use-early-stopping-with-k-fold-cross-validation-or-grid-search
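As a point of comparison, scikit-learn's own GradientBoostingClassifier can do early stopping without an external validation set. It holds back a fraction of whatever training data it is given, so it works inside cross_val_score(). The sketch below uses that scikit-learn feature, not the CatBoost or LightGBM early stopping API, and the parameter values are illustrative assumptions.

```python
# early stopping inside cross-validation using scikit-learn's internal hold-out
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
# define a small synthetic dataset (illustrative sizes)
X, y = make_classification(n_samples=300, n_features=10, n_informative=5, n_redundant=5, random_state=1)
max_trees = 200
# stop adding trees once the internal validation score fails to improve for 5 rounds
model = GradientBoostingClassifier(n_estimators=max_trees, validation_fraction=0.1, n_iter_no_change=5, random_state=1)
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=1, random_state=1)
# each fold's training portion is split again internally for early stopping
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv)
print('Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# fit on all data and report how many trees were actually kept
model.fit(X, y)
print('Trees kept: %d of %d' % (model.n_estimators_, max_trees))
```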

How can I find precision, recall, and F1 scores from here?

You can specify the metrics to calculate when evaluating a model, I recommend choosing one – see this:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Thanks Jason. I always enjoy reading your articles.

You’re welcome!

Thanks for the concise post. However, when trying to reproduce the classification results here, either I get an error from joblib or the run hangs forever. Any ideas on this issue? Thanks!

Hi JTM…Are you trying to run a specific code listing from our materials? If so, please indicate the specific code listing and provide the exact error message.

Regards,

Thanks! I wanted to ask when you are reporting the MAE values for regression, the bracketed values represent the cross validation? If yes, what does it mean when the value is more than 1? Ideally the max value should be 1?

Also, when I tested a model that was made using gbr = GradientBoostingRegressor(parameters), the function gbr.score(X_test, y_test) gave a negative value like -1.08. Does this mean that the model is a blunder? What do these negative values mean?

Hi Maya…the following resource may help add clarity:

https://machinelearningmastery.com/regression-metrics-for-machine-learning/

Some model evaluation metrics such as mean squared error (MSE) are negative when calculated in scikit-learn.

This is confusing, because error scores like MSE cannot actually be negative, with the smallest value being zero or no error.

The scikit-learn library has a unified model scoring system where it assumes that all model scores are maximized. In order for this system to work with scores that are minimized, like MSE and other measures of error, the scores that are minimized are inverted by making them negative.

This can also be seen in the specification of the metric, e.g. ‘neg’ is used in the name of the metric ‘neg_mean_squared_error’.

When interpreting the negative error scores, you can ignore the sign and use them directly.

You can learn more here:

Model evaluation: quantifying the quality of predictions
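A short sketch of both cases raised in the question follows. The first part shows the negated MAE from cross_val_score() and how to flip the sign. The second part covers a separate point: the score() method of a scikit-learn regressor returns R^2 rather than an error, and R^2 drops below zero when predictions are worse than always predicting the mean. The model, dataset sizes, and toy values are illustrative assumptions.

```python
# two sources of negative scores: negated errors and R^2 below zero
from numpy import absolute, mean, std
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold, cross_val_score
# case 1: error scorers are negated so that larger is always better
X, y = make_regression(n_samples=200, n_features=10, n_informative=5, random_state=1)
model = GradientBoostingRegressor(n_estimators=50, random_state=1)
cv = KFold(n_splits=5, shuffle=True, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv)
# drop the sign to read the scores as ordinary MAE values
mae_scores = absolute(n_scores)
print('MAE: %.3f (%.3f)' % (mean(mae_scores), std(mae_scores)))
# case 2: R^2 is negative when predictions are worse than predicting the mean
y_true = [1.0, 2.0, 3.0, 4.0]
y_bad = [4.0, 3.0, 2.0, 1.0]
r2 = r2_score(y_true, y_bad)
print('R^2 for the poor predictions: %.3f' % r2)
```

MAE is measured in the units of the target, so it has no upper limit of 1. The bracketed value in the tutorial's output is the standard deviation of the scores across folds.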

Hi !

very helpful tutorial!

Can we use the same code for LightGBM Ranker and XGBoost Ranker by changing only the model fit and some of the params?

Thank you in advance!

Sofia

Hi Faiy V…There would be a great deal of reuse of code. Have you implemented models for both and compared the results? Let us know what you find!

Yes, I tried. It requires defining a group for ranking! I hope it will work!


Thanks for your article, it was very helpful.

There’s a question I’d like to ask.

You first made a model for each algorithm, implemented K-fold cross-validation on it, then made another model and predicted the targets with it.

In other words, you made 2 different models for each algorithm, cross-validated only one of them, and used the other one for predicting.

May I ask why you did that?

Could you please explain why you did not create only one model for cross-validation and prediction?

Thanks in advance.

You are very welcome Sepideh! The following may be of interest:

https://machinelearningmastery.com/training-validation-test-split-and-cross-validation-done-right/
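The short version is that cross-validation only estimates performance. The cross_val_score() function fits clones of the model on each fold and discards them, so the model object you pass in is left unfitted. A final model must then be fit on all available data before making predictions. The sketch below illustrates this with scikit-learn's GradientBoostingClassifier; checking for the estimators_ attribute is used here as a simple, assumed test for whether the model has been fit.

```python
# cross-validation estimates performance; a separate fit produces the final model
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import cross_val_score
# define a small synthetic dataset (illustrative sizes)
X, y = make_classification(n_samples=300, n_features=10, n_informative=5, n_redundant=5, random_state=1)
model = GradientBoostingClassifier(n_estimators=50, random_state=1)
# cross_val_score fits clones of the model, one per fold, and discards them
scores = cross_val_score(model, X, y, scoring='accuracy', cv=5)
print('Estimated accuracy: %.3f' % mean(scores))
# the original object is still unfitted at this point
fitted_after_cv = hasattr(model, 'estimators_')
print('Fitted after cross-validation: %s' % fitted_after_cv)
# fit the final model on all data, then use it to predict
model.fit(X, y)
fitted_after_fit = hasattr(model, 'estimators_')
yhat = model.predict(X[:1])
print('Prediction: %d' % yhat[0])
```

Creating a second model object, as the tutorial does, is equivalent to reusing the first one here. It just makes the separation between evaluation and final fit explicit.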

Thank you so much.

Hi, I am using one of your gradient boosting methods for a regression dataset. It helped improve the RMSE a lot. Thanks.

Thank you Natalie for your feedback! We appreciate it!