Image created by Author using Midjourney

Introduction

Classification algorithms are at the heart of data science, helping us categorize and organize data into pre-defined classes. These algorithms are used in a wide array of applications, from spam detection and medical diagnosis to image recognition and customer profiling. It is for this reason that those new to data science must know about and understand these algorithms: they lay foundations for more advanced techniques and provide insight into how those data-driven decisions are made.

Let’s take a look at 5 essential classification algorithms, explained intuitively. We will include resources for each to learn more if interested.

1. Logistic Regression

One of the most basic algorithms in machine learning is Logistic Regression. Used to classify data into one of two possible classes, it takes a weighted sum of the input features and maps that real number to a value between 0 and 1 using a function known as the sigmoid or logistic function. Because this output can be interpreted as the probability of belonging to the positive class, different threshold values can be used to assign each data point to a class.
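To make the sigmoid-and-threshold idea concrete, here is a minimal sketch in plain Python. The weights, bias, and sample values are hand-picked for illustration and are not learned from data; a real model would fit them during training.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number into the interval between 0 and 1."""
    # Two branches keep math.exp from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(features, weights, bias):
    """Estimated probability that one sample belongs to the positive class."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def predict(features, weights, bias, threshold=0.5):
    """Turn the probability into a class label (1 or 0) using a threshold."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0

# Hypothetical parameters and sample, chosen only for this example.
weights = [1.5, -2.0]
bias = 0.25
sample = [1.0, 0.5]

probability = predict_proba(sample, weights, bias)   # sigmoid(0.75), roughly 0.68
default_label = predict(sample, weights, bias)       # threshold 0.5
strict_label = predict(sample, weights, bias, threshold=0.8)
```

Raising the threshold makes the classifier more conservative about predicting the positive class, which is why the same sample can receive different labels under different thresholds.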

Logistic regression is commonly used in tasks like predicting customer churn (churn/not churn) and email spam identification (spam/not spam). It is appreciated for its simplicity and ease of understanding, making it a reasonable starting point for newcomers. Additionally, logistic regression is computationally efficient and can handle large datasets. Its main limitation is that it assumes a linear relationship between the feature values and the log-odds of the outcome, which can be a problem when the actual relationship is more complex.

2. Decision Trees

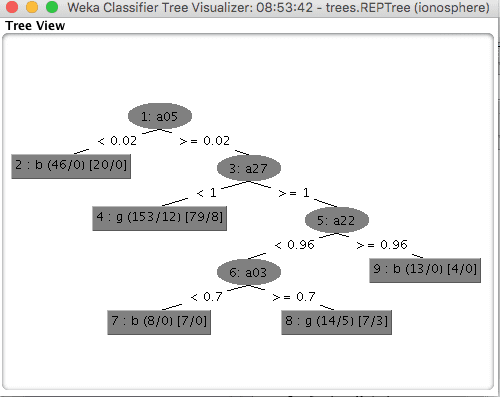

Decision Trees provide a more straightforward approach to classification, sorting a dataset into smaller and increasingly granular subsets according to feature values. The algorithm selects the “best” feature split to make at each node in the tree using a criterion like Gini impurity or entropy. Inside this tree structure there are leaf nodes which indicate final class labels, decision nodes in which decisions for splits are made and subtrees take root, and a root node which represents the entire dataset of samples.
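As a rough illustration of how a split is chosen, the sketch below computes Gini impurity and searches a single numeric feature for the threshold with the lowest weighted impurity. The `ages` and `labels` data are made up for the example, and a full tree would repeat this search recursively over every feature.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for more mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Find the threshold t that minimizes the weighted Gini impurity
    of the two groups `value <= t` and `value > t`."""
    n = len(labels)
    best_threshold, best_score = None, float("inf")
    # Skip the largest value: splitting there would leave the right side empty.
    for t in sorted(set(values))[:-1]:
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score

# Hypothetical toy data: does a customer buy, based on age alone?
ages = [22, 25, 30, 35, 40, 52]
labels = ["no", "no", "no", "yes", "yes", "yes"]

root_impurity = gini(labels)                   # 0.5 for a 50/50 mix
threshold, impurity = best_split(ages, labels)  # splits cleanly at age <= 30
```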

Common tasks involving decision trees include credit scoring and customer segmentation. They are simple to interpret and can handle both numerical and categorical data with little preprocessing or preparation. Decision trees are not without fault, however: they have a strong tendency to overfit, especially as they grow deeper, and can be brittle, in that small changes in the training data can produce a very different tree. Techniques such as pruning and setting a minimum number of samples per leaf node can help here.

Resources

- How To Implement The Decision Tree Algorithm From Scratch In Python

- Classification And Regression Trees for Machine Learning

3. Random Forest

Random Forest is an ensemble method that builds multiple decision trees and then combines their output to attain higher accuracy and prediction stability, employing a technique called bagging (short for bootstrap aggregating). As an improvement over “regular” decision tree bagging, each tree is trained on a random sample of the data and considers only a random subset of features when splitting, which decorrelates the trees and lowers the variance of the combined model. For classification, the model prediction is the majority vote of the individual trees (or an average of their predicted class probabilities).
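The sketch below illustrates the two ingredients of this approach, bootstrap sampling and combining many trees by vote, in plain Python. It is deliberately simplified and is not how production libraries implement random forests: each "tree" is a one-split stump restricted to a single randomly chosen feature, and the data set and function names are invented for the example.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, feature):
    """Fit a one-split tree on one feature, choosing the threshold
    that makes the fewest mistakes on the given training sample."""
    fallback = majority(labels)
    best = (len(labels) + 1, None, fallback, fallback)
    for t in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[feature] <= t]
        right = [y for row, y in zip(rows, labels) if row[feature] > t]
        if not right:
            continue
        left_label, right_label = majority(left), majority(right)
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda row: left_label if t is None or row[feature] <= t else right_label

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample and a random feature."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]   # sample rows with replacement
        feature = rng.randrange(n_features)          # random feature for this stump
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    """Combine the individual predictions by majority vote."""
    return majority([tree(row) for tree in forest])

# Hypothetical toy data with two well-separated classes.
rows = [(1.0, 1.2), (1.5, 0.8), (2.0, 1.0), (6.0, 5.5), (6.5, 6.1), (7.0, 5.8)]
labels = ["a", "a", "a", "b", "b", "b"]

forest = fit_forest(rows, labels)
low_prediction = predict_forest(forest, (0.5, 0.5))
high_prediction = predict_forest(forest, (8.0, 8.0))
```

Any single stump can be poor (for instance, when its bootstrap sample happens to contain only one class), but the vote across many stumps smooths out such individual errors.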

Random forest classifiers have been applied successfully to tasks such as image classification and stock price prediction, where they are valued for their accuracy and robustness. Random forests generally outperform single decision trees in both respects and cope well with large data sets. This is not to say that the model is perfect: training and querying many trees requires considerably more computation than a single tree, and the resulting model is hard to interpret because of its high number of constituent decision trees.

Resources

- How to Implement Random Forest From Scratch in Python

- How to Develop a Random Forest Ensemble in Python

- Bagging and Random Forest Ensemble Algorithms for Machine Learning

4. Support Vector Machines

The aim of Support Vector Machines (SVM) is to find the hyperplane (a separation boundary of n-1 dimensions in a dataset with n dimensions) that best separates the classes in the feature space. SVM focuses on the support vectors, the data points of each class that lie closest to the boundary, and on the “margin”, which is the distance between the hyperplane and those nearest points; the algorithm chooses the hyperplane that makes this margin as large as possible. Through a process known as the kernel trick, SVM implicitly maps data into a higher-dimensional space, where a linear split can be found. Using kernel functions like polynomial, radial basis function (RBF), or sigmoid, SVMs can effectively classify data that is not linearly separable in the original input space.
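The following sketch shows the geometric quantities involved, not the training procedure itself. It assumes a hand-picked hyperplane (the weights `w` and offset `b` are illustrative, not the result of an optimizer) and computes each point's distance to it, the resulting margin, and an RBF kernel value. The `gamma` default is likewise an arbitrary choice for the example.

```python
import math

def decision_value(x, w, b):
    """Signed score w.x + b: the sign gives the predicted side of the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def distance_to_hyperplane(x, w, b):
    """Perpendicular distance from point x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(decision_value(x, w, b)) / norm

def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel: similarity that decays with squared Euclidean distance."""
    squared_distance = sum((a - c) ** 2 for a, c in zip(x, z))
    return math.exp(-gamma * squared_distance)

# Hypothetical hyperplane x1 + x2 - 3 = 0 and four labeled points.
w, b = [1.0, 1.0], -3.0
points = {(1.0, 1.0): -1, (0.0, 2.0): -1, (2.0, 2.0): +1, (3.0, 1.0): +1}

# The margin is the distance from the hyperplane to the closest points,
# and those closest points are the support vectors.
margin = min(distance_to_hyperplane(p, w, b) for p in points)
support_vectors = [p for p in points
                   if math.isclose(distance_to_hyperplane(p, w, b), margin)]

similarity_same = rbf_kernel((1.0, 1.0), (1.0, 1.0))   # identical points give 1.0
similarity_far = rbf_kernel((0.0, 0.0), (3.0, 4.0))    # distant points approach 0
```

A kernel such as `rbf_kernel` stands in for a dot product in a higher-dimensional space, which is what lets an SVM find a linear boundary there without ever computing the mapped coordinates explicitly.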

Applications such as bioinformatics and handwriting recognition use SVM, where the technique is particularly successful in high-dimensional conditions. SVMs adapt well to a variety of other problems, largely because different kernel functions can be employed. Nevertheless, SVM training scales poorly to very large data sets, and the model requires careful tuning of its parameters, which can easily overwhelm newcomers.

Resources

- Support Vector Machines for Machine Learning

- Support Vector Machines for Image Classification and Detection Using OpenCV

5. k-Nearest Neighbors

k-Nearest Neighbors (k-NN) is an instance-based learning algorithm of incredible simplicity, proof that machine learning need not be unnecessarily complex in order to prove useful. k-NN classifies a data point purely by majority vote among its k closest neighbors in the training data. A distance metric, such as the Euclidean distance, determines which neighbors are nearest.
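Because the algorithm is so short, it can be sketched in a few lines of plain Python. The training points, labels, and the default `k=3` below are invented for illustration.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_points, train_labels, query, k=3):
    """Label the query by majority vote among its k nearest training points."""
    by_distance = sorted(zip(train_points, train_labels),
                         key=lambda pair: euclidean(pair[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two clusters in two dimensions.
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["red", "red", "red", "blue", "blue", "blue"]

near_red = knn_predict(train_points, train_labels, (2, 2))    # "red"
near_blue = knn_predict(train_points, train_labels, (6, 5))   # "blue"
```

There is no training step here: the "model" is simply the stored data, and every prediction scans all of it, which is where the cost on large datasets comes from.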

k-NN is used in tasks such as pattern recognition and recommendation systems, and its simple implementation makes it a ready entry point for the new student. A further perk is that it makes no assumptions about the underlying data distribution. It is, however, computationally expensive at prediction time on large datasets, its results depend on the choice of k, and it is sensitive to irrelevant features. Because predictions rest entirely on distances, proper feature scaling is paramount: otherwise, features with large numeric ranges dominate the result.
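To see why scaling matters for a distance-based method, consider the small sketch below. It uses a made-up data set with one feature in years and one in dollars, and min-max scaling as one possible choice of scaler; `math.dist` requires Python 3.8 or later.

```python
import math

def min_max_scaler(rows):
    """Build a function that rescales each feature to [0, 1] based on `rows`."""
    lows = [min(column) for column in zip(*rows)]
    highs = [max(column) for column in zip(*rows)]
    # Fall back to 1.0 when a feature is constant, to avoid dividing by zero.
    spans = [(hi - lo) or 1.0 for lo, hi in zip(lows, highs)]
    return lambda row: tuple((v - lo) / s for v, lo, s in zip(row, lows, spans))

def nearest_label(rows, labels, query):
    """1-nearest-neighbor prediction using Euclidean distance."""
    distances = [math.dist(row, query) for row in rows]
    return labels[distances.index(min(distances))]

# Hypothetical data: (age in years, income in dollars).
rows = [(25, 50000), (30, 90000), (60, 52000), (65, 88000)]
labels = ["young", "young", "senior", "senior"]
query = (62, 49000)

# Unscaled: income differences in the thousands swamp the age difference.
raw_prediction = nearest_label(rows, labels, query)

# Scaled: both features contribute on a comparable footing.
scale = min_max_scaler(rows)
scaled_prediction = nearest_label([scale(r) for r in rows], labels, scale(query))
```

With the raw values, the 62-year-old is matched to a 25-year-old with a similar income; after scaling, the nearest neighbor is the 60-year-old.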

Summary

Understanding these classification algorithms is essential for anyone entering data science. These algorithms are the starting point for more sophisticated models and are widely applicable across academia and industry. New students are strongly encouraged to apply these algorithms to real-world data sets in order to acquire practical experience. Developing a working knowledge of these fundamentals will prepare you for more challenging data science tasks in the future.
