In this post you will discover the k-Nearest Neighbors (KNN) algorithm for classification and regression. After reading this post you will know:

- The model representation used by KNN.

- How a model is learned using KNN (hint, it’s not).

- How to make predictions using KNN.

- The many names for KNN including how different fields refer to it.

- How to prepare your data to get the most from KNN.

- Where to look to learn more about the KNN algorithm.

This post was written for developers and assumes no background in statistics or mathematics. The focus is on how the algorithm works and how to use it for predictive modeling problems. If you have any questions, leave a comment and I will do my best to answer.

Let’s get started.

K-Nearest Neighbors for Machine Learning

Photo by Valentin Ottone, some rights reserved.

KNN Model Representation

The model representation for KNN is the entire training dataset.

It is as simple as that.

KNN has no model other than storing the entire dataset, so there is no learning required.

Efficient implementations can store the data using complex data structures like k-d trees to make look-up and matching of new patterns during prediction efficient.

Because the entire training dataset is stored, you may want to think carefully about the consistency of your training data. It might be a good idea to curate it, update it often as new data becomes available and remove erroneous and outlier data.

Making Predictions with KNN

KNN makes predictions using the training dataset directly.

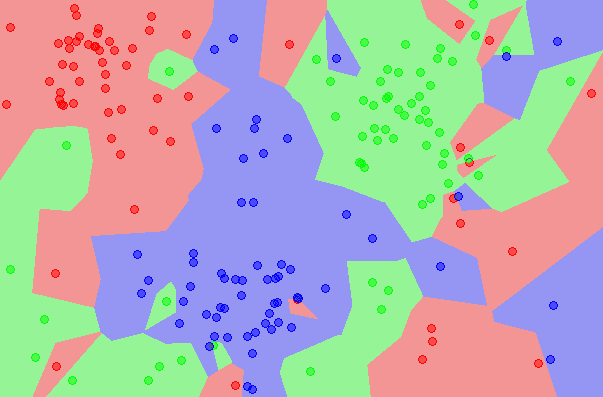

Predictions are made for a new instance (x) by searching through the entire training set for the K most similar instances (the neighbors) and summarizing the output variable for those K instances. For regression this might be the mean output variable, in classification this might be the mode (or most common) class value.
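The search-and-summarize procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the toy data are made up for this example, and it assumes Python 3.8+ so that `math.dist` (Euclidean distance) is available.

```python
import math
from collections import Counter

def k_nearest(train_X, train_y, x, k):
    """Return the outputs of the k training rows closest to x."""
    # Rank every training row by its Euclidean distance to the new instance.
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    return [train_y[i] for i in order[:k]]

def predict_class(train_X, train_y, x, k):
    """Classification: the mode (most common class) of the k neighbors."""
    return Counter(k_nearest(train_X, train_y, x, k)).most_common(1)[0][0]

def predict_value(train_X, train_y, x, k):
    """Regression: the mean output of the k neighbors."""
    neighbors = k_nearest(train_X, train_y, x, k)
    return sum(neighbors) / len(neighbors)

# Hypothetical toy data: two input attributes per row.
X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 7.5], [6.5, 6.0]]
labels = ["a", "a", "a", "b", "b", "b"]
targets = [1.0, 1.2, 0.8, 5.0, 6.0, 5.5]

print(predict_class(X, labels, [1.2, 1.1], k=3))   # a
print(predict_value(X, targets, [6.4, 6.2], k=3))  # 5.5
```

Note that nothing is "fit" here: the training rows are simply kept around and scanned at prediction time.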

To determine which of the K instances in the training dataset are most similar to a new input a distance measure is used. For real-valued input variables, the most popular distance measure is Euclidean distance.

Euclidean distance is calculated as the square root of the sum of the squared differences between a new point (x) and an existing point (xi) across all input attributes j.

EuclideanDistance(x, xi) = sqrt( sum_j( (xj - xij)^2 ) )
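As a small worked example, the formula can be written directly in Python. The function name is chosen for illustration, and the two points are made up.

```python
import math

def euclidean_distance(x, xi):
    """Square root of the summed squared differences over all attributes j."""
    return math.sqrt(sum((xj - xij) ** 2 for xj, xij in zip(x, xi)))

# Differences are 3 and 4, so the distance is sqrt(9 + 16) = 5.
print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```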

Other popular distance measures include:

- Hamming Distance: Calculate the distance between binary vectors.

- Manhattan Distance: Calculate the distance between real vectors using the sum of their absolute difference. Also called City Block Distance.

- Minkowski Distance: Generalization of Euclidean and Manhattan distance.
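The three measures in this list might be sketched as follows. The function names are illustrative, and the Minkowski order is passed as a parameter `p` (an assumed name): `p=1` gives Manhattan distance and `p=2` gives Euclidean distance.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length vectors differ."""
    return sum(1 for ai, bi in zip(a, b) if ai != bi)

def manhattan_distance(a, b):
    """Sum of the absolute differences between two real-valued vectors."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b))

def minkowski_distance(a, b, p):
    """Generalization: p=1 is Manhattan distance, p=2 is Euclidean distance."""
    return sum(abs(ai - bi) ** p for ai, bi in zip(a, b)) ** (1.0 / p)

print(hamming_distance([1, 0, 1, 1], [1, 1, 0, 1]))   # 2
print(manhattan_distance([0.0, 0.0], [3.0, 4.0]))     # 7.0
print(minkowski_distance([0.0, 0.0], [3.0, 4.0], 2))  # 5.0
```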

There are many other distance measures that can be used, such as Tanimoto, Jaccard, Mahalanobis and cosine distance. You can choose the best distance metric based on the properties of your data. If you are unsure, you can experiment with different distance metrics and different values of K together and see which mix results in the most accurate models.

Euclidean is a good distance measure to use if the input variables are similar in type (e.g. all measured widths and heights). Manhattan distance is a good measure to use if the input variables are not similar in type (such as age, gender, height, etc.).

The value for K can be found by algorithm tuning. It is a good idea to try many different values for K (e.g. values from 1 to 21) and see what works best for your problem.
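One way to run this kind of tuning is to score each candidate K with leave-one-out evaluation and keep the best. The sketch below is only an illustration: the helper names and the toy dataset are assumptions, and because the toy data has just ten rows it tries K in (1, 3, 5, 7) rather than the full 1 to 21 range.

```python
import math
from collections import Counter

def predict(train_X, train_y, x, k):
    """Majority vote among the k training rows nearest to x (Euclidean)."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def leave_one_out_accuracy(X, y, k):
    """Predict each row from all the other rows and report the hit rate."""
    correct = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        if predict(rest_X, rest_y, X[i], k) == y[i]:
            correct += 1
    return correct / len(X)

# Hypothetical toy data with two classes.
X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [2.5, 2.5], [1.0, 2.2],
     [6.0, 6.0], [7.0, 7.5], [6.5, 6.0], [5.5, 7.0], [3.0, 3.0]]
y = ["a"] * 5 + ["b"] * 5

scores = {k: leave_one_out_accuracy(X, y, k) for k in (1, 3, 5, 7)}
best_k = max(scores, key=scores.get)
for k, acc in scores.items():
    print(f"k={k}: accuracy={acc:.2f}")
print("best k:", best_k)
```

On a real problem you would likely swap the leave-one-out loop for k-fold cross-validation to keep the cost manageable.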

The computational complexity of KNN increases with the size of the training dataset. For very large training sets, KNN can be made stochastic by taking a sample from the training dataset from which to calculate the K-most similar instances.
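A rough sketch of the sampling idea: instead of scanning every training row, draw a random subset and look for neighbors only within it. The function name, the `sample_size` parameter, and the synthetic data are all assumptions made for this example.

```python
import math
import random
from collections import Counter

def predict_sampled(train_X, train_y, x, k, sample_size, rng):
    """Find the k nearest neighbors within a random subset of the training rows."""
    n = len(train_X)
    idx = rng.sample(range(n), min(sample_size, n))
    # Only the sampled rows are ranked by distance to x.
    idx.sort(key=lambda i: math.dist(train_X[i], x))
    votes = Counter(train_y[i] for i in idx[:k])
    return votes.most_common(1)[0][0]

# Synthetic data: class "a" clustered near (0, 0), class "b" near (10, 10).
rng = random.Random(42)
train_X = ([[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(500)]
           + [[rng.gauss(10, 1), rng.gauss(10, 1)] for _ in range(500)])
train_y = ["a"] * 500 + ["b"] * 500

print(predict_sampled(train_X, train_y, [0.2, -0.1], k=5, sample_size=50, rng=rng))
```

Each call ranks only 50 rows instead of 1,000. The trade-off is that the prediction can vary from call to call, because a different sample may be drawn each time.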

KNN has been around for a long time and has been very well studied. As such, different disciplines have different names for it, for example:

- Instance-Based Learning: The raw training instances are used to make predictions. As such, KNN is often referred to as instance-based learning or case-based learning (where each training instance is a case from the problem domain).

- Lazy Learning: No learning of the model is required and all of the work happens at the time a prediction is requested. As such, KNN is often referred to as a lazy learning algorithm.

- Non-Parametric: KNN makes no assumptions about the functional form of the problem being solved. As such KNN is referred to as a non-parametric machine learning algorithm.

KNN can be used for regression and classification problems.

KNN for Regression

When KNN is used for regression problems the prediction is based on the mean or the median of the K-most similar instances.
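The choice between mean and median can matter when a neighbor has an unusual output value. Here is a small sketch, with made-up data and an assumed `summary` parameter, that shows both on the same neighbors.

```python
import math
import statistics

def knn_regress(train_X, train_y, x, k, summary=statistics.mean):
    """Summarize the outputs of the k nearest rows with the given function."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    return summary([train_y[i] for i in order[:k]])

# Hypothetical data: the output 50.0 is an outlier among its neighbors.
X = [[1.0], [2.0], [3.0], [4.0], [10.0]]
y = [10.0, 12.0, 50.0, 11.0, 30.0]

print(knn_regress(X, y, [2.5], k=4))                            # 20.75
print(knn_regress(X, y, [2.5], k=4, summary=statistics.median))  # 11.5
```

The mean is pulled toward the outlier, while the median stays close to the typical neighbor value.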

KNN for Classification

When KNN is used for classification, the output can be calculated as the class with the highest frequency from the K-most similar instances. Each instance in essence votes for its class and the class with the most votes is taken as the prediction.

Class probabilities can be calculated as the normalized frequency of samples that belong to each class in the set of K most similar instances for a new data instance. For example, in a binary classification problem (class is 0 or 1):

p(class=0) = count(class=0) / (count(class=0)+count(class=1))
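The same calculation, generalized to any number of classes, could look like this. The function name and the example neighbor classes are illustrative.

```python
from collections import Counter

def class_probabilities(neighbor_classes):
    """Normalized frequency of each class among the K nearest neighbors."""
    counts = Counter(neighbor_classes)
    total = len(neighbor_classes)
    return {cls: n / total for cls, n in counts.items()}

# Classes of the K=5 nearest neighbors for some new instance.
print(class_probabilities([0, 1, 0, 0, 1]))  # {0: 0.6, 1: 0.4}
```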

If you have an even number of classes (e.g. 2), it is a good idea to choose an odd value for K to avoid a tie. Conversely, a common rule of thumb is to use an even value for K when you have an odd number of classes, although with more than two classes a tie is still possible for any K.

Ties can be broken consistently by expanding K by 1 and looking at the class of the next most similar instance in the training dataset.
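A sketch of this tie-breaking rule is shown below. It keeps expanding K by 1 until one class is clearly ahead or the training rows run out; the function name and toy data are assumptions for illustration, and the training set is assumed to be non-empty.

```python
import math
from collections import Counter

def predict_with_tie_break(train_X, train_y, x, k):
    """Majority vote; on a tie, grow k by 1 and look at the next neighbor."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    k = min(k, len(order))
    while True:
        ranked = Counter(train_y[i] for i in order[:k]).most_common()
        clear_winner = len(ranked) == 1 or ranked[0][1] > ranked[1][1]
        # Stop when one class leads, or when every row has been used.
        if clear_winner or k == len(order):
            return ranked[0][0]
        k += 1

X = [[0.0], [1.0], [2.0], [3.0]]
y = ["a", "b", "b", "a"]

# With k=2 the two nearest rows vote "a" and "b" (a tie),
# so the third nearest row ("b") decides the outcome.
print(predict_with_tie_break(X, y, [0.4], k=2))  # b
```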

Curse of Dimensionality

KNN works well with a small number of input variables (p), but struggles when the number of inputs is very large.

Each input variable can be considered a dimension of a p-dimensional input space. For example, if you had two input variables x1 and x2, the input space would be 2-dimensional.

As the number of dimensions increases the volume of the input space increases at an exponential rate.

In high dimensions, points that may be similar may have very large distances. All points will be far away from each other and our intuition for distances in simple 2 and 3-dimensional spaces breaks down. This might feel unintuitive at first, but this general problem is called the “Curse of Dimensionality”.
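A quick experiment can make this concrete. The sketch below, which uses made-up uniform random points in a unit hypercube, reports the mean pairwise distance and the ratio of the smallest to the largest pairwise distance as the number of dimensions grows. The function name and the choice of 50 points are arbitrary.

```python
import math
import random

def distance_summary(n_points, n_dims, rng):
    """Mean pairwise distance and min/max distance ratio for random points."""
    pts = [[rng.random() for _ in range(n_dims)] for _ in range(n_points)]
    dists = [math.dist(pts[i], pts[j])
             for i in range(n_points) for j in range(i + 1, n_points)]
    return sum(dists) / len(dists), min(dists) / max(dists)

rng = random.Random(0)
results = {d: distance_summary(50, d, rng) for d in (2, 10, 100)}
for d, (mean_dist, ratio) in results.items():
    print(f"dims={d:>3}  mean distance={mean_dist:.2f}  nearest/farthest={ratio:.2f}")
```

You should see the mean distance grow with the number of dimensions, while the nearest and farthest pairs become more alike. When every point is roughly equally far away, "nearest" neighbors carry less information.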

How to Best Prepare Data for KNN

- Rescale Data: KNN performs much better if all of the data has the same scale. Normalizing your data to the range [0, 1] is a good idea. It may also be a good idea to standardize your data if it has a Gaussian distribution.

- Address Missing Data: Missing data will mean that the distance between samples cannot be calculated. These samples could be excluded or the missing values could be imputed.

- Lower Dimensionality: KNN is suited for lower dimensional data. You can try it on high dimensional data (hundreds or thousands of input variables) but be aware that it may not perform as well as other techniques. KNN can benefit from feature selection that reduces the dimensionality of the input feature space.
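The two rescaling options from the list above can be sketched per input column as follows. The function names and sample values are illustrative, and standardization here uses the population standard deviation (`statistics.pstdev`), which is one of several reasonable choices.

```python
import statistics

def normalize(column):
    """Rescale values to the range [0, 1] using the column min and max."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: nothing to spread
    return [(v - lo) / (hi - lo) for v in column]

def standardize(column):
    """Rescale values to zero mean and unit standard deviation."""
    mean = statistics.mean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [0.0 for _ in column]
    return [(v - mean) / std for v in column]

ages = [10.0, 20.0, 30.0]
print(normalize(ages))    # [0.0, 0.5, 1.0]
print(standardize(ages))
```

In practice, compute the min, max, mean and standard deviation on the training data only, then reuse them to rescale any new instance before measuring distances.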

Further Reading

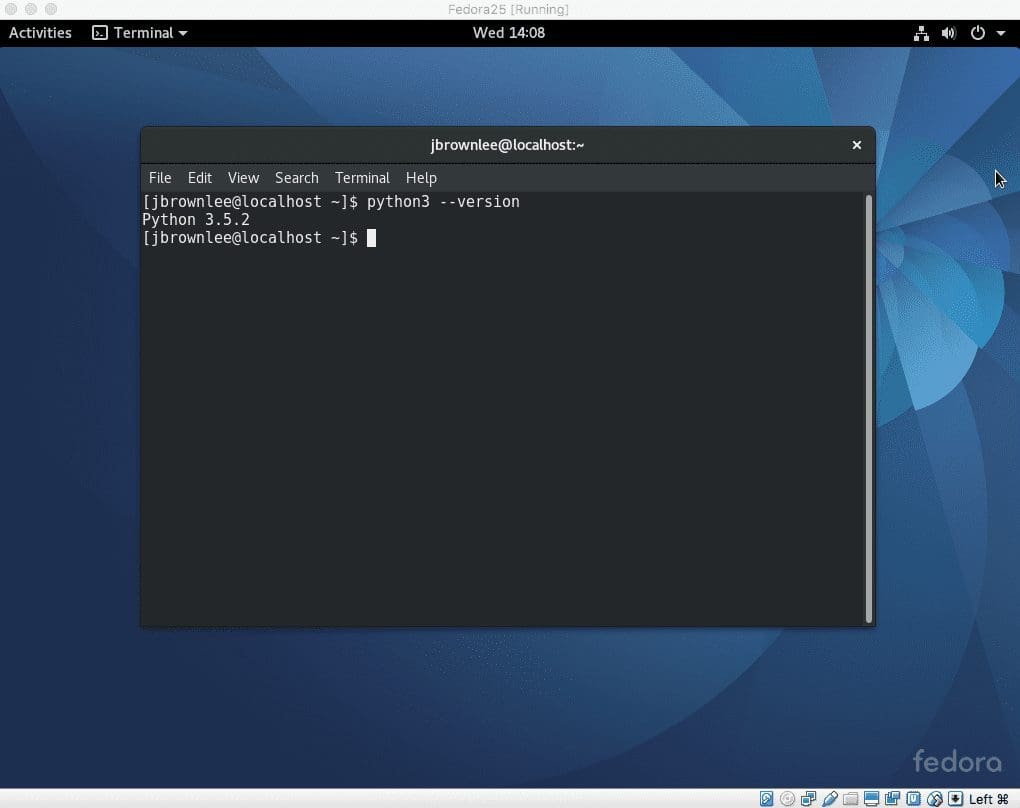

If you are interested in implementing KNN from scratch in Python, check out the post:

Below are some good machine learning texts that cover the KNN algorithm from a predictive modeling perspective.

- Applied Predictive Modeling, Chapter 7 for regression, Chapter 13 for classification.

- Data Mining: Practical Machine Learning Tools and Techniques, page 76 and 128

- Doing Data Science: Straight Talk from the Frontline, page 71

- Machine Learning, Chapter 8

Also check out K-Nearest Neighbors on Wikipedia.

Summary

In this post you discovered the KNN machine learning algorithm. You learned that:

- KNN stores the entire training dataset which it uses as its representation.

- KNN does not learn any model.

- KNN makes predictions just-in-time by calculating the similarity between an input sample and each training instance.

- There are many distance measures to choose from to match the structure of your input data.

- It is a good idea to rescale your data, such as using normalization, when using KNN.

If you have any questions about this post or the KNN algorithm, ask in the comments and I will do my best to answer.

Is KNN a good fit for finding the nearest date between two sets of data, excluding any neighbor that has already been used? If not, what algorithm would you suggest to solve this?

My question is this:

A = ['2016-05-01 00:17:10', '2016-05-01 00:49:06']

B = ['2016-05-01 01:49:02', '2016-05-01 01:17:06']

For A[0], the nearest neighbor is B[1], so B[1] is not used again in the next step, and the nearest neighbor for A[1] is therefore B[0]. Thanks for your advice.

Hi Jason,

Kindly accept my encomiums for your illustrative article on kNN.

I am extremely eager to know how k has been computed to have a range between 1 and 21.

The rationale for the value k = 21 is not made explicit.

I would be very obliged if you could kindly throw light on the methodology for arriving at the lower and upper bounds of the k value.

Hi Bhavani, great question.

There is no formula to calculate the best k value for a problem.

My advice is to use trial and error. Experiment with different k values on your problem and see which results in the model with the best performance.

The value of k that I usually use is the square root of the number of observations in my dataset.

Although this might seem crazy, sometimes, when my dataset is high dimensional, iterating k from 1 to n, calculating the prediction error for each value, and then picking the best k has worked for me.

Nice tip.

thanks for this tip

Hi, for getting the optimal K, I have previously used the elbow method, where you draw a graph for different values of K and choose the K at the point where the graph forms a kind of elbow shape. I also used the square-root method, but my dataset was small.

Please advise for a big dataset: would the elbow method work?

Great tip!

Try it and see.

Hi Jason!

I was wondering if there’s any way for me to retrieve the neighbour IDs?

ie: If n = 10, I would like to specify data point x, and have the algorithm reply with the IDs of the 10 nearest neighbours of x.

Is that a thing that can be done? I’m working in R with Caret. I’d like to stick with that if possible.

I’m not sure what you mean by ID, Georgina. Perhaps the specific row number or a column value.

You may need to implement something custom for your requirements.

Hello Jason

I have a dataset of the time required for a state to complete. For example, state 1: 5.2 sec, state 2: 5.5 sec, state 3: 5.2 sec, etc. Can I use KNN to match an input and say which state it belongs to if the input is not exactly close to the training dataset? And how complex should my dataset be to solve this kind of multi-class matching?

Sorry Anand, I’m not sure I understand the question.

Thank you very much for your effort. May the God bless.

I’m glad you found the post useful.

Hello,

I wonder what the input should look like?

I have a CSV file that contains “Price” and “Score” for games. When I try to label the graph,

Python gives an error for my input.

thanks

Hello, and thanks for your useful article.

Is it true that KNN leads to overfitting for a small number of neighbors (k=1, for example)?

KNN will give different results on different problems.

Does the KNN algorithm work on categorical variables too?

Yes.

How do I use KNN for categorical data (in R)? I use the kddcup data, which has categorical features.

Perhaps use hamming distance for categorical inputs.

Is there a way to select features for/using KNN? Like the way LASSO regression can be used for feature selection. I am assuming that LASSO cannot be run prior to KNN, as the former is a parametric model and the latter a non-parametric one.

Yes, consider RFE.

How can I find the most influential features for the KNN implementation?

I want to select a few features from the list of features.

Use feature selection methods:

https://machinelearningmastery.com/an-introduction-to-feature-selection/

hi jason,

Please clarify my doubt. When I went through the KNN algorithm while implementing it in R, we were not using lazy and eager learning techniques. When should we use them?

Regards,

Bhagyashree

KNN is a lazy learning algorithm.

Practically all other common ML algorithms could be considered eager learning (e.g. preparing the model from training data).

Awesome post. Thank you for your effort. 🙂

I’m glad it helped.

I am unable to download the mindmap.

Try here:

https://machinelearningmastery.leadpages.co/machine-learning-algorithms-mini-course/

how to apply knn for extracted retinal blood vessel structure in comparison of test image with respect to training images for biometric authentication

If you are working with images, perhaps try the CNN deep learning method instead?

why we use KNN for displaying shortest paths than other algorithms i.e., what is the speciality

I don’t believe kNN is used as a shortest path algorithm, it is used for classification and regression.

How do I use this for regression? Do I need to calculate the probabilities for the same, just like classification?

See this post:

https://machinelearningmastery.com/spot-check-regression-machine-learning-algorithms-python-scikit-learn/

hello jason,

how can I perform binary classification using KNN?

What is the problem exactly?

Hello Dr. Jason

I sent an email asking which algorithm I should use for malware detection, and you told me that supervised learning requires a classification algorithm.

My question: is KNN good for malware detection? What is the best classification algorithm for malware detection?

Thank you for the excellent tutorial.

I would recommend testing a suite of supervised learning algorithms to see what works best for your problem.

This process may help guide you through your supervised predictive modeling problem:

https://machinelearningmastery.com/start-here/#process

Hi

How do we deal with ties in KNN? How does it vote when the neighbors are at the same distance?

What methods are used?

You can use a random choice in that case.

In fact, there are many methods in the literature for working through ties. It might be worth doing a quick survey.

I want to know how to apply the KNN algorithm to multiple inputs (all are independent). Training the algorithm is all good, but if I want to enter a new input (i.e. active user input) and predict the output for it, how is that possible? I have been facing this problem for the last two months, and for the time being I have converted the three inputs into one by taking their mean. Please suggest.

I would recommend using sklearn to develop your model.

You can then make predictions directly by calling predict() on the model. Learn more here:

https://machinelearningmastery.com/make-predictions-scikit-learn/

hello Jason,

I am getting the same output for every different input. Is there problem in the data or algorithm? Please suggest.

Here are some ideas:

https://machinelearningmastery.com/machine-learning-performance-improvement-cheat-sheet/

Hello Jason,

is there any algorithm which can do classification (k-nearest neighbor) with categorical variables? If yes, which one?

kNN can classify with categorical variables, you must use a distance measure that can handle the categorical vars.

Can you explain the intuition behind Hamming distance?

If I have gender as an independent variable in my dataset, and the unseen data has male as the category, how do I predict the y value?

More on hamming distance here:

https://en.wikipedia.org/wiki/Hamming_distance

How can we apply the KNN algorithm to a dataset which has a mixture of numerical and categorical attributes?

Good question. You can use a hamming distance for categorical variables and euclidean or similar for the normalised numerical variables, then add the scores together.
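A minimal sketch of this combined measure is shown below. The function name, the index arguments, and the example rows are made up for illustration, and the numeric attributes are assumed to be normalized to [0, 1] already.

```python
import math

def mixed_distance(a, b, numeric_idx, categorical_idx):
    """Euclidean distance over numeric attributes plus Hamming distance
    over categorical attributes, added together."""
    numeric = math.sqrt(sum((a[i] - b[i]) ** 2 for i in numeric_idx))
    categorical = sum(1 for i in categorical_idx if a[i] != b[i])
    return numeric + categorical

# Hypothetical rows: two normalized numeric values, then two categories.
row_a = [0.2, 0.5, "male", "red"]
row_b = [0.5, 0.9, "female", "red"]

# Numeric part: sqrt(0.09 + 0.16) = 0.5. Categorical part: 1 mismatch.
print(mixed_distance(row_a, row_b, numeric_idx=[0, 1], categorical_idx=[2, 3]))
```

Simply adding the two scores gives each categorical mismatch a weight of 1, which can dominate the normalized numeric part. You may want to experiment with weighting the two parts differently.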

Very great Post! I saw that you write about different methods in different posts.

Do you also have a post about a comparison of the different machine learning algorithms (properties, when to use them, limitations ..) in order to be able to compare the methods?

Thank you very much

Timo

I generally recommend spot checking algorithms on a problem instead, more details here:

https://machinelearningmastery.com/faq/single-faq/what-algorithm-config-should-i-use

what will be the knn algo for 10 features and 2 faces? TIA

I don’t follow sorry, what do you mean?

Is it possible to use non- metric measure with KNN?

If the data set is mixed (numerical, nominal, and binary) features, for classifying such data we need to define new measure able to handle the three types.

Generally, the combined approach is widely used for this case.

If the new measure is a combination of different measures, it is very difficult to fulfill the triangle inequality, so it can be similarity measure or distance but not metric.

My question is

Is it possible to use such measure with KNN?

as we know KNN requires metric distance.

In general:

Can I use KNN with a non-metric measure for classifying the data?

Sure. You can use any sensible distance measure you like.

I recommend keeping things simple.

Great post, Jason. Thanks.

One question: say there are 100 records classified as YES and NO. If all 100 are YES, will a new record, i.e. the 101st record, which is actually a NO, get classified as NO?

No, the model needs examples of yes and no, ideally an equal number of examples.

Good afternoon, thank you for providing these explanations. Perhaps due to my shortcomings in math, can you explain “for very large training sets, kNN can be made stochastic by taking a sample from the training dataset from which to calculate the k-most similar instances” in greater detail? My understanding of “stochastic” is that it generally means random. Are you saying kNN itself becomes random when using different test data from a very large dataset? Wouldn’t that depend on how you select the data as test values? Thank you very much for any additional information you can provide.

A random sample would be taken from the training set in order to make a prediction for the test set.

This would make the prediction stochastic for a given fixed training set, when making predictions on the same test set samples. The benefit is lower computational complexity with approximately equal skill.

Hi Jason, Thank you for the informative post. Can you please let me know what could be the good feature selection method for implementing k-NN.

Good question, I answer it here:

https://machinelearningmastery.com/faq/single-faq/what-feature-selection-method-should-i-use

I have a working model that utilizes test and train data. I am struggling with the best method to implement it in a production environment. Do I need to implement a function and loop through the dataset, or does a command exist to make predictions on unseen data in Python with a large file?

Hi Chad, the following resource may help you understand best practices when deploying machine learning models:

https://machinelearningmastery.com/deploy-machine-learning-model-to-production/

Also, it may be helpful to understand the concept of pipelines:

https://machinelearningmastery.com/machine-learning-modeling-pipelines/