Distance measures play an important role in machine learning.

They provide the foundation for many popular and effective machine learning algorithms like k-nearest neighbors for supervised learning and k-means clustering for unsupervised learning.

Different distance measures must be chosen and used depending on the types of the data. As such, it is important to know how to implement and calculate a range of different popular distance measures and the intuitions for the resulting scores.

In this tutorial, you will discover distance measures in machine learning.

After completing this tutorial, you will know:

- The role and importance of distance measures in machine learning algorithms.

- How to implement and calculate Hamming, Euclidean, and Manhattan distance measures.

- How to implement and calculate the Minkowski distance that generalizes the Euclidean and Manhattan distance measures.

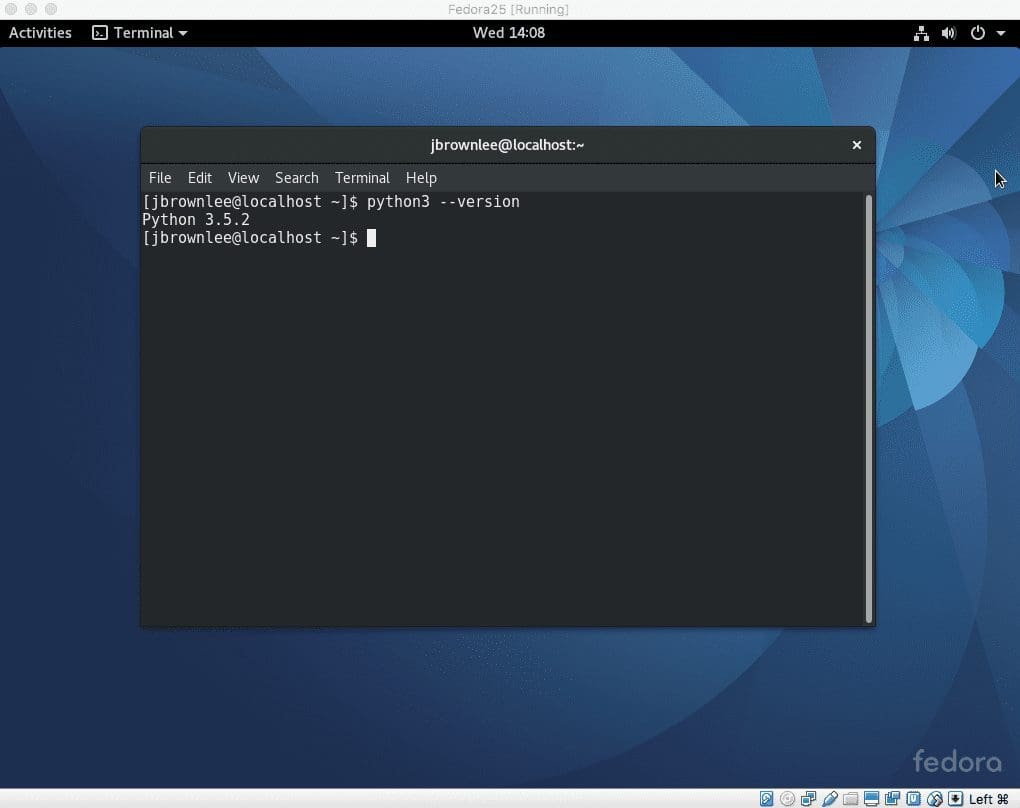


Let’s get started.

Distance Measures for Machine Learning

Photo by Prince Roy, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Role of Distance Measures

- Hamming Distance

- Euclidean Distance

- Manhattan Distance (Taxicab or City Block Distance)

- Minkowski Distance

Role of Distance Measures

Distance measures play an important role in machine learning.

A distance measure is an objective score that summarizes the relative difference between two objects in a problem domain.

Most commonly, the two objects are rows of data that describe a subject (such as a person, car, or house), or an event (such as a purchase, a claim, or a diagnosis).

Perhaps the most likely way you will encounter distance measures is when you are using a specific machine learning algorithm that uses distance measures at its core. The most famous algorithm of this type is the k-nearest neighbors algorithm, or KNN for short.

In the KNN algorithm, a classification or regression prediction is made for a new example by calculating the distance between the new example (row) and all examples (rows) in the training dataset. The k examples in the training dataset with the smallest distance are then selected, and a prediction is made by summarizing their outcomes (the mode of the class labels for classification or the mean of the real values for regression).
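The procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `knn_predict`, the toy dataset, and the choice of Euclidean distance with k=3 are all assumptions made for the example.

```python
from collections import Counter
from math import sqrt

# straight-line distance between two rows of numbers
def euclidean_distance(a, b):
    return sqrt(sum((e1 - e2) ** 2 for e1, e2 in zip(a, b)))

# hypothetical helper: predict a class label for new_row from its k nearest rows
def knn_predict(train_rows, train_labels, new_row, k=3):
    # distance from the new row to every training row, paired with its label
    distances = [(euclidean_distance(row, new_row), label)
                 for row, label in zip(train_rows, train_labels)]
    # keep the labels of the k closest training rows
    distances.sort(key=lambda pair: pair[0])
    nearest = [label for _, label in distances[:k]]
    # classification: return the most common label (the mode)
    return Counter(nearest).most_common(1)[0][0]

# toy training data (made up for illustration)
train_rows = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [8.0, 8.0], [9.0, 8.5], [8.5, 9.5]]
train_labels = ['a', 'a', 'a', 'b', 'b', 'b']
print(knn_predict(train_rows, train_labels, [1.2, 1.4]))
```

For regression, the last line of `knn_predict` would instead return the mean of the k nearest target values.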

KNN belongs to a broader field of algorithms called case-based or instance-based learning, most of which use distance measures in a similar manner. Another popular instance-based algorithm that uses distance measures is the learning vector quantization, or LVQ, algorithm that may also be considered a type of neural network.

Related is the self-organizing map algorithm, or SOM, that also uses distance measures and can be used for supervised or unsupervised learning. Another unsupervised learning algorithm that uses distance measures at its core is the K-means clustering algorithm.

In instance-based learning the training examples are stored verbatim, and a distance function is used to determine which member of the training set is closest to an unknown test instance. Once the nearest training instance has been located, its class is predicted for the test instance.

— Page 135, Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

A short list of some of the more popular machine learning algorithms that use distance measures at their core is as follows:

- K-Nearest Neighbors

- Learning Vector Quantization (LVQ)

- Self-Organizing Map (SOM)

- K-Means Clustering

Many kernel-based methods may also be considered distance-based algorithms. Perhaps the most widely known kernel method is the support vector machine algorithm, or SVM for short.

Do you know more algorithms that use distance measures?

Let me know in the comments below.

When calculating the distance between two examples or rows of data, it is possible that different data types are used for different columns of the examples. An example might have real values, boolean values, categorical values, and ordinal values. A different distance measure may be required for each type, with the per-column results summed together into a single distance score.
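One way to picture a combined score is the sketch below. It is only an illustration under stated assumptions: the function name `mixed_distance` and the `column_types` labels are hypothetical, numeric columns are assumed to be already scaled to a comparable range, and non-numeric columns simply contribute 0 when the values match and 1 when they differ.

```python
# hypothetical helper: sum a per-column distance chosen by column type
def mixed_distance(row1, row2, column_types):
    total = 0.0
    for v1, v2, kind in zip(row1, row2, column_types):
        if kind == 'numeric':
            # numeric column: absolute difference (assumes values are pre-scaled)
            total += abs(v1 - v2)
        else:
            # categorical or boolean column: 0 if equal, 1 if different
            total += 0.0 if v1 == v2 else 1.0
    return total

# made-up rows with one numeric, one categorical, and one boolean column
column_types = ['numeric', 'categorical', 'boolean']
row1 = [0.2, 'red', True]
row2 = [0.5, 'blue', True]
dist = mixed_distance(row1, row2, column_types)
print(dist)
```

Other choices are possible, such as weighting each column differently or treating ordinal values as numeric ranks.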

Numerical values may have different scales. This can greatly impact the calculation of a distance measure, so it is often good practice to normalize or standardize numerical values prior to calculating the distance.

Numerical error in regression problems may also be considered a distance. For example, the error between the expected value and the predicted value is a one-dimensional distance measure that can be summed or averaged over all examples in a test set to give a total distance between the expected and predicted outcomes in the dataset. The calculation of the error, such as the mean squared error or mean absolute error, may resemble a standard distance measure.
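To make the resemblance concrete, the sketch below computes the mean absolute error and mean squared error for a small set of made-up expected and predicted values. The function names and the data are assumptions for illustration only.

```python
# average of the absolute one-dimensional distances between expected and predicted
def mean_absolute_error(expected, predicted):
    return sum(abs(e - p) for e, p in zip(expected, predicted)) / len(expected)

# average of the squared one-dimensional distances between expected and predicted
def mean_squared_error(expected, predicted):
    return sum((e - p) ** 2 for e, p in zip(expected, predicted)) / len(expected)

# made-up test set outcomes
expected = [3.0, 5.0, 2.0, 7.0]
predicted = [2.0, 5.0, 4.0, 8.0]
print(mean_absolute_error(expected, predicted))
print(mean_squared_error(expected, predicted))
```

The first calculation sums absolute differences and the second sums squared differences, which are the same building blocks used by the Manhattan and Euclidean distances covered later in this tutorial.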

As we can see, distance measures play an important role in machine learning. Perhaps four of the most commonly used distance measures in machine learning are as follows:

- Hamming Distance

- Euclidean Distance

- Manhattan Distance

- Minkowski Distance

What are some other distance measures you have used or heard of?

Let me know in the comments below.

You need to know how to calculate each of these distance measures when implementing algorithms from scratch and the intuition for what is being calculated when using algorithms that make use of these distance measures.

Let’s take a closer look at each in turn.

Hamming Distance

Hamming distance calculates the distance between two binary vectors, also referred to as binary strings or bitstrings for short.

You are most likely going to encounter bitstrings when you one-hot encode categorical columns of data.

For example, if a column had the categories ‘red,’ ‘green,’ and ‘blue,’ you might one-hot encode each example as a bitstring with one bit for each category.

- red = [1, 0, 0]

- green = [0, 1, 0]

- blue = [0, 0, 1]

The distance between red and green could be calculated as the sum or the average number of bit differences between the two bitstrings. This is the Hamming distance.

For a one-hot encoded string, it might make more sense to summarize the distance as the sum of the bit differences between the strings, which will always be 0 (same category) or 2 (different categories).

- HammingDistance = sum for i to N abs(v1[i] – v2[i])

For bitstrings that may have many 1 bits, it is more common to calculate the average number of bit differences to give a hamming distance score between 0 (identical) and 1 (all different).

- HammingDistance = (sum for i to N abs(v1[i] – v2[i])) / N
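As a quick check of the two formulas, the sketch below applies both to the one-hot encoded colors from above. The helper names `hamming_sum` and `hamming_mean` are hypothetical and used only for this illustration.

```python
# sum of bit differences between two bitstrings
def hamming_sum(a, b):
    return sum(abs(e1 - e2) for e1, e2 in zip(a, b))

# average number of bit differences between two bitstrings
def hamming_mean(a, b):
    return hamming_sum(a, b) / len(a)

red = [1, 0, 0]
green = [0, 1, 0]
print(hamming_sum(red, green))   # 2
print(hamming_sum(red, red))     # 0
print(hamming_mean(red, green))
```

Two different one-hot vectors differ in exactly two positions (the bit that is switched off and the bit that is switched on), while identical vectors differ in none.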

We can demonstrate this with an example of calculating the Hamming distance between two bitstrings, listed below.

```python
# calculating hamming distance between bit strings

# calculate hamming distance
def hamming_distance(a, b):
    return sum(abs(e1 - e2) for e1, e2 in zip(a, b)) / len(a)

# define data
row1 = [0, 0, 0, 0, 0, 1]
row2 = [0, 0, 0, 0, 1, 0]
# calculate distance
dist = hamming_distance(row1, row2)
print(dist)
```

Running the example reports the Hamming distance between the two bitstrings.

We can see that there are two differences between the strings, that is, 2 out of 6 bit positions differ, which averaged (2/6) is 1/3, or about 0.333.

```
0.3333333333333333
```

We can also perform the same calculation using the hamming() function from SciPy. The complete example is listed below.

```python
# calculating hamming distance between bit strings
from scipy.spatial.distance import hamming
# define data
row1 = [0, 0, 0, 0, 0, 1]
row2 = [0, 0, 0, 0, 1, 0]
# calculate distance
dist = hamming(row1, row2)
print(dist)
```

Running the example, we can see we get the same result, confirming our manual implementation.

```
0.3333333333333333
```

Euclidean Distance

Euclidean distance calculates the distance between two real-valued vectors.

You are most likely to use Euclidean distance when calculating the distance between two rows of data that have numerical values, such as floating point or integer values.

If columns have values with differing scales, it is common to normalize or standardize the numerical values across all columns prior to calculating the Euclidean distance. Otherwise, columns that have large values will dominate the distance measure.
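The effect of scale can be seen in a small sketch. The rows below are made up (an age column and an income column), and min-max normalization is just one of the possible rescaling choices; the helper name `min_max_normalize` is an assumption for this example.

```python
from math import sqrt

# straight-line distance between two rows of numbers
def euclidean_distance(a, b):
    return sqrt(sum((e1 - e2) ** 2 for e1, e2 in zip(a, b)))

# hypothetical helper: rescale every column to the range [0, 1]
def min_max_normalize(rows):
    columns = list(zip(*rows))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(row, mins, maxs)]
            for row in rows]

# made-up rows: [age, income]
rows = [[25, 40000], [30, 41000], [60, 40500]]

# raw values: the income column dominates the distance
raw_01 = euclidean_distance(rows[0], rows[1])
raw_02 = euclidean_distance(rows[0], rows[2])
print(raw_01, raw_02)

# normalized values: both columns contribute on the same scale
scaled = min_max_normalize(rows)
norm_01 = euclidean_distance(scaled[0], scaled[1])
norm_02 = euclidean_distance(scaled[0], scaled[2])
print(norm_01, norm_02)
```

On the raw values, the third row appears closest to the first because its income is similar, even though the ages differ by 35 years. After normalization, the second row is the closest.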

Although there are other possible choices, most instance-based learners use Euclidean distance.

— Page 135, Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

Euclidean distance is calculated as the square root of the sum of the squared differences between the two vectors.

- EuclideanDistance = sqrt(sum for i to N (v1[i] – v2[i])^2)

If the distance calculation is to be performed thousands or millions of times, it is common to remove the square root operation in an effort to speed up the calculation. The resulting scores are no longer in the same proportions, but they keep the same relative ordering, so they can still be used effectively within a machine learning algorithm for finding the most similar examples.

- SquaredEuclideanDistance = sum for i to N (v1[i] – v2[i])^2
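A small sketch can confirm that dropping the square root does not change which examples are nearest. The query vector, candidate rows, and function names below are assumptions chosen for illustration.

```python
from math import sqrt

# sum of squared differences, without the square root
def squared_euclidean_distance(a, b):
    return sum((e1 - e2) ** 2 for e1, e2 in zip(a, b))

# full Euclidean distance
def euclidean_distance(a, b):
    return sqrt(squared_euclidean_distance(a, b))

# made-up query and candidate rows
query = [10, 20, 15, 10, 5]
rows = [[12, 24, 18, 8, 7], [10, 21, 15, 10, 5], [30, 5, 2, 18, 9]]

# rank the candidate rows from nearest to farthest under each measure
order_full = sorted(range(len(rows)), key=lambda i: euclidean_distance(query, rows[i]))
order_squared = sorted(range(len(rows)), key=lambda i: squared_euclidean_distance(query, rows[i]))
print(order_full)
print(order_squared)
```

Both rankings are identical because the square root is a monotonically increasing function, so it never swaps the order of two non-negative values.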

The Euclidean distance is the L2 vector norm of the difference between the two vectors. Without the square root, the calculation is equivalent to the sum squared error; with the square root, it is equivalent to the root sum squared error.

We can demonstrate this with an example of calculating the Euclidean distance between two real-valued vectors, listed below.

```python
# calculating euclidean distance between vectors
from math import sqrt

# calculate euclidean distance
def euclidean_distance(a, b):
    return sqrt(sum((e1-e2)**2 for e1, e2 in zip(a,b)))

# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance
dist = euclidean_distance(row1, row2)
print(dist)
```

Running the example reports the Euclidean distance between the two vectors.

```
6.082762530298219
```

We can also perform the same calculation using the euclidean() function from SciPy. The complete example is listed below.

```python
# calculating euclidean distance between vectors
from scipy.spatial.distance import euclidean
# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance
dist = euclidean(row1, row2)
print(dist)
```

Running the example, we can see we get the same result, confirming our manual implementation.

```
6.082762530298219
```

Manhattan Distance (Taxicab or City Block Distance)

The Manhattan distance, also called the Taxicab distance or the City Block distance, calculates the distance between two real-valued vectors.

It is perhaps more useful for vectors that describe objects on a uniform grid, like a chessboard or city blocks. The taxicab name for the measure refers to the intuition for what the measure calculates: the shortest path that a taxicab would take between city blocks (coordinates on the grid).
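The taxicab intuition can be illustrated with two grid coordinates. In the sketch below, the points and function names are assumptions for the example; it compares the number of blocks a taxicab must drive with the straight-line distance between the same two corners.

```python
from math import sqrt

# number of grid blocks travelled along the axes
def manhattan_distance(a, b):
    return sum(abs(e1 - e2) for e1, e2 in zip(a, b))

# straight-line distance, ignoring the grid
def euclidean_distance(a, b):
    return sqrt(sum((e1 - e2) ** 2 for e1, e2 in zip(a, b)))

# two made-up street corners on a city grid
start = [0, 0]
end = [3, 4]
print(manhattan_distance(start, end))  # 7
print(euclidean_distance(start, end))  # 5.0
```

The taxicab has to cover 3 blocks in one direction and 4 in the other, for a total of 7, whereas the straight line between the corners has length 5.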

It might make sense to calculate Manhattan distance instead of Euclidean distance for two vectors in an integer feature space.

Manhattan distance is calculated as the sum of the absolute differences between the two vectors.

- ManhattanDistance = sum for i to N |v1[i] – v2[i]|

The Manhattan distance is related to the L1 vector norm and to the sum absolute error and mean absolute error metrics.

We can demonstrate this with an example of calculating the Manhattan distance between two integer vectors, listed below.

```python
# calculating manhattan distance between vectors

# calculate manhattan distance
def manhattan_distance(a, b):
    return sum(abs(e1-e2) for e1, e2 in zip(a,b))

# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance
dist = manhattan_distance(row1, row2)
print(dist)
```

Running the example reports the Manhattan distance between the two vectors.

```
13
```

We can also perform the same calculation using the cityblock() function from SciPy. The complete example is listed below.

```python
# calculating manhattan distance between vectors
from scipy.spatial.distance import cityblock
# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance
dist = cityblock(row1, row2)
print(dist)
```

Running the example, we can see we get the same result, confirming our manual implementation.

```
13
```

Minkowski Distance

Minkowski distance calculates the distance between two real-valued vectors.

It is a generalization of the Euclidean and Manhattan distance measures and adds a parameter, called the “order” or “p”, that allows different distance measures to be calculated.

The Minkowski distance measure is calculated as follows:

- MinkowskiDistance = (sum for i to N (abs(v1[i] – v2[i]))^p)^(1/p)

Where “p” is the order parameter.

When p is set to 1, the calculation is the same as the Manhattan distance. When p is set to 2, it is the same as the Euclidean distance.

- p=1: Manhattan distance.

- p=2: Euclidean distance.

Intermediate values provide a controlled balance between the two measures.
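To see what an intermediate value does, the sketch below evaluates the formula for p=1, p=1.5, and p=2 on the same pair of vectors. The choice of p=1.5 is arbitrary and only serves as an illustration.

```python
# Minkowski distance of order p between two vectors
def minkowski_distance(a, b, p):
    return sum(abs(e1 - e2) ** p for e1, e2 in zip(a, b)) ** (1 / p)

# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]

# compare the Manhattan (p=1), intermediate (p=1.5), and Euclidean (p=2) results
for p in [1, 1.5, 2]:
    print(p, minkowski_distance(row1, row2, p))
```

For these vectors, the p=1.5 result falls between the Euclidean and Manhattan values, and the distance shrinks as p grows.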

It is common to use Minkowski distance when implementing a machine learning algorithm that uses distance measures as it gives control over the type of distance measure used for real-valued vectors via a hyperparameter “p” that can be tuned.

We can demonstrate this calculation with an example of calculating the Minkowski distance between two real vectors, listed below.

```python
# calculating minkowski distance between vectors

# calculate minkowski distance
def minkowski_distance(a, b, p):
    return sum(abs(e1-e2)**p for e1, e2 in zip(a,b))**(1/p)

# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance (p=1)
dist = minkowski_distance(row1, row2, 1)
print(dist)
# calculate distance (p=2)
dist = minkowski_distance(row1, row2, 2)
print(dist)
```

Running the example first calculates and prints the Minkowski distance with p set to 1 to give the Manhattan distance, then with p set to 2 to give the Euclidean distance, matching the values calculated on the same data from the previous sections.

```
13.0
6.082762530298219
```

We can also perform the same calculation using the minkowski_distance() function from SciPy. The complete example is listed below.

```python
# calculating minkowski distance between vectors
from scipy.spatial import minkowski_distance
# define data
row1 = [10, 20, 15, 10, 5]
row2 = [12, 24, 18, 8, 7]
# calculate distance (p=1)
dist = minkowski_distance(row1, row2, 1)
print(dist)
# calculate distance (p=2)
dist = minkowski_distance(row1, row2, 2)
print(dist)
```

Running the example, we can see we get the same results, confirming our manual implementation.

```
13.0
6.082762530298219
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

APIs

- Distance computations (scipy.spatial.distance)

- scipy.spatial.distance.hamming API.

- scipy.spatial.distance.euclidean API.

- scipy.spatial.distance.cityblock API.

- scipy.spatial.minkowski_distance API.

Articles

- Instance-based learning, Wikipedia.

- Hamming distance, Wikipedia.

- Euclidean distance, Wikipedia.

- Taxicab geometry, Wikipedia.

- Minkowski distance, Wikipedia.

Summary

In this tutorial, you discovered distance measures in machine learning.

Specifically, you learned:

- The role and importance of distance measures in machine learning algorithms.

- How to implement and calculate Hamming, Euclidean, and Manhattan distance measures.

- How to implement and calculate the Minkowski distance that generalizes the Euclidean and Manhattan distance measures.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Why didn’t you write about Mahalanobis distance? It’s much better than Euclidean, if we consider different measure scales of variables and correlations between them.

Upvote for covering Mahalanobis distance!

Thanks Mel!

Can you suggest me the books for calculating the distances for data science

Hi Subrata…The following resource may be of interest:

https://towardsdatascience.com/9-distance-measures-in-data-science-918109d069fa

Another upvote for Mahalanobis

Thanks, it’s on my TODO.

Great suggestion, thanks!

Agree with the comment above. Furthermore, the difference between mahalanobis and eucliden distance metric could be explained by using unsupervised support vector clustering algorithm that uses euclidean distance and unsupervised ellipsoidal support vector clustering algorithm that uses mahalanobis distance metric.

Thanks.

No love for vector cosine similarity?

Great suggestion, thanks.

How about EMD distance?

What is EMD distance?

Earth Mover’s Distance

Thanks.

It is worth mention that in some advance cases the default metric option are not enough (for example metric options available for KNN in sklearn). I am working currently on the project in which KNN distance is defined using both categorical columns ( having various distance weight in case of value difference ) and numerical columns (having distance proportional to absolute value difference). Final distance is a sum of distances over columns.

Don’t be afraid of custom metrics!

Regards!

Great tip!

Whats the difference between , similarity and distance ?

Also , difference between :

1 Cosine distance and Euclidean distance ?

2 Cosine similarity and Euclidean similarity ?

Not a lot, in this context they mean the same thing.

Hi, im still learning bout this distance measurement. can i ask you a question sir?

how did the rows data in euclidean work and how to obtain the data? is it a random numerical value?

i hope this question didnt too much for you sir. thank you

You would collect data from your domain, each row of data would be one observation.

in my case, im doing a project to measure the similarity for images. so can i used the coordinates of the image as my data?

I believe there are specific measures used for comparing the similarity between images (matrix of pixels). I recommend checking the literature.

Cosine model in distance algorithm is missing, update it with cosine model that is 5 the model of distance measure

Thanks for the suggestion.

New to Distance Measuring; For an unsupervised learning K-Clustering Analysis is there a preferred method. My variables relate to shopping and trying to identify groups of customers with same shopping habits, i have customer information (age, income, education level) and products they purchase. Thanks.

Yes, there are specific metrics for clustering:

https://machinelearningmastery.com/faq/single-faq/how-do-i-evaluate-a-clustering-algorithm

Cosin Similarity Distance

Great suggestion!

I love the subject very much. Friday i has a exam on clustering, ACO, perceptron. I can every math and i love to do the math. I have a wish to work with Data science in PhD.

Hi Jason, thank for this post

In the same context, i have tuned the distance metric of KKNN algorithm in caret and it gives me three values 1, 2 and 3 using the random search. What does this mean?

Hi Ghizlane…You may find the following of interest:

https://www.analyticsvidhya.com/blog/2021/08/how-knn-uses-distance-measures/

Thanks James for the reply, I will check the link

Hi Jason very nice post, does PCA can be considered a measure based algorithm? When the projection is computed it uses a distance measure at its core, wright?

Hi Dani…You may find the following of interest:

https://www.keboola.com/blog/pca-machine-learning

Many thanks for the contribution.

Please could you tell me how to initialize the K-Means process with Manhattan distance method?

Same as Euclidean distance? Choice of Cluster, Centroids calculation, iteration..etc?

Thank you

You are very welcome Renato! The following resource may be of interest:

https://www.analyticsvidhya.com/blog/2019/08/comprehensive-guide-k-means-clustering/

Hello

can you help me about a better way than wesserstain statistical distance for metric in machin learning?

Hi farid…Please elaborate on the criteria you consider when comparing algorithms. In other words, please elaborate on the issue or concern you have regarding Wasserstein.

Alternatives can be found here:

https://towardsdatascience.com/statistical-tests-wont-help-you-to-compare-distributions-d829eefe418