A popular demonstration of the capability of deep learning techniques is object recognition in image data.

The “hello world” of object recognition for machine learning and deep learning is the MNIST dataset for handwritten digit recognition.

In this post, you will discover how to develop a deep learning model to achieve near state-of-the-art performance on the MNIST handwritten digit recognition task in Python using the Keras deep learning library.

After completing this tutorial, you will know:

- How to load the MNIST dataset in Keras

- How to develop and evaluate a baseline neural network model for the MNIST problem

- How to implement and evaluate a simple Convolutional Neural Network for MNIST

- How to implement a close to state-of-the-art deep learning model for MNIST


Let’s get started.

- Jun/2016: First published

- Update Oct/2016: Updated for Keras 1.1.0, TensorFlow 0.10.0 and scikit-learn v0.18

- Update Mar/2017: Updated for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update Sep/2019: Updated for Keras 2.2.5 API

- Update Jul/2022: Updated for TensorFlow 2.x API

Note, for an extended version of this tutorial, see:

Handwritten digit recognition using convolutional neural networks in Python with Keras


Description of the MNIST Handwritten Digit Recognition Problem

MNIST is a dataset developed by Yann LeCun, Corinna Cortes, and Christopher Burges for evaluating machine learning models on the handwritten digit classification problem.

The dataset was constructed from a number of scanned document datasets available from the National Institute of Standards and Technology (NIST). This is where the name for the dataset comes from, the Modified NIST or MNIST dataset.

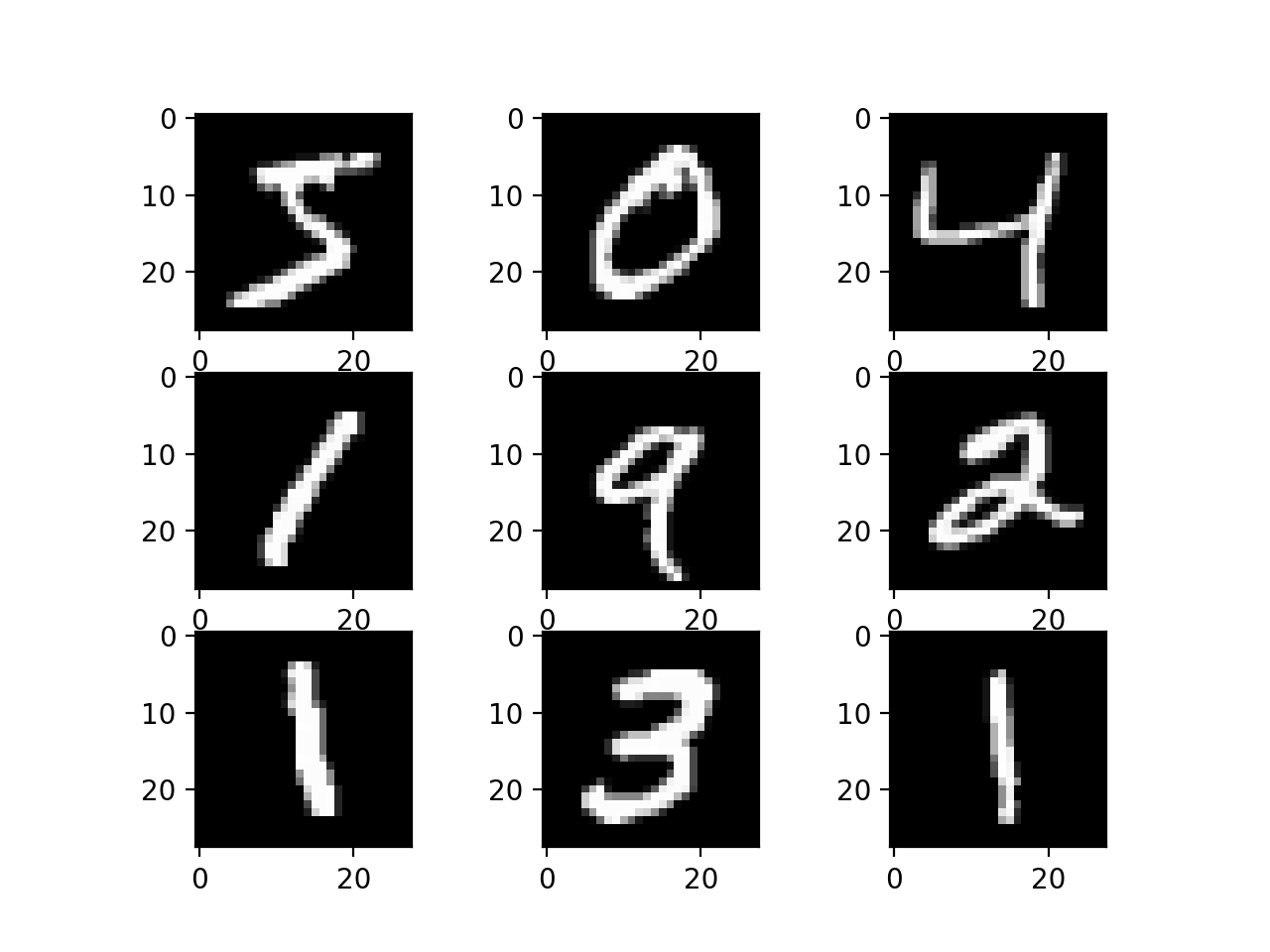

Images of digits were taken from a variety of scanned documents, normalized in size, and centered. This makes it an excellent dataset for evaluating models, allowing the developer to focus on machine learning with minimal data cleaning or preparation required.

Each image is a 28×28-pixel square (784 pixels total). A standard split of the dataset is used to evaluate and compare models, where 60,000 images are used to train a model, and a separate set of 10,000 images is used to test it.

It is a digit recognition task. As such, there are ten digits (0 to 9) or ten classes to predict. Results are reported using prediction error, which is simply one minus the classification accuracy (for example, 99% accuracy corresponds to 1% error).
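As a small illustration of how prediction error relates to accuracy, the sketch below computes both from a handful of labels and predictions. The values and the `classification_accuracy` helper are made up for this example and are not MNIST results.

```python
# Hypothetical labels and predictions for ten digit images (not real MNIST results).
y_true = [7, 2, 1, 0, 4, 1, 4, 9, 5, 9]
y_pred = [7, 2, 1, 0, 4, 1, 4, 9, 6, 9]

def classification_accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

accuracy = classification_accuracy(y_true, y_pred)
error = 1.0 - accuracy  # prediction error is the complement of accuracy

print("Accuracy: %.1f%%" % (accuracy * 100))
print("Error: %.1f%%" % (error * 100))
```

One wrong prediction out of ten gives 90% accuracy and therefore 10% error.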

Excellent results achieve a prediction error of less than 1%. A state-of-the-art prediction error of approximately 0.2% can be achieved with large convolutional neural networks. There is a listing of the state-of-the-art results and links to the relevant papers on the MNIST and other datasets on Rodrigo Benenson’s webpage.


Loading the MNIST Dataset in Keras

The Keras deep learning library provides a convenient method for loading the MNIST dataset.

The dataset is downloaded automatically the first time this function is called and stored in your home directory in ~/.keras/datasets/mnist.npz as an 11MB file.

This is very handy for developing and testing deep learning models.

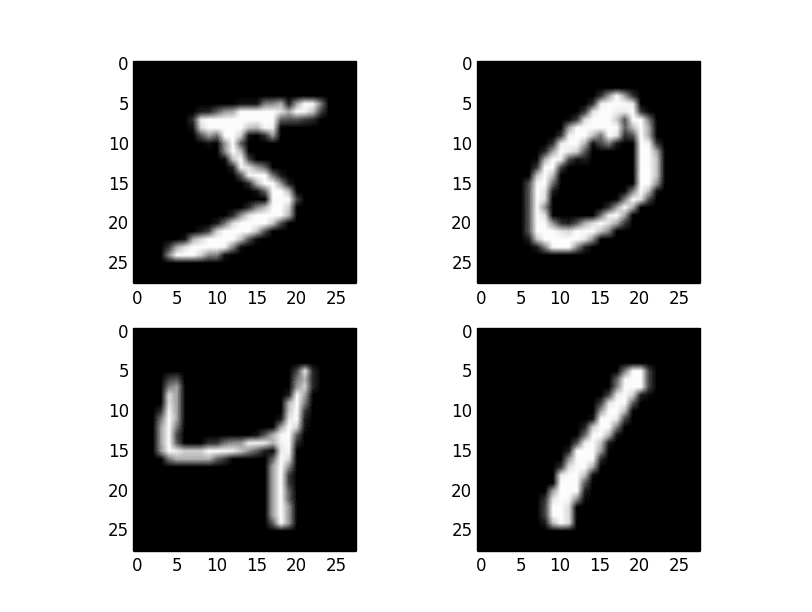

To demonstrate how easy it is to load the MNIST dataset, first, write a little script to download and visualize the first four images in the training dataset.

```python
# Plot ad hoc mnist instances
from tensorflow.keras.datasets import mnist
import matplotlib.pyplot as plt
# load (downloaded if needed) the MNIST dataset
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# plot 4 images as gray scale
plt.subplot(221)
plt.imshow(X_train[0], cmap=plt.get_cmap('gray'))
plt.subplot(222)
plt.imshow(X_train[1], cmap=plt.get_cmap('gray'))
plt.subplot(223)
plt.imshow(X_train[2], cmap=plt.get_cmap('gray'))
plt.subplot(224)
plt.imshow(X_train[3], cmap=plt.get_cmap('gray'))
# show the plot
plt.show()
```

You can see that downloading and loading the MNIST dataset is as easy as calling the mnist.load_data() function. Running the above example, you should see the image below.

Examples from the MNIST dataset

Baseline Model with Multi-Layer Perceptrons

Do you really need a complex model like a convolutional neural network to get the best results with MNIST?

You can get very good results using a very simple neural network model with a single hidden layer. In this section, you will create a simple multi-layer perceptron model that achieves an error rate of about 2.3%. You will use this as a baseline for comparing more complex convolutional neural network models.

Let’s start by importing the classes and functions you will need.

```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.utils import to_categorical
...
```

Now, you can load the MNIST dataset using the Keras helper function.

```python
...
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
```

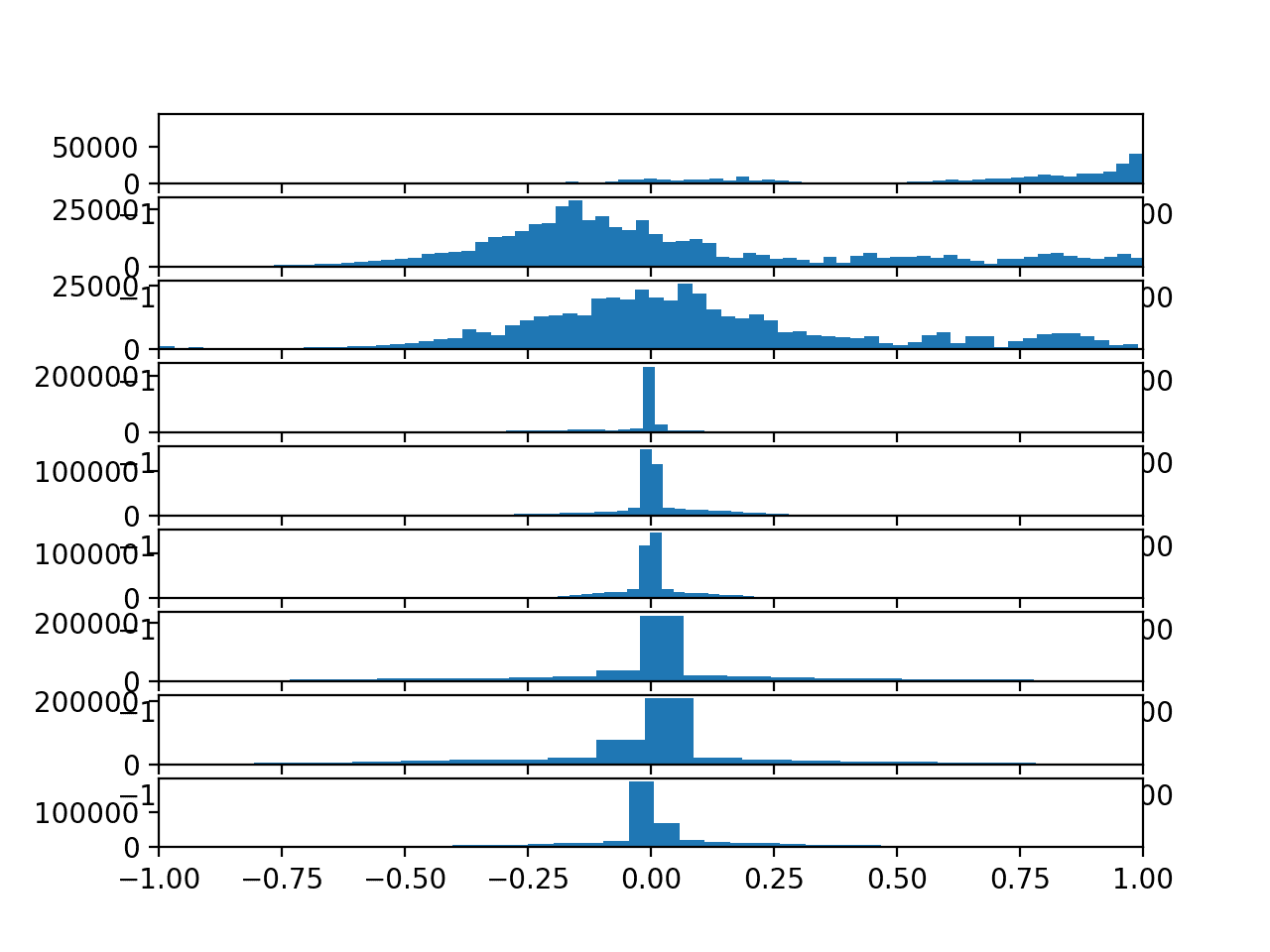

The training dataset is structured as a 3-dimensional array of instances, image width, and image height. For a multi-layer perceptron model, you must reduce each image down into a vector of pixels. In this case, each 28×28 image becomes 784 pixel input values.

You can do this transform easily using the reshape() function on the NumPy array. The pixel values are loaded as 8-bit integers, and dividing them later would otherwise promote them to 64-bit floats. Casting to 32-bit floats first, the default precision used by Keras anyway, keeps memory requirements down.

```python
...
# flatten 28*28 images to a 784 vector for each image
num_pixels = X_train.shape[1] * X_train.shape[2]
X_train = X_train.reshape((X_train.shape[0], num_pixels)).astype('float32')
X_test = X_test.reshape((X_test.shape[0], num_pixels)).astype('float32')
```
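To see what the flattening and the cast do without downloading the dataset, here is a minimal sketch on a stand-in array. The random `images` array is an assumption made for illustration; only its shape and dtype mimic the MNIST arrays.

```python
import numpy as np

# Stand-in for a small batch of MNIST-like images: 5 images of 28x28 uint8 pixels.
# (Random values are used here so the sketch runs without downloading the dataset.)
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Flatten each 28x28 image into a 784-element vector and cast to float32.
num_pixels = images.shape[1] * images.shape[2]
flat = images.reshape((images.shape[0], num_pixels)).astype('float32')

print(flat.shape)   # (5, 784)
print(flat.dtype)   # float32

# Dividing the original uint8 array directly promotes it to float64,
# which takes twice the memory of the float32 version.
scaled64 = images / 255
scaled32 = flat / 255
print(scaled64.dtype, scaled64.nbytes)
print(scaled32.dtype, scaled32.nbytes)
```

The same comparison on the full 60,000-image training set is where the factor of two in memory becomes noticeable.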

The pixel values are grayscale between 0 and 255. It is almost always a good idea to perform some scaling of input values when using neural network models. Because the scale is well known and well behaved, you can very quickly normalize the pixel values to the range 0 to 1 by dividing each value by the maximum of 255.

```python
...
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
```

Finally, the output variable is an integer from 0 to 9. This is a multi-class classification problem. As such, it is good practice to use a one-hot encoding of the class values, transforming the vector of class integers into a binary matrix.

You can easily do this using the built-in tf.keras.utils.to_categorical() helper function in Keras.

```python
...
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
```
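To make the encoding concrete, the following sketch builds the same kind of binary matrix by hand with NumPy. The `one_hot` helper is hypothetical and written only for illustration; it is not part of Keras, and it may differ from to_categorical() in details such as the output dtype.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) matrix with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(num_classes, dtype='float32')[labels]

y = [5, 0, 4, 1, 9]  # example integer class labels
encoded = one_hot(y, num_classes=10)

print(encoded.shape)  # (5, 10)
print(encoded[0])     # 1.0 at index 5, zeros elsewhere
```

Taking the argmax of each row recovers the original integer label, which is also how class predictions are read from the model's softmax output.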

You are now ready to create your simple neural network model. You will define your model in a function. This is handy if you want to extend the example later and try and get a better score.

```python
...
# define baseline model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(num_pixels, input_shape=(num_pixels,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(num_classes, kernel_initializer='normal', activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

The model is a simple neural network with one hidden layer with the same number of neurons as there are inputs (784). A rectifier activation function is used for the neurons in the hidden layer.

A softmax activation function is used on the output layer to turn the outputs into probability-like values and allow one class of the ten to be selected as the model’s output prediction. Logarithmic loss is used as the loss function (called categorical_crossentropy in Keras), and the efficient ADAM gradient descent algorithm is used to learn the weights.
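For intuition about what softmax and logarithmic loss compute for a single image, here is a minimal pure-Python sketch. The raw scores and the true class are invented for the example, and the two helper functions are simplified stand-ins rather than the Keras implementations.

```python
import math

def softmax(scores):
    """Convert raw scores into values that are positive and sum to 1."""
    shifted = [s - max(scores) for s in scores]  # subtract max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_crossentropy(one_hot_target, probabilities):
    """Logarithmic loss for a single example with a one-hot target."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_target, probabilities) if t > 0)

# Hypothetical raw outputs for one image across the ten digit classes.
scores = [1.0, 0.5, 0.2, 3.0, 0.1, 0.0, -1.0, 0.3, 0.4, 0.2]
probs = softmax(scores)
predicted_class = probs.index(max(probs))

target = [0] * 10
target[3] = 1  # suppose the true digit is 3

print("Predicted class:", predicted_class)
print("Loss: %.4f" % categorical_crossentropy(target, probs))
```

The loss is the negative log of the probability assigned to the true class, so it shrinks toward zero as the model becomes more confident in the correct digit.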

You can now fit and evaluate the model. The model is fit over ten epochs with updates every 200 images. The test data is used as the validation dataset, allowing you to see the skill of the model as it trains. A verbose value of 2 is used to reduce the output to one line for each training epoch.

Finally, the test dataset is used to evaluate the model, and a classification error rate is printed.

```python
...
# build the model
model = baseline_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200, verbose=2)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Baseline Error: %.2f%%" % (100-scores[1]*100))
```
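As a quick check on what a batch size of 200 means in practice, this short sketch works out how many weight updates happen per epoch on the standard 60,000-image training split. The variable names are chosen for illustration.

```python
import math

num_train_images = 60000  # size of the standard MNIST training split
batch_size = 200          # value passed to model.fit() above
epochs = 10

# One weight update is made per batch, so round up to cover any partial batch.
steps_per_epoch = math.ceil(num_train_images / batch_size)
total_updates = steps_per_epoch * epochs

print("Weight updates per epoch:", steps_per_epoch)  # 300
print("Weight updates in total:", total_updates)     # 3000
```

This is the 300 that appears as `300/300` on each epoch line of the training output.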

After tying this all together, the complete code listing is provided below.

```python
# Baseline MLP for MNIST dataset
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.utils import to_categorical
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# flatten 28*28 images to a 784 vector for each image
num_pixels = X_train.shape[1] * X_train.shape[2]
X_train = X_train.reshape((X_train.shape[0], num_pixels)).astype('float32')
X_test = X_test.reshape((X_test.shape[0], num_pixels)).astype('float32')
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
# define baseline model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(num_pixels, input_shape=(num_pixels,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(num_classes, kernel_initializer='normal', activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
# build the model
model = baseline_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200, verbose=2)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Baseline Error: %.2f%%" % (100-scores[1]*100))
```

Running the example might take a few minutes when you run it on a CPU.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

You should see the output below. This very simple network defined in very few lines of code achieves a respectable error rate of 2.3%.

```
Epoch 1/10
300/300 - 1s - loss: 0.2792 - accuracy: 0.9215 - val_loss: 0.1387 - val_accuracy: 0.9590 - 1s/epoch - 4ms/step
Epoch 2/10
300/300 - 1s - loss: 0.1113 - accuracy: 0.9676 - val_loss: 0.0923 - val_accuracy: 0.9709 - 929ms/epoch - 3ms/step
Epoch 3/10
300/300 - 1s - loss: 0.0704 - accuracy: 0.9799 - val_loss: 0.0728 - val_accuracy: 0.9787 - 912ms/epoch - 3ms/step
Epoch 4/10
300/300 - 1s - loss: 0.0502 - accuracy: 0.9859 - val_loss: 0.0664 - val_accuracy: 0.9808 - 904ms/epoch - 3ms/step
Epoch 5/10
300/300 - 1s - loss: 0.0356 - accuracy: 0.9897 - val_loss: 0.0636 - val_accuracy: 0.9803 - 905ms/epoch - 3ms/step
Epoch 6/10
300/300 - 1s - loss: 0.0261 - accuracy: 0.9932 - val_loss: 0.0591 - val_accuracy: 0.9813 - 907ms/epoch - 3ms/step
Epoch 7/10
300/300 - 1s - loss: 0.0195 - accuracy: 0.9953 - val_loss: 0.0564 - val_accuracy: 0.9828 - 910ms/epoch - 3ms/step
Epoch 8/10
300/300 - 1s - loss: 0.0145 - accuracy: 0.9969 - val_loss: 0.0580 - val_accuracy: 0.9810 - 954ms/epoch - 3ms/step
Epoch 9/10
300/300 - 1s - loss: 0.0116 - accuracy: 0.9973 - val_loss: 0.0594 - val_accuracy: 0.9817 - 947ms/epoch - 3ms/step
Epoch 10/10
300/300 - 1s - loss: 0.0079 - accuracy: 0.9985 - val_loss: 0.0735 - val_accuracy: 0.9770 - 914ms/epoch - 3ms/step
Baseline Error: 2.30%
```

Simple Convolutional Neural Network for MNIST

Now that you have seen how to load the MNIST dataset and train a simple multi-layer perceptron model on it, it is time to develop a more sophisticated convolutional neural network or CNN model.

Keras provides many capabilities for creating convolutional neural networks.

In this section, you will create a simple CNN for MNIST that demonstrates how to use all the aspects of a modern CNN implementation, including Convolutional layers, Pooling layers, and Dropout layers.

The first step is to import the classes and functions needed.

```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical
...
```

Next, you need to load the MNIST dataset and reshape it to be suitable for training a CNN. In Keras, the layers used for two-dimensional convolutions expect pixel values with the dimensions [samples][width][height][channels].

Note that you are forcing so-called channels-last ordering for consistency in this example.

In the case of RGB, the last dimension, channels, would be 3 for the red, green, and blue components, and it would be like having three image inputs for every color image. In the case of MNIST, where the pixel values are grayscale, the channels dimension is set to 1.

```python
...
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][width][height][channels]
X_train = X_train.reshape(X_train.shape[0], 28, 28, 1).astype('float32')
X_test = X_test.reshape(X_test.shape[0], 28, 28, 1).astype('float32')
```
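The effect of the channels-last reshape can be checked on a small stand-in array. In this sketch the arrays are filled with zeros purely for illustration; only the shapes matter.

```python
import numpy as np

# Stand-in for grayscale images shaped [samples][width][height] (zeros, not real data).
gray = np.zeros((4, 28, 28), dtype='float32')

# Add a trailing channels axis of size 1 for grayscale input.
gray_channels_last = gray.reshape((gray.shape[0], 28, 28, 1))
print(gray_channels_last.shape)  # (4, 28, 28, 1)

# For comparison, a batch of RGB images already carries 3 channels in the last axis.
rgb = np.zeros((4, 28, 28, 3), dtype='float32')
print(rgb.shape)  # (4, 28, 28, 3)

# The reshape only adds an axis; the pixel values themselves are unchanged.
print(np.array_equal(gray, gray_channels_last[..., 0]))
```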

As before, it is a good idea to normalize the pixel values to the range 0 to 1 and one-hot encode the output variables.

```python
...
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
```

Next, define your neural network model.

Convolutional neural networks are more complex than standard multi-layer perceptrons, so you will start by using a simple structure that uses all the elements for state-of-the-art results. The network architecture is summarized below.

- The first hidden layer is a convolutional layer called Conv2D. The layer has 32 feature maps, each of size 5×5, and a rectifier activation function. This is the input layer, which expects images with the structure outlined above: [samples][width][height][channels].

- Next, define a pooling layer that takes the max called MaxPooling2D. It is configured with a pool size of 2×2.

- The next layer is a regularization layer using dropout called Dropout. It is configured to randomly exclude 20% of neurons in the layer in order to reduce overfitting.

- Next is a layer that converts the 2D matrix data to a vector called Flatten. It allows the output to be processed by standard, fully connected layers.

- Next is a fully connected layer with 128 neurons and a rectifier activation function.

- Finally, the output layer has ten neurons for the ten classes and a softmax activation function to output probability-like predictions for each class.
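The layer list above can be traced by hand to see how the image shrinks on its way to the fully connected layers. The sketch below assumes the Keras defaults for these layers (stride-1 convolution without padding, and non-overlapping pooling); the helper functions are written for this illustration only.

```python
def conv_output_size(size, kernel):
    """Output width/height of a convolution with stride 1 and no padding."""
    return size - kernel + 1

def pool_output_size(size, pool):
    """Output width/height of non-overlapping max pooling."""
    return size // pool

side = 28
side = conv_output_size(side, 5)   # 5x5 convolution -> 24
conv_shape = (side, side, 32)      # 32 feature maps
side = pool_output_size(side, 2)   # 2x2 max pooling -> 12
pool_shape = (side, side, 32)
flat_size = side * side * 32       # Flatten -> 4608 values (Dropout keeps the shape)

print("After convolution:", conv_shape)
print("After pooling:", pool_shape)
print("After flatten:", flat_size)
```

So the 128-neuron fully connected layer receives a 4,608-element vector per image, which is where most of this model's weights sit.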

As before, the model is trained using logarithmic loss and the ADAM gradient descent algorithm.

```python
...
def baseline_model():
    # create model
    model = Sequential()
    model.add(Conv2D(32, (5, 5), input_shape=(28, 28, 1), activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

You evaluate the model the same way as before with the multi-layer perceptron. The CNN is fit over ten epochs with a batch size of 200.

```python
...
# build the model
model = baseline_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200, verbose=2)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("CNN Error: %.2f%%" % (100-scores[1]*100))
```

After tying this all together, the complete example is listed below.

```python
# Simple CNN for the MNIST Dataset
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][width][height][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1)).astype('float32')
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1)).astype('float32')
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
# define a simple CNN model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Conv2D(32, (5, 5), input_shape=(28, 28, 1), activation='relu'))
    model.add(MaxPooling2D())
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
# build the model
model = baseline_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("CNN Error: %.2f%%" % (100-scores[1]*100))
```

After running the example, the accuracy on the training and validation datasets is printed for each epoch, and at the end, the classification error rate is printed.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Training time depends on your hardware; in the run shown below, each epoch took about 4 seconds. You can see that the network achieves an error rate of 1.19%, which is better than the simple multi-layer perceptron model above.

```
Epoch 1/10
300/300 [==============================] - 4s 12ms/step - loss: 0.2372 - accuracy: 0.9344 - val_loss: 0.0715 - val_accuracy: 0.9787
Epoch 2/10
300/300 [==============================] - 4s 13ms/step - loss: 0.0697 - accuracy: 0.9786 - val_loss: 0.0461 - val_accuracy: 0.9858
Epoch 3/10
300/300 [==============================] - 4s 13ms/step - loss: 0.0483 - accuracy: 0.9854 - val_loss: 0.0392 - val_accuracy: 0.9867
Epoch 4/10
300/300 [==============================] - 4s 13ms/step - loss: 0.0366 - accuracy: 0.9887 - val_loss: 0.0357 - val_accuracy: 0.9889
Epoch 5/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0300 - accuracy: 0.9909 - val_loss: 0.0360 - val_accuracy: 0.9873
Epoch 6/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0241 - accuracy: 0.9927 - val_loss: 0.0325 - val_accuracy: 0.9890
Epoch 7/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0210 - accuracy: 0.9932 - val_loss: 0.0314 - val_accuracy: 0.9898
Epoch 8/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0167 - accuracy: 0.9945 - val_loss: 0.0306 - val_accuracy: 0.9898
Epoch 9/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0142 - accuracy: 0.9956 - val_loss: 0.0326 - val_accuracy: 0.9892
Epoch 10/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0114 - accuracy: 0.9966 - val_loss: 0.0322 - val_accuracy: 0.9881
CNN Error: 1.19%
```

Larger Convolutional Neural Network for MNIST

Now that you have seen how to create a simple CNN, let’s take a look at a model capable of close to state-of-the-art results.

You will import the classes and functions, then load and prepare the data the same as in the previous CNN example.

```python
# Larger CNN for the MNIST Dataset
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][width][height][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1)).astype('float32')
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1)).astype('float32')
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
...
```

This time you will define a larger CNN architecture with additional convolutional, max pooling, and fully connected layers. The network topology can be summarized as follows:

- Convolutional layer with 30 feature maps of size 5×5

- Pooling layer taking the max over 2×2 patches

- Convolutional layer with 15 feature maps of size 3×3

- Pooling layer taking the max over 2×2 patches

- Dropout layer with a probability of 20%

- Flatten layer

- Fully connected layer with 128 neurons and rectifier activation

- Fully connected layer with 50 neurons and rectifier activation

- Output layer
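To get a feel for the size of this topology, the following sketch counts the trainable parameters layer by layer. It assumes stride-1 unpadded convolutions and non-overlapping 2×2 pooling (the Keras defaults), and the helper functions are hypothetical names used only here.

```python
def conv_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel convolution."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """Weights plus one bias per unit for a fully connected layer."""
    return inputs * units + units

# Spatial sizes, assuming stride-1 unpadded convolutions and 2x2 pooling:
# 28 -> 24 (5x5 conv) -> 12 (pool) -> 10 (3x3 conv) -> 5 (pool)
flat_size = 5 * 5 * 15  # 375 values after Flatten

layer_params = {
    "conv 5x5, 30 maps": conv_params(5, 1, 30),
    "conv 3x3, 15 maps": conv_params(3, 30, 15),
    "dense 128": dense_params(flat_size, 128),
    "dense 50": dense_params(128, 50),
    "output 10": dense_params(50, 10),
}

for name, count in layer_params.items():
    print("%-18s %6d" % (name, count))
print("total              %6d" % sum(layer_params.values()))
```

Pooling, dropout, and flatten layers have no weights, so they do not appear in the count. The second pooling step shrinks the flattened vector to 375 values, which keeps the first fully connected layer small despite the extra convolutional layer.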

```python
...
# define the larger model
def larger_model():
    # create model
    model = Sequential()
    model.add(Conv2D(30, (5, 5), input_shape=(28, 28, 1), activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(15, (3, 3), activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(50, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

Like the previous two experiments, the model is fit over ten epochs with a batch size of 200.

```python
...
# build the model
model = larger_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Large CNN Error: %.2f%%" % (100-scores[1]*100))
```

After tying this all together, the complete example is listed below.

```python
# Larger CNN for the MNIST Dataset
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical
# load data
(X_train, y_train), (X_test, y_test) = mnist.load_data()
# reshape to be [samples][width][height][channels]
X_train = X_train.reshape((X_train.shape[0], 28, 28, 1)).astype('float32')
X_test = X_test.reshape((X_test.shape[0], 28, 28, 1)).astype('float32')
# normalize inputs from 0-255 to 0-1
X_train = X_train / 255
X_test = X_test / 255
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
# define the larger model
def larger_model():
    # create model
    model = Sequential()
    model.add(Conv2D(30, (5, 5), input_shape=(28, 28, 1), activation='relu'))
    model.add(MaxPooling2D())
    model.add(Conv2D(15, (3, 3), activation='relu'))
    model.add(MaxPooling2D())
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(50, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    # Compile model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
# build the model
model = larger_model()
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Large CNN Error: %.2f%%" % (100-scores[1]*100))
```

Running the example prints accuracy on the training and validation datasets of each epoch and a final classification error rate.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

In the run shown below, each epoch took about 4 to 5 seconds. This slightly larger model achieves a respectable classification error rate of 0.90%.

```
Epoch 1/10
300/300 [==============================] - 4s 14ms/step - loss: 0.4104 - accuracy: 0.8727 - val_loss: 0.0870 - val_accuracy: 0.9732
Epoch 2/10
300/300 [==============================] - 5s 15ms/step - loss: 0.1062 - accuracy: 0.9669 - val_loss: 0.0601 - val_accuracy: 0.9804
Epoch 3/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0771 - accuracy: 0.9765 - val_loss: 0.0555 - val_accuracy: 0.9803
Epoch 4/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0624 - accuracy: 0.9812 - val_loss: 0.0393 - val_accuracy: 0.9878
Epoch 5/10
300/300 [==============================] - 4s 15ms/step - loss: 0.0521 - accuracy: 0.9838 - val_loss: 0.0333 - val_accuracy: 0.9892
Epoch 6/10
300/300 [==============================] - 4s 15ms/step - loss: 0.0453 - accuracy: 0.9861 - val_loss: 0.0280 - val_accuracy: 0.9907
Epoch 7/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0415 - accuracy: 0.9866 - val_loss: 0.0322 - val_accuracy: 0.9905
Epoch 8/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0376 - accuracy: 0.9879 - val_loss: 0.0288 - val_accuracy: 0.9906
Epoch 9/10
300/300 [==============================] - 4s 14ms/step - loss: 0.0327 - accuracy: 0.9895 - val_loss: 0.0245 - val_accuracy: 0.9925
Epoch 10/10
300/300 [==============================] - 4s 15ms/step - loss: 0.0294 - accuracy: 0.9904 - val_loss: 0.0279 - val_accuracy: 0.9910
Large CNN Error: 0.90%
```

This is not an optimized network topology. Nor is it a reproduction of a network topology from a recent paper. There is a lot of opportunity for you to tune and improve upon this model.

What is the best error rate score you can achieve?

Post your configuration and best score in the comments.

Resources on MNIST

The MNIST dataset is very well studied. Below are some additional resources you might want to look into.

- The Official MNIST dataset webpage

- Rodrigo Benenson’s webpage that lists state-of-the-art results

- Kaggle competition that uses this dataset (check the scripts and forum sections for sample code)

- Read-only model trained on MNIST that you can test in your browser (very cool)

Summary

In this post, you discovered the MNIST handwritten digit recognition problem and deep learning models developed in Python using the Keras library that are capable of achieving excellent results.

Working through this tutorial, you learned:

- How to load the MNIST dataset in Keras and generate plots of the dataset

- How to reshape the MNIST dataset and develop a simple but well-performing multi-layer perceptron model on the problem

- How to use Keras to create convolutional neural network models for MNIST

- How to develop and evaluate larger CNN models for MNIST capable of near world-class results

Do you have any questions about handwriting recognition with deep learning or this post? Ask your question in the comments, and I will do my best to answer.

Thanks for this tutorial. It was great. Though(it might sound silly) how do I see it in action? I mean if I wanna see it predict an answer for an image how do I do that?

Thanks again.

In its current form it is not a robust system.

You will have to provide a digit image with the same dimensions.
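A minimal sketch of what "the same dimensions" involves for the CNN examples: the image must be 28×28 grayscale, scaled to 0-1, and wrapped in batch and channel axes. The `prepare_digit_image` helper and the synthetic input are hypothetical, and the commented-out lines assume a trained `model` from the tutorial.

```python
import numpy as np

def prepare_digit_image(image):
    """Shape and scale one 28x28 grayscale image the way the CNN examples expect."""
    image = np.asarray(image)
    if image.shape != (28, 28):
        raise ValueError("expected a 28x28 image, got shape %s" % (image.shape,))
    # Scale 0-255 pixel values to 0-1 and add batch and channel axes.
    return (image.astype('float32') / 255).reshape((1, 28, 28, 1))

# Hypothetical input: a blank image with a vertical stroke, standing in for a real scan.
new_image = np.zeros((28, 28), dtype=np.uint8)
new_image[4:24, 13:15] = 255

batch = prepare_digit_image(new_image)
print(batch.shape, batch.min(), batch.max())

# With a trained CNN from the tutorial, the predicted digit would then be:
# probabilities = model.predict(batch)
# digit = int(np.argmax(probabilities, axis=1)[0])
```

MNIST digits are light strokes on a dark background, so a dark-on-light scan would likely also need its pixel values inverted before this step.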

great work!!

but can you show that in action with a sample image

sir please help me !!

pip install tensorflow

ERROR: Could not find a version that satisfies the requirement tensorflow (from versions: none)

ERROR: No matching distribution found for tensorflow

py ver is 3.8.2

win 10

i am on it from one month but didnt got the perfect solution i feel you can help me out from this

I recommend this tutorial:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

How to predict an answer for an new image: https://blog.luisfred.com.br/reconhecimento-de-escrita-manual-com-redes-neurais-convolucionais/

use model.predict()

What is front end and back end used in this please tell me

Keras front end, tensorflow backend.

This URL is off-line now. It was changed to https://medium.com/luisfredgs/reconhecimento-de-escrita-manual-com-redes-neurais-convolucionais-6fca996af39e

Hello , i am also working on this Project and i choose this for my final year project of software engineering so i want help from u to understand it more better.

miansahilawais@gmail.com

Do you have a working program which recogniting the numbers ?

Just the examples in this tutorial Adrian.

did you get working program?

When I try the baseline model with MLPs I get much worse performance than what you are showing (an error rate of 53.64%). Any idea why I could be seeing such vastly different results when I’m using the same code? Thanks.

Hi Matthew, that is surprising that the numbers are so different.

Theano backend? or TensorFlow? What Platform? What version of Python?

Try running the example 3 times and report all 3 scores.

I have the same problem. I using Theano backend. platform: Pycharm. version 3.5

Sorry to hear that Adrian.

Does it work if you run on the command line?

I am also having the same issue of getting a very high error rate: 51.08%, 43.26% and 52.01%. But then I found that I forgot to normalize the pixels values from 0-255 to 0-1. After correcting now I got 1.93% baseline error.

Can you please explain this effect of normalization?

Yes, I have a huge post on the topic here:

https://machinelearningmastery.com/how-to-improve-neural-network-stability-and-modeling-performance-with-data-scaling/

Thanks!

To get an error rate that high, the code must have been copied incorrectly or something similar. Beyond that, do notice that each time you run this, the final output will be slightly different each time because of the Dropout layer in the neural network. It will randomly choose that 20% each time it runs thereby slightly affecting the final outcome.

yes.but the resulting accuracy varied even though i removed the dropout layer. any thoughts?. the accuracy shouldn’t change right?

You will get different accuracy each time you run the code. See this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

wow! I realize that now. thank you

Could you please give a simple example of a CNN, for example on a dataset from the UCI repository? Is it possible to apply a CNN to numeric features?

Sorry, I don’t have such an example.

Hello Jason, I tried running the script, but the baseline model is taking too much time. It has been running for the past 20 hours and is still on the 4th epoch. Can you please suggest some way to speed up the process? I am using a computer with 4 GB of RAM, running Keras with the Theano backend on Anaconda.

Sorry to hear that Dinesh.

Perhaps try training on AWS:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

Hi Jason,

What is the configuration of the machine that you used to run the model? Did you use a GPU to improve performance? How much time did it take for you?

Also AWS is a paid platform, is there any free platform for running ML algorithms?

Thanks

I used an 8-core machine with 8GB of RAM. From memory, it completed in a reasonable time.

AWS is very reasonably priced, I think less than $1 USD per hour. Great for one-off models like this.

Ran to 10 epochs in about 6 minutes for me, also 4gb of RAM. Check your code?

Nice work!

Hi! Great post! I tried it, but the first CNN does not seem to compile. I got:

ValueError: Filter must not be larger than the input: Filter: (5, 5) Input: (1, 28)

just after model = baseline_model()

I have updated the examples, try again!

Hi Jason, I tried it, but I got the error below. I use TensorFlow r0.11. I’m not sure whether that is the cause.

Using TensorFlow backend.

Traceback (most recent call last):

File “/Users/Jack/.pyenv/versions/3.5.1/lib/python3.5/site-packages/tensorflow/python/framework/common_shapes.py”, line 594, in call_cpp_shape_fn

status)

File “/Users/Jack/.pyenv/versions/3.5.1/lib/python3.5/contextlib.py”, line 66, in __exit__

next(self.gen)

File “/Users/Jack/.pyenv/versions/3.5.1/lib/python3.5/site-packages/tensorflow/python/framework/errors.py”, line 463, in raise_exception_on_not_ok_status

pywrap_tensorflow.TF_GetCode(status))

tensorflow.python.framework.errors.InvalidArgumentError: Negative dimension size caused by subtracting 5 from 1

Ouch Jack, that does not look good.

It looks like the API has changed. I’ll dive into it and fix up the examples.

OK, I have updated the examples.

Firstly, I recommend using TensorFlow 0.10.0, NOT 0.11 as there are issues with the latest version.

Secondly, You must add the following two lines to make the CNNs work:

Fix taken from here: https://github.com/fchollet/keras/issues/2681

I hope that helps Jack.

Hi Jason.

Thanks for the great tutorial.

Your comment has not solved the problem yet, and we still get the same error. Could you please modify your model to work with the TF backend?

Keras has changed its input format. Instead of [pixel, width, height] it is now [width, height, pixel].

Change input_shape to (28, 28, 1) in Conv2D and in the reshape call.

Use the parameter data_format='channels_first' in the input Conv2D layer:

model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', data_format='channels_first', input_shape=(1, 28, 28)))

or change the default Keras configuration

in ~/.keras/keras.json

from "image_data_format": "channels_last" => "image_data_format": "channels_first"

thanks

thanks.it solved my problem.

Glad to hear it.
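The two layouts discussed in this thread differ only in where the channel axis sits. A minimal NumPy sketch, using a zero-filled stand-in array rather than the real dataset:

```python
import numpy as np

# Stand-in for grayscale MNIST images as loaded: (samples, 28, 28).
X = np.zeros((5, 28, 28), dtype="float32")

# channels_last: the single channel is the final axis.
X_last = X.reshape(X.shape[0], 28, 28, 1)

# channels_first: the single channel comes right after the sample axis.
X_first = X.reshape(X.shape[0], 1, 28, 28)

print(X_last.shape, X_first.shape)
```

The `input_shape` given to the first `Conv2D` layer has to match whichever layout the data uses: `(28, 28, 1)` for the first case, `(1, 28, 28)` for the second.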

Hi, thanks for the great tutorial !

I tried predicting with a test set and got the one-hot encoded predictions. I was just wondering if there’s a built-in function to convert it back to original labels (0,1,2,3…).

Great question Abhai.

If you use scikit-learn to perform the one hot encoding, it offers an inverse transform to turn the encoded prediction back into the original values.

http://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.OneHotEncoder.html

That would be my preference as a starting point.

Hello,

Thank you very much for your usual brief and comprehensive illustration and discussion.

I’m glad you found the post useful Berisha.

Hello,

After finishing learning, how can I recognize my own pictures with this network.

Great question gs, I don’t have an example at the moment.

You will need to encode your own pictures in the same way as the MNIST dataset – mainly rescale to the same size. Then load them as a matrix of pixel values and you can make predictions.

Jason, can you explain more about this, please?

Thanks for the suggestion, I hope to cover it in the future.

Hello,

Thanks for the great example, but how do I save the state of the net?

I mean, the net learns on 60000 examples, then it is tested by trying to guess 10000.

But if I want to use it every day, for example, how can I use it without training it every day?

Great question, see this post for a tutorial on saving your net:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

Jason, does your book explain “WHY” you chose the various layers you did in this tutorial and shed light on how and why to choose certain designs for different data sets?

No, just the how John.

Why is hard; in most cases the best results are achieved with trial and error. There is no “theory of neural networks” that helps you configure them.

hello

When I try to make a prediction for my own image, the net gets it wrong.

This is depressing me.

I use the command model.predict_classes(img)

Please, is there a way to get the correct answer for my handwritten digit?

Perhaps you need more and different training examples Nassim?

Perhaps some image augmentation can make your model more robust?

Great tutorial Jason, in fact you are the best, very easy to follow, I enjoy all your tutorials, thank you! In fact,

I achieved an error rate of 0.74 at one point using GPU and it took about 30sec to run.

Well done Anthony!

Thanks for the great tutorial. Just one thing I didn’t understand. In the Convolution2D layer, there is a border_mode=”valid” parameter. What does this do? What’s its purpose? The Keras documentation doesn’t seem to have an explanation for it either.

Excellent tutorial Jason. I really enjoyed reading it and implementing it. I just figured out that if you have cuDNN installed it makes things way faster (at least for the toy examples I’ve tried). I recommend anyone reading this to install cuDNN and configure Theano to use it. You just have to put

[dnn]

enabled = True

in theanorc file.

Nice, thanks for the tip Sanjaya.

See this post for how to run on AWS with GPUs if you do not have the hardware locally:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

Hi Jason,

I am trying to apply the CONVOLUTION1D for the IRIS Data.

The code is as below

—————————————————————————————–

max_features = 150

maxlen = 4

batch_size = 16

embedding_dims = 3

nb_epoch = 3

nb_classes =3

dropoutVal = 0.5

nb_filter = 5

hidden_dims = 500

filter_length = 4

import pandas as pd

data_load = pd.read_csv("iris.csv")

data = data_load.ix[:,0:4]

target = data_load.ix[:,4]

X_train = np.array(data[:100].values.astype('float32'))

Y_train = np.array(target[:100])

Y_train = np_utils.to_categorical(Y_train,nb_classes)

X_test = np.array(data[100:].values.astype('float32'))

Y_test = np.array(target[100:])

Y_test = np_utils.to_categorical(Y_test,nb_classes)

std = StandardScaler()

X_train = X_train_scaled = std.fit_transform(X_train)

X_test = X_test_scaled = std.transform(X_test)

X_train1 = sequence.pad_sequences(X_train_scaled,maxlen=maxlen)

X_test1 = sequence.pad_sequences(X_test_scaled,maxlen=maxlen)

model = Sequential()

model.add(Embedding(max_features,embedding_dims,input_length=maxlen))

model.add(Convolution1D(nb_filter=nb_filter,filter_length=filter_length, border_mode='valid',activation='relu'))

model.add(GlobalMaxPooling1D())

model.add(Dense(hidden_dims,activation='softmax'))

model.add(Dense(nb_classes))

model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(X_train1, Y_train, nb_epoch=5, batch_size=10)

scores = model.evaluate(X_test1, Y_test, verbose=0)

predictions = model.predict(X_test1)

—————————————————————————————-

I want to check if I am in the right direction on this.

I am not getting an accuracy of more than 66%, which is quite surprising.

Am I using the Embedding layer correctly? When I look at the embedding layer weights, I see a difference between the layer parameters I set and the weights I retrieve.

Please advise.

Regards

Ganesh

I would recommend using an MLP rather than a CNN for the iris flowers dataset.

See this post:

https://machinelearningmastery.com/multi-class-classification-tutorial-keras-deep-learning-library/

Hi Jason

Thank you so much for your great tutorial.

By the way, can you explain why an MLP is better than a CNN for the iris flowers dataset? Thanks a lot.

Best wishes,

Lua

Because the data is tabular (e.g. measurements of flowers), not images (e.g. photos).

If the data was photos, then a CNN would be the method of choice.

Thank you very much for this post. (:

You’re welcome Ger.

Can you tell me how I can give the system an image and have it tell me what number it is? Sorry, I am new to this. Thank you!

Hi Remon,

The image will have to be scaled to the same dimensions as those expected by the network.

Also, in this example, the network expects images to have a specific set of proportions and to be white digits on a black background. New examples will have to be prepared in the same way.

how will we prepare that?

Google tutorials on Python “Image”, for example:

http://effbot.org/imagingbook/introduction.htm
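The preparation steps mentioned in this thread (same dimensions, white digit on a black background) can be sketched with NumPy alone. The example below assumes the image has already been loaded and resized to a 28×28 grayscale array; the `img` array is a hypothetical stand-in for a scanned digit, and the final scaling to 0-1 mirrors the normalization used in the tutorial:

```python
import numpy as np

# Hypothetical 28x28 grayscale scan: white paper (255) with a dark stroke (0).
img = np.full((28, 28), 255, dtype=np.uint8)
img[4:24, 13:15] = 0  # a crude vertical stroke, roughly like the digit 1

# Invert so the digit is white on a black background, as in MNIST.
img = 255 - img

# Scale to 0-1, flatten, and add a batch axis for the baseline MLP input.
x_mlp = img.astype("float32").reshape(1, 28 * 28) / 255.0

print(x_mlp.shape, x_mlp.min(), x_mlp.max())
```

For the CNN examples the same array would be reshaped to include a channel axis instead of being flattened.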

Hi jason,

snippet of your code:

————————-

in the step # load data

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# reshape to be [samples][pixels][width][height]

X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')

X_test = X_test.reshape(X_test.shape[0], 1, 28, 28).astype('float32')

You are using MNIST data. What kind of data structure is it?

How do I preprocess images (in a list) and labels (in a list) into this structure and feed it to a Keras model?

What exactly does this line

X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')

do?

thanks

joseph

Hi Joe,

The MNIST data is available within Keras.

It is stored as NumPy arrays of pixel data.

When used with a CNN, the data is reshaped into the format: [samples, pixels, width, height]

I hope that helps.

Hi Jason, what led you to choose 128 neurons for the fully-connected layer? (Calculating the number of activations leading into the fully-connected layer, it’s much larger than 128) Thanks!

Trial and error Amy.

Thank you!

Hi @Jason Brownlee, first things first: awesome tutorial you’ve got there!

There’s a small problem at the following lines:

Small CNN:

# build the model

model = baseline_model()

Large CNN:

# build the model

model = larger_model()

Using recent versions of TensorFlow throws an AttributeError:

=> AttributeError: module 'tensorflow.python' has no attribute 'control_flow_ops' <=

solution:

Add following lines:

import tensorflow as tf

tf.python.control_flow_ops = tf

ref:

https://github.com/fchollet/keras/issues/3857

Can you please update the code?

Once again, thanks for the great tutorial.

Keep up the good work!

Thanks for the note Shaik, I’ll investigate.

your posts are great for an awesome start with keras. m loving it sir.

Thanks Mouz.

Hello sir, thanks for your great writing and clear explanation. I have tried it and it works. But how can I train the network using my own handwriting dataset instead of the MNIST data?

It would be very helpful if you could shed some light on this.

Thanks in advance.

Hi Faruk,

Generally, you will need to make the data consistent in dimensions as a starting point.

From there, you can separate the data into train/test sets or similar and begin exploring different configurations.

Does that help? Perhaps I misunderstand the question?

Hi

Thanks for your nice explanation. I successfully trained all the networks you introduced here. However, when I want to use the trained model to make some predictions, using this piece of code:

im=misc.imread('test8.png')

im=im[:,:,1]

im=im.flatten()

print(model.predict(im))

it gives me the error:

Error when checking : expected dense_input_1 to have shape (None, 784) but got array with shape (784, 1)

the ‘im’ has the shape (,784) , how can I feed in an array of size (None,784) ?

Hi Arash,

Consider reshaping as follows:
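A minimal sketch of one such reshape, assuming `im` is a flattened array of 784 pixel values (a zero-filled stand-in is used here in place of the loaded image):

```python
import numpy as np

# Stand-in for the flattened image from the question: shape (784,).
im = np.zeros(784, dtype="float32")

# Keras expects a batch axis first, so one image becomes shape (1, 784).
im_batch = im.reshape(1, -1)

print(im_batch.shape)  # (1, 784)
```

The reshaped array can then be passed to `model.predict()` as a batch containing a single image.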

Hi Jason,

Thank you for your wonderful tutorial!

I have a question about ‘model.add(Dropout(0.2))’. As you stated in the post, ‘The next layer is a regularization layer using dropout called Dropout. It is configured to randomly exclude 20% of neurons in the layer in order to reduce overfitting.’ Dropout is treated as a separate layer in Keras, instead of a regularization operation on existing layers such as the convolution layer and fully-connected layer. How is this achieved?

Since this Dropout is between MaxPooling and the next fully-connected layer, which part of the weights is Dropout applied to?

Thank you very much!

Good question. It is applied to the outputs of the layer before it, so it affects the connections between the two layers it is inserted between.

Hi Mr. Jason, is it possible to apply AdaBoost to this handwritten digit recognition problem? May I know what accuracy it achieves?

I have not tried it. I recommend using the sklearn implementation of AdaBoost.

Hello sir, thanks for your clear explanation. I have tried it and it works well. But how can I train the network using my own handwriting dataset instead of the MNIST dataset?

I would be very thankful if you could shed some light on this.

Thanks in advance.

You will need to load the data from file, adjust it so that it all has the same dimensions, then fit your model.

I do not have an example of working with custom data at the moment, sorry.

I tried the simple CNN with the Theano backend.

”’ImportError: (‘The following error happened while compiling the node’, DotModulo(A, s, m, A2, s2, m2), ‘\n’, ‘/home/pramod/.theano/compiledir_Linux-4.8–generic-x86_64-with-debian-stretch-sid-x86_64-2.7.13-64/tmpXpzrkl/d16654b784f584f17fdc481825fd2cca.so: undefined symbol: _ZdlPvm’, ‘[DotModulo(A, s, m, A2, s2, m2)]’)”’

I got this error while running the baseline model.

Can you please tell me how to correct this? I tried multiple ways of installing Theano, including pip and conda.

I’m guessing my Theano installation is faulty.

I’m clueless on how to proceed. Please help.

thank you

I have not seen this error, sorry.

Many of my students have great success using Keras and Theano with Anaconda Python.

Got the verbatim code from above with one change:

X_train = X_train[:-20000 or None]

y_train = y_train[:-20000 or None]

to reduce the memory usage to run on a Mac OSX El Capitan (GeForce 650M with 512MB)

The error rate was a little higher at

1.51%

I used keras with the tensorflow-GPU backend.

Thanks for the note Chris!

Hi Jason,

Really awesome introduction to keras and digit recognition for a beginner like me.

You are using mnist dataset which is in form of pickled object (I guess). But my question is how will you convert set of existing images to this pickled object?

Secondly, you are calculating error rate compared to your test dataset. But suppose I have an image with a number written on it, how will you return class label of it, without making much changes in the above program.

Thanks Vikalp.

I would recommend loading your image data as numpy arrays and working with them directly.

You can make a prediction with the network (y = model.predict(x)) and use the numpy argmax() function to convert the one hot encoded output into a class index.
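The argmax step can be sketched on its own. The array `y` below is a made-up stand-in for what `model.predict(x)` might return for three images:

```python
import numpy as np

# Made-up network outputs for three images over the ten digit classes.
y = np.zeros((3, 10), dtype="float32")
y[0, 7] = 0.9
y[1, 2] = 0.8
y[2, 0] = 0.6

# argmax along the class axis gives one digit label per row.
labels = np.argmax(y, axis=1)

print(labels)  # [7 2 0]
```

The same call works on one-hot encoded targets, which turns them back into integer labels.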

Hi Jason,

Thanks for quick reply.

I was looking into the way you suggested. Following is the code for that:

color_image = cv2.imread("two.jpg")

gray_image = cv2.cvtColor(color_image, cv2.COLOR_BGR2GRAY)

a = model.predict(numpy.array(gray_image))

print(a)

But getting following error:

ValueError: Error when checking : expected dense_1_input to have shape (None, 784) but got array with shape (1024, 791)

I am not sure if I am doing this correctly. Please guide me on this. Thank you.

The loaded image must have the exact same dimensions as the data used to fit the model.

You may need to resize it.

Have you done this successfully? Can you please provide me the code

Hello,

I tried to save and load the model as you describe in another post, but I always get errors like:

ValueError: Error when checking model target: expected dense_3 to have shape (None, 1) but got array with shape (10000, 10)

The error happens at the

score = model.evaluate(…)

line after load

#

# save model and weights

print("Saving model...")

model_json = model.to_json()

with open('mnist_model.json', 'w') as json_file:

    json_file.write(model_json)

model.save_weights("mnist_weights.h5")

print("model saved to disk")

# load model and weights

print("Loading model...")

with open('mnist_model.json') as json_file:

    model_json = json_file.read()

model = model_from_json(model_json)

model.load_weights('mnist_weights.h5')

print("model loaded from disk")

print("compiling model...")

model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

scores = model.evaluate(X_test, y_test, verbose=0)

print("Baseline Error: %.2f%%" % (100-scores[1]*100))

How to get precision and recall and f-measure of predicted output?

You can collect the predictions then use the tools from sklearn:

http://scikit-learn.org/stable/modules/classes.html#module-sklearn.metrics

Hi Jason,

Great site and tutorial. I understand you haven’t been able to describe how to pre-process our own images to be readable in our MNIST trained models, as a lot of other people here have asked. Perfectly understand if you don’t have the time to explain how to do this.

Would you be able to guide me on how I might continue my search to do this though? I’ve tried creating a new 28×28 pixel image, black background with white foreground for the image drawing, converting this to grayscale (for 1,28,28 input dimensions). Then I divide that by 255. The prediction accuracy for these custom images is very low (the model has a 99% accuracy on the MNIST test images).

Looking at the individual features, I see that the locations of the decimals for the custom images pre-processed as described above seem quite different to the MNIST ones, with the decimals and 0’s appearing in vastly different locations compared to the MNIST data. Completely different patterns. This leads me to believe some more complicated pre-processing must be going on besides the intuitive steps done above. I looked at instructions on the MNIST page for how the pre-processing took place, but don’t know how to implement these instructions in python. There also seem to be some pre-processing scripts out there but I can’t get these to work.

Any other suggestions on how I might continue my search to find how to pre-process custom images? All instructions in Google are too complex or the implementation seems to fail.

Generally, you need to train a model on data that will be representative of the type of images you need to make predictions on later.

Images will need to be of the same size (width x height) and the same colors.

If you expect a lot of variation in char placement in images, you can use image augmentation to create copies of your input data with random transforms:

https://machinelearningmastery.com/image-augmentation-deep-learning-keras/

I hope that helps as a start.

Hey, can you please tell me how to make the MNIST dataset readable? I have downloaded it in CSV format and now I want my images to be readable.

Hi Jason,

Thanks for your wonderful courses!

My question is, why should the pixels be standardized to 0…1?

I have input like this:

70.67, 3170.27, 56.31, 1.28, 0.39, 0

204.70, 26419.57, 162.54, 0.42, -0.97, 1

173.70, 20141.12, 141.92, 0.61, -1.14, 3

219.80, 42211.29, 205.45, 0.55, -1.41, 0

243.00, 43254.00, 207.98, 0.23, -1.73, 0

241.22, 21973.94, 148.24, 0.07, -0.60, 3

245.42, 46176.45, 214.89, 0.29, -1.80, 0

164.78, 25253.94, 158.91, 1.08, -0.13, 0

115.29, 9792.57, 98.96, 0.56, -1.25, 1

The last column is the result; I have split it off and converted it to one-hot.

I am trying many models and many different parameters but none is learning anything. Even oversampling on a few columns and checking whether a model can reproduce the trained outputs fails!

But all your examples run without problems and produce the same results as you describe. So my setup, the latest Python 3.6 with Anaconda running on Windows 10, should be all right.

So I fear that something is wrong with my inputs 🙁 . Should I standardize them? How can I do it? Later I will also have mixed inputs: numbers and strings. How could I work with this?

Would be very nice to get your kind help!

Thanks!

(pls excuse my very little perhaps bad english from school)

Input data must be scaled when working with neural networks, otherwise, large inputs will bias the network.
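One common way to scale tabular inputs like the rows quoted in the question is to standardize each column to zero mean and unit variance. A minimal NumPy sketch using the first three rows from the question; treating the last column as the label and dropping it is an assumption:

```python
import numpy as np

# First three rows from the question, with the last column (the label) dropped.
X = np.array([
    [70.67, 3170.27, 56.31, 1.28, 0.39],
    [204.70, 26419.57, 162.54, 0.42, -0.97],
    [173.70, 20141.12, 141.92, 0.61, -1.14],
])

# Standardize each column: subtract its mean, divide by its standard deviation.
mean = X.mean(axis=0)
std = X.std(axis=0)
X_scaled = (X - mean) / std

print(X_scaled.mean(axis=0).round(6))
print(X_scaled.std(axis=0).round(6))
```

The mean and standard deviation would normally be computed on the training data only and then reused to scale any test data.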

Thanks Jason for your reply!

Meanwhile I found everything I needed here:

http://scikit-learn.org/stable/modules/preprocessing.html#preprocessing

My models are working now. Prediction is not as good as I want (just ~30%) but good enough to continue …

Thanks!

I’m glad to hear it.

Hi Jason,

Thanks for this! This is really helpful. I was just wondering: I realized you used all 60,000 training examples. What would the code look like if you were to use only, say, 10k or 30k of the training data, yet still achieve a low error?

Thanks!

You can select as much or as little of the training data as you wish to fit the model.

You can use array indexing to select the amount of data you require:

https://docs.scipy.org/doc/numpy/reference/arrays.indexing.html
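A minimal sketch of that kind of slicing, using small zero-filled stand-ins so it runs quickly (the sizes 600 and 100 are illustrative; the real training set has 60,000 rows):

```python
import numpy as np

# Scaled-down stand-ins for the training images and one-hot labels.
X_train = np.zeros((600, 784), dtype="float32")
y_train = np.zeros((600, 10), dtype="float32")

# Keep only the first n examples, slicing inputs and labels the same way.
n = 100
X_small = X_train[:n]
y_small = y_train[:n]

print(X_small.shape, y_small.shape)
```

The smaller arrays can be passed to `model.fit()` in place of the full training set.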

Hello,

Thanks for your code.

I have a question.

How can I add a new activation function to this code?

I found the place where I can add the activation function, but I don’t know where should I add the derivative of the new activation function.

I would really appreciate your help.

Thanks.

Ehsan.

Hi Ehsan,

You can specify the activation function between layers (e.g. model.add(…)) or on the layer (e.g. Dense(activation=’…’)).

Hello,

I couldn’t run the above example.

got the following error.

runfile(‘C:/Users/Paul/Desktop/CNN.py’, wdir=’C:/Users/Paul/Desktop’)

Traceback (most recent call last):

File “”, line 1, in

runfile(‘C:/Users/Paul/Desktop/CNN.py’, wdir=’C:/Users/Paul/Desktop’)

File “C:\Users\Paul\Anaconda2\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py”, line 714, in runfile

execfile(filename, namespace)

File “C:\Users\Paul\Anaconda2\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py”, line 74, in execfile

exec(compile(scripttext, filename, ‘exec’), glob, loc)

File “C:/Users/Paul/Desktop/CNN.py”, line 54, in

model = larger_model()

File “C:/Users/Paul/Desktop/CNN.py”, line 40, in larger_model

model.add(Conv2D(30, (5, 5), input_shape=(1, 28, 28), activation=’relu’))

TypeError: __init__() takes at least 4 arguments (4 given)

Can you help me out?

Sorry to hear that Paul, the cause of your error is not obvious to me.

Perhaps confirm that you have the latest versions of all libraries and there were no copy paste errors with the code.

Nice work and spirit Jason, thank you… it worked for me. I used Anaconda and a GPU.

Well done Jose!

Hello team, I have the following question, please help me.

# create model

model = Sequential()

model.add(Conv2D(30, (5, 5), input_shape=(1, 28, 28), activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

In the above code, input_shape=(1,28,28) is for a binary image, and for a color image we would use (3,28,28). But what should we use for non-image data?

I have a dataset of 10248 observations with 18 variables, including the target variable.

What should I use for input_shape?

please help me.

CNN is for image data.

For non-image data, you may want to consider an MLP. For sequence data, consider an RNN.

Sir, the input to my neural network is in a numpy array e.g. [[1,1,1,2], [1,2,1,2], ……..] and in this line of code

X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')

the compiler throws an error:

ValueError: total size of new array must be unchanged

Looking forward to a solution to the problem.

If your data is not image data, consider starting with an MLP, not a CNN.

Hi, Jason. Great tutorial! This is my first CNN and I cannot believe it is actually working. Excited!

I am just wondering why there is no need to initialize the weights in the CNN using “kernel_initializer=”? In the baseline MLP you initialize every layer, whereas for the CNN those lines are not there, whether for the conv layer, the max pooling layer, or the final fully connected layer.

Did I miss something? Thanks in advance.

There is a default kernel_initializer:

https://keras.io/layers/convolutional/

Great work. Thank you, sir. After completing the training and testing, I want to predict a character from a new image that contains a new handwritten character. How can I do that, sir? Please help me.

How can I evaluate my models and estimate their performance

on unseen data.

See this post:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

Hi, Jason

My question is :

The test data is for checking the model once you have already defined a model (by using the training data).

Why do you use the test data in your model training?

model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200, verbose=2)

# Final evaluation of the model

scores = model.evaluate(X_test, y_test, verbose=0)

It is only used to report the skill of the model on data unseen during training (a validation dataset).

Hi Jason, it is still not clear to me how Keras divides the datasets.

Is the validation data the same as the test data itself?

This is what I have seen in the model fit call!

So the dataset is only divided by Keras into training and test,

and then the test data is passed in place of the validation data?

I can only understand it this way!

Am I right?

thank you so much

saeed

You can learn more about the validation dataset here:

https://machinelearningmastery.com/difference-test-validation-datasets/
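In the tutorial's `fit()` call the test set doubles as the validation set. If a separate validation set is wanted, one option is to hold back part of the training data. A minimal NumPy sketch with stand-in arrays; the 80/20 split and the seed are arbitrary choices made for illustration:

```python
import numpy as np

# Stand-in data: 100 samples with one feature each, plus matching labels.
X = np.arange(100).reshape(100, 1).astype("float32")
y = np.arange(100)

# Shuffle the row indices, then cut them into training and validation parts.
rng = np.random.default_rng(7)
idx = rng.permutation(len(X))
split = int(0.8 * len(X))
train_idx, val_idx = idx[:split], idx[split:]

X_train, y_train = X[train_idx], y[train_idx]
X_val, y_val = X[val_idx], y[val_idx]

print(X_train.shape, X_val.shape)
```

The held-back arrays could then be passed as `validation_data=(X_val, y_val)`, leaving the test set for the final `model.evaluate()` call.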

Good evening, I really liked your explanation of handwritten character recognition; it helped me a lot to understand the architecture and operation of a CNN with Keras. But I have a question: would you have a good tutorial that deals with the problem of face recognition with a CNN? I have done it with other approaches like OpenCV and dlib, but I would like to do it with a CNN.

Thank you…

Great suggestion, thanks.

How can I make a prediction on a new test example?

Fit the model on the entire available dataset, then pass in an image to:

Hi, Jason. I started to play with MNIST and CNNs in Keras and your post was very helpful!

Thanks!

I have one question: in my tests I got a poor probability distribution. In most cases I get 1 for the predicted class and 0 for the others. I am trying to figure out how to get a more informative distribution, especially to be able to find possible prediction errors. Initially I thought the results were connected to a sigmoid activation function, but looking at the model we only have the ReLU and Softmax linear functions. Any suggestion on how to get a more descriptive probability distribution?

I just saw that we are using Softplus, not the Softmax function. I’ll try linear and ReLU functions to see what the difference in outputs is =)

Let me know how you go.

Well, first I must rectify my mess with function names. I started with the same model as in the last example, i.e., with Softmax, a non-linear function, and got binary 0 or 1 values in the probabilities ndarray when calling predict_proba().

In one test with a linear function, learning converged slowly and I decided to drop this test for now…

With Softplus, a function I thought was similar to linear, I got a non-normalized array of probabilities but, more interestingly, in cases where the model fails to predict, all values in the probabilities array are zero! This can be very useful when we try to catch just the cases where the model can’t predict.

In a last test with Sigmoid I got the same behavior as Softplus, with cases where all probabilities are 0, but in correctly predicted cases I got the value 1 for the predicted class. This makes more sense with normalized probabilities, but there was not one case with a non-binary distribution of probabilities.

To summarize: the function with the best results is still Softmax; no function gave a non-binary distribution when calling predict_proba(); Softplus and Sigmoid show an interesting behavior where all values returned by predict_proba() are 0 in failed cases.

After all this, I am still trying to find a way to get a more descriptive distribution of probabilities.

The model is not trying to output probabilities, it is approximating a function.

No matter what, you will have to mangle the output to get probability-looking values coming out.

Updating…

I found my mistake. In prediction I just forgot to normalize the pixel values from 0-255 uint8 to 0-1 float. I think this kind of input saturated the network and set the outputs to 1 or 0 in all cases.

Glad to hear you figured it out.

Thanks for another great post. Could you point us to techniques that may be useful for detecting which patches/regions of images the network learns as most relevant for making the predictions? This is analogous to feature importances.

For example, is it possible to analyze the last hidden layer to find what parts of a given image contribute most to making one of the ten predictions?

It’s an area I’d like to cover in the future.

Hi Jason, I have tried this code tutorial on my Windows 8.1 machine with Theano 0.9 and Keras 2.0.5 installed, using a GeForce 940M GPU, but my model’s baseline error is very poor, almost 90%. Please help.

Consider running the example a few times.

Sir, I tried it, but it did not help me. Please suggest something.

Jason, I’m going through trying to replicate these results on the data from the Kaggle competition using the same dataset, but I have a weird problem with the CNN section.

When I used the regular neural network model, it reached near 99% accuracy on the training set within a few epochs. However, when I use the CNN code in this article, the first epoch has an accuracy of around 53-54% and only slowly climbs to around 94% accuracy at best on the training set.

I’m using a training set of 42000 images rather than 60000, but I can’t imagine that would produce such a large effect on the performance of the model. Any idea what else might be going wrong?

(I’m using tensorflow backend for both kinds of NN by the way)

Double check you have normalized the input data.

This appears to have fixed the issue. Now I have 90% accuracy on the first epoch. In following along I think I accidentally divided the pixel values by 255 again after having normalized them already earlier.

Glad to hear that you worked it out Stefan.

Thank you for the help. Do you know of any articles that explain the different kinds of network topology and how to go about deciding what kind of network to use? Is it all trial and error or are there certain kinds of networks suited for different problems?

Great question.

Generally, start with an MLP as a baseline regardless. They can do a lot and provide a good starting point for more sophisticated models to beat.

Use CNNs for problems with spatial input like images; they are also worth a shot on text, audio and other analog data.

Use RNNs for problems with a time component (e.g. observations over time) as input and/or output.

Does that help?

Thank you, this is very helpful. I also wanted to know if there is any standard methodology for determining the size and number of feature maps, pooling layer patches, and number of neurons in the fully-connected layers. I’ve seen some rules of thumb for the number of neurons in fully-connected layers, but I’m not sure how to go about deciding which value to choose without just running the network over and over.

I’m sorry to keep bothering you by the way, I appreciate the help.

Not that I’m aware of. It’s more art than science at this stage. Test.

What made you choose the value of 32 for the filter output dimension when creating the model for your 2D neural net? I’m teaching myself about this process and am interested in how one would optimize these variables.

It is arbitrary, 32 is commonly used in CNN demonstrations.

I recommend tuning the hyperparameters of your model on your problem to get the best performance.

Hello Jason! Any idea why I am getting this error? https://stackoverflow.com/questions/45479009/how-to-train-a-keras-ltsm-with-a-multidimensional-input

Sorry, I cannot debug your code for you, I just don’t have the capacity. I’m sure you can understand.

Hi jason, have you tried this with DropConnect and can you tell me how i can implement Dropconnect to MNIST?

Sorry I have not used drop connect in Keras.

Where do the initialized/default feature maps come from, and what do they look like/check for?

What do you mean exactly Matt?

hi ,i am new in machine learning

print(model.predict_classes(x_test[1:5]))

print(y_test[1:5])

here i want to predict first five element from x_test and output is

[2 1 0 4](first five element)

[[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[ 0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]

my question is in the above prediction i am getting 2D array if i want to print in digit

[2]

[1]

[0]

[4]

This sounds like a Python array question.

You can access the prediction as the first element of each prediction:
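A minimal sketch of one way to do this, assuming `y_test` has been one hot encoded as in the output shown above. The array below is a stand-in copied from that output, and the use of `np.argmax` is a suggestion rather than the original reply's code.

```python
import numpy as np

# Stand-in for the one hot encoded y_test[1:5] printed in the comment above
y_onehot = np.array([
    [0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],
    [0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],
    [1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],
    [0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],
])

# argmax along axis 1 returns the position of the 1 in each row, i.e. the digit
digits = np.argmax(y_onehot, axis=1)
print(digits)  # [2 1 0 4]

# Print one digit per line, in the bracketed style asked for
for d in digits:
    print([int(d)])
```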

Hi,

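I have seen that everyone is eager to check their own handwritten images. Follow these steps:

1) Open Paint, write any digit (0-9), and save it at a size of 28×28 pixels.

2) Use this code for prediction:

import cv2

# 'Test Image' is a placeholder for the path to your saved image
test = cv2.imread('Test Image')

# cv2.imread returns channels in BGR order, so convert from BGR to grayscale
test = cv2.cvtColor(test, cv2.COLOR_BGR2GRAY)

# reshape to [samples][channels][rows][cols] (channels-first layout)
test = test.reshape(1, 1, 28, 28)

# invert so the digit is light on a dark background, as in MNIST
test = cv2.bitwise_not(test)

pred = model.predict_classes(test)

print(pred)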

Thanks!! enjoy NN..

Thanks for sharing.

Hi,

I tried your code, but it is throwing error below:

ValueError: Error when checking : expected dense_1_input to have 2 dimensions, but got array with shape (1, 1, 28, 28)

Any ideas ?

Hi,

I am getting the same error. Were you able to resolve it?

Saleem
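One possible cause, judging only by the layer name `dense_1_input` in the error: the model being used starts with a Dense layer (the baseline MLP), which expects flat 2D input, while the snippet above reshapes the image for a convolutional model. A minimal sketch of both layouts, assuming a single 28×28 grayscale image; the helper names `to_mlp_input` and `to_cnn_input` are hypothetical.

```python
import numpy as np

def to_mlp_input(image):
    """Flatten one 28x28 image to shape (1, 784) for a Dense-first model."""
    return np.asarray(image, dtype="float32").reshape(1, 28 * 28)

def to_cnn_input(image, channels_first=True):
    """Add batch and channel axes for a Conv2D-first model."""
    image = np.asarray(image, dtype="float32")
    if channels_first:
        return image.reshape(1, 1, 28, 28)  # [samples][channels][rows][cols]
    return image.reshape(1, 28, 28, 1)      # [samples][rows][cols][channels]

image = np.zeros((28, 28))  # stand-in for a loaded grayscale digit
print(to_mlp_input(image).shape)                        # (1, 784)
print(to_cnn_input(image).shape)                        # (1, 1, 28, 28)
print(to_cnn_input(image, channels_first=False).shape)  # (1, 28, 28, 1)
```

Whichever layout is used, it has to match the `input_shape` (or `input_dim`) of the first layer of the model that was trained.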

can you say in which location we have to save the ‘Test image’

In the same directory as your code file.

Is there a typo in the third output print?

The code says:

print("Baseline Error: %.2f%%" % (100-scores[1]*100))

while the output screen displays:

CNN Error: x.xx%

Yes, I have fixed this typo.
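For reference, a small sketch of how the reported error relates to accuracy, assuming `scores` is a `[loss, accuracy]` pair with accuracy as a fraction between 0 and 1. The `scores` values and the helper name `error_message` are made up for illustration.

```python
def error_message(label, accuracy):
    """Format classification error (100 minus accuracy in percent) with a label."""
    return "%s Error: %.2f%%" % (label, 100 - accuracy * 100)

scores = [0.0350, 0.9893]  # hypothetical [loss, accuracy] values
print(error_message("Baseline", scores[1]))
print(error_message("CNN", scores[1]))
```

Passing the label as an argument keeps the printed name in step with the model being evaluated.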

Hi, thank you very much for this program. So i already run the program and train the model and it’s working, then how can i use it for detecting number from my own image input?

Your new images will need to be formatted and resized the same as the MNIST examples.

I don’t have an example sorry.

How can i get the training model from this program? so i can use the model for my program to detecting number from image input

You could train the model and save it, then later load it within your application. This post shows you how:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

Hi Jason,

Thank you for sharing the code, I learned a lot from this. I also used the code for a paper for school (I ran an ‘experiment’ where I changed the size of the training set and looked at the accuracy). Is that ok with you (if I cite it, of course)?

Thank you!!!

Well done!

Yes of course, please just reference the site or this page.

Hi Jason ,

I am doing a course in neural networks, and I appreciate the work you have done for novices like me. In that course they frame this classification problem as a linear regression problem to classify two classes. Is this problem using the MNIST dataset also linear regression, classifying 10 classes?

Thank you>>>!!!

Sorry, I do not have an example of linear regression for classification.

doesn’t your example take linear regression such that given an image it has to calculate probability that it is represented by particular class using linear regression?

The above example demonstrates a neural network, not linear regression.

Hi Jason,

Thanks for such an incredible tutorial!!! I really appreciate your time and effort in putting the code and text, and replying to each and everyone’s questions!

When I try to run the code under “Simple Convolutional Neural Network for MNIST”, as such, I get the following error. Is that something that you can help? I am running it on Jupyter Notebook with Tensorflow 1.2.1 version.

With thanks in advance,

Socrates

________________________________________________________

AttributeError Traceback (most recent call last)

in ()

44

45 # build the model

---> 46 model = baseline_model()

47

48 # Fit the model

in baseline_model()

32 # create model

33 model = Sequential()

---> 34 model.add(Conv2D(32, (5, 5), input_shape=(1, 28, 28), activation='relu'))

35 model.add(MaxPooling2D(pool_size=(2, 2)))

36 model.add(Dropout(0.2))

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\models.py in add(self, layer)

462 # and create the node connecting the current layer

463 # to the input layer we just created.

--> 464 layer(x)

465

466 if len(layer.inbound_nodes[-1].output_tensors) != 1:

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\engine\topology.py in __call__(self, inputs, **kwargs)

601

602 # Actually call the layer, collecting output(s), mask(s), and shape(s).

--> 603 output = self.call(inputs, **kwargs)

604 output_mask = self.compute_mask(inputs, previous_mask)

605

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\layers\convolutional.py in call(self, inputs)

162 padding=self.padding,

163 data_format=self.data_format,

--> 164 dilation_rate=self.dilation_rate)

165 if self.rank == 3:

166 outputs = K.conv3d(

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\backend\tensorflow_backend.py in conv2d(x, kernel, strides, padding, data_format, dilation_rate)

3178 raise ValueError('Unknown data_format ' + str(data_format))

3179

-> 3180 x, tf_data_format = _preprocess_conv2d_input(x, data_format)

3181

3182 padding = _preprocess_padding(padding)

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\backend\tensorflow_backend.py in _preprocess_conv2d_input(x, data_format)

3060 tf_data_format = 'NHWC'

3061 if data_format == 'channels_first':

-> 3062 if not _has_nchw_support():

3063 x = tf.transpose(x, (0, 2, 3, 1)) # NCHW -> NHWC

3064 else:

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\backend\tensorflow_backend.py in _has_nchw_support()

268 """

269 explicitly_on_cpu = _is_current_explicit_device('CPU')

--> 270 gpus_available = len(_get_available_gpus()) > 0

271 return (not explicitly_on_cpu and gpus_available)

272

~\Anaconda3\envs\tensorflow-sessions\lib\site-packages\keras\backend\tensorflow_backend.py in _get_available_gpus()

254 global _LOCAL_DEVICES

255 if _LOCAL_DEVICES is None:

--> 256 _LOCAL_DEVICES = get_session().list_devices()

257 return [x.name for x in _LOCAL_DEVICES if x.device_type == 'GPU']

258

AttributeError: 'Session' object has no attribute 'list_devices'

________________________________________________________

Looks like a problem with your TensorFlow version or Keras version. Ensure you have the latest installed.

Also, perhaps try running on the CPU first before trying the GPU.

Thanks for your immediate response, Jason!

Following are the version of Keras and Tensorflow:

Keras: 2.1.1

Tensorflow: 1.2.1

I am not running it on a GPU either. In fact, my machine does not have a GPU. It is a T450s Lenovo laptop with Intel(R) Core(TM) i5-5200U CPU @ 2.20GHz.

I would recommend updating to the latest version of Keras 2.1.2 and TensorFlow 1.4.1.

Thanks Jason!

Uninstalled Anaconda completely and reinstalled it. Then installed TensorFlow and Keras on top of that. It worked fine!!!

Well done!

Hi Jason, found your post very informative but I am getting an error saying "cannot import name 'backend'". How do I solve it?

Perhaps double check that your version of Keras is up to date?

Finally, the output variable is an integer from 0 to 9. Should be 0 to 0.9.

No, here I am commenting on the range of outputs before we normalize.
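To make the distinction concrete, here is a minimal sketch of how integer labels from 0 to 9 turn into one hot encoded rows. It uses plain NumPy as a stand-in for whatever encoding utility the tutorial code calls, and the label values are hypothetical.

```python
import numpy as np

y = np.array([5, 0, 4, 1, 9])  # hypothetical integer class labels in 0..9
num_classes = 10

# Row i of the identity matrix is the one hot vector for class i
y_onehot = np.eye(num_classes, dtype="float32")[y]

print(y_onehot.shape)  # (5, 10)
print(y_onehot[0])     # a single 1.0 at index 5, zeros elsewhere
```

The labels themselves are never rescaled to a 0 to 0.9 range; each integer class simply selects which of the 10 positions holds the 1.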

thanks a lot.

please, i have a question. how can i create my own custom pooling layer with keras and not using conventional max-pooling layer ?

thanks another time.

I have not done that. Perhaps you can use existing Keras code as a template?

Hi, Jason,

I love your code and it helps me a lot!

I have a question: In your simple CNN example, why you choose Conv2D(32,(5,5),..) (I mean why you choose 32 and 5 these numbers). Also why you choose 128 neurons in the fifth layer?

Also in your larger CNN example, why you choose Conv2D(30,..). I am confused about the reason you choose these numbers rather than other numbers like 31,32,33,34.

Thank you!

I used a little trial and error.

I got down to:

CNN Error: 0.67%

I used the advanced PReLU activation instead of relu, but not sure if that actually helped since I’m not sure how to best initialize the alphas anyway, so just left them at default.

Will look into playing around with the optimizers a bit as well. Possibly playing around with something more advanced than ADAM.

Nice work!

I am getting the “Value Error: Error when checking target: expected dense_8 to have shape (None, 784) but got array with shape (60000, 10)” while building the baseline model. I also did one hot encoding but still ending up the same error.

What is the solution?

it is really a good post, useful for many students who are working on CNN.

I’m Jagadeesh currently doing my under-graduation(B.Tech) at AMRITA UNIVERSITY(INDIA). we are trying to do SENTIMENT ANALYSIS ON PRODUCT REVIEWS USING CNN. The Design of my project is like

step 1: collecting labelled data-set from amazon

step2: using word2vec tool , converting the text into vectors

step3: feeding these vectors as input to CNN .

Now we are stuck at converting text into vectors. For this, word2vec is giving many vectors for a single word, and I don’t know how to take a single vector from the 180 vectors produced for that single word.

kindly please help me.

I have to finish this project by Feb 20th.

Thanking you sir.

I have a few posts on word2vec that may help, perhaps start here:

https://machinelearningmastery.com/develop-word-embeddings-python-gensim/

hello

I ran the simple CNN but nothing happens. How can I find out whether the code is running?

i wrote the code in jupyter notebook.

Try running from the command line.

Try enabling the verbose output on the fit() function call.

hello again

it works and the cnn error was 0.93%.

i really thank you for your helpful codes.

Nice work!

hello

I have a dataset of handwritten digits in Persian. It contains 10 folders for the 10 digits (0, 1, 2, …, 9), and each folder holds 6000 samples of that digit. Each sample is a binary (black and white) image of size 61*61.

now how can i load this dataset in your ” Larger Convolutional Neural Network for MNIST ” code?

are data stored in MNIST images or matrix?

again i really grateful for your help.

This tutorial might give you some ideas:

https://blog.keras.io/building-powerful-image-classification-models-using-very-little-data.html

Hi, Jason. 🙂

I’ve reduced it to 0.16% 🙂 Will continue with tuning.

Thanx for the tutorial, it is pretty useful and understandable! 🙂