Deep learning neural networks are relatively straightforward to define and train given the wide adoption of open source libraries.

Nevertheless, neural networks remain challenging to configure and train.

In his 2012 paper titled “Practical Recommendations for Gradient-Based Training of Deep Architectures” published as a preprint and a chapter of the popular 2012 book “Neural Networks: Tricks of the Trade,” Yoshua Bengio, one of the fathers of the field of deep learning, provides practical recommendations for configuring and tuning neural network models.

In this post, you will step through this long and interesting paper and pick out the most relevant tips and tricks for modern deep learning practitioners.

After reading this post, you will know:

- The early foundations for the deep learning renaissance including pretraining and autoencoders.

- Recommendations for the initial configuration for the range of neural network hyperparameters.

- How to effectively tune neural network hyperparameters and tactics to tune models more efficiently.

Let’s get started.

Practical Recommendations for Deep Learning Neural Network Practitioners

Overview

This tutorial is divided into five parts; they are:

- Required Reading for Practitioners

- Paper Overview

- Beginnings of Deep Learning

- Learning via Gradient Descent

- Hyperparameter Recommendations

Required Reading for Practitioners

In 2012, a second edition of the popular practical book “Neural Networks: Tricks of the Trade” was published.

The first edition was published in 1999 and contained 17 chapters (each written by different academics and experts) on how to get the most out of neural network models. The updated second edition added 13 more chapters, including an important chapter (chapter 19) by Yoshua Bengio titled “Practical Recommendations for Gradient-Based Training of Deep Architectures.”

The second edition arrived at an important moment: interest in neural networks was being renewed and what has become “deep learning” was just beginning. Yoshua Bengio’s chapter is important because it provides recommendations for developing neural network models, including details of what were, at the time, very modern deep learning methods.

Although the chapter can be read as part of the second edition, Bengio also published a preprint of the chapter on the arXiv website; it is listed under Further Reading below.

The chapter is also important as it provides a valuable foundation for what became the de facto textbook on deep learning four years later, titled simply “Deep Learning,” for which Bengio was a co-author.

This chapter (I’ll refer to it as a paper from now on) is required reading for all neural network practitioners.

In this post, we will step through each section of the paper and point out some of the most salient recommendations.

Paper Overview

The goal of the paper is to provide practitioners with practical recommendations for developing neural network models.

There are many types of neural network models and many types of practitioners, so the goal is broad and the recommendations are not specific to a given type of neural network or predictive modeling problem. This is good in that we can apply the recommendations liberally on our projects, but also frustrating as specific examples from literature or case studies are not given.

The focus of these recommendations is on the configuration of model hyperparameters, specifically those related to the stochastic gradient descent learning algorithm.

This chapter is meant as a practical guide with recommendations for some of the most commonly used hyper-parameters, in particular in the context of learning algorithms based on backpropagated gradient and gradient-based optimization.

Recommendations are presented in the context of the dawn of the field of deep learning, where modern methods and fast GPU hardware facilitated the development of networks with more depth and, in turn, more capability than had been seen before. Bengio traces this renaissance back to 2006 (six years before the time of writing) and the development of greedy layer-wise pretraining methods, which later (after this paper was written) were replaced by extensive use of ReLU, Dropout, BatchNorm, and other methods that aided in developing very deep models.

The 2006 Deep Learning breakthrough centered on the use of unsupervised learning to help learning internal representations by providing a local training signal at each level of a hierarchy of features.

The paper is divided into six main sections, with section three providing the main reading focus on recommendations for configuring hyperparameters. The full table of contents for the paper is provided below.

- Abstract

- 1 Introduction

- 1.1 Deep Learning and Greedy Layer-Wise Pretraining

- 1.2 Denoising and Contractive AutoEncoders

- 1.3 Online Learning and Optimization of Generalization Error

- 2 Gradients

- 2.1 Gradient Descent and Learning Rate

- 2.2 Gradient Computation and Automatic Differentiation

- 3 Hyper-Parameters

- 3.1 Neural Network HyperParameters

- 3.1.1 Hyper-Parameters of the Approximate Optimization

- 3.2 Hyper-Parameters of the Model and Training Criterion

- 3.3 Manual Search and Grid Search

- 3.3.1 General guidance for the exploration of hyper-parameters

- 3.3.2 Coordinate Descent and MultiResolution Search

- 3.3.3 Automated and Semi-automated Grid Search

- 3.3.4 Layer-wise optimization of hyperparameters

- 3.4 Random Sampling of HyperParameters

- 4 Debugging and Analysis

- 4.1 Gradient Checking and Controlled Overfitting

- 4.2 Visualizations and Statistics

- 5 Other Recommendations

- 5.1 Multi-core machines, BLAS and GPUs

- 5.2 Sparse High-Dimensional Inputs

- 5.3 Symbolic Variables, Embeddings, Multi-Task Learning and MultiRelational Learning

- 6 Open Questions

- 6.1 On the Added Difficulty of Training Deeper Architectures

- 6.2 Adaptive Learning Rates and Second-Order Methods

- 6.3 Conclusion

We will not touch on each section, but instead focus on the beginning of the paper and specifically the recommendations for hyperparameters and model tuning.

Beginnings of Deep Learning

The introduction section spends some time on the beginnings of deep learning, which is fascinating if viewed as a historical snapshot of the field.

At the time, the deep learning renaissance was driven by the development of neural network models with many more layers than could be used previously based on techniques such as greedy layer-wise pretraining and representation learning via autoencoders.

One of the most commonly used approaches for training deep neural networks is based on greedy layer-wise pre-training.

Not only was the approach important because it allowed the development of deeper models, but also the unsupervised form allowed the use of unlabeled examples, e.g. semi-supervised learning, which too was a breakthrough.

Another important motivation for feature learning and Deep Learning is that they can be done with unlabeled examples …

As such, reuse (literal reuse) was a major theme.

The notion of reuse, which explains the power of distributed representations is also at the heart of the theoretical advantages behind Deep Learning.

Although a single or two-layer neural network of sufficient capacity can be shown to approximate any function in theory, he offers a gentle reminder that deep networks provide a computational short-cut to approximating more complex functions. This is an important reminder and helps in motivating the development of deep models.

Theoretical results clearly identify families of functions where a deep representation can be exponentially more efficient than one that is insufficiently deep.

Time is spent stepping through two of the major “deep learning” breakthroughs: greedy layer-wise pretraining (both supervised and unsupervised) and autoencoders (both denoising and contractive).

The third breakthrough, RBMs, was left for discussion in another chapter of the book written by Hinton, the developer of the method.

- Restricted Boltzmann Machine (RBM).

- Greedy Layer-Wise Pretraining (Unsupervised and Supervised).

- Autoencoders (Denoising and Contractive).

Although milestones, none of these techniques are preferred or widely used today (six years later) in the development of deep learning, and, with the possible exception of autoencoders, none are as vigorously researched as they once were.

Learning via Gradient Descent

Section two provides a foundation on gradients and gradient learning algorithms, the main optimization technique used to fit neural network weights to training datasets.

This includes the important distinction between batch and stochastic gradient descent, and approximations via mini-batch gradient descent, today all simply referred to as stochastic gradient descent.

- Batch Gradient Descent. Gradient is estimated using all examples in the training dataset.

- Mini-Batch Gradient Descent. Gradient is estimated using subsets of samples in the training dataset.

- Stochastic (Online) Gradient Descent. Gradient is estimated using each single pattern in the training dataset.

The mini-batch variant is offered as a way to achieve the speed of convergence offered by stochastic gradient descent with the improved estimate of the error gradient offered by batch gradient descent.

Larger batch sizes slow down convergence.

On the other hand, as B [the batch size] increases, the number of updates per computation done decreases, which slows down convergence (in terms of error vs number of multiply-add operations performed) because less updates can be done in the same computing time.

Smaller batch sizes offer a regularizing effect due to the introduction of statistical noise in the gradient estimate.

… smaller values of B [the batch size] may benefit from more exploration in parameter space and a form of regularization both due to the “noise” injected in the gradient estimator, which may explain the better test results sometimes observed with smaller B.
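
To make the distinction concrete, here is a minimal sketch in plain Python in which a single `batch_size` argument selects the variant. The toy dataset, the linear model, and the learning rate are all made up for illustration and do not come from the paper.

```python
import random

def make_data(n=200, seed=1):
    """Toy 1-D regression data: y = 3x + 2 plus a little noise (illustrative only)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1)) for x in xs]

def gradient(w, b, batch):
    """Gradient of mean squared error for y_hat = w*x + b, estimated on one batch."""
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    return gw / len(batch), gb / len(batch)

def fit(data, batch_size, epochs=50, lr=0.1, seed=1):
    """Gradient descent; batch_size picks batch, mini-batch, or stochastic."""
    rng = random.Random(seed)
    data = list(data)
    w = b = 0.0
    updates = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            gw, gb = gradient(w, b, data[i:i + batch_size])
            w -= lr * gw
            b -= lr * gb
            updates += 1
    return w, b, updates

data = make_data()
for name, size in [("batch", len(data)), ("mini-batch", 32), ("stochastic", 1)]:
    w, b, updates = fit(data, size)
    print(f"{name:>10}: B={size:3d} updates={updates:5d} w={w:.2f} b={b:.2f}")
```

For the same number of passes over the data, a smaller batch size performs many more weight updates, which is the trade-off described in the quote above.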

This period also saw the introduction and wider adoption of automatic differentiation in the development of neural network models.

The gradient can be either computed manually or through automatic differentiation.

This was of particular interest to Bengio given his involvement in the development of the Theano Python mathematical library and pylearn2 deep learning library, both now defunct, succeeded perhaps by TensorFlow and Keras respectively.

Manually implementing differentiation for neural networks is easy to mess up and errors can be hard to debug and cause sub-optimal performance.

When implementing gradient descent algorithms with manual differentiation the result tends to be verbose, brittle code that lacks modularity – all bad things in terms of software engineering.

Automatic differentiation is painted as a more robust approach to developing neural networks as graphs of mathematical operations, each of which knows how to differentiate, which can be defined symbolically.

A better approach is to express the flow graph in terms of objects that modularize how to compute outputs from inputs as well as how to compute the partial derivatives necessary for gradient descent.

The flexibility of the graph-based approach to defining models and the reduced likelihood of error in calculating error derivatives means that this approach has become a standard, at least in the underlying mathematical libraries, for modern open source neural network libraries.
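
The idea of graph nodes that know how to differentiate themselves can be sketched in a few lines of plain Python. This is a toy reverse-mode implementation for scalars, written for illustration only; the `Node` class and its methods are hypothetical names and are not the API of Theano, TensorFlow, or any other library.

```python
class Node:
    """A scalar in a flow graph that remembers how to backpropagate through itself."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is (parent_node, local partial derivative d(self)/d(parent)).
        self.parents = parents

    def __add__(self, other):
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))

    def backward(self):
        """Accumulate d(self)/d(node) into node.grad for every upstream node."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)  # parents are appended before their children

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # output first, inputs last
            for parent, local in node.parents:
                parent.grad += node.grad * local  # chain rule

# Squared error of a one-weight linear model: loss = (w*x + b - y)^2
w, b = Node(0.5), Node(-1.0)
x, y = Node(2.0), Node(3.0)
err = w * x + b + Node(-1.0) * y
loss = err * err
loss.backward()
print("loss:", loss.value, "dloss/dw:", w.grad, "dloss/db:", b.grad)
```

Each operation only has to state its own local derivatives; the chain rule over the graph does the rest, which is what keeps the approach modular.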

Hyperparameter Recommendations

The main focus of the paper is on the configuration of the hyperparameters that control the convergence and generalization of the model under stochastic gradient descent.

Use a Validation Dataset

The section starts off with the importance of using a separate validation dataset from the train and test sets for tuning model hyperparameters.

For any hyper-parameter that has an impact on the effective capacity of a learner, it makes more sense to select its value based on out-of-sample data (outside the training set), e.g., a validation set performance, online error, or cross-validation error.

It then stresses the importance of not including the validation dataset in the evaluation of the performance of the model.

Once some out-of-sample data has been used for selecting hyper-parameter values, it cannot be used anymore to obtain an unbiased estimator of generalization performance, so one typically uses a test set (or double cross-validation, in the case of small datasets) to estimate generalization error of the pure learning algorithm (with hyper-parameter selection hidden inside).

Cross-validation is often not used with neural network models given that they can take days, weeks, or even months to train. Nevertheless, on smaller datasets where cross-validation can be used, the double cross-validation technique is suggested, where hyperparameter tuning is performed within each cross-validation fold.

Double cross-validation applies recursively the idea of cross-validation, using an outer loop cross-validation to evaluate generalization error and then applying an inner loop cross-validation inside each outer loop split’s training subset (i.e., splitting it again into training and validation folds) in order to select hyper-parameters for that split.
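
The nested loops are easier to see in code. Below is a minimal sketch in plain Python, assuming a toy 1-D dataset and a k-nearest-neighbors regressor whose only hyperparameter is `k`; the model, the data, and the fold counts are illustrative choices, not recommendations from the paper.

```python
import random

def k_folds(items, k):
    """Split items into k folds and return (train, held_out) pairs."""
    folds = [items[i::k] for i in range(k)]
    return [([row for j, f in enumerate(folds) if j != i for row in f], folds[i])
            for i in range(k)]

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def mse(train, held_out, k):
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in held_out) / len(held_out)

def select_k(train, candidates, inner_folds=3):
    """Inner loop: pick the hyperparameter with the lowest mean validation error."""
    def cv_error(k):
        return sum(mse(tr, va, k) for tr, va in k_folds(train, inner_folds)) / inner_folds
    return min(candidates, key=cv_error)

def double_cross_validation(data, candidates, outer_folds=5):
    """Outer loop: score the whole procedure, hyperparameter selection included."""
    scores = []
    for train, test in k_folds(data, outer_folds):
        best_k = select_k(train, candidates)  # the outer test fold is never used here
        scores.append((best_k, mse(train, test, best_k)))
    return scores

rng = random.Random(7)
data = [(x, x * x + rng.gauss(0.0, 0.05))
        for x in (rng.uniform(-1.0, 1.0) for _ in range(60))]
scores = double_cross_validation(data, candidates=[1, 3, 5, 9])
for fold, (best_k, error) in enumerate(scores):
    print(f"outer fold {fold}: selected k={best_k}, test MSE={error:.4f}")
print("estimated generalization MSE:", sum(e for _, e in scores) / len(scores))
```

Note that different outer folds may select different values of `k`; the estimate is for the procedure as a whole, not for any single configuration.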

Learning Hyperparameters

A suite of learning hyperparameters is then introduced, sprinkled with recommendations.

The hyperparameters in the suite are:

- Initial Learning Rate. The proportion by which weights are updated; 0.01 is a good start.

- Learning Rate Schedule. Decrease in learning rate over time; 1/T is a good start.

- Mini-batch Size. Number of samples used to estimate the gradient; 32 is a good start.

- Training Iterations. Number of updates to the weights; set large and use early stopping.

- Momentum. Use history from prior weight updates; set large (e.g. 0.9).

- Layer-Specific Hyperparameters. Possible, but rarely done.

The learning rate is presented as the most important parameter to tune. Although a value of 0.01 is a recommended starting point, dialing it in for a specific dataset and model is required.

This is often the single most important hyperparameter and one should always make sure that it has been tuned […] A default value of 0.01 typically works for standard multi-layer neural networks but it would be foolish to rely exclusively on this default value.

He goes so far as to say that if only one parameter can be tuned, then it should be the learning rate.

If there is only time to optimize one hyper-parameter and one uses stochastic gradient descent, then this is the hyper-parameter that is worth tuning.

The batch size is presented as a control on the speed of learning rather than as a way to tune test set performance (generalization error).

In theory, this hyper-parameter should impact training time and not so much test performance, so it can be optimized separately of the other hyperparameters, by comparing training curves (training and validation error vs amount of training time), after the other hyper-parameters (except learning rate) have been selected.
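
As a rough illustration of how these starting values fit together, the sketch below trains a toy linear model in plain Python with an initial learning rate of 0.01, a 1/t decay, a mini-batch size of 32, momentum of 0.9, and early stopping. The constant `tau`, the `patience` value, the classical form of the momentum update, and the dataset are all assumptions made for the example.

```python
import random

def learning_rate(t, initial=0.01, tau=100):
    """Hold the initial rate for tau updates, then decay in proportion to 1/t."""
    return initial * tau / max(t, tau)

def mse(w, b, rows):
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)

def train(train_rows, valid_rows, batch_size=32, momentum=0.9,
          max_epochs=200, patience=10, seed=0):
    rng = random.Random(seed)
    rows = list(train_rows)
    w = b = vw = vb = 0.0
    best_error, best_w, best_b = float("inf"), w, b
    t = stale = epochs_run = 0
    for _ in range(max_epochs):  # set large; early stopping ends training
        epochs_run += 1
        rng.shuffle(rows)
        for i in range(0, len(rows), batch_size):
            batch = rows[i:i + batch_size]
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            t += 1
            step = learning_rate(t)
            vw = momentum * vw - step * gw  # classical momentum (an assumption)
            vb = momentum * vb - step * gb
            w, b = w + vw, b + vb
        error = mse(w, b, valid_rows)
        if error < best_error:  # keep the best weights seen on validation data
            best_error, best_w, best_b, stale = error, w, b, 0
        else:
            stale += 1
            if stale >= patience:  # no improvement for `patience` epochs: stop
                break
    return best_w, best_b, best_error, epochs_run

rng = random.Random(42)
rows = [(x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1))
        for x in (rng.uniform(-1.0, 1.0) for _ in range(300))]
train_rows, valid_rows = rows[:200], rows[200:]
w, b, error, epochs_run = train(train_rows, valid_rows)
print(f"stopped after {epochs_run} epochs: w={w:.2f} b={b:.2f} validation MSE={error:.4f}")
```

Because early stopping picks the number of epochs using the validation set, that number is itself a tuned hyperparameter, and a separate test set is still needed for an unbiased estimate.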

Model Hyperparameters

Model hyperparameters are then introduced, again sprinkled with recommendations.

They are:

- Number of Nodes. Control over the capacity of the model; use larger models with regularization.

- Weight Regularization. Penalize models with large weights; try L2 generally or L1 for sparsity.

- Activity Regularization. Penalize model for large activations; try L1 for sparse representations.

- Activation Function. Used as the output of nodes in hidden layers; use sigmoidal functions (logistic and tanh) or rectifier (now the standard).

- Weight Initialization. The starting point for the optimization process; influenced by activation function and size of the prior layer.

- Random Seeds. Stochastic nature of optimization process; average models from multiple runs.

- Preprocessing. Prepare data prior to modeling; at least standardize and remove correlations.
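
Two of these items are easy to show in code: standardizing inputs, and scaling the initial weights by layer size and activation function. The sketch below uses plain Python. The uniform range `sqrt(6 / (fan_in + fan_out))` is a widely used heuristic of this kind, and the factor of 4 for the logistic sigmoid is an assumption of this example rather than something stated in the list above; `standardize` and `init_weights` are hypothetical helper names.

```python
import math
import random

def standardize(column):
    """Rescale one input variable to zero mean and unit variance."""
    mean = sum(column) / len(column)
    variance = sum((v - mean) ** 2 for v in column) / len(column)
    std = math.sqrt(variance) or 1.0  # guard against constant columns
    return [(v - mean) / std for v in column]

def init_weights(fan_in, fan_out, activation="tanh", seed=0):
    """Uniform initial weights whose range shrinks as the layers get wider."""
    bound = math.sqrt(6.0 / (fan_in + fan_out))
    if activation == "sigmoid":
        bound *= 4.0  # assumed adjustment for the logistic sigmoid
    rng = random.Random(seed)
    return [[rng.uniform(-bound, bound) for _ in range(fan_out)]
            for _ in range(fan_in)]

heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
print("standardized:", [round(v, 3) for v in standardize(heights_cm)])

weights = init_weights(fan_in=100, fan_out=50)
largest = max(abs(v) for row in weights for v in row)
print("largest initial weight magnitude:", round(largest, 4))
```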

Configuring the number of nodes in a layer is challenging and perhaps one of the most asked questions by beginners. He suggests that using the same number of nodes in each hidden layer might be a good starting point.

In a large comparative study, we found that using the same size for all layers worked generally better or the same as using a decreasing size (pyramid-like) or increasing size (upside down pyramid), but of course this may be data-dependent.

He also recommends using an overcomplete configuration for the first hidden layer.

For most tasks that we worked on, we find that an overcomplete (larger than the input vector) first hidden layer works better than an undercomplete one.

Given the focus on layer-wise training and autoencoders, the sparsity of the representation (the output of hidden layers) was a focus at the time. Hence the recommendation to use activity regularization, which may still be useful in larger encoder-decoder models.

Sparse representations may be advantageous because they encourage representations that disentangle the underlying factors of representation.

At the time, the rectified linear activation function was just beginning to be used and had not been widely adopted. Today, the rectifier (ReLU) is the standard, given that models using it readily outperform models using logistic or hyperbolic tangent nonlinearities.

Tuning Hyperparameters

The default configurations do well for most neural networks on most problems.

Nevertheless, hyperparameter tuning is required to get the most out of a given model on a given dataset.

Tuning hyperparameters can be challenging both because of the computational resources required and because it can be easy to overfit the validation dataset, resulting in misleading findings.

One has to think of hyperparameter selection as a difficult form of learning: there is both an optimization problem (looking for hyper-parameter configurations that yield low validation error) and a generalization problem: there is uncertainty about the expected generalization after optimizing validation performance, and it is possible to overfit the validation error and get optimistically biased estimators of performance when comparing many hyper-parameter configurations.

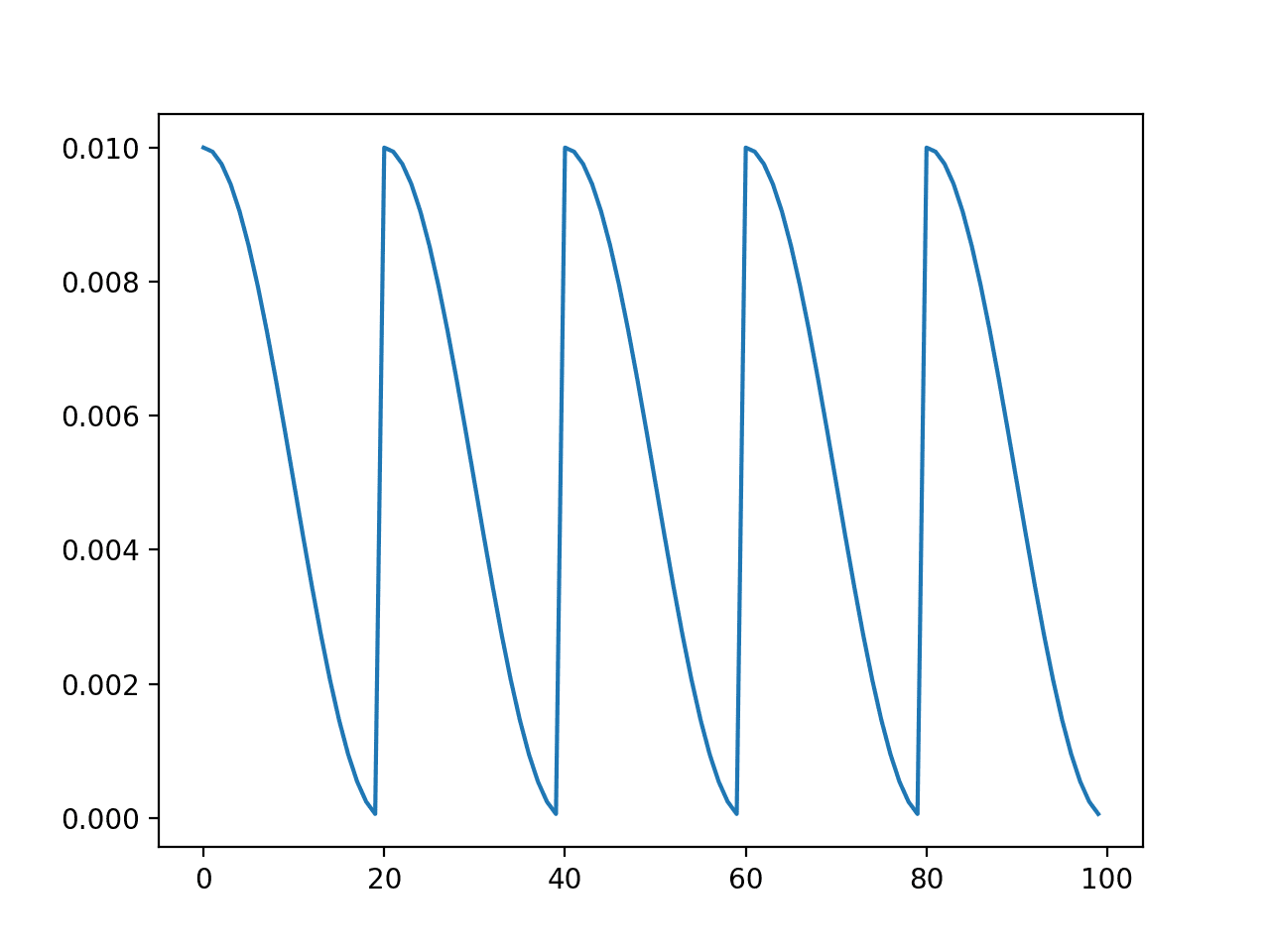

Tuning one hyperparameter for a model and plotting the results often produces a U-shaped curve: poor performance, then good performance, then poor performance again (e.g. when minimizing loss or error). The goal is to find the bottom of the “U.”

The problem is, many hyperparameters interact and the bottom of the “U” can be noisy.

Although to first approximation we expect a kind of U-shaped curve (when considering only a single hyper-parameter, the others being fixed), this curve can also have noisy variations, in part due to the use of finite data sets.

To aid in this search, he then provides three valuable tips to consider generally when tuning model hyperparameters:

- Best value on the border. Consider expanding the search if a good value is found on the edge of the interval searched.

- Scale of values considered. Consider searching on a log scale, at least at first (e.g. 0.1, 0.01, 0.001, etc.).

- Computational considerations. Consider giving up fidelity of the result in order to accelerate the search.

Three systematic hyperparameter search strategies are suggested:

- Coordinate Descent. Dial-in each hyperparameter one at a time.

- Multi-Resolution Search. Iteratively zoom in the search interval.

- Grid Search. Define an n-dimensional grid of values and test each in turn.

These strategies can be used separately or even combined.
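
As an example of the first strategy, here is a minimal coordinate descent sketch in plain Python. The `evaluate` function is a hypothetical stand-in for "train a model and return its validation error", and the candidate values are illustrative.

```python
import math

def evaluate(config):
    """Hypothetical validation error; in practice this would train a model."""
    return ((math.log10(config["learning_rate"]) + 2) ** 2
            + (config["momentum"] - 0.9) ** 2
            + (math.log2(config["batch_size"]) - 5) ** 2 / 10)

def coordinate_descent(evaluate, candidates, start, sweeps=2):
    """Tune one hyperparameter at a time while holding the others fixed."""
    config = dict(start)
    for _ in range(sweeps):
        for name, values in candidates.items():
            config[name] = min(values, key=lambda v: evaluate({**config, name: v}))
    return config

candidates = {
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
    "momentum": [0.0, 0.5, 0.9, 0.99],
    "batch_size": [16, 32, 64, 128],
}
start = {"learning_rate": 0.1, "momentum": 0.0, "batch_size": 128}
best = coordinate_descent(evaluate, candidates, start)
print("selected configuration:", best)
```

More than one sweep is used because hyperparameters interact: the best value for one may shift after another has been changed.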

The grid search is perhaps the most commonly understood and widely used method for tuning model hyperparameters. It is exhaustive, but parallelizable, a benefit that can be exploited using cheap cloud computing infrastructure.

The advantage of the grid search, compared to many other optimization strategies (such as coordinate descent), is that it is fully parallelizable.

Often, the process is repeated via iterative grid searches, combining the multi-resolution and grid search.

Typically, a single grid search is not enough and practitioners tend to proceed with a sequence of grid searches, each time adjusting the ranges of values considered based on the previous results obtained.
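
A sequence of grid searches over one hyperparameter might look like the sketch below, which searches on a log scale, widens the interval when the best value lands on a border, and zooms in otherwise. The `validation_error` function is a hypothetical U-shaped stand-in for real training runs, and the widening rule is just one simple choice.

```python
import math

def validation_error(lr):
    """Hypothetical stand-in for training a model; U-shaped in log10(lr)."""
    return (math.log10(lr) + 2.3) ** 2 + 0.1

def log_grid(low, high, points):
    """Values evenly spaced in log10 between low and high, inclusive."""
    lo, hi = math.log10(low), math.log10(high)
    return [10 ** (lo + i * (hi - lo) / (points - 1)) for i in range(points)]

def multi_resolution_search(evaluate, low, high, rounds=6, points=5):
    best_value, best_error = None, float("inf")
    for _ in range(rounds):
        grid = log_grid(low, high, points)
        errors = [evaluate(v) for v in grid]
        i = min(range(points), key=lambda j: errors[j])
        if errors[i] < best_error:
            best_value, best_error = grid[i], errors[i]
        if i == 0:  # best value on the lower border: extend the interval downward
            low, high = low * low / high, grid[1]
        elif i == points - 1:  # best value on the upper border: extend upward
            low, high = grid[-2], high * high / low
        else:  # interior best: zoom in around it
            low, high = grid[i - 1], grid[i + 1]
    return best_value, best_error

best_lr, best_error = multi_resolution_search(validation_error, low=0.1, high=1.0)
print(f"best learning rate found: {best_lr:.5f} (error {best_error:.4f})")
```

The starting interval here deliberately misses the optimum, so the first rounds expand the search before later rounds refine it.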

He also suggests keeping a human in the loop to keep an eye out for bugs and use pattern recognition to identify trends and change the shape of the search space.

Humans can get very good at performing hyperparameter search, and having a human in the loop also has the advantage that it can help detect bugs or unwanted or unexpected behavior of a learning algorithm.

Nevertheless, it is important to automate as much as possible to ensure the process is repeatable for new problems and models in the future.

The grid search is exhaustive and slow.

A serious problem with the grid search approach to find good hyper-parameter configurations is that it scales exponentially badly with the number of hyperparameters considered.
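
A quick count shows how fast the grid grows. The snippet below is only an arithmetic illustration, assuming five candidate values per hyperparameter; `grid_size` is a hypothetical helper.

```python
from itertools import product

def grid_size(values_per_hyperparameter, n_hyperparameters):
    """Count the configurations in a full grid by enumerating them."""
    grid = product(range(values_per_hyperparameter), repeat=n_hyperparameters)
    return sum(1 for _ in grid)

for n in (1, 2, 4, 6):
    print(n, "hyperparameters ->", grid_size(5, n), "configurations")
```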

He suggests using a random sampling strategy, which has been shown to be effective. The interval of each hyperparameter can be searched uniformly. This distribution can be biased by including priors, such as the choice of sensible defaults.

The idea of random sampling is to replace the regular grid by a random (typically uniform) sampling. Each tested hyper-parameter configuration is selected by independently sampling each hyper-parameter from a prior distribution (typically uniform in the log-domain, inside the interval of interest).
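
Random sampling of this kind can be sketched in plain Python as follows. The ranges, the choice of hyperparameters, and the `toy_error` function are assumptions for illustration; in practice each evaluation would train a model and return its validation error.

```python
import math
import random

def sample_log_uniform(rng, low, high):
    """Draw a value whose log10 is uniform on [log10(low), log10(high)]."""
    return 10 ** rng.uniform(math.log10(low), math.log10(high))

def sample_configuration(rng):
    """Sample each hyperparameter independently from its own prior."""
    return {
        "learning_rate": sample_log_uniform(rng, 1e-5, 1e-1),
        "hidden_units": round(sample_log_uniform(rng, 16, 1024)),
        "batch_size": rng.choice([16, 32, 64, 128]),
    }

def random_search(evaluate, trials=30, seed=0):
    """Evaluate a fixed budget of random configurations and keep the best."""
    rng = random.Random(seed)
    configs = [sample_configuration(rng) for _ in range(trials)]
    return min(configs, key=evaluate), configs

def toy_error(config):
    """Hypothetical validation error; replace with real training."""
    return ((math.log10(config["learning_rate"]) + 2) ** 2
            + (math.log2(config["hidden_units"]) - 8) ** 2 / 16)

best, configs = random_search(toy_error)
print("best of", len(configs), "random configurations:", best)
```

Unlike a grid, the budget (`trials`) is chosen directly and does not grow with the number of hyperparameters, and every trial is independent, so the search remains fully parallelizable.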

The paper ends with more general recommendations, including techniques for debugging the learning process, speeding up training with GPU hardware, and remaining open questions.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Neural Networks: Tricks of the Trade, First Edition, 1999.

- Neural Networks: Tricks of the Trade, Second Edition, 2012.

- Practical Recommendations for Gradient-Based Training of Deep Architectures, Preprint, 2012.

- Deep Learning, 2016.

- Automatic Differentiation, Wikipedia.

Summary

In this post, you discovered the salient recommendations, tips, and tricks from Yoshua Bengio’s 2012 paper titled “Practical Recommendations for Gradient-Based Training of Deep Architectures.”

Have you read this paper? What were your thoughts?

Let me know in the comments below.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi,

I think that the following definitions are swapped:

“Stochastic (Online) Gradient Descent. Gradient is estimated using subsets of samples in the training dataset.

Mini-Batch Gradient Descent. Gradient is estimated using each single pattern in the training dataset.”

Great article, as always Jason, thank you.

You’re right, thanks for that! Fixed.

what is the use of gradient descent and in what condition do we use it?

Perhaps start here:

https://machinelearningmastery.com/gradient-descent-for-machine-learning/

And here:

https://machinelearningmastery.com/gentle-introduction-mini-batch-gradient-descent-configure-batch-size/

I think, ‘Stochastic (Online) Gradient Descent’ and ‘Mini-Batch Gradient Descent’ titles might better be interchanged.

Fixed.

Hi Jason, is there any good literature on using TPE or Bayes to optimize the hyperparameters of a Neural Network?

I’ve had a lot of success using Hyperopt on XGBoost, but implementing that with Tensorflow 2.3 is causing a ton of headaches with deprecation and incompatibility between hyperopt, sklearn, and tensorflow.

Thanks!

Good question, I’m not sure off hand other than architecture search with autokeras:

https://machinelearningmastery.com/autokeras-for-classification-and-regression/