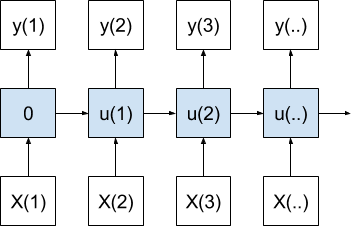

Sequence prediction is different from traditional classification and regression problems.

It requires that you take the order of observations into account and that you use models like Long Short-Term Memory (LSTM) recurrent neural networks that have memory and that can learn any temporal dependence between observations.

To learn how to use LSTMs on sequence prediction problems, you need to apply them to a suite of well-defined problems that let you focus on different problem types and framings. This is critical for building your intuition about how sequence prediction problems differ from other problems and how sophisticated models like LSTMs can be used to address them.

In this tutorial, you will discover a suite of 5 narrowly defined and scalable sequence prediction problems that you can use to apply and learn more about LSTM recurrent neural networks.

After completing this tutorial, you will know:

- Simple memorization tasks to test the learned memory capability of LSTMs.

- Simple echo tasks to test the learned temporal dependence capability of LSTMs.

- Simple arithmetic tasks to test the interpretation capability of LSTMs.


Let’s get started.

5 Examples of Simple Sequence Prediction Problems for Learning LSTM Recurrent Neural Networks

Photo by Geraint Otis Warlow, some rights reserved.

Tutorial Overview

This tutorial is divided into 5 sections; they are:

- Sequence Learning Problem

- Value Memorization

- Echo Random Integer

- Echo Random Subsequences

- Sequence Classification

Properties of Problems

The sequence problems were designed with a few properties in mind:

- Narrow. To focus on one aspect of the sequence prediction, such as memory or function approximation.

- Scalable. To be made more or less difficult along the chosen narrow focus.

- Reframed. Two or more framings of each problem are presented to support the exploration of different algorithm learning capabilities.

I tried to provide a mixture of narrow focuses, problem difficulties, and required network architectures.

If you have ideas for further extensions or similarly carefully designed problems, please let me know in the comments below.


1. Sequence Learning Problem

In this problem, a sequence of contiguous real values between 0.0 and 1.0 is generated. Given one or more time steps of past values, the model must predict the next item in the sequence.

We can generate this sequence directly, as follows:

```python
from numpy import array

# generate a sequence of real values between 0 and 1.
def generate_sequence(length=10):
    return array([i/float(length) for i in range(length)])

print(generate_sequence())
```

Running this example prints the generated sequence:

```
[ 0. 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9]
```

This could be framed as a memorization challenge where given the observation at the previous time step, the model must predict the next value:

```
X (samples),    y
0.0,            0.1
0.1,            0.2
0.2,            0.3
...
```

The network could memorize the input-output pairs, which is quite boring, but would demonstrate the function approximation capability of the network.
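As a minimal sketch of this one-step framing, the samples can be built by offsetting the sequence against itself by one position. The helper `one_step_pairs()` is a hypothetical name introduced here for illustration; it is not part of the original tutorial code.

```python
from numpy import array

# generate a sequence of real values between 0 and 1 (as above)
def generate_sequence(length=10):
    return array([i / float(length) for i in range(length)])

# hypothetical helper: frame the sequence as one-step samples,
# where the input is the value at t-1 and the output is the value at t
def one_step_pairs(seq):
    X = seq[:-1]
    y = seq[1:]
    return X, y

X, y = one_step_pairs(generate_sequence())
for a, b in zip(X, y):
    print('%.1f, %.1f' % (a, b))
```

Each printed row corresponds to one row of the table above; the last value of the sequence never appears as an input because it has no successor.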

The problem could also be framed with randomly chosen contiguous subsequences as the input time steps and the next value in the sequence as the output.

```
X (timesteps),      y
0.4, 0.5, 0.6,      0.7
0.1, 0.2, 0.3,      0.4
0.3, 0.4, 0.5,      0.6
...
```

This would require the network to learn either to add a fixed value to the last seen observation or to memorize all possible subsequences of the generated problem.

This framing of the problem would be modeled as a many-to-one sequence prediction problem.
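The sketch below shows one way to sample such windows, assuming a fixed window of 3 input time steps as in the table above. The helper `random_windows()` and its `n_in`/`n_samples` parameters are hypothetical. The inputs are reshaped to `[samples, timesteps, features]`, the 3D input layout that Keras LSTM layers expect.

```python
from random import randint
from numpy import array

# generate a sequence of real values between 0 and 1 (as above)
def generate_sequence(length=10):
    return array([i / float(length) for i in range(length)])

# hypothetical helper: sample random contiguous windows of n_in values as
# input time steps, with the value that follows each window as the output
def random_windows(seq, n_in=3, n_samples=5):
    X, y = list(), list()
    for _ in range(n_samples):
        # randint is inclusive, so the window always leaves room for the next value
        start = randint(0, len(seq) - n_in - 1)
        X.append(seq[start:start + n_in])
        y.append(seq[start + n_in])
    # reshape X to [samples, timesteps, features] with a single feature per step
    X = array(X).reshape((n_samples, n_in, 1))
    return X, array(y)

X, y = random_windows(generate_sequence())
print(X.shape, y.shape)
```

Because every target is the last input plus 0.1, a model can solve this framing either by learning that offset or by memorizing the few possible windows, as described above.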

This is an easy problem that tests primitive features of sequence learning. This problem could be solved by a multilayer Perceptron network.

2. Value Memorization

The problem is to remember the first value in the sequence and to repeat it at the end of the sequence.

This problem is based on “Experiment 2” used to demonstrate LSTMs in the 1997 paper “Long Short-Term Memory”.

This can be framed as a one-step prediction problem.

Given one value in the sequence, the model must predict the next value in the sequence. For example, given a value of “0” as an input, the model must predict the value “1”.

Consider the following two sequences of 5 integers:

```
3, 0, 1, 2, 3
4, 0, 1, 2, 4
```

The Python code below generates these two sequences. You could generalize it further if you wish.

```python
# generate a sequence whose first and last values are the same marker value
def generate_sequence(marker, length=5):
    # the middle values are identical in every sequence: 0, 1, 2, ...
    middle = [i for i in range(length - 2)]
    return [marker] + middle + [marker]

# sequence 1
seq1 = generate_sequence(3)
print(seq1)
# sequence 2
seq2 = generate_sequence(4)
print(seq2)
```

Running the example generates and prints the above two sequences.

```
[3, 0, 1, 2, 3]
[4, 0, 1, 2, 4]
```

The integers could be normalized, or more preferably one hot encoded.
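A minimal one hot encoding sketch follows, assuming the integers lie in the range 0 to `n_unique - 1`. The function names `one_hot_encode()` and `one_hot_decode()` are illustrative choices, not a library API.

```python
from numpy import argmax, zeros

# one hot encode a sequence of integers in [0, n_unique)
def one_hot_encode(sequence, n_unique):
    encoding = zeros((len(sequence), n_unique))
    for i, value in enumerate(sequence):
        encoding[i, value] = 1
    return encoding

# decode each one hot row back to its integer
def one_hot_decode(encoded):
    return [int(argmax(row)) for row in encoded]

# the two example sequences use the integers 0-4, so 5 columns are needed
encoded = one_hot_encode([3, 0, 1, 2, 3], 5)
print(encoded)
print(one_hot_decode(encoded))
```

One hot vectors avoid implying that, for example, a “4” is “closer” to a “3” than to a “0”, which a normalized integer input would suggest.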

The patterns introduce a wrinkle in that there is conflicting information between the two sequences, and the model must know the context of each one-step prediction (i.e. the sequence it is currently predicting) in order to correctly predict each full sequence.

We can see that the first value of the sequence is repeated as the last value of the sequence. This is the indicator that provides context to the model as to which sequence it is working on.

The conflict is the transition from the second-to-last item to the last item in each sequence. In sequence one, a “2” is given as input and a “3” must be predicted, whereas in sequence two, a “2” is given as input and a “4” must be predicted.

```
Sequence 1:

X (samples),    y
...
1,              2
2,              3

Sequence 2:

X (samples),    y
...
1,              2
2,              4
```

This wrinkle is important to prevent the model from memorizing each single-step input-output pair of values in each sequence, as a sequence-unaware model may be inclined to do.

This framing would be modeled as a one-to-one sequence prediction problem.

This is a problem that a multilayer Perceptron and other non-recurrent neural networks cannot learn. The first value in the sequence must be remembered across multiple samples.
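The conflict can be made concrete with a short sketch that frames both sequences as one-step samples and looks for inputs that map to different outputs. The helpers `make_sequence()` and `one_step_samples()` are hypothetical names used only for this illustration.

```python
# hypothetical helper: the marker value appears first and last
def make_sequence(marker, length=5):
    return [marker] + list(range(length - 2)) + [marker]

# hypothetical helper: frame a sequence as one-step samples (input at t, output at t+1)
def one_step_samples(seq):
    return [(seq[i], seq[i + 1]) for i in range(len(seq) - 1)]

pairs1 = one_step_samples(make_sequence(3))
pairs2 = one_step_samples(make_sequence(4))
print(pairs1)
print(pairs2)

# collect (input, output in sequence 1, output in sequence 2) where the same
# input leads to different outputs; a model that only sees the current input
# cannot get both of these right
conflicts = [(x1, y1, y2) for (x1, y1), (x2, y2) in zip(pairs1, pairs2)
             if x1 == x2 and y1 != y2]
print(conflicts)
```

The only conflict is the input “2”, which must map to “3” in one sequence and “4” in the other; resolving it requires remembering the first value.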

This problem could be framed as providing the entire sequence except the last value as input time steps and predicting the final value.

```
X (timesteps),      y
3, 0, 1, 2,         3
4, 0, 1, 2,         4
```

Each time step is still shown to the network one at a time, but the network must remember the value at the first time step. The difference is that, via backpropagation through time, the network can better learn to tell the sequences apart, even when they are long.

This framing of the problem would be modeled as a many-to-one sequence prediction problem.
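A minimal sketch of preparing data for this many-to-one framing: every value except the last becomes an input time step and the last value becomes the target, with both one hot encoded. The helpers `make_sequence()` and `one_hot_encode()` are hypothetical, and the `[samples, timesteps, features]` layout is the 3D input shape Keras LSTM layers expect.

```python
from numpy import array, zeros

# hypothetical helper: the marker value appears first and last
def make_sequence(marker, length=5):
    return [marker] + list(range(length - 2)) + [marker]

# hypothetical helper: one hot encode integers in [0, n_unique)
def one_hot_encode(sequence, n_unique):
    encoding = zeros((len(sequence), n_unique))
    for i, value in enumerate(sequence):
        encoding[i, value] = 1
    return encoding

sequences = [make_sequence(3), make_sequence(4)]
# input time steps: every value except the last; output: the last value
X = array([one_hot_encode(seq[:-1], 5) for seq in sequences])
y = array([one_hot_encode([seq[-1]], 5)[0] for seq in sequences])
# X is [samples, timesteps, features]: 2 sequences, 4 time steps, 5 one hot features
print(X.shape, y.shape)
```

Only the first time step differs between the two samples, so the model must carry that information across the remaining steps to predict the target.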

Again, this problem could not be learned by a multilayer Perceptron.

3. Echo Random Integer

In this problem, random sequences of integers are generated. The model must remember an integer at a specific lag time and echo it at the end of the sequence.

For example, a random sequence of 10 integers may be:

```
5, 3, 2, 1, 9, 9, 2, 7, 1, 6
```

The problem may be framed as echoing the value at the 5th time step, in this case 9.

The code below will generate random sequences of integers.

```python
from random import randint

# generate a sequence of random numbers in [0, 99]
def generate_sequence(length=10):
    return [randint(0, 99) for _ in range(length)]

print(generate_sequence())
```

Running the example will generate and print a random sequence, such as:

```
[47, 69, 76, 9, 71, 87, 8, 16, 32, 81]
```

The integers can be normalized, but more preferably a one hot encoding can be used.

A simple framing of this problem is to echo the current input value.

```
yhat(t) = f(X(t))
```

For example:

```
X (timesteps),      y
5, 3, 2, 1, 9,      9
```

This trivial problem can easily be solved by a multilayer Perceptron and could be used for calibration or diagnostics of a test harness.

A more challenging framing of the problem is to echo the value at the previous time step.

```
yhat(t) = f(X(t-1))
```

For example:

```
X (timesteps),      y
5, 3, 2, 1, 9,      1
```

This is a problem that cannot be solved by a multilayer Perceptron.

The index to echo can be pushed further back in time, putting more demand on the LSTM's memory.

Unlike the “Value Memorization” problem above, a new sequence would be generated each training epoch. This would require that the model learn a general echo solution rather than memorize a specific sequence or sequences of random numbers.

In both cases, the problem would be modeled as a many-to-one sequence prediction problem.
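Both framings can be covered by one sketch with a lag parameter: `lag=0` echoes the current input and `lag=1` echoes the previous time step. The helper `echo_sample()` and its `lag` parameter are hypothetical names chosen for this illustration.

```python
from random import randint

# generate a sequence of random numbers in [0, 99]
def generate_sequence(length=10):
    return [randint(0, 99) for _ in range(length)]

# hypothetical helper: the output is the value lag steps before the last time step
# (lag=0 echoes the current input, lag=1 echoes the previous one, and so on)
def echo_sample(length=10, lag=1):
    X = generate_sequence(length)
    y = X[-1 - lag]
    return X, y

# a new random sample on every call, so a model cannot memorize one fixed sequence
X, y = echo_sample(length=5, lag=1)
print(X, y)
```

For the example sequence 5, 3, 2, 1, 9, a lag of 1 selects the “1”, matching the table above. Increasing the lag pushes the echoed value further back in time.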

4. Echo Random Subsequences

This problem also involves the generation of random sequences of integers.

Instead of echoing a single previous time step as in the previous problem, this problem requires the model to remember and output a partial sub-sequence of the input sequence.

The simplest framing would be the echo problem from the previous section. Instead, we will focus on a sequence output where the simplest framing is for the model to remember and output the whole input sequence.

For example:

```
X (timesteps),      y
5, 3, 2, 4, 1,      5, 3, 2, 4, 1
```

This could be modeled as a many-to-one sequence prediction problem where the whole output sequence is emitted after the last value of the input sequence has been read.

This can also be modeled as the network outputting one value for each input time step, i.e. a one-to-one model.

A more challenging framing is to output a partial contiguous subsequence of the input sequence.

For example:

```
X (timesteps),      y
5, 3, 2, 4, 1,      5, 3, 2
```

This is more challenging because the number of inputs does not match the number of outputs. A many-to-many model of this problem would require a more advanced architecture such as the encoder-decoder LSTM.
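A minimal data-generation sketch for both framings follows, assuming (as in the example above) that the output subsequence starts at the first time step. The helper `echo_subsequence_sample()` and its `n_out` parameter are hypothetical; setting `n_out` equal to the sequence length gives the whole-sequence echo.

```python
from random import randint

# generate a sequence of random integers in [0, 99] (as in the previous section)
def generate_sequence(length=10):
    return [randint(0, 99) for _ in range(length)]

# hypothetical helper: y is the first n_out input values
def echo_subsequence_sample(length=5, n_out=3):
    X = generate_sequence(length)
    y = X[:n_out]
    return X, y

X, y = echo_subsequence_sample(length=5, n_out=5)
print('whole:', X, y)
X, y = echo_subsequence_sample(length=5, n_out=3)
print('partial:', X, y)
```

When `n_out` is smaller than the input length, the input and output lengths differ, which is what motivates an encoder-decoder architecture.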

Again, a one hot encoding would be preferred, although the problem could be modeled as normalized integer values.

5. Sequence Classification

The problem is defined as a sequence of random values between 0 and 1. This sequence is taken as input for the problem with each number provided one per timestep.

A binary label (0 or 1) is associated with each input. The output values are initially all 0. Once the cumulative sum of the input values in the sequence exceeds a threshold, the output value flips from 0 to 1.

A threshold of 1/4 the sequence length is used.

For example, below is a sequence of 10 input timesteps (X):

```
0.63144003 0.29414551 0.91587952 0.95189228 0.32195638 0.60742236 0.83895793 0.18023048 0.84762691 0.29165514
```

The corresponding classification output (y) would be:

```
0 0 0 1 1 1 1 1 1 1
```

We can implement this in Python.

```python
from random import random
from numpy import array
from numpy import cumsum

# create a sequence classification instance
def get_sequence(n_timesteps):
    # create a sequence of random numbers in [0,1]
    X = array([random() for _ in range(n_timesteps)])
    # calculate cut-off value to change class values
    limit = n_timesteps/4.0
    # determine the class outcome for each item in cumulative sequence
    y = array([0 if x < limit else 1 for x in cumsum(X)])
    return X, y

X, y = get_sequence(10)
print(X)
print(y)
```

Running the example generates a random input sequence and calculates the corresponding output sequence of binary values.

```
[ 0.31102339  0.66591885  0.7211718   0.78159441  0.50496384  0.56941485
  0.60775583  0.36833139  0.180908    0.80614878]
[0 0 0 0 1 1 1 1 1 1]
```

This is a sequence classification problem that can be modeled as one-to-one. State is required to interpret past time steps to correctly predict when the output sequence flips from 0 to 1.
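To train on many instances, the sequences can be stacked into 3D arrays in which every input time step has a matching output time step. This is a sketch under the assumption that the model returns one prediction per time step (for example, a Keras `LSTM` layer with `return_sequences=True`); the helper `get_sequences()` is a hypothetical name.

```python
from random import random
from numpy import array, cumsum

# same generator as above
def get_sequence(n_timesteps):
    X = array([random() for _ in range(n_timesteps)])
    limit = n_timesteps / 4.0
    y = array([0 if x < limit else 1 for x in cumsum(X)])
    return X, y

# hypothetical helper: stack n_samples instances into [samples, timesteps, 1]
# arrays for both inputs and outputs (one output per input time step)
def get_sequences(n_samples, n_timesteps):
    pairs = [get_sequence(n_timesteps) for _ in range(n_samples)]
    X = array([x for x, _ in pairs]).reshape((n_samples, n_timesteps, 1))
    y = array([t for _, t in pairs]).reshape((n_samples, n_timesteps, 1))
    return X, y

X, y = get_sequences(100, 10)
print(X.shape, y.shape)
```

Because the label at each step depends on the running sum of all earlier inputs, a per-step model needs internal state to get the position of the flip right.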

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Long Short-Term Memory, 1997

- How to use Different Batch Sizes for Training and Predicting in Python with Keras

- Demonstration of Memory with a Long Short-Term Memory Network in Python

- How to Learn to Echo Random Integers with Long Short-Term Memory Recurrent Neural Networks

- How to use an Encoder-Decoder LSTM to Echo Sequences of Random Integers

- How to Develop a Bidirectional LSTM For Sequence Classification in Python with Keras

Summary

In this tutorial, you discovered a suite of carefully designed contrived sequence prediction problems that you can use to explore the learning and memory capabilities of LSTM recurrent neural networks.

Specifically, you learned:

- Simple memorization tasks to test the learned memory capability of LSTMs.

- Simple echo tasks to test the learned temporal dependence capability of LSTMs.

- Simple arithmetic tasks to test the interpretation capability of LSTMs.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thanks a lot for your valuable insights on LSTMs. Your blogs have been a great learning platform.

I have lately been trying different architectures using LSTMs for time series forecasting.

In Keras, the default shape of the input tensor is [batch size, timesteps, features].

Do you think the lags (or percent changes over lags) should be passed as features or as timesteps when reshaping the input tensor?

For example, assume we are using the lags (or percent changes over lags) for the past 4 days as features (assuming no other features are discovered).

With 5 inputs and 1 output,

should it be modeled as [1, 5] or [5, 1]?

That is, should the input tensor shape be [batch size, 1, 5] (the current day's value plus the past 4 days' values) or

[batch size, 5, 1]?

At a higher level, do you think LSTMs learn better across time steps or across features within a time step?

Thanks

Great question. A good general answer is to brainstorm and then try/bake-off everything in terms of model skill.

In terms of normative use, I would encourage you to treat one sample as one series of many time steps, with one or more features at each time step.

Also, I have found LSTMs to be not super great at autoregression tasks. Please baseline performance with a well-tuned MLP.

Does that help?

Thanks for your feedback. I have been testing multiple architecture settings (still working). I wish I had more features.

LSTMs and other time series methods (ARIMA, ETS, HW) are working great in a many-to-one input-output setting. However, they have failed miserably for long-term forecasts.

I am eagerly waiting for your blog post on Bayesian hyperparameter optimization.

(P.S. I have more than 1,000 nets to optimize.)

Thanks a lot ! 🙂

Wow, that is a lot of nets!

Error accumulates over longer forecast periods. The problem is hard.

I have to implement an ANN algorithm that produces an estimate of unknown state variables using their uncertainties. Basically, it should be divided into 2 steps: in the prediction step, an estimate of the current state is created based on the state at the previous time step. In the update step, the measurement information from the current time step is used to produce a new, more accurate estimate of the state. So, if I consider my system as a black box, what I have is:

Input U = Control vector, which indicates the magnitude of the control system's influence on the situation

Input Z = Measurement vector, which contains the real-world measurement we received in a time step

Output Xn = Newest estimate of the current “true” state

Output Pn = Newest estimate of the average error for each part of the state

The algorithm must rely on an RNN architecture, more specifically an LSTM. What I am struggling with is how to map these inputs/outputs to those of an LSTM structure. How can I feed the network so that it fits my state estimation problem?

Thanks for any kind of help!

Perhaps this post will help you prepare your data:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/

I would suggest using a Kalman filter or a particle filter. Take a look at the Unscented Kalman Filter; it works well with nonlinear systems and it's fast enough.

Thanks.

Thanks for the great resources on your blogs.

Here I have a problem that I don't know how to solve with a neural network. Suppose there is a dictionary containing many single words. I have many meaningful phrases that are combinations of words from the dictionary. Given a set of words (from the dictionary), how could I train a neural network to combine those words (adjust their order) and generate meaningful phrases? How should I model the input and output?

I would really appreciate any suggestions.

Perhaps this framework will help you define your problem as a supervised learning problem:

https://machinelearningmastery.com/how-to-define-your-machine-learning-problem/

I am working with data that requires classifying if a patient will develop cancer or not in the future, based on medical tests done over time. The tests have a sequential relationship. A, then B, then C, etc. For example:

| Patient ID | Test ID | RBC Count | WBC Count | Label |
|---|---|---|---|---|
| 1 | A | 4.2 | 7000 | 0 |
| 1 | B | 5.3 | 12000 | 0 |
| 1 | C | 2.4 | 15000 | 1 |
| 2 | A | 7.6 | 8000 | 0 |
| 2 | A | 7.4 | 7500 | 0 |

The tests are not taken at regular time intervals, so this may not be considered time series data. I have tried aggregating features and using ensemble methods like Random Forests. Can I apply an RNN? If so, how?

Perhaps model it as a sequence classification problem?

This post will help with the concepts:

https://machinelearningmastery.com/models-sequence-prediction-recurrent-neural-networks/

Hi. I always enjoy your writing.

I think there is a slight typo in the section 2 example. The first sequence is [3, 1, 2, 3, 3] and the second sequence is [4, 1, 2, 3, 4]. The transitions 1 -> 2 and 2 -> 3 in the first sequence are correct, but the second sequence also contains 1 -> 2 and 2 -> 3. If the goal is to show the difference between the two sequences, I think the correct comparison is 2 -> 3, 3 -> 3 in the first sequence against 2 -> 3, 3 -> 4 in the second. I may have misunderstood, but I'll leave a comment in case it helps.

I believe it is correct.

Each example has 4 input time steps for 1 sample that must output the first value in the sequence, a “3” or “4”.

Oh, I missed this sentence. “The conflict is the transition from the second to last items in each sequence.” I thought it was a problem to predict the very next step. Thank you for your reply.

No problem.

Jason,

Do you have an example of the “contiguous subsequences” or many-to-one model? This sequence:

X (timesteps), y

0.4, 0.5, 0.6, 0.7

0.0, 0.2, 0.3, 0.4

0.3, 0.4, 0.5, 0.6

Thanks in advance

I believe the examples here will help:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

Hi! This is great information on LSTMs. I am working on a pattern recognition problem for a quasi-periodic signal. Would an ANN or an LSTM be more beneficial in this case? It is known that an ANN has no memory concept in its model, which a basic LSTM has. But does pattern recognition actually need any “memorizing concept”, or is simple learning through training enough in this case?

Perhaps test it and see?

Hi, thanks for the great resources! I have a problem that I think may be a sequence prediction question, but I could not connect it to the above examples.

The problem is that I have mixed text, such as a contract with a title. I want to segment it into blocks.

The training dataset:

x: mixed contract text

y: title, chapter1, chapter2, ……, chapterN

The test dataset:

x: mixed contract text.

Predicted y: title, chapter1, chapter2, ……, chapterN.

Could you help me solve it?

Perhaps use a multi-input model, one for the title, one for the content?

Thanks for your reply, but when I make a prediction, I only have one mixed contract text, so I can't split it into title and content as multiple inputs.

I see. Perhaps model the problem based on the data you will have and will require at prediction time.

Thanks for your help!

I found a method to solve this problem on Medium.

https://medium.com/illuin/https-medium-com-illuin-nested-bi-lstm-for-supervised-document-parsing-1e01d0330920.

The problem is known as text segmentation. There are some papers that focus on this problem.

I will try it. Thanks again!

Well done!

Hi Jason:

I have a problem where I am trying to predict whether a stock is going to end up “in the money” or not. In my case, I sell a put option at a strike price, e.g. 10, and the price of the stock is 12 when I sell the option. I want to use a handful of parameters as features. My dependent variable is then Yes or No (in the money or not).

For training data, I have historical option prices, greeks (like delta and theta), implied volatility, stock prices, etc. If I try to predict “in the money” or not, then I suppose I could look at it as a sequence of 1s and 0s starting from the Monday I sell the option: Mon to Tues, Tues to Wed, Wed to Thurs, and Thurs to Friday, where Friday is the expiration date (assuming weekly options).

Another way is to look at the closing prices on each day as part of the sequence.

And another way to look at the sequence is: M, T, W, Th as the first 4 values of the sequence while trying to predict the Friday value (stock price).

Are LSTMs good models for this type of thing? If so, what can you advise more specifically about the model characteristics? Do any readings come to mind as well?

Generally, I don’t have a background in finance:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

It sounds like a time series classification problem and I recommend testing a suite of different models:

https://machinelearningmastery.com/how-to-develop-a-skilful-time-series-forecasting-model/