Attention is becoming increasingly popular in machine learning, but what makes it such an attractive concept? What is the relationship between attention applied in artificial neural networks and its biological counterpart? What components would one expect to form an attention-based system in machine learning?

In this tutorial, you will discover an overview of attention and its application in machine learning.

After completing this tutorial, you will know:

- A brief overview of how attention can manifest itself in the human brain

- The components that make up an attention-based system and how these are inspired by biological attention

Kick-start your project with my book Building Transformer Models with Attention. It provides self-study tutorials with working code to guide you into building a fully-working transformer model that can translate sentences from one language to another...

Let’s get started.

Tutorial Overview

This tutorial is divided into two parts; they are:

- Attention

- Attention in Machine Learning

Attention

Attention is a widely investigated concept that has often been studied in conjunction with arousal, alertness, and engagement with one’s surroundings.

In its most generic form, attention could be described as merely an overall level of alertness or ability to engage with surroundings.

– Attention in Psychology, Neuroscience, and Machine Learning, 2020.

Visual attention is one of the areas most often studied from both the neuroscientific and psychological perspectives.

When a subject is presented with different images, the eye movements that the subject performs can reveal the salient image parts that the subject’s attention is most attracted to. In their review of computational models for visual attention, Itti and Koch (2001) mention that such salient image parts are often characterized by visual attributes, including intensity contrast, oriented edges, corners and junctions, and motion. The human brain attends to these salient visual features at different neuronal stages.

Neurons at the earliest stages are tuned to simple visual attributes such as intensity contrast, colour opponency, orientation, direction and velocity of motion, or stereo disparity at several spatial scales. Neuronal tuning becomes increasingly more specialized with the progression from low-level to high-level visual areas, such that higher-level visual areas include neurons that respond only to corners or junctions, shape-from-shading cues or views of specific real-world objects.

Interestingly, research has also observed that different subjects tend to be attracted to the same salient visual cues.

Research has also discovered several forms of interaction between memory and attention. Because the human brain has a limited memory capacity, selecting which information to store becomes crucial to making the best use of these limited resources. The brain does so by relying on attention: it dynamically stores in memory the information that the subject pays the most attention to.

Attention in Machine Learning

Implementing the attention mechanism in artificial neural networks does not necessarily track the biological and psychological mechanisms of the human brain. Instead, it is the ability to dynamically highlight and use the salient parts of the information at hand, much as the human brain does, that makes attention such an attractive concept in machine learning.

Think of an attention-based system consisting of three components:

- A process that “reads” raw data (such as source words in a source sentence), and converts them into distributed representations, with one feature vector associated with each word position.

- A list of feature vectors storing the output of the reader. This can be understood as a “memory” containing a sequence of facts, which can be retrieved later, not necessarily in the same order, without having to visit all of them.

- A process that “exploits” the content of the memory to sequentially perform a task, at each time step having the ability put attention on the content of one memory element (or a few, with a different weight).

– Page 491, Deep Learning, 2017.

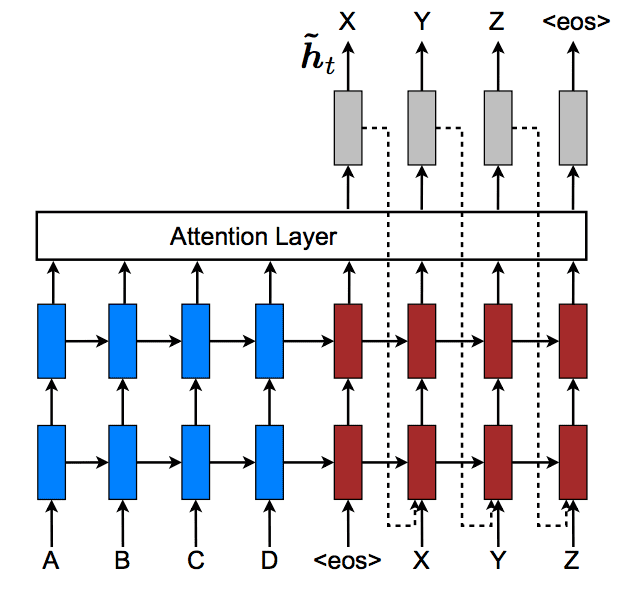

Let’s take the encoder-decoder framework as an example since it is within such a framework that the attention mechanism was first introduced.

If we are processing an input sequence of words, then this will first be fed into an encoder, which will output a vector for every element in the sequence. This corresponds to the first component of our attention-based system, as explained above.
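To make the "reader" step concrete, here is a minimal sketch in NumPy. It is only an illustration: the function `read_sentence`, the toy vocabulary, and the random lookup table are all hypothetical stand-ins for a trained encoder (often a recurrent network whose per-step hidden states would play the role of these vectors). The point is simply that the output holds one feature vector per word position.

```python
import numpy as np

def read_sentence(words, vocab, embeddings):
    """Map each word to its feature vector, giving one row per word position."""
    indices = [vocab[word] for word in words]
    return embeddings[indices]

rng = np.random.default_rng(0)

sentence = ["the", "cat", "sat"]
# Toy vocabulary built from the sentence itself (assumption for illustration).
vocab = {word: i for i, word in enumerate(sorted(set(sentence)))}
# Random 4-dimensional vectors stand in for learned representations.
embeddings = rng.normal(size=(len(vocab), 4))

memory = read_sentence(sentence, vocab, embeddings)
print(memory.shape)  # (3, 4): three word positions, four features each
```

The resulting `memory` array is the list of feature vectors that the attention mechanism will later draw from.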

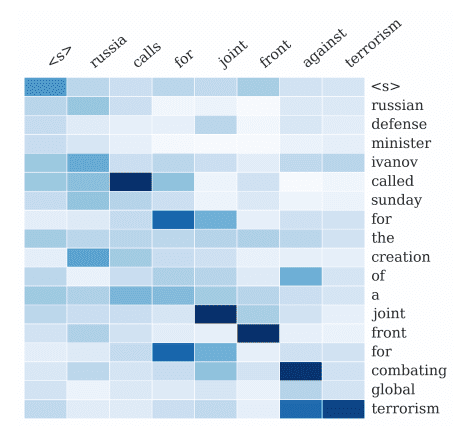

A list of these vectors (the second component of the attention-based system above), together with the decoder’s previous hidden states, will be exploited by the attention mechanism to dynamically highlight which of the input information will be used to generate the output.

At each time step, the attention mechanism takes the previous hidden state of the decoder and the list of encoded vectors and uses them to generate unnormalized score values that indicate how well each element of the input sequence aligns with the current output. Since the score values only make sense relative to one another, they are normalized by passing them through a softmax function to generate the weights. Following the softmax normalization, all the weight values lie in the interval [0, 1] and add up to 1, meaning they can be interpreted as probabilities. Finally, the encoded vectors are scaled by the computed weights and summed to generate a context vector. This attention process forms the third component of the attention-based system above. It is this context vector that is then fed into the decoder to generate a translated output.
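The three steps described here (score, normalize, combine) can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the method of any particular paper: the scores are computed with a plain dot product between the decoder state and each encoded vector, whereas other scoring functions, such as a small learned feed-forward network, are also used. The names `attention_step`, `decoder_state`, and `encoded_vectors` are hypothetical, and the inputs are random placeholders rather than outputs of a trained model.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    exp = np.exp(x - np.max(x))
    return exp / exp.sum()

def attention_step(decoder_state, encoded_vectors):
    """One attention step: score, normalize, and build the context vector.

    decoder_state:   shape (d,),   previous hidden state of the decoder
    encoded_vectors: shape (T, d), one encoder output per input position
    """
    # Unnormalized alignment scores, one per input position
    # (dot-product scoring is an assumption made for this sketch).
    scores = encoded_vectors @ decoder_state
    # Softmax turns the scores into weights in [0, 1] that sum to 1.
    weights = softmax(scores)
    # Context vector: weighted sum of the encoded vectors.
    context = weights @ encoded_vectors
    return weights, context

rng = np.random.default_rng(42)
encoded_vectors = rng.normal(size=(5, 8))  # 5 input positions, 8 features each
decoder_state = rng.normal(size=8)

weights, context = attention_step(decoder_state, encoded_vectors)
print(weights)        # one weight per input position
print(weights.sum())  # approximately 1.0
print(context.shape)  # (8,)
```

Calling `attention_step` again with a different `decoder_state` yields different weights, which is how the mechanism highlights different parts of the input at each output step.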

This type of artificial attention is thus a form of iterative re-weighting. Specifically, it dynamically highlights different components of a pre-processed input as they are needed for output generation. This makes it flexible and context dependent, like biological attention.

– Attention in Psychology, Neuroscience, and Machine Learning, 2020.

The process implemented by a system that incorporates an attention mechanism contrasts with one that does not. In the latter, the encoder would generate a fixed-length vector irrespective of the input’s length or complexity. In the absence of a mechanism that highlights the salient information across the entirety of the input, the decoder would only have access to the limited information that would be encoded within the fixed-length vector. This would potentially result in the decoder missing important information.
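The contrast can be illustrated with a small NumPy sketch. Several assumptions are made for the sake of a short example: the fixed-length vector is taken to be the last encoder output, the attention scores use a simple dot product, and the helper names `fixed_context` and `attention_context` are hypothetical. Random arrays stand in for real encoder outputs and decoder states.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    exp = np.exp(x - np.max(x))
    return exp / exp.sum()

def fixed_context(encoded_vectors):
    """Without attention: a single fixed-length summary of the input.
    Using the last encoder vector is an assumption for this sketch."""
    return encoded_vectors[-1]

def attention_context(decoder_state, encoded_vectors):
    """With attention: a context vector recomputed for each decoder state."""
    weights = softmax(encoded_vectors @ decoder_state)
    return weights @ encoded_vectors

rng = np.random.default_rng(0)
encoded_vectors = rng.normal(size=(6, 4))  # 6 input positions, 4 features each
state_a = rng.normal(size=4)               # decoder state at one time step
state_b = rng.normal(size=4)               # decoder state at a later time step

# The fixed-length vector does not depend on the decoder state at all.
fixed_a = fixed_context(encoded_vectors)
fixed_b = fixed_context(encoded_vectors)
print(np.allclose(fixed_a, fixed_b))  # True

# The attention-based context changes with the decoder state.
attn_a = attention_context(state_a, encoded_vectors)
attn_b = attention_context(state_b, encoded_vectors)
print(np.allclose(attn_a, attn_b))
```

Without attention, every decoding step sees the same summary of the input. With attention, each step can draw on a different mix of all the encoded vectors.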

The attention mechanism was initially proposed to process sequences of words in machine translation, which have an implied temporal aspect to them. However, it can be generalized to process information that can be static and not necessarily related in a sequential fashion, such as in the context of image processing. You will see how this generalization can be achieved in a separate tutorial.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning Essentials, 2018.

- Deep Learning, 2017.

Papers

- Attention in Psychology, Neuroscience, and Machine Learning, 2020.

- Computational Modelling of Visual Attention, 2001.

Summary

In this tutorial, you discovered an overview of attention and its application in machine learning.

Specifically, you learned:

- A brief overview of how attention can manifest itself in the human brain

- The components that make up an attention-based system and how these are inspired by biological attention

Do you have any questions?

Ask your questions in the comments below, and I will do my best to answer.

Many thanks for this wonderful tutorial. It really helps me to understand the concept that I've been struggling to figure out.

Glad to know you like it.

This is really helpful. I’m very much looking forward to an example. Thanks again for all you folks do!

Thank you for your feedback and support Ciaran! We appreciate it greatly!

Somehow missed the links. Got them, thanks!

You are very welcome Ciaran!

I would appreciate it if you could explain it with an icon.

Hi Elibeau…Please elaborate on what would be beneficial to you. We always look forward to suggestions to improve our content.

Thank you! Is there an example of this with python code?

Hi cn…The following may be of interest to you:

https://machinelearningmastery.com/the-attention-mechanism-from-scratch/

It's amazing that machine learning has so many parallels to biology! And even though attention in machine learning isn’t completely analogous to human attention, it seems like there only needs to be a slight alteration to the concept to allow it to work. After reading this tutorial, I understand attention in machine learning much better. Thank you very much for the detailed and clear write-up!

You are very welcome Dallin! We greatly appreciate your feedback and support!

Very helpful, thanks

You are very welcome Amit!

The language is very dense for folks trying to understand the concept for the first time.

Thank you for your feedback Adithya! You may want to go to YouTube and search for the following:

“introduction to attention in machine learning”

This will bring up a few great options to view. Then you can come back to this post and it should make more sense. If it does not, please let us know if we can help clarify anything.