Generative Adversarial Networks, or GANs for short, are a deep learning neural network architecture for training a generator model that produces synthetic images.

A problem with generative models is that there is no objective way to evaluate the quality of the generated images.

As such, it is common to periodically generate and save images during training and to rely on subjective human evaluation of those images, both to assess their quality and to select a final generator model.

Many attempts have been made to establish an objective measure of generated image quality. An early and somewhat widely adopted example of an objective evaluation method for generated images is the Inception Score, or IS.

In this tutorial, you will discover the inception score for evaluating the quality of generated images.

After completing this tutorial, you will know:

- How to calculate the inception score and the intuition behind what it measures.

- How to implement the inception score in Python with NumPy and the Keras deep learning library.

- How to calculate the inception score for small images such as those in the CIFAR-10 dataset.


Let’s get started.

- Update Oct/2019: Updated small bug in inception score for equal distribution example.

How to Implement the Inception Score (IS) From Scratch for Evaluating Generated Images

Photo by alfredo affatato, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- What Is the Inception Score?

- How to Calculate the Inception Score

- How to Implement the Inception Score With NumPy

- How to Implement the Inception Score With Keras

- Problems With the Inception Score

What Is the Inception Score?

The Inception Score, or IS for short, is an objective metric for evaluating the quality of generated images, specifically synthetic images output by generative adversarial network models.

The inception score was proposed by Tim Salimans, et al. in their 2016 paper titled “Improved Techniques for Training GANs.”

In the paper, the authors use a crowd-sourcing platform (Amazon Mechanical Turk) to evaluate a large number of GAN generated images. They developed the inception score as an attempt to remove the subjective human evaluation of images.

The authors found that their scores correlated well with the subjective evaluation.

As an alternative to human annotators, we propose an automatic method to evaluate samples, which we find to correlate well with human evaluation …

— Improved Techniques for Training GANs, 2016.

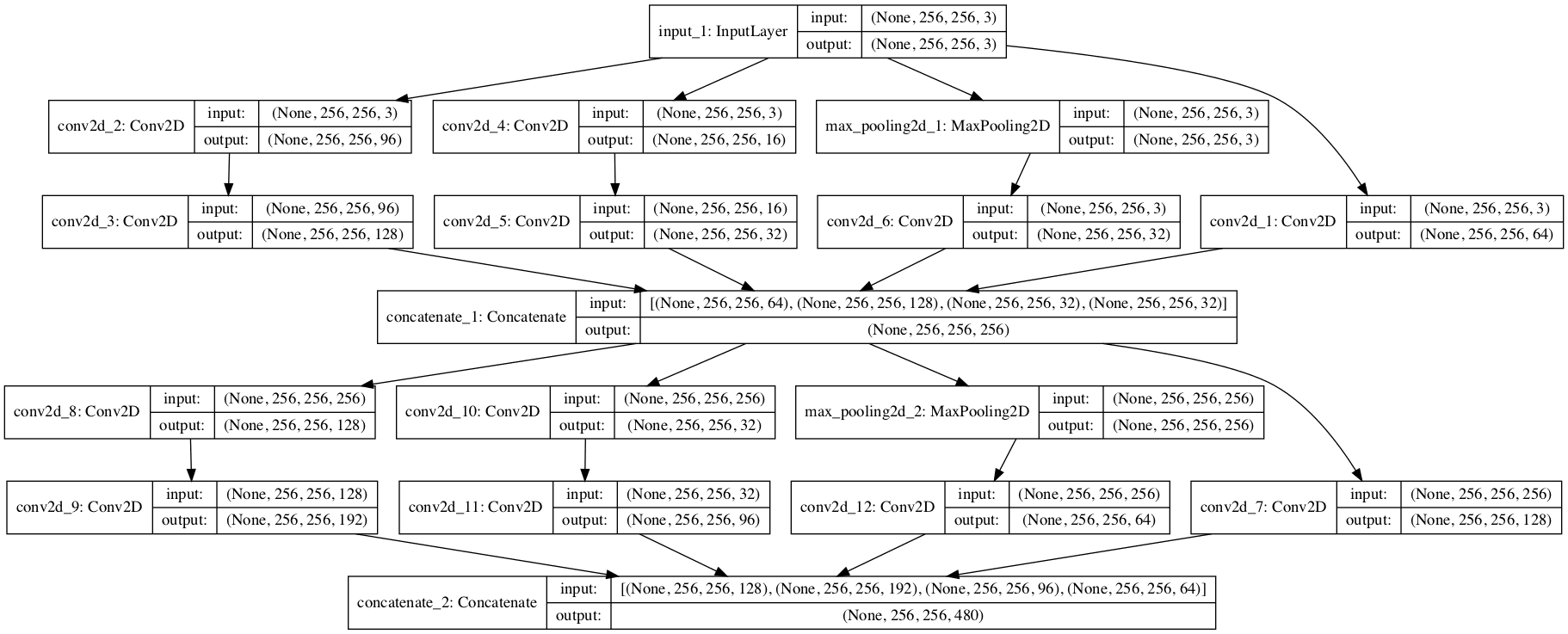

The inception score involves using a pre-trained deep learning neural network model for image classification to classify the generated images. Specifically, it uses the Inception v3 model described by Christian Szegedy, et al. in their 2015 paper titled “Rethinking the Inception Architecture for Computer Vision.” The reliance on the inception model gives the inception score its name.

A large number of generated images are classified using the model. Specifically, the probability of the image belonging to each class is predicted. These predictions are then summarized into the inception score.

The score seeks to capture two properties of a collection of generated images:

- Image Quality. Do images look like a specific object?

- Image Diversity. Is a wide range of objects generated?

The inception score has a lowest value of 1.0 and a highest value of the number of classes supported by the classification model; in this case, the Inception v3 model supports the 1,000 classes of the ILSVRC 2012 dataset, and as such, the highest inception score on this dataset is 1,000.

The CIFAR-10 training dataset is a collection of 50,000 images divided into 10 classes of objects. The original paper that introduces the inception score calculated the score on the real CIFAR-10 training dataset, achieving a result of 11.24 +/- 0.12.

Using the GAN model also introduced in their paper, they achieved an inception score of 8.09 +/- 0.07 when generating synthetic images for this dataset.


How to Calculate the Inception Score

The inception score is calculated by first using a pre-trained Inception v3 model to predict the class probabilities for each generated image.

These are conditional probabilities, i.e. the class label conditional on the generated image. Images that are classified strongly as one class over all other classes indicate a high quality. As such, the conditional probability distribution for each generated image in the collection should have a low entropy.

Images that contain meaningful objects should have a conditional label distribution p(y|x) with low entropy.

— Improved Techniques for Training GANs, 2016.

The entropy is calculated as the negative sum of each observed probability multiplied by the log of the probability. The intuition here is that large probabilities have less information than small probabilities.

- entropy = -sum(p_i * log(p_i))
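To make the entropy calculation concrete, here is a minimal sketch that compares a confident prediction with a uniform one. The probability values and the `entropy()` helper are made up for illustration; the small `eps` term is an assumption added to avoid taking the log of zero.

```python
# compare the entropy of a confident and an uncertain prediction
from numpy import asarray
from numpy import log

# hypothetical helper: negative sum of p * log(p)
def entropy(p, eps=1e-16):
    # eps guards against log(0)
    return -(p * log(p + eps)).sum()

# illustrative class probabilities for a single image
confident = asarray([0.98, 0.01, 0.01])
uncertain = asarray([1/3, 1/3, 1/3])
print('confident: %.3f' % entropy(confident))
print('uncertain: %.3f' % entropy(uncertain))
```

The confident prediction should report a much lower entropy than the uniform one, which sits at the maximum for three classes, log(3).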

The conditional probability captures our interest in image quality.

To capture our interest in a variety of images, we use the marginal probability. This is the probability distribution over class labels across all generated images. We would therefore prefer the marginal probability distribution to have a high entropy.

Moreover, we expect the model to generate varied images, so the marginal ∫ p(y|x = G(z)) dz should have high entropy.

— Improved Techniques for Training GANs, 2016.
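The effect of diversity on the marginal distribution can be sketched with two small, made-up collections of predictions: one where each image belongs to a different class and one where every image looks like the same class. The arrays and the `entropy()` helper below are illustrative assumptions, not values from the paper.

```python
# entropy of the marginal distribution for diverse and collapsed collections
from numpy import asarray
from numpy import log

# hypothetical helper: negative sum of p * log(p)
def entropy(p, eps=1e-16):
    return -(p * log(p + eps)).sum()

# each row is p(y|x) for one image (made-up values)
diverse = asarray([[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9]])
collapsed = asarray([[0.9, 0.05, 0.05], [0.9, 0.05, 0.05], [0.9, 0.05, 0.05]])

for name, p_yx in (('diverse', diverse), ('collapsed', collapsed)):
    # the marginal p(y) is the average of the conditional distributions
    p_y = p_yx.mean(axis=0)
    print(name, p_y, 'entropy: %.3f' % entropy(p_y))
```

The diverse collection has a near-uniform marginal and therefore a high entropy, whereas the collapsed collection has a peaked marginal and a low entropy.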

These elements are combined by calculating the Kullback-Leibler divergence, or KL divergence (relative entropy), between the conditional and marginal probability distributions.

Calculating the divergence between two distributions is written using the “||” operator, therefore we can say we are interested in the KL divergence between C for conditional and M for marginal distributions or:

- KL (C || M)

Specifically, we are interested in the average of the KL divergence for all generated images.
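As a rough sketch of this idea, the snippet below calculates KL(C || M) for each image in a tiny made-up collection and then averages the result. The `kl_divergence()` helper and the probability values are assumptions for illustration only.

```python
# average KL divergence between conditional and marginal distributions
from numpy import asarray
from numpy import log

# hypothetical helper: KL(c || m) summed over classes
def kl_divergence(c, m, eps=1e-16):
    return (c * (log(c + eps) - log(m + eps))).sum()

# each row is the conditional distribution C for one image (made-up values)
p_yx = asarray([[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]])
# the marginal distribution M is the average over images
p_y = p_yx.mean(axis=0)
# one divergence per image, then the average
per_image = [kl_divergence(c, p_y) for c in p_yx]
print(per_image)
print(sum(per_image) / len(per_image))
```

Each divergence is non-negative, and it is zero only when an image's conditional distribution matches the marginal.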

Combining these two requirements, the metric that we propose is: exp(E_x KL(p(y|x) || p(y))).

— Improved Techniques for Training GANs, 2016.

We don’t need to translate the calculation of the inception score. Thankfully, the authors of the paper also provide source code on GitHub that includes an implementation of the inception score.

The calculation of the score assumes a large number of images for a range of objects, such as 50,000.

The images are split into 10 groups, e.g. 5,000 images per group, and the inception score is calculated on each group of images, then the average and standard deviation of the scores are reported.

The calculation of the inception score on a group of images involves first using the inception v3 model to calculate the conditional probability for each image (p(y|x)). The marginal probability is then calculated as the average of the conditional probabilities for the images in the group (p(y)).

The KL divergence is then calculated for each image as the conditional probability multiplied by the log of the conditional probability minus the log of the marginal probability.

- KL divergence = p(y|x) * (log(p(y|x)) - log(p(y)))

The KL divergence is then summed over all classes and averaged over all images, and the exponent of the result is calculated to give the final score.
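Written out for one group of images, the calculation can be expressed as follows. The symbols N (number of images in the group), K (number of classes), x_i (the i-th image) and k (a class index) are notation introduced here for illustration.

```latex
p(y = k) = \frac{1}{N} \sum_{i=1}^{N} p(y = k \mid x_i)

\mathrm{IS} = \exp\left( \frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{K}
    p(y = k \mid x_i) \left[ \log p(y = k \mid x_i) - \log p(y = k) \right] \right)
```

The inner sum over k is the KL divergence for one image, the outer average over i is the expectation over images, and the exponent undoes the logs.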

This defines the official inception score implementation used when reported in most papers that use the score, although variations on how to calculate the score do exist.

How to Implement the Inception Score With NumPy

Implementing the calculation of the inception score in Python with NumPy arrays is straightforward.

First, let’s define a function that will take a collection of conditional probabilities and calculate the inception score.

The calculate_inception_score() function listed below implements the procedure.

One small change is the introduction of an epsilon (a tiny number close to zero) when calculating the log probabilities to avoid blowing up when trying to calculate the log of a zero probability. This is probably not needed in practice (e.g. with real generated images) but is useful here and good practice when working with log probabilities.

```python
# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    # calculate p(y)
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    # kl divergence for each image
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    # sum over classes
    sum_kl_d = kl_d.sum(axis=1)
    # average over images
    avg_kl_d = mean(sum_kl_d)
    # undo the logs
    is_score = exp(avg_kl_d)
    return is_score
```

We can then test out this function to calculate the inception score for some contrived conditional probabilities.

We can imagine the case of three classes of image and a perfectly confident prediction for each class for three images.

```python
# conditional probabilities for high quality images
p_yx = asarray([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
```

We would expect the inception score for this case to be 3.0 (or very close to it). This is because we have the same number of images for each image class (one image for each of the three classes) and each conditional probability is maximally confident.

The complete example for calculating the inception score for these probabilities is listed below.

```python
# calculate inception score in numpy
from numpy import asarray
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import exp

# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    # calculate p(y)
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    # kl divergence for each image
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    # sum over classes
    sum_kl_d = kl_d.sum(axis=1)
    # average over images
    avg_kl_d = mean(sum_kl_d)
    # undo the logs
    is_score = exp(avg_kl_d)
    return is_score

# conditional probabilities for high quality images
p_yx = asarray([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
score = calculate_inception_score(p_yx)
print(score)
```

Running the example gives the expected score of 3.0 (or a number extremely close).

```
2.999999999999999
```

We can also try the worst case.

This is where we still have the same number of images for each class (one for each of the three classes), but the objects are unknown, giving a uniform predicted probability distribution across each class.

```python
# conditional probabilities for low quality images
p_yx = asarray([[0.33, 0.33, 0.33], [0.33, 0.33, 0.33], [0.33, 0.33, 0.33]])
score = calculate_inception_score(p_yx)
print(score)
```

In this case, we would expect the inception score to be the worst possible where there is no difference between the conditional and marginal distributions, e.g. an inception score of 1.0.

Tying this together, the complete example is listed below.

```python
# calculate inception score in numpy
from numpy import asarray
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import exp

# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    # calculate p(y)
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    # kl divergence for each image
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    # sum over classes
    sum_kl_d = kl_d.sum(axis=1)
    # average over images
    avg_kl_d = mean(sum_kl_d)
    # undo the logs
    is_score = exp(avg_kl_d)
    return is_score

# conditional probabilities for low quality images
p_yx = asarray([[0.33, 0.33, 0.33], [0.33, 0.33, 0.33], [0.33, 0.33, 0.33]])
score = calculate_inception_score(p_yx)
print(score)
```

Running the example reports the expected inception score of 1.0.

```
1.0
```

You may want to experiment with the calculation of the inception score and test other pathological cases.
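As a starting point, the sketch below tries two further contrived cases: a "mode collapse" scenario where every image is confidently predicted as the same class, and a partial-coverage scenario where the predictions are confident but only two of the three classes ever appear. Both arrays are made-up values for illustration.

```python
# inception score for two contrived pathological cases
from numpy import asarray
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import exp

# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    sum_kl_d = kl_d.sum(axis=1)
    avg_kl_d = mean(sum_kl_d)
    return exp(avg_kl_d)

# mode collapse: every image is confidently the same class
collapsed = asarray([[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
# partial coverage: confident predictions, but the third class never appears
partial = asarray([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print('collapsed', calculate_inception_score(collapsed))
print('partial', calculate_inception_score(partial))
```

The collapsed case should score approximately 1.0 despite the confident predictions, because there is no diversity, and the partial case should score approximately 2.0, the number of classes that are actually covered.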

How to Implement the Inception Score With Keras

Now that we know how to calculate the inception score and to implement it in Python, we can develop an implementation in Keras.

This involves using the real Inception v3 model to classify images and to average the calculation of the score across multiple splits of a collection of images.

First, we can load the Inception v3 model in Keras directly.

```python
...
# load inception v3 model
model = InceptionV3()
```

The model expects images to be color and to have the shape 299×299 pixels.

Additionally, the pixel values must be scaled in the same way as the training data images, before they can be classified.

This can be achieved by converting the pixel values from integers to floating point values and then calling the preprocess_input() function for the images.

```python
...
# convert from uint8 to float32
processed = images.astype('float32')
# pre-process raw images for inception v3 model
processed = preprocess_input(processed)
```
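For the Inception v3 model, this pre-processing maps pixel values from [0,255] to [-1,1]. A NumPy-only sketch of the same scaling is shown below, assuming the transform is x / 127.5 - 1; the `scale_to_unit_range()` function is a hypothetical stand-in for illustration and is not part of the Keras API.

```python
# sketch of scaling pixel values from [0,255] to [-1,1]
from numpy import asarray

# hypothetical stand-in for the scaling step (assumes x / 127.5 - 1)
def scale_to_unit_range(pixels):
    # convert from uint8 to float32 before scaling
    return pixels.astype('float32') / 127.5 - 1.0

# darkest, mid and brightest pixel values
pixels = asarray([0, 127, 255], dtype='uint8')
print(scale_to_unit_range(pixels))
```

A quick check like this can be a useful sanity test that your own images are in the range the model expects before predicting class probabilities.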

Then the conditional probabilities for each of the 1,000 image classes can be predicted for the images.

```python
...
# predict class probabilities for the pre-processed images
yhat = model.predict(processed)
```

The inception score can then be calculated directly on the NumPy array of probabilities as we did in the previous section.

Before we do that, we must split the conditional probabilities into groups, controlled by an n_split argument and set to the default of 10 as was used in the original paper.

```python
...
n_part = floor(images.shape[0] / n_split)
```

We can then enumerate over the conditional probabilities in blocks of n_part images or predictions and calculate the inception score.

```python
...
# retrieve p(y|x)
ix_start, ix_end = i * n_part, (i+1) * n_part
p_yx = yhat[ix_start:ix_end]
```

After calculating the scores for each split of conditional probabilities, we can calculate and return the average and standard deviation inception scores.

```python
...
# average across images
is_avg, is_std = mean(scores), std(scores)
```
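The split-and-average logic can be tried without loading the Inception v3 model by substituting random probabilities for the model predictions. In the sketch below, the `inception_score_from_probs()` function name and the Dirichlet-sampled `yhat` array are assumptions used only as a stand-in for `model.predict()`; the reported score has no meaning beyond demonstrating the mechanics.

```python
# split simulated predictions into groups and summarize the scores
from math import floor
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import std
from numpy import exp
from numpy.random import default_rng

# hypothetical helper: inception score from an array of class probabilities
def inception_score_from_probs(yhat, n_split=10, eps=1E-16):
    scores = list()
    n_part = floor(yhat.shape[0] / n_split)
    for i in range(n_split):
        # retrieve p(y|x) for this group
        p_yx = yhat[i * n_part:(i + 1) * n_part]
        # calculate p(y) for this group
        p_y = expand_dims(p_yx.mean(axis=0), 0)
        # KL divergence, summed over classes and averaged over images
        kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
        scores.append(exp(mean(kl_d.sum(axis=1))))
    return mean(scores), std(scores)

# stand-in for model.predict(): 1,000 rows of probabilities over 10 classes
rng = default_rng(1)
yhat = rng.dirichlet([0.5] * 10, size=1000)
is_avg, is_std = inception_score_from_probs(yhat)
print('score %.3f +/- %.3f' % (is_avg, is_std))
```

Because there are 10 classes in the simulated predictions, the average score must fall between 1 and 10.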

Tying all of this together, the calculate_inception_score() function below takes an array of images with the expected size and pixel values in [0,255] and calculates the average and standard deviation inception scores using the inception v3 model in Keras.

```python
# assumes images have the shape 299x299x3, pixels in [0,255]
def calculate_inception_score(images, n_split=10, eps=1E-16):
    # load inception v3 model
    model = InceptionV3()
    # convert from uint8 to float32
    processed = images.astype('float32')
    # pre-process raw images for inception v3 model
    processed = preprocess_input(processed)
    # predict class probabilities for images
    yhat = model.predict(processed)
    # enumerate splits of images/predictions
    scores = list()
    n_part = floor(images.shape[0] / n_split)
    for i in range(n_split):
        # retrieve p(y|x)
        ix_start, ix_end = i * n_part, i * n_part + n_part
        p_yx = yhat[ix_start:ix_end]
        # calculate p(y)
        p_y = expand_dims(p_yx.mean(axis=0), 0)
        # calculate KL divergence using log probabilities
        kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
        # sum over classes
        sum_kl_d = kl_d.sum(axis=1)
        # average over images
        avg_kl_d = mean(sum_kl_d)
        # undo the log
        is_score = exp(avg_kl_d)
        # store
        scores.append(is_score)
    # average across images
    is_avg, is_std = mean(scores), std(scores)
    return is_avg, is_std
```

We can test this function with 50 artificial images with the value 1.0 for all pixels.

```python
...
# pretend to load images
images = ones((50, 299, 299, 3))
print('loaded', images.shape)
```

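This will calculate the score for each group of five images. Because every image is identical, the conditional distribution for each image equals the marginal distribution, so the KL divergence is zero and we would expect an average inception score of 1.0 to be reported.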

The complete example is listed below.

```python
# calculate inception score with Keras
from math import floor
from numpy import ones
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import std
from numpy import exp
from keras.applications.inception_v3 import InceptionV3
from keras.applications.inception_v3 import preprocess_input

# assumes images have the shape 299x299x3, pixels in [0,255]
def calculate_inception_score(images, n_split=10, eps=1E-16):
    # load inception v3 model
    model = InceptionV3()
    # convert from uint8 to float32
    processed = images.astype('float32')
    # pre-process raw images for inception v3 model
    processed = preprocess_input(processed)
    # predict class probabilities for images
    yhat = model.predict(processed)
    # enumerate splits of images/predictions
    scores = list()
    n_part = floor(images.shape[0] / n_split)
    for i in range(n_split):
        # retrieve p(y|x)
        ix_start, ix_end = i * n_part, i * n_part + n_part
        p_yx = yhat[ix_start:ix_end]
        # calculate p(y)
        p_y = expand_dims(p_yx.mean(axis=0), 0)
        # calculate KL divergence using log probabilities
        kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
        # sum over classes
        sum_kl_d = kl_d.sum(axis=1)
        # average over images
        avg_kl_d = mean(sum_kl_d)
        # undo the log
        is_score = exp(avg_kl_d)
        # store
        scores.append(is_score)
    # average across images
    is_avg, is_std = mean(scores), std(scores)
    return is_avg, is_std

# pretend to load images
images = ones((50, 299, 299, 3))
print('loaded', images.shape)
# calculate inception score
is_avg, is_std = calculate_inception_score(images)
print('score', is_avg, is_std)
```

Running the example first defines the 50 fake images, then calculates the inception score on each batch and reports the expected inception score of 1.0, with a standard deviation of 0.0.

Note: the first time the InceptionV3 model is used, Keras will download the model weights and save them into the ~/.keras/models/ directory on your workstation. The weights are about 100 megabytes and may take a moment to download depending on the speed of your internet connection.

```
loaded (50, 299, 299, 3)
score 1.0 0.0
```

We can test the calculation of the inception score on some real images.

The Keras API provides access to the CIFAR-10 dataset.

These are color photos with the small size of 32×32 pixels. First, we can split the images into groups, then upsample the images to the expected size of 299×299, preprocess the pixel values, predict the class probabilities, then calculate the inception score.

This will be a useful example if you intend to calculate the inception score on your own generated images, as you may have to either scale the images to the expected size for the inception v3 model or change the model to perform the upsampling for you.

First, the images can be loaded and shuffled to ensure each split covers a diverse set of classes.

```python
...
# load cifar10 images
(images, _), (_, _) = cifar10.load_data()
# shuffle images
shuffle(images)
```

Next, we need a way to scale the images.

We will use the scikit-image library to resize the NumPy array of pixel values to the required size. The scale_images() function below implements this.

```python
# scale an array of images to a new size
def scale_images(images, new_shape):
    images_list = list()
    for image in images:
        # resize with nearest neighbor interpolation
        new_image = resize(image, new_shape, 0)
        # store
        images_list.append(new_image)
    return asarray(images_list)
```

Note, you may have to install the scikit-image library if it is not already installed. This can be achieved as follows:

```
sudo pip install scikit-image
```
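If installing scikit-image is not an option, nearest neighbor upsampling can also be sketched with NumPy indexing alone. The `upsample_nearest()` function below is a hypothetical alternative, not the approach used in this tutorial, and its pixel mapping may differ slightly from the one used by scikit-image.

```python
# nearest neighbor upsampling of a batch of square images with NumPy only
from numpy import arange

# hypothetical NumPy-only alternative to scale_images()
def upsample_nearest(images, new_size):
    _, height, width, _ = images.shape
    # for each output row/column, pick the index of a source row/column
    rows = arange(new_size) * height // new_size
    cols = arange(new_size) * width // new_size
    # index rows first, then columns
    return images[:, rows][:, :, cols]

# two fake 32x32 color images with distinct pixel values
images = arange(2 * 32 * 32 * 3, dtype='float32').reshape((2, 32, 32, 3))
scaled = upsample_nearest(images, 299)
print(scaled.shape)
```

Because the function only copies existing pixels, the value range of the input is preserved, which matters when the pixels are later passed to preprocess_input().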

We can then enumerate the number of splits, select a subset of the images, scale them, pre-process them, and use the model to predict the conditional class probabilities.

```python
...
# retrieve images
ix_start, ix_end = i * n_part, (i+1) * n_part
subset = images[ix_start:ix_end]
# convert from uint8 to float32
subset = subset.astype('float32')
# scale images to the required size
subset = scale_images(subset, (299,299,3))
# pre-process images, scale to [-1,1]
subset = preprocess_input(subset)
# predict p(y|x)
p_yx = model.predict(subset)
```

The rest of the calculation of the inception score is the same.
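Upsampling a whole group of images at once can require a lot of memory. A back-of-the-envelope estimate for one group is sketched below, assuming the upsampled pixels are stored as 32-bit floats; the variable names are introduced here for illustration.

```python
# estimate the memory needed to hold one group of upsampled images
n_images = 50000
n_split = 10
# number of images in each group
n_part = n_images // n_split
# assumption: pixels are stored as float32 (4 bytes per value)
bytes_per_value = 4
n_bytes = n_part * 299 * 299 * 3 * bytes_per_value
print('%d bytes, about %.1f GB' % (n_bytes, n_bytes / 1e9))
```

Under these assumptions, a group of 5,000 images needs a little over 5 GB, which appears to match the allocation size in the TensorFlow warning quoted in the comments at the end of this post. If this is too much for your workstation, one option is to scale and predict each group in smaller chunks and concatenate the predictions before calculating the score for the group.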

Tying this all together, the complete example for calculating the inception score on the real CIFAR-10 training dataset is listed below.

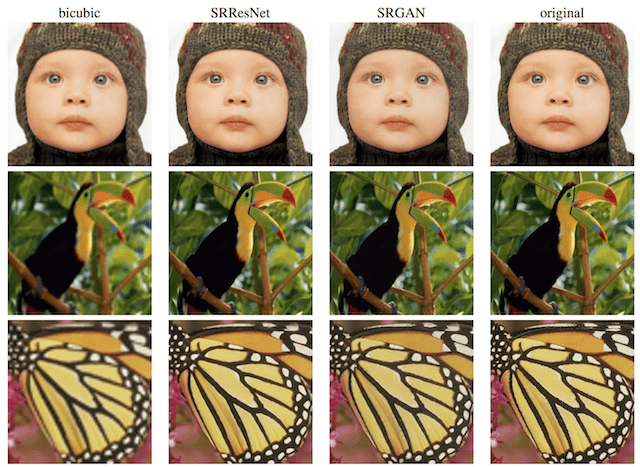

Based on the similar calculation reported in the original inception score paper, we would expect the reported score on this dataset to be approximately 11. Interestingly, the best inception score for CIFAR-10 with generated images is about 8.8 at the time of writing using a progressive growing GAN.

```python
# calculate inception score for cifar-10 in Keras
from math import floor
from numpy import ones
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import std
from numpy import exp
from numpy.random import shuffle
from keras.applications.inception_v3 import InceptionV3
from keras.applications.inception_v3 import preprocess_input
from keras.datasets import cifar10
from skimage.transform import resize
from numpy import asarray

# scale an array of images to a new size
def scale_images(images, new_shape):
    images_list = list()
    for image in images:
        # resize with nearest neighbor interpolation
        new_image = resize(image, new_shape, 0)
        # store
        images_list.append(new_image)
    return asarray(images_list)

# assumes images have any shape and pixels in [0,255]
def calculate_inception_score(images, n_split=10, eps=1E-16):
    # load inception v3 model
    model = InceptionV3()
    # enumerate splits of images/predictions
    scores = list()
    n_part = floor(images.shape[0] / n_split)
    for i in range(n_split):
        # retrieve images
        ix_start, ix_end = i * n_part, (i+1) * n_part
        subset = images[ix_start:ix_end]
        # convert from uint8 to float32
        subset = subset.astype('float32')
        # scale images to the required size
        subset = scale_images(subset, (299,299,3))
        # pre-process images, scale to [-1,1]
        subset = preprocess_input(subset)
        # predict p(y|x)
        p_yx = model.predict(subset)
        # calculate p(y)
        p_y = expand_dims(p_yx.mean(axis=0), 0)
        # calculate KL divergence using log probabilities
        kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
        # sum over classes
        sum_kl_d = kl_d.sum(axis=1)
        # average over images
        avg_kl_d = mean(sum_kl_d)
        # undo the log
        is_score = exp(avg_kl_d)
        # store
        scores.append(is_score)
    # average across images
    is_avg, is_std = mean(scores), std(scores)
    return is_avg, is_std

# load cifar10 images
(images, _), (_, _) = cifar10.load_data()
# shuffle images
shuffle(images)
print('loaded', images.shape)
# calculate inception score
is_avg, is_std = calculate_inception_score(images)
print('score', is_avg, is_std)
```

Running the example loads the dataset, prepares the model, and calculates the inception score on the CIFAR-10 training dataset.

We can see that the score is 11.3, which is close to the expected score of 11.24.

Note: the first time that the CIFAR-10 dataset is used, Keras will download the images in a compressed format and store them in the ~/.keras/datasets/ directory. The download is about 161 megabytes and may take a few minutes based on the speed of your internet connection.

```
loaded (50000, 32, 32, 3)
score 11.317895 0.14821531
```

Problems With the Inception Score

The inception score is effective, but it is not perfect.

Generally, the inception score is appropriate for generated images of objects known to the model used to calculate the conditional class probabilities.

In this case, because the inception v3 model is used, this means that it is most suitable for 1,000 object types used in the ILSVRC 2012 dataset. This is a lot of classes, but not all objects that may interest us.

You can see a full list of the classes here:

It also requires that the images are square and have the relatively small size of about 300×300 pixels, including any scaling required to get your generated images to that size.

A good score also requires having a good distribution of generated images across the possible objects supported by the model, and close to an even number of examples for each class. This can be hard to control for many GAN models that don’t offer controls over the types of objects generated.
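The effect of class balance can be sketched with contrived predictions in which every image is classified with full confidence, but the number of images per class varies. The `confident_predictions()` helper and the class counts below are made up for illustration.

```python
# effect of class imbalance on the inception score
from numpy import zeros
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import exp

# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    return exp(mean(kl_d.sum(axis=1)))

# hypothetical helper: one-hot rows, with counts[k] images for class k
def confident_predictions(counts):
    p_yx = zeros((sum(counts), len(counts)))
    row = 0
    for k, count in enumerate(counts):
        p_yx[row:row + count, k] = 1.0
        row += count
    return p_yx

# same number of images, same confidence, different class balance
balanced = calculate_inception_score(confident_predictions([10, 10, 10]))
skewed = calculate_inception_score(confident_predictions([26, 2, 2]))
print('balanced', balanced)
print('skewed', skewed)
```

The balanced collection should score approximately 3.0, the number of classes, whereas the skewed collection scores noticeably lower even though each individual prediction is equally confident.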

Shane Barratt and Rishi Sharma take a closer look at the inception score and list a number of technical issues and edge cases in their 2018 paper titled “A Note on the Inception Score.” This is a good reference if you wish to dive deeper.
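One edge case that is easy to check numerically is the effect of group size: a group of n images cannot achieve a score higher than n, regardless of how many classes the model supports. The sketch below uses a contrived set of 100 perfectly confident predictions, one for each of 100 classes, and scores it in groups of different sizes; all values are made up for illustration.

```python
# effect of group size on the inception score
from numpy import eye
from numpy import expand_dims
from numpy import log
from numpy import mean
from numpy import exp

# calculate the inception score for p(y|x)
def calculate_inception_score(p_yx, eps=1E-16):
    p_y = expand_dims(p_yx.mean(axis=0), 0)
    kl_d = p_yx * (log(p_yx + eps) - log(p_y + eps))
    return exp(mean(kl_d.sum(axis=1)))

# 100 images, each confidently a different one of 100 classes
p_yx = eye(100)
for n_part in (100, 50, 10, 5):
    # score each group of n_part images, then average the scores
    scores = [calculate_inception_score(p_yx[i:i + n_part]) for i in range(0, 100, n_part)]
    print(n_part, mean(scores))
```

In this contrived case the average score equals the group size, which suggests why small groups can report much lower scores than large groups for the same images.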

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- Improved Techniques for Training GANs, 2016.

- A Note on the Inception Score, 2018.

- Rethinking the Inception Architecture for Computer Vision, 2015.

Projects

- Code for the paper “Improved Techniques for Training GANs”

- Large Scale Visual Recognition Challenge 2012 (ILSVRC2012)


Articles

- Image Generation on CIFAR-10

- Inception Score calculation, 2017.

- A simple explanation of the Inception Score

- Inception Score — evaluating the realism of your GAN, 2018.

- Kullback–Leibler divergence, Wikipedia.

- Entropy (information theory), Wikipedia.

Summary

In this tutorial, you discovered the inception score for evaluating the quality of generated images.

Specifically, you learned:

- How to calculate the inception score and the intuition behind what it measures.

- How to implement the inception score in Python with NumPy and the Keras deep learning library.

- How to calculate the inception score for small images such as those in the CIFAR-10 dataset.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

How can we calculate the Inception Score for MNIST datasets??

Perhaps transform the MNIST to have 3 color channels from the black and white pixel data?

Thanks for your nice article!

I would like to use the IS as a custom loss function for training a Keras model (autoencoder).

Your implementation uses numpy, but Keras expects Tensorflow tensors.

What is a simple way to make it work with Keras?

The tutorial shows how to calculate IS using Keras. Perhaps re-read it?

Firstly, thank you for the great article.

I have a question:

I want to use Inception Score for evaluating human face images generated by my GAN. Would this method be feasible for evaluating generated face images? Because I think there are no classes for this type of data in Inception V3 Model.

Or what would be a good approach for evaluating such network?

Probably not.

Perhaps review some of the metrics here:

https://machinelearningmastery.com/how-to-evaluate-generative-adversarial-networks/

First, thanks for your article.

Second,

I have one question:

How can I get a score between two images (generated and expected) in the pix2pix project for maps?

I don’t think IS would be appropriate for that project, the images are not like standard imagenet images.

What do you recommend I use instead?

Isn’t this inception v3 model trained for imagenet dataset with 1000 output neurons?

If that’s true, then how can we use this for the CIFAR-10 dataset, which requires 10 output neurons? Or is this just the way it’s supposed to be done?

It can identify the class of the image, it is a superset of classes. The key is that it is consistent.

If you want to limit to 10 classes only, perhaps use a pretrained model on cifar10?

Thanks for the clarification, I got it!

You’re welcome.

First, Thanks for your article

Second

I Have one Question :

IS FID good to evaluate images (generated and expected) in project pix2pix for maps?

If it is not what is the best evaluation for this project as we are facing a problem to evaluate this generated images from GAN and expected images

I’ll be grateful if you help me to solve this complex problem as I’ve been searching for many weeks to solve this problem and did not find a good solution

Thanks in advance

Good question. FID might be interesting to explore for map data.

The problem remains that a model is required that is good at extracting features from maps, and models like vgg and inception are probably not well suited.

That being said, you could run experiments to see how effective/ineffective it is – experiments like those in the FID paper.

Thanks a lot really sir

We have tried it on our dataset and it was not good enough

What is the best model for extracting features from maps ?

I don’t know, you must discover the best algorithm via controlled experiments.

I have read about FCN models trained on google map

Do you think this would be good instead of the inception model?

Perhaps try it.

Thanks a lot, sir You have helped me a lot really

and I want to thank you also for this great article

You’re welcome.

I have a neural network that classifies images of the MNIST dataset. If I extract the intermediate layer output (just after softmax has been applied in the last layer) for all the samples of my test set and pass this array to your numpy Inception Score implementation, would it give the correct score?

Thank you

Inception would not be appropriate for MNIST images. It is designed for photos of objects, e.g. from imagenet.

For a better metric, see:

https://machinelearningmastery.com/how-to-evaluate-generative-adversarial-networks/

Okay, how important is changing the number of splits in the Inception Score calculation? Why not always use n=1 ?

Perhaps run a sensitivity analysis to see how the splits impacts the variance of the score on your specific dataset.

Thanks for the reply, Jason. Increasing the number of splits above 1 makes my inception scores drop drastically. However, using a classifier, those images achieve a very high accuracy rate of around 98%.

How should I interpret this?

You need to find a balance between the number of images you have and number of splits so that each group is “representative”.

Hi! thanks for a very clear explanation! I tried implementing the score by myself and your blog was very helpful.

Just a small note. at the beginning of the blog you wrote :

“KL divergence = p(y|x) * (log(p(y|x)) – log(p(y)))

The KL divergence is then *summed over all images and averaged over all classes* and the exponent of the result is calculated to give the final score.”

but in your actual implementation of the IS you sum over classes and and average over images, so I believe it will be good to add this small fix

Thanks again for this great post

Thanks.

Hi, I was wondering: if I train an inception network from scratch for a much simpler dataset like Fashion-MNIST, with just black and white single-channel images, and then use it as the model to calculate the inception score on generated images, would it be a good idea to treat this custom network's score as a reliable benchmark?

Maybe. If it performs well relative to other models.

Hi, a quick question:

In the first section, it’s stated that: “The inception score has a highest value of the number of classes supported by the classification model”.

However, it’s also stated that: “The CIFAR-10 dataset is a collection of 50,000 images divided into 10 classes of objects. The original paper that introduces the inception calculated the score on the real CIFAR-10 training dataset, achieving a result of 11.24 +/- 0.12.”

My question is, if the CIFAR-10 dataset is only divided into 10 classes of objects, how does the original paper calculate an inception score of 11.24, which is higher than 10?

Thanks for the great post.

Note we get a score of 11 as well in the above tutorial.

Perhaps re-read the code for that section to see what we’re doing.

Number of classes supported by the Inception v3 classification model is 1000. So even though CIFAR-10 has only 10 classes, the model will still output predictions for all 1000 possible classes it was trained to predict. For example, two different CIFAR-10 images of a dog can lead to different class predictions (different breeds).

In short, from the point of view of the Inception model, CIFAR-10 has more than 10 classes.
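The point can be checked numerically with a small NumPy sketch. It assumes idealized, fully confident predictions spread evenly over some of the classifier's 1,000 outputs; the helper names and the synthetic data are hypothetical and only illustrate where the upper bound comes from.

```python
import numpy as np

def inception_score(p_yx, eps=1e-16):
    """exp of the mean (over images) KL divergence between p(y|x) and p(y)."""
    p_y = p_yx.mean(axis=0, keepdims=True)
    kl = p_yx * (np.log(p_yx + eps) - np.log(p_y + eps))
    return float(np.exp(kl.sum(axis=1).mean()))

def confident_balanced_predictions(n_used, n_classes=1000, per_class=2):
    """Hypothetical one-hot predictions spread evenly over n_used of n_classes outputs."""
    labels = np.repeat(np.arange(n_used), per_class)
    return np.eye(n_classes)[labels]

score_10 = inception_score(confident_balanced_predictions(10))      # about 10
score_12 = inception_score(confident_balanced_predictions(12))      # about 12
score_1000 = inception_score(confident_balanced_predictions(1000))  # about 1000
print(score_10, score_12, score_1000)
```

Under these assumptions the score equals the number of classifier outputs actually used, not the number of dataset labels. If CIFAR-10 images are mapped onto more than 10 of the 1,000 ImageNet classes, a score above 10 is possible.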

Hi Jason, thanks for the informative articles.

Q: Does the inception score change when we shuffle the data? By shuffle, I mean changing only the order and keeping the data size the same.

Thanks.

Also, is the inception score always the same for everyone? I got 11.10.

Hi Prachi…The following may be of interest to you:

https://medium.com/octavian-ai/a-simple-explanation-of-the-inception-score-372dff6a8c7a

The inception score has a lowest value of 1.0 and a highest value of the number of classes supported by the classification model; in this case, the Inception v3 model supports the 1,000 classes of the ILSVRC 2012 dataset, and as such, the highest inception score on this dataset is 1,000.

The CIFAR-10 dataset is a collection of 50,000 images divided into 10 classes of objects. The original paper that introduces the inception calculated the score on the real CIFAR-10 training dataset, achieving a result of 11.24 +/- 0.12

I was wondering whether this statement is correct. If CIFAR-10 contains 10 classes and the maximum possible score is the number of classes supported by the classification model, then how can the IS be 11.24?

What am I missing?

Hi Mike…The following resource may be of interest:

https://medium.com/octavian-ai/a-simple-explanation-of-the-inception-score-372dff6a8c7a

Hi James,

Any idea of the minimum requirements in terms of memory, number of GPUs, etc.?

I am getting errors like:

2022-11-25 11:07:06.502459: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 5364060000 exceeds 10% of free system memory.

2022-11-25 11:07:09.204554: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

2022-11-25 11:07:10.278466: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 90935296 exceeds 10% of free system memory.

2022-11-25 11:07:10.542839: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 88510464 exceeds 10% of free system memory.

2022-11-25 11:07:10.732282: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 177020928 exceeds 10% of free system memory.

2022-11-25 11:07:11.098629: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 123887616 exceeds 10% of free system memory.

Thanks

Hi Kristen…You may want to investigate two options if you do not have access to local GPUs.

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

https://machinelearningmastery.com/google-colab-for-machine-learning-projects/
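As a side note on the first warning above: 5,364,060,000 bytes equals 5,000 × 299 × 299 × 3 × 4. That is consistent with one group of 5,000 images upscaled to 299×299×3 and held as float32, although this is an inference from the number rather than something the log states. If that is the cause, predicting in smaller batches should lower peak memory. The sketch below is a minimal illustration: `predict_in_batches` and `dummy_predict_fn` are hypothetical names, and the dummy function only stands in for the real resize, preprocess, and model prediction steps.

```python
import numpy as np

def predict_in_batches(images, predict_fn, batch_size=100):
    """Apply predict_fn to consecutive batches and stack the class probabilities."""
    outputs = []
    for start in range(0, len(images), batch_size):
        batch = images[start:start + batch_size]
        outputs.append(predict_fn(batch))  # only this batch would be upscaled at once
    return np.concatenate(outputs, axis=0)

def dummy_predict_fn(batch):
    """Placeholder for resize + preprocess + model prediction; returns uniform p(y|x)."""
    return np.full((len(batch), 1000), 1.0 / 1000)

# Bytes needed to hold a group of float32 images at 299x299x3.
bytes_5000 = 5000 * 299 * 299 * 3 * 4  # 5,364,060,000, the size in the first warning
bytes_100 = 100 * 299 * 299 * 3 * 4    # 107,281,200

images = np.zeros((250, 32, 32, 3), dtype=np.uint8)  # stand-in for CIFAR-10 sized images
p_yx = predict_in_batches(images, dummy_predict_fn, batch_size=100)
print(p_yx.shape, bytes_5000, bytes_100)
```

The stacked `p_yx` array has one row per image, so it can be passed to the score calculation unchanged. Only the upscaled image batch is kept small.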

tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:42] Overriding orig_value setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. The original config value was 0.

The code reaches this point and then does not work. Why?

Hi, thanks for the wonderful article! I have a question regarding the IS:

“The inception score has a lowest value of 1.0 and a highest value of the number of classes supported by the classification model; in this case, the Inception v3 model supports the 1,000 classes of the ILSVRC 2012 dataset, and as such, the highest inception score on this dataset is 1,000.

The CIFAR-10 dataset is a collection of 50,000 images divided into 10 classes of objects. The original paper that introduces the inception calculated the score on the real CIFAR-10 training dataset, achieving a result of 11.24 +/- 0.12.”

Here, it is stated that the highest IS equals the number of classes. If that is so, then how is the IS value 11.24, which is greater than 10, when CIFAR-10 only has 10 classes? Is the statement that “highest IS = number of classes” a special case?

Hi Prakhar…You are very welcome! The following resources may add clarity:

https://kailashahirwar.medium.com/a-very-short-introduction-to-inception-score-is-c9b03a7dd788

https://github.com/Ahmed-Habashy/calculate-Inception-Score-for-CIFAR-10-in-Keras