Many decisions we make in life are based on the opinions of multiple other people.

Examples include choosing a book to read based on reviews, choosing a course of treatment based on the advice of multiple doctors, and determining a defendant's guilt in a court of law.

Often, decision making by a group of individuals results in a better outcome than a decision made by any one member of the group. This is generally referred to as the wisdom of the crowd.

We can achieve a similar result by combining the predictions of multiple machine learning models for regression and classification predictive modeling problems. This is referred to generally as ensemble machine learning, or simply ensemble learning.

In this post, you will discover a gentle introduction to ensemble learning.

After reading this post, you will know:

- Many decisions we make involve the opinions or votes of other people.

- The ability of groups of people to make better decisions than individuals is called the wisdom of the crowd.

- Ensemble machine learning involves combining predictions from multiple skillful models.


Let’s get started.

A Gentle Introduction to Ensemble Learning

Photo by the Bureau of Land Management, some rights reserved.

Overview

This tutorial is divided into three parts; they are:

- Making Important Decisions

- Wisdom of Crowds

- Ensemble Machine Learning

Making Important Decisions

Consider important decisions you make in your life.

For example:

- What book to purchase and read next.

- What university to attend.

Candidate books are those that sound interesting, but the book we actually purchase might be the one with the most favorable reviews. Candidate universities are those that offer the courses we’re interested in, but we might choose one based on feedback from friends and acquaintances who have first-hand experience.

We might trust the reviews and star ratings because each individual who contributed a review was (hopefully) unaffiliated with the book and independent of the other reviewers. When this is not the case, trust in the outcome is questionable and trust in the system is shaken, which is why Amazon works hard to delete fake book reviews.

Also, consider important decisions of a more personal nature.

For example, choosing a medical treatment for an illness.

We take advice from an expert, but we may seek a second, a third, or even more opinions to confirm that we are taking the best course of action.

The second and third opinions may or may not match the first, but we weigh them heavily because they are provided dispassionately, objectively, and independently. If the doctors colluded on their opinion, we would feel that the process of seeking second and third opinions had failed.

… whenever we are faced with making a decision that has some important consequence, we often seek the opinions of different “experts” to help us make that decision …

— Page 2, Ensemble Machine Learning, 2012.

Finally, consider decisions we make as a society.

For example:

- Who should represent a geographical area in a government.

- Whether someone is guilty of a crime.

The democratic election of representatives is based (in some form) on the independent votes of citizens.

Making decisions based on the input of multiple people or experts has been a common practice in human civilization and serves as the foundation of a democratic society.

— Page v, Ensemble Methods, 2012.

Whether an individual is guilty of a serious crime may be determined by a jury of independent peers, who are sometimes sequestered to protect the independence of their judgment. Cases may also be appealed at multiple levels, providing second, third, and further opinions on the outcome.

The judicial system in many countries, whether based on a jury of peers or a panel of judges, is also based on ensemble-based decision making.

— Pages 1-2, Ensemble Machine Learning, 2012.

These are all examples of an outcome arrived at through the combination of lower-level opinions, votes, or decisions.

… ensemble-based decision making is nothing new to us; as humans, we use such systems in our daily lives so often that it is perhaps second nature to us.

— Page 1, Ensemble Machine Learning, 2012.

In each case, certain properties of the lower-level decisions are critical for the outcome to be useful, such as a belief that they are independent and that each has some validity on its own.

This approach to decision making is so common that it has a name.


Wisdom of Crowds

When the lower-level decisions are made by people, this approach to decision making is often referred to as the “wisdom of the crowd.”

It refers to the case where the opinion calculated from the aggregate of a group of people is often more accurate, useful, or correct than the opinion of any individual in the group.

A famous and often-cited case from more than 100 years ago is a contest at a fair in Plymouth, England, to estimate the weight of an ox. Individuals submitted their guesses, and the person whose guess was closest to the actual weight won the meat.

The statistician Francis Galton collected all of the guesses afterward and calculated the average of the guesses.

… he added all the contestants’ estimates, and calculated the mean of the group’s guesses. That number represented, you could say, the collective wisdom of the Plymouth crowd. If the crowd were a single person, that was how much it would have guessed the ox weighed.

— Page xiii, The Wisdom of Crowds, 2004.

He found that the mean of the contestants’ guesses was very close to the actual weight. That is, averaging the numerical estimates of the 800 participants was an accurate way of determining the true weight.

The crowd had guessed that the ox, after it had been slaughtered and dressed, would weigh 1,197 pounds. After it had been slaughtered and dressed, the ox weighed 1,198 pounds. In other words, the crowd’s judgment was essentially perfect.

— Page xiii, The Wisdom of Crowds, 2004.
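To see why averaging works, here is a small simulation in Python. It is a sketch under stated assumptions, not a reconstruction of Galton’s data: we assume each of 800 hypothetical contestants guesses the true weight plus independent Gaussian noise with a standard deviation of 100 pounds. The crowd’s estimate is simply the mean of the guesses, which we compare against the error of a typical individual.

```python
import random
import statistics

# Hypothetical setup, not Galton's real data: each contestant's guess is the
# true weight plus independent Gaussian noise (standard deviation 100 lb).
TRUE_WEIGHT = 1198  # dressed weight of the ox in pounds, as quoted above
N_CONTESTANTS = 800
NOISE_SD = 100

rng = random.Random(7)  # fixed seed so the run is repeatable
guesses = [rng.gauss(TRUE_WEIGHT, NOISE_SD) for _ in range(N_CONTESTANTS)]

# Aggregation: the crowd's estimate is the plain mean of every guess.
crowd_estimate = statistics.mean(guesses)
crowd_error = abs(crowd_estimate - TRUE_WEIGHT)

# How far off was each individual, and how many did the crowd beat?
individual_errors = [abs(g - TRUE_WEIGHT) for g in guesses]
mean_individual_error = statistics.mean(individual_errors)
share_beaten = sum(e > crowd_error for e in individual_errors) / N_CONTESTANTS

print(f"crowd estimate:             {crowd_estimate:.1f} lb")
print(f"crowd error:                {crowd_error:.1f} lb")
print(f"mean individual error:      {mean_individual_error:.1f} lb")
print(f"individuals the crowd beat: {share_beaten:.0%}")
```

Because the individual errors are independent, they tend to cancel out in the mean, so the crowd’s error is a small fraction of the typical individual’s error. The 100-pound noise level and the seed are arbitrary choices for illustration.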

This example is given at the beginning of James Surowiecki’s 2004 book “The Wisdom of Crowds,” which explores the ability of groups of people to make decisions and predictions that are often better than those of the group’s individual members.

This intelligence, or what I’ll call “the wisdom of crowds,” is at work in the world in many different guises.

— Page xiv, The Wisdom of Crowds, 2004.

The book argues for averaging the guesses, votes, and opinions of groups of people when making some important decisions, instead of searching for and consulting a single expert.

… we feel the need to “chase the expert.” The argument of this book is that chasing the expert is a mistake, and a costly one at that. We should stop hunting and ask the crowd (which, of course, includes the geniuses as well as everyone else) instead. Chances are, it knows.

— Page xv, The Wisdom of Crowds, 2004.

The book goes on to highlight a number of properties of any system that makes decisions based on groups of people, summarized nicely in Lior Rokach’s 2010 book “Pattern Classification Using Ensemble Methods” (page 22) as:

- Diversity of opinion: Each member should have private information even if it is just an eccentric interpretation of the known facts.

- Independence: Members’ opinions are not determined by the opinions of those around them.

- Decentralization: Members are able to specialize and draw conclusions based on local knowledge.

- Aggregation: Some mechanism exists for turning private judgments into a collective decision.

As a decision-making system, the approach is not always effective (e.g. stock market bubbles and fads, where members’ opinions stop being independent), but it can be effective in a range of domains where the outcomes are important.
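The importance of independence can be sketched with a variation of the ox-weighing simulation. In this hypothetical setup, members of a “herded” crowd each blend their private guess 50/50 with a shared anchor opinion that is 200 pounds too high; the anchor, the blend weight, and the noise level are all assumptions chosen for illustration. Averaging cancels private noise, but it cannot cancel an error that everyone shares.

```python
import random
import statistics

TRUE_WEIGHT = 1198
N_MEMBERS = 800
NOISE_SD = 100
rng = random.Random(11)  # fixed seed so the run is repeatable


def private_guess():
    # Independent opinion: the truth plus this member's own noise.
    return rng.gauss(TRUE_WEIGHT, NOISE_SD)


def independent_crowd():
    return [private_guess() for _ in range(N_MEMBERS)]


def herded_crowd(anchor, anchor_weight=0.5):
    # Every member blends a private guess with the same shared anchor,
    # so part of each member's error is common to the whole crowd.
    return [anchor_weight * anchor + (1 - anchor_weight) * private_guess()
            for _ in range(N_MEMBERS)]


independent_error = abs(statistics.mean(independent_crowd()) - TRUE_WEIGHT)
herded_error = abs(statistics.mean(herded_crowd(anchor=TRUE_WEIGHT + 200)) - TRUE_WEIGHT)

print(f"independent crowd error: {independent_error:.1f} lb")
print(f"herded crowd error:      {herded_error:.1f} lb")
```

The independent crowd stays close to the truth, while the herded crowd’s average lands roughly halfway toward the wrong anchor, no matter how many members it has.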

We can use this approach to decision making in applied machine learning.

Ensemble Machine Learning

Applied machine learning often involves fitting and evaluating models on a dataset.

Because we cannot know beforehand which model will perform best on the dataset, this may involve a lot of trial and error until we find a model that performs well, or best, for our project.

This is akin to making a decision using a single expert, perhaps the best expert we can find.

A complementary approach is to prepare multiple different models, then combine their predictions. This is called an ensemble machine learning model, or simply an ensemble, and the process of finding a well-performing ensemble model is referred to as “ensemble learning.”

Ensemble methodology imitates our second nature to seek several opinions before making a crucial decision.

— Page vii, Pattern Classification Using Ensemble Methods, 2010.

This is akin to making a decision using the opinions from multiple experts.

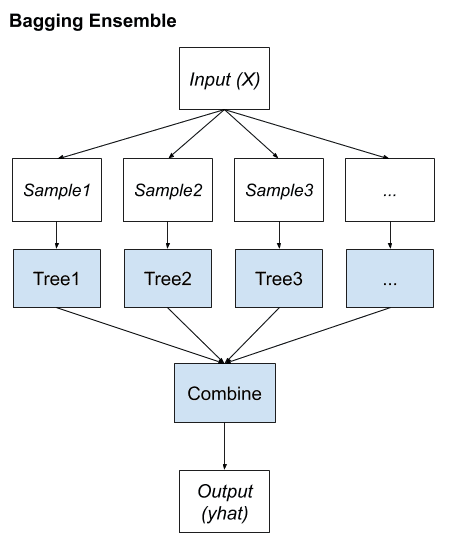

The most common type of ensemble involves training multiple versions of the same machine learning model in a way that ensures that each ensemble member is different (e.g. decision trees fit on different subsamples of the training dataset), then combining the predictions using averaging or voting.
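As a rough sketch of this idea using only the Python standard library, the code below fits 25 members on bootstrap samples of the training data (rows sampled with replacement) and combines them by majority vote, an approach commonly known as bagging. To keep it short, each member is a decision stump (a one-split decision tree) rather than a full decision tree, and the noisy one-feature dataset is hypothetical. Libraries such as scikit-learn provide this as `BaggingClassifier`; the hand-written version here just makes the steps visible.

```python
import random
from collections import Counter

rng = random.Random(0)  # fixed seed so the run is repeatable


def make_data(n, noise=0.1):
    # Hypothetical toy problem: label is 1 when x > 0.5, with a fraction of labels flipped.
    xs = [rng.random() for _ in range(n)]
    ys = [int(x > 0.5) for x in xs]
    ys = [1 - y if rng.random() < noise else y for y in ys]
    return xs, ys


def fit_stump(xs, ys):
    # Decision stump: choose the threshold (taken from the training points)
    # and orientation that make the fewest training errors.
    best = None
    for t in sorted(set(xs)):
        for above in (0, 1):
            errors = sum((above if x > t else 1 - above) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, t, above = best
    return lambda x: above if x > t else 1 - above


def fit_bagging(xs, ys, n_models=25):
    models = []
    n = len(xs)
    for _ in range(n_models):
        # Bootstrap sample: n rows drawn with replacement, so each member
        # sees a slightly different version of the training data.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_vote(models, x):
    # Combine the members by majority vote over their predicted labels.
    return Counter(m(x) for m in models).most_common(1)[0][0]


train_x, train_y = make_data(150)
test_x = [rng.random() for _ in range(200)]
test_y = [int(x > 0.5) for x in test_x]  # clean labels for evaluation

ensemble = fit_bagging(train_x, train_y)
accuracy = sum(predict_vote(ensemble, x) == y for x, y in zip(test_x, test_y)) / len(test_x)
print(f"bagged stumps test accuracy: {accuracy:.2f}")
```

The odd number of members (25) means a binary vote can never tie.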

A less common, although just as effective, approach involves training different algorithms on the same data (e.g. a decision tree, a support vector machine, and a neural network) and combining their predictions.
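Combining different algorithms can be as simple as a hard (majority) vote over their predicted labels. In the sketch below, the three prediction lists are made up to stand in for a decision tree, a support vector machine, and a neural network that have already been fit; each is 80% accurate, but each makes its mistake on a different row. scikit-learn provides this kind of combination as `VotingClassifier` with `voting="hard"`; this stdlib version only shows the voting step.

```python
from collections import Counter


def hard_vote(*member_predictions):
    """Majority vote over several models' predicted labels for the same rows."""
    combined = []
    for row_votes in zip(*member_predictions):
        # most_common(1) returns the most frequent label; Counter orders equal
        # counts by first appearance, so on a tie the first model listed wins.
        combined.append(Counter(row_votes).most_common(1)[0][0])
    return combined


def accuracy(predictions, truth):
    return sum(p == t for p, t in zip(predictions, truth)) / len(truth)


# Made-up predictions standing in for three already-fit models.
y_true = [1, 0, 0, 1, 0]
tree_pred = [1, 0, 1, 1, 0]  # wrong on row 2
svm_pred = [1, 1, 0, 1, 0]   # wrong on row 1
net_pred = [0, 0, 0, 1, 0]   # wrong on row 0

ensemble_pred = hard_vote(tree_pred, svm_pred, net_pred)
for name, pred in [("tree", tree_pred), ("svm", svm_pred),
                   ("net", net_pred), ("vote", ensemble_pred)]:
    print(f"{name:>4}: {accuracy(pred, y_true):.0%}")
```

Voting repairs every error here only because the mistakes fall on different rows; if all three models erred on the same row, the vote would inherit that error.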

Like combining the opinions of humans in a crowd, the effectiveness of the ensemble relies on each model having some skill (better than random) and some independence from the other models. The latter point is often interpreted as meaning that each model is skillful in a different way from the other models in the ensemble, that is, it tends to make different errors.
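The combined effect of skill and independence can be quantified with the classic Condorcet jury theorem calculation. Assuming a binary task, an odd number of members, and members that are each correct with probability p and fully independent of one another (an idealization real models rarely meet), the chance that the majority vote is correct is a binomial tail sum:

```python
from math import comb


def majority_vote_accuracy(n_members, p):
    """Probability that a strict majority of n_members independent binary
    classifiers, each correct with probability p, votes for the right label."""
    if n_members % 2 == 0:
        raise ValueError("use an odd number of members to avoid ties")
    need = n_members // 2 + 1  # smallest strict majority
    return sum(comb(n_members, k) * p**k * (1 - p) ** (n_members - k)
               for k in range(need, n_members + 1))


for n in (1, 3, 5, 11, 21):
    print(f"members={n:>2}  p=0.6 -> {majority_vote_accuracy(n, 0.6):.3f}"
          f"  p=0.4 -> {majority_vote_accuracy(n, 0.4):.3f}")
```

For p = 0.6, the majority’s accuracy rises from 0.6 with one member to 0.648 with three and about 0.683 with five, and keeps climbing as members are added. For p = 0.4 (worse than random on a binary task), adding members makes the vote worse, which is why each member needs some skill.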

The hope is that the ensemble results in a better performing model than any contributing member.

The core principle is to weigh several individual pattern classifiers, and combine them in order to reach a classification that is better than the one obtained by each of them separately.

— Page vii, Pattern Classification Using Ensemble Methods, 2010.

At a minimum, an ensemble limits the worst-case predictions by reducing their variance. Model performance can vary with the training data (and, for some algorithms, with the stochastic nature of the learning procedure), resulting in better or worse performance for any specific model.

… the goal of ensemble systems is to create several classifiers with relatively fixed (or similar) bias and then combining their outputs, say by averaging, to reduce the variance.

— Page 2, Ensemble Machine Learning, 2012.

An ensemble can smooth out this variation so that its predictions are closer to the average performance of the contributing members. Further, reducing the variance of the predictions often lifts the skill of the ensemble. This comes at the added computational cost of fitting and maintaining multiple models instead of a single model.
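The variance-reduction argument can be made concrete under a standard idealization, assumed here for illustration: suppose each of $M$ ensemble members produces a prediction $f_m(x)$ with the same variance $\sigma^2$, and every pair of members has the same correlation $\rho$. The variance of their equal-weight average is then:

$$
\operatorname{Var}\left(\frac{1}{M}\sum_{m=1}^{M} f_m(x)\right)
= \frac{1}{M^2}\left(M\sigma^2 + M(M-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{M}\sigma^2
$$

With fully independent members ($\rho = 0$), the variance shrinks as $\sigma^2/M$; as the members become more alike ($\rho \to 1$), the benefit disappears, which is one way to see why the independence of ensemble members matters.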

Although ensemble predictions will have a lower variance, they are not guaranteed to have better performance than any single contributing member.

… researchers in the computational intelligence and machine learning community have studied schemes that share such a joint decision procedure. These schemes are generally referred to as ensemble learning, which is known to reduce the classifiers’ variance and improve the decision system’s robustness and accuracy.

— Page v, Ensemble Methods, 2012.

Sometimes the best-performing model (i.e. the best expert) is so superior to the other models that combining its predictions with theirs results in worse performance.
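A small worked example shows how this can happen. Assume, hypothetically, three unbiased regression models with independent errors: one expert with a mean squared error (MSE) of 1.0 and two weak models with an MSE of 9.0 each. Under those assumptions, the MSE of their equal-weight average is the sum of the members’ error variances divided by the number of members squared:

```python
def equal_weight_mse(member_mses):
    """MSE of the equal-weight average of unbiased members whose errors are
    independent: Var(mean) = sum(var_i) / M**2 (an idealized assumption)."""
    m = len(member_mses)
    return sum(member_mses) / m**2


# Hypothetical members: one strong expert and two much weaker models.
dominated = {"expert": 1.0, "weak_a": 9.0, "weak_b": 9.0}
ensemble_mse = equal_weight_mse(list(dominated.values()))
best_single_mse = min(dominated.values())

# For contrast: three comparable members.
comparable_mse = equal_weight_mse([4.0, 4.0, 4.0])

print(f"best single model MSE:      {best_single_mse:.2f}")
print(f"ensemble with expert MSE:   {ensemble_mse:.2f}")
print(f"ensemble of comparable MSE: {comparable_mse:.2f}")
```

Here the average scores 19/9, about 2.11, which is worse than the expert alone, whereas three comparable models with an MSE of 4.0 each average to about 1.33, better than any one of them. Weighting members by skill rather than equally is one way to soften this, but any such choice still has to be checked experimentally.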

As such, selecting models, even ensemble models, still requires carefully controlled experiments on a robust test harness.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- The Wisdom of Crowds, 2004.

- Pattern Classification Using Ensemble Methods, 2010.

- Ensemble Methods, 2012.

- Ensemble Machine Learning, 2012.

Articles

- Ensemble learning, Wikipedia.

- Ensemble learning, Scholarpedia.

- Wisdom of the crowd, Wikipedia.

- The Wisdom of Crowds, Wikipedia.

Summary

In this post, you discovered a gentle introduction to ensemble learning.

Specifically, you learned:

- Many decisions we make involve the opinions or votes of other people.

- The ability of groups of people to make better decisions than individuals is called the wisdom of the crowd.

- Ensemble machine learning involves combining predictions from multiple skillful models.

