Logistic regression is a simple but popular machine learning algorithm for binary classification that uses the logistic, or sigmoid, function at its core. It also comes implemented in the OpenCV library.

In this tutorial, you will learn how to apply OpenCV’s logistic regression algorithm, starting with a custom two-class dataset that we will generate ourselves. We will then apply these skills to a specific image classification application in a subsequent tutorial.

After completing this tutorial, you will know:

- Several of the most important characteristics of the logistic regression algorithm.

- How to use the logistic regression algorithm on a custom dataset in OpenCV.

Let’s get started.

Logistic Regression in OpenCV

Photo by Fabio Santaniello Bruun. Some rights reserved.

Tutorial Overview

This tutorial is divided into two parts; they are:

- Reminder of What Logistic Regression Is

- Discovering Logistic Regression in OpenCV

Reminder of What Logistic Regression Is

Logistic regression has already been explained well in these tutorials by Jason Brownlee [1, 2, 3], but let’s start by brushing up on some of the most important points:

- Logistic regression takes its name from the function used at its core, the logistic function (also known as the sigmoid function).

- Despite the use of the word regression in its name, logistic regression is a method for binary classification or, in simpler terms, for problems with two class values.

- Logistic regression can be regarded as an extension of linear regression because it maps (or squashes) the real-valued output of a linear combination of features into a probability value within the range [0, 1] through the use of the logistic function.

- Within a two-class scenario, the logistic regression method models the probability of the default class. As a simple example, let’s say that we are trying to distinguish between classes of flowers A and B from their petal count, and we are taking the default class to be A. Then, for an unseen input X, the logistic regression model would give the probability of X belonging to the default class A:

$$ P(X) = P(A = 1 | X) $$

- The input X is classified as belonging to the default class A if its probability P(X) > 0.5. Otherwise, it is classified as belonging to the non-default class B.

- The logistic regression model is represented by a set of parameters known as coefficients (or weights) learned from the training data. These coefficients are iteratively adjusted during training to minimize the error between the model predictions and the actual class labels.

- The coefficient values may be estimated during training using gradient descent or maximum likelihood estimation (MLE) techniques.
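
The squashing and thresholding behaviour described in the points above can be sketched in a few lines of plain Python. This is only an illustration and does not use OpenCV; the helper names `sigmoid` and `is_default_class` are hypothetical and chosen for this example:

```python
import math

def sigmoid(z):
    """Logistic function: maps any real-valued z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def is_default_class(probability, threshold=0.5):
    """Apply the decision rule from the text: default class if P(X) > 0.5."""
    return probability > threshold

# Large negative inputs map close to 0, large positive inputs close to 1
for z in (-4.0, 0.0, 4.0):
    p = sigmoid(z)
    print(z, round(p, 4), is_default_class(p))
```

Note that an input of exactly 0 maps to a probability of 0.5, which the strict inequality does not assign to the default class.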

Discovering Logistic Regression in OpenCV

Let’s start with a simple binary classification task before moving on to more complex problems.

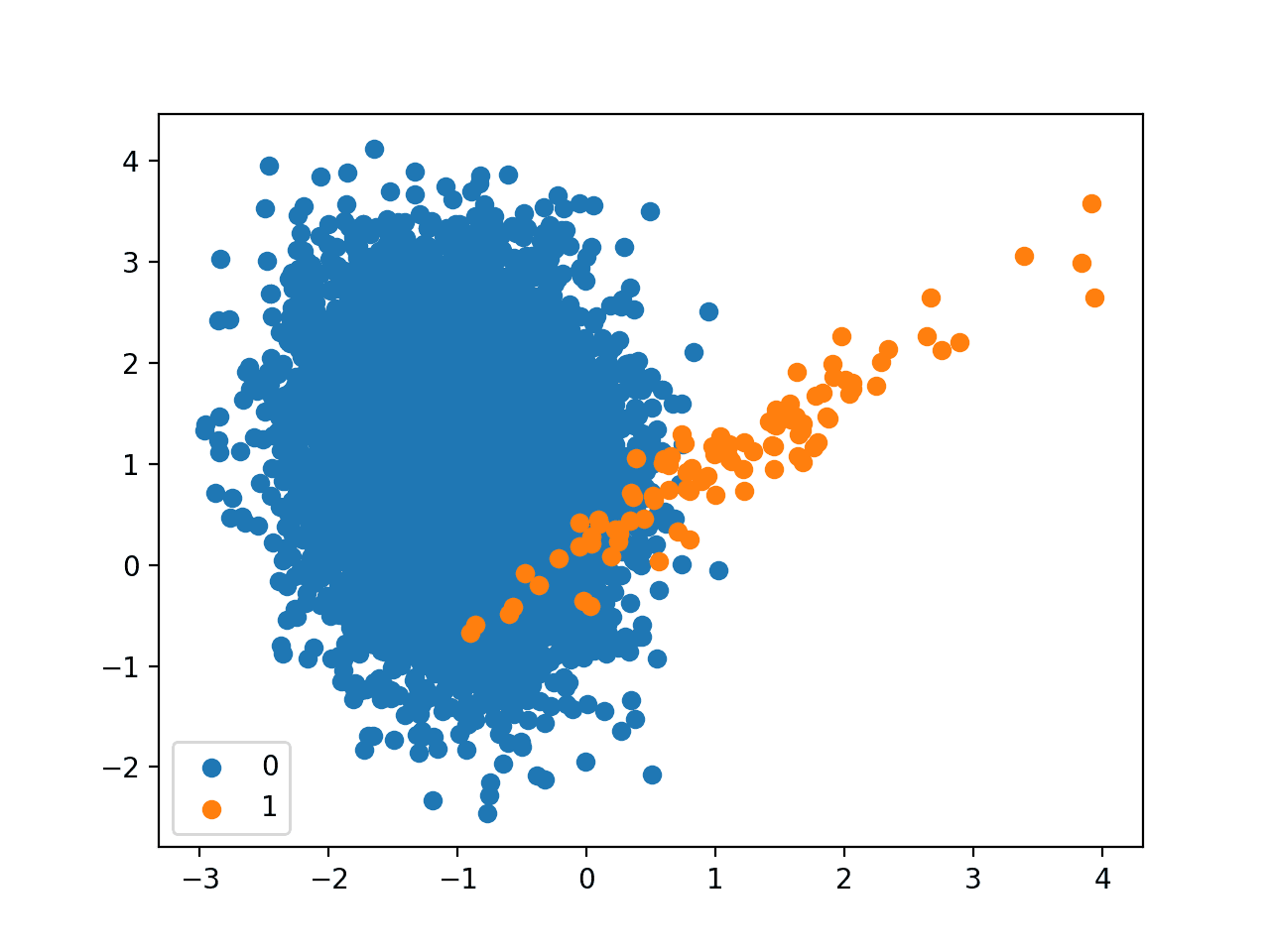

As we have already done in related tutorials through which we familiarised ourselves with other machine learning algorithms in OpenCV (such as the SVM algorithm), we shall be generating a dataset that comprises 100 data points (specified by n_samples), equally divided into 2 Gaussian clusters (specified by centers) having a standard deviation set to 5 (specified by cluster_std). To be able to replicate the results, we shall again exploit the random_state parameter, which we’re going to set to 15:

```python
# Generate a dataset of 2D data points and their ground truth labels
x, y_true = make_blobs(n_samples=100, centers=2, cluster_std=5, random_state=15)

# Plot the dataset
scatter(x[:, 0], x[:, 1], c=y_true)
show()
```

The code above should generate the following plot of data points. You may note that we are setting the color values to the ground truth labels to be able to distinguish between data points belonging to the two different classes:

The next step is to split the dataset into training and testing sets, where the former will be used to train the logistic regression model and the latter to test it:

```python
# Split the data into training and testing sets
x_train, x_test, y_train, y_test = ms.train_test_split(x, y_true, test_size=0.2, random_state=10)

# Plot the training and testing datasets
fig, (ax1, ax2) = subplots(1, 2)
ax1.scatter(x_train[:, 0], x_train[:, 1], c=y_train)
ax1.set_title('Training data')
ax2.scatter(x_test[:, 0], x_test[:, 1], c=y_test)
ax2.set_title('Testing data')
show()
```

The plots indicate that the two classes appear clearly distinguishable in the training and testing data. For this reason, we expect that this binary classification problem should be a straightforward task for the trained logistic regression model. Let’s create and train a logistic regression model in OpenCV to eventually see how it performs on the testing part of the dataset.

The first step is to create the logistic regression model itself:

```python
# Create an empty logistic regression model
lr = ml.LogisticRegression_create()
```

In the next step, we shall choose the training method by which we want the model’s coefficients to be updated during training. The OpenCV implementation lets us choose between two different methods: the Batch Gradient Descent and the Mini-Batch Gradient Descent methods.

If the Batch Gradient Descent method is chosen, the model’s coefficients will be updated using the entire training dataset at each iteration of the gradient descent algorithm. If we are working with very large datasets, then this method of updating the model’s coefficients can become very computationally expensive.

A more practical approach to updating the model’s coefficients, especially when working with large datasets, is to opt for the Mini-Batch Gradient Descent method, which instead divides the training data into smaller batches (called mini-batches, hence the name of the method) and updates the model’s coefficients by processing one mini-batch at a time.
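
To make the difference between the two methods concrete, here is a minimal sketch of gradient descent for a two-feature logistic regression model in plain Python. It is a generic illustration, not OpenCV's implementation; the function names, the toy dataset, the learning rate, and the number of passes are all assumptions made for this example:

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradient_step(theta, batch, learning_rate):
    """One coefficient update using only the samples in `batch`.

    theta = [bias, weight for x1, weight for x2]
    """
    grad = [0.0, 0.0, 0.0]
    for (x1, x2), y in batch:
        error = sigmoid(theta[0] + theta[1] * x1 + theta[2] * x2) - y
        grad[0] += error
        grad[1] += error * x1
        grad[2] += error * x2
    m = len(batch)
    return [t - learning_rate * g / m for t, g in zip(theta, grad)]

def train(data, batch_size, passes=50, learning_rate=0.1):
    theta = [0.0, 0.0, 0.0]
    for _ in range(passes):
        # Walk through the data in consecutive chunks of `batch_size` samples
        for start in range(0, len(data), batch_size):
            theta = gradient_step(theta, data[start:start + batch_size], learning_rate)
    return theta

# Hypothetical toy data: label 1 wherever x1 + x2 > 0
random.seed(0)
data = []
for _ in range(40):
    x1, x2 = random.uniform(-1, 1), random.uniform(-1, 1)
    data.append(((x1, x2), 1 if x1 + x2 > 0 else 0))

theta_batch = train(data, batch_size=len(data))  # one update per pass over the data
theta_mini = train(data, batch_size=5)           # eight updates per pass over the data
print('Batch:', theta_batch)
print('Mini-batch:', theta_mini)
```

With the same number of passes over the data, the mini-batch variant performs more (but noisier) updates, each of which is cheaper to compute than a full-batch update.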

We may check what OpenCV implements as its default training method by making use of the following line of code:

```python
# Check the default training method
print('Training Method:', lr.getTrainMethod())
```

```
Training Method: 0
```

The returned value of 0 represents the Batch Gradient Descent method in OpenCV. If we want to change this to the Mini-Batch Gradient Descent method, we can do so by passing ml.LogisticRegression_MINI_BATCH to the setTrainMethod function, and then proceed to set the size of the mini-batch:

```python
# Set the training method to Mini-Batch Gradient Descent and the size of the mini-batch
lr.setTrainMethod(ml.LogisticRegression_MINI_BATCH)
lr.setMiniBatchSize(5)
```

Setting the mini-batch size to 5 means that the training data will be divided into mini-batches containing 5 data points each, and the model’s coefficients will be updated iteratively after each of these mini-batches is processed in turn. If we were to set the size of the mini-batch to the total number of samples in the training dataset, this would effectively result in a Batch Gradient Descent operation, since the entire batch of training data would be processed at once at each iteration.
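
As a rough illustration of this partitioning, the sketch below splits 80 training samples (100 samples with test_size=0.2) into index ranges. The helper `split_into_mini_batches` is hypothetical and says nothing about how OpenCV walks through the data internally:

```python
def split_into_mini_batches(n_samples, batch_size):
    """Return (start, stop) index pairs covering n_samples in chunks of batch_size."""
    return [(start, min(start + batch_size, n_samples))
            for start in range(0, n_samples, batch_size)]

n_train = 80  # 100 samples with test_size=0.2 leaves 80 for training

mini_batches = split_into_mini_batches(n_train, 5)
print(len(mini_batches))   # 16
print(mini_batches[:2])    # [(0, 5), (5, 10)]

# A mini-batch as large as the training set leaves a single batch
print(split_into_mini_batches(n_train, n_train))  # [(0, 80)]
```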

Next, we shall define the number of iterations that we want to run the chosen training algorithm for, before it terminates:

```python
# Set the number of iterations
lr.setIterations(10)
```

We’re now set to train the logistic regression model on the training data:

```python
# Train the logistic regressor on the set of training data
lr.train(x_train.astype(float32), ml.ROW_SAMPLE, y_train.astype(float32))
```

As mentioned earlier, the training process aims to adjust the logistic regression model’s coefficients iteratively to minimize the error between the model predictions and the actual class labels.

Each training sample we have fed into the model comprises two feature values, denoted by $x_1$ and $x_2$. This means that we should expect the model we have generated to be defined by two coefficients (one per input feature) and an additional coefficient that defines the bias (or intercept).

Then the probability value, $\hat{y}$, returned by the model can be defined as follows:

$$ \hat{y} = \sigma( \beta_0 + \beta_1 \; x_1 + \beta_2 \; x_2 ) $$

where $\beta_1$ and $\beta_2$ denote the model coefficients, $\beta_0$ the bias, and $\sigma$ the logistic (or sigmoid) function that is applied to the real-valued output of the linear combination of features.

Let’s print out the learned coefficient values to see whether we retrieve as many as we expect:

```python
# Print the learned coefficients
print(lr.get_learnt_thetas())
```

```
[[-0.02413555 -0.34612912 0.08475047]]
```

We find that we retrieve three values as expected, which means that we can define the model that separates the samples of the two classes by:

$$ \hat{y} = \sigma( -0.0241 - 0.3461 \; x_1 + 0.0848 \; x_2 ) $$

We can assign a new, unseen data point to either of the two classes by plugging in its feature values, $x_1$ and $x_2$, into the model above. If the probability value returned by the model is > 0.5, we can take it as a prediction for class 0 (the default class). Otherwise, it is a prediction for class 1.
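
As a quick worked example, the following sketch plugs two data points into the model using the coefficient values printed above and compares the result with the 0.5 threshold. The two points and the function name `model_probability` are hypothetical, chosen only for illustration:

```python
import math

# Coefficients as printed above: [bias, coefficient of x1, coefficient of x2]
beta_0, beta_1, beta_2 = -0.02413555, -0.34612912, 0.08475047

def model_probability(x1, x2):
    """Apply the logistic function to the linear combination of the features."""
    z = beta_0 + beta_1 * x1 + beta_2 * x2
    return 1.0 / (1.0 + math.exp(-z))

# Two hypothetical points that fall on opposite sides of the 0.5 threshold
for x1, x2 in [(2.0, 1.0), (-2.0, 1.0)]:
    p = model_probability(x1, x2)
    print((x1, x2), round(p, 4), 'above 0.5' if p > 0.5 else 'not above 0.5')
```

Because the coefficient of $x_1$ is negative, increasing $x_1$ lowers the value returned by the model, which is why the two points end up on different sides of the threshold.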

Let’s go ahead to see how well this model predicts the target class labels by trying it out on the testing part of the dataset:

```python
# Predict the target labels of the testing data
_, y_pred = lr.predict(x_test.astype(float32))

# Compute and print the achieved accuracy
accuracy = (sum(y_pred[:, 0].astype(int) == y_test) / y_test.size) * 100
print('Accuracy:', accuracy, '%')
```

```
Accuracy: 95.0 %
```

We can plot out the ground truth against the predicted classes for the testing data, as well as print out the ground truth and predicted class labels, to investigate any misclassifications:

```python
# Plot the ground truth and predicted class labels
fig, (ax1, ax2) = subplots(1, 2)
ax1.scatter(x_test[:, 0], x_test[:, 1], c=y_test)
ax1.set_title('Ground Truth Testing data')
ax2.scatter(x_test[:, 0], x_test[:, 1], c=y_pred)
ax2.set_title('Predicted Testing data')
show()

# Print the ground truth and predicted class labels of the testing data
print('Ground truth class labels:', y_test, '\n',
      'Predicted class labels:', y_pred[:, 0].astype(int))
```

```
Ground truth class labels: [1 1 1 1 1 0 0 1 1 1 0 0 1 0 0 1 1 1 1 0]
 Predicted class labels: [1 1 1 1 1 0 0 1 0 1 0 0 1 0 0 1 1 1 1 0]
```

Test Data Points Belonging to Ground Truth and Predicted Classes, Where a Red Circle highlights a Misclassified Data Point

In this manner, we can see that one sample originally belonged to class 1 in the ground truth data but has been misclassified as belonging to class 0 in the model’s prediction.
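
The same check can be done programmatically. The short sketch below copies the two label lists printed above into plain Python lists (the variable names are ours) and locates the mismatch:

```python
# Label lists copied from the printed output above
y_test = [1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0]

# Indices at which the prediction differs from the ground truth
mismatches = [i for i, (t, p) in enumerate(zip(y_test, y_pred)) if t != p]
accuracy = 100.0 * (len(y_test) - len(mismatches)) / len(y_test)

print('Misclassified indices:', mismatches)  # [8]
print('Accuracy:', accuracy, '%')            # 95.0 %
```

One misclassification out of 20 test samples gives the 95% accuracy reported earlier.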

The entire code listing is as follows:

```python
from cv2 import ml
from sklearn.datasets import make_blobs
from sklearn import model_selection as ms
from numpy import float32
from matplotlib.pyplot import scatter, show, subplots

# Generate a dataset of 2D data points and their ground truth labels
x, y_true = make_blobs(n_samples=100, centers=2, cluster_std=5, random_state=15)

# Plot the dataset
scatter(x[:, 0], x[:, 1], c=y_true)
show()

# Split the data into training and testing sets
x_train, x_test, y_train, y_test = ms.train_test_split(x, y_true, test_size=0.2, random_state=10)

# Plot the training and testing datasets
fig, (ax1, ax2) = subplots(1, 2)
ax1.scatter(x_train[:, 0], x_train[:, 1], c=y_train)
ax1.set_title('Training data')
ax2.scatter(x_test[:, 0], x_test[:, 1], c=y_test)
ax2.set_title('Testing data')
show()

# Create an empty logistic regression model
lr = ml.LogisticRegression_create()

# Check the default training method
print('Training Method:', lr.getTrainMethod())

# Set the training method to Mini-Batch Gradient Descent and the size of the mini-batch
lr.setTrainMethod(ml.LogisticRegression_MINI_BATCH)
lr.setMiniBatchSize(5)

# Set the number of iterations
lr.setIterations(10)

# Train the logistic regressor on the set of training data
lr.train(x_train.astype(float32), ml.ROW_SAMPLE, y_train.astype(float32))

# Print the learned coefficients
print(lr.get_learnt_thetas())

# Predict the target labels of the testing data
_, y_pred = lr.predict(x_test.astype(float32))

# Compute and print the achieved accuracy
accuracy = (sum(y_pred[:, 0].astype(int) == y_test) / y_test.size) * 100
print('Accuracy:', accuracy, '%')

# Plot the ground truth and predicted class labels
fig, (ax1, ax2) = subplots(1, 2)
ax1.scatter(x_test[:, 0], x_test[:, 1], c=y_test)
ax1.set_title('Ground truth testing data')
ax2.scatter(x_test[:, 0], x_test[:, 1], c=y_pred)
ax2.set_title('Predicted testing data')
show()

# Print the ground truth and predicted class labels of the testing data
print('Ground truth class labels:', y_test, '\n',
      'Predicted class labels:', y_pred[:, 0].astype(int))
```

In this tutorial, we have set only a few of the training parameters of the logistic regression model implemented in OpenCV: the training method to use (together with its mini-batch size) and the number of iterations for which to run the chosen training algorithm.

However, these are not the only parameter values that can be set for the logistic regression method. Other parameters, such as the learning rate and the type of regularization to perform, can also be modified to achieve better training accuracy. Hence, we suggest that you explore these parameters and investigate how different values can affect the model’s training and prediction accuracy.

Further Reading

This section provides more resources on the topic if you want to go deeper.

Books

- Machine Learning for OpenCV, 2017.

- Mastering OpenCV 4 with Python, 2019.

Websites

- Logistic Regression, https://docs.opencv.org/3.4/dc/dd6/ml_intro.html#ml_intro_lr

Summary

In this tutorial, you learned how to apply OpenCV’s logistic regression algorithm, starting with a custom two-class dataset we generated.

Specifically, you learned:

- Several of the most important characteristics of the logistic regression algorithm.

- How to use the logistic regression algorithm on a custom dataset in OpenCV.

Do you have any questions?

Ask your questions in the comments below, and I will do my best to answer.
