Data preparation can make or break the predictive ability of your model.

In Chapter 3 of their book Applied Predictive Modeling, Kuhn and Johnson introduce the process of data preparation. They refer to it as the addition, deletion or transformation of training set data.

In this post you will discover the data pre-processing steps that you can use to improve the predictive ability of your models.

Let’s get started.

I Love Spreadsheets

Photo by Craig Chew-Moulding, some rights reserved

Data Preparation

You must pre-process your raw data before you model your problem. The specific preparation may depend on the data that you have available and the machine learning algorithms you want to use.

Sometimes, pre-processing of data can lead to unexpected improvements in model accuracy. This may be because a relationship in the data has been simplified or made easier to see.

Data preparation is an important step, and you should experiment with pre-processing steps that are appropriate for your data to see if you can get that desirable boost in model accuracy.

There are three types of pre-processing you can consider for your data:

- Add attributes to your data

- Remove attributes from your data

- Transform attributes in your data

We will dive into each of these three types of pre-processing and review some specific examples of operations that you can perform.

Add Data Attributes

Advanced models can extract the relationships from complex attributes, although some models require those relationships to be spelled out plainly. Deriving new attributes from your training data to include in the modeling process can give you a boost in model performance.

- Dummy Attributes: Categorical attributes can be converted into n binary attributes, where n is the number of categories (or levels) that the attribute has. These denormalized or decomposed attributes are known as dummy attributes or dummy variables.

- Transformed Attribute: A transformed variation of an attribute can be added to the dataset in order to allow a linear method to exploit possible linear and non-linear relationships between attributes. Simple transforms like log, square and square root can be used.

- Missing Data: Missing values in an attribute can be imputed using a reliable method, such as k-nearest neighbors.
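
The three operations above can be sketched with NumPy as follows. This is a minimal illustration, not the book's method: the function names are hypothetical, the data is made up, and the imputation is one simple k-nearest-neighbors variant (fill each missing value with the column mean of the k nearest complete rows, measuring distance only on the columns the row actually has). In a real project you would more likely reach for library tools such as scikit-learn's `KNNImputer`.

```python
import numpy as np

def dummy_encode(values):
    """Return the sorted category levels and one 0/1 column per level."""
    levels = sorted(set(values))
    dummies = np.array([[1 if v == level else 0 for level in levels] for v in values])
    return levels, dummies

def add_transformed(x):
    """Stack a strictly positive column with its log, square and square-root versions."""
    x = np.asarray(x, dtype=float)
    return np.column_stack([x, np.log(x), x ** 2, np.sqrt(x)])

def knn_impute(X, k=2):
    """Fill each NaN with the column mean of the k nearest complete rows.

    Distances use only the columns the incomplete row has observed.
    Assumes the data contains at least k complete rows.
    """
    X = np.asarray(X, dtype=float)
    complete = X[~np.isnan(X).any(axis=1)]
    filled = X.copy()
    for i, row in enumerate(X):
        missing = np.isnan(row)
        if not missing.any():
            continue
        diffs = complete[:, ~missing] - row[~missing]
        dist = np.sqrt((diffs ** 2).sum(axis=1))
        nearest = complete[np.argsort(dist)[:k]]
        filled[i, missing] = nearest[:, missing].mean(axis=0)
    return filled

levels, dummies = dummy_encode(["red", "blue", "red"])
print(levels)            # ['blue', 'red']
print(dummies.tolist())  # [[0, 1], [1, 0], [0, 1]]

print(add_transformed([1.0, 4.0]))
print(knn_impute([[1, 2], [2, np.nan], [3, 6], [10, 20]]))
```

In the imputation example, the second row's missing value is filled from the two rows whose first column is closest to 2 (the rows starting with 1 and 3), giving (2 + 6) / 2 = 4.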

Remove Data Attributes

Some methods perform poorly with redundant or duplicate attributes. You can get a boost in model accuracy by removing attributes from your data.

- Projection: Training data can be projected into lower-dimensional spaces that still characterize the inherent relationships in the data. A popular approach is Principal Component Analysis (PCA), where the principal components found by the method can be taken as a reduced set of input attributes.

- Spatial Sign: A spatial sign projection maps each (centered and scaled) sample onto the surface of a multidimensional sphere. The results can be used to highlight the existence of outliers that can be modified or removed from the data.

- Correlated Attributes: Some algorithms degrade in performance when highly correlated attributes are present. Pairs of attributes with high correlation can be identified, and one attribute from each highly correlated pair can be removed from the data.
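
Here is a minimal NumPy sketch of the three removal steps above. The function names and the 0.9 correlation cutoff are hypothetical choices; PCA is computed via the singular value decomposition of the centered data, and the correlation filter is a simple greedy variant (keep columns left to right, skipping any that is too correlated with one already kept), not necessarily the exact procedure described by Kuhn and Johnson.

```python
import numpy as np

def pca_project(X, n_components):
    """Project centered data onto its top principal components using the SVD."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T

def spatial_sign(X):
    """Center and scale each column, then divide every row by its Euclidean norm."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    norms = np.linalg.norm(Z, axis=1, keepdims=True)
    return Z / np.where(norms == 0, 1.0, norms)  # guard against all-zero rows

def drop_correlated(X, threshold=0.9):
    """Return the indices of columns to keep.

    Columns are scanned left to right; a column is skipped if its absolute
    correlation with any already-kept column exceeds the threshold.
    """
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return keep

rng = np.random.default_rng(0)
a, b = rng.normal(size=100), rng.normal(size=100)
X = np.column_stack([a, 2 * a + 0.01 * b, b])  # column 1 is almost a copy of column 0

print(drop_correlated(X))       # [0, 2]
print(pca_project(X, 2).shape)  # (100, 2)
print(np.linalg.norm(spatial_sign(X), axis=1)[:3])  # every row now has length 1
```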

Transform Data Attributes

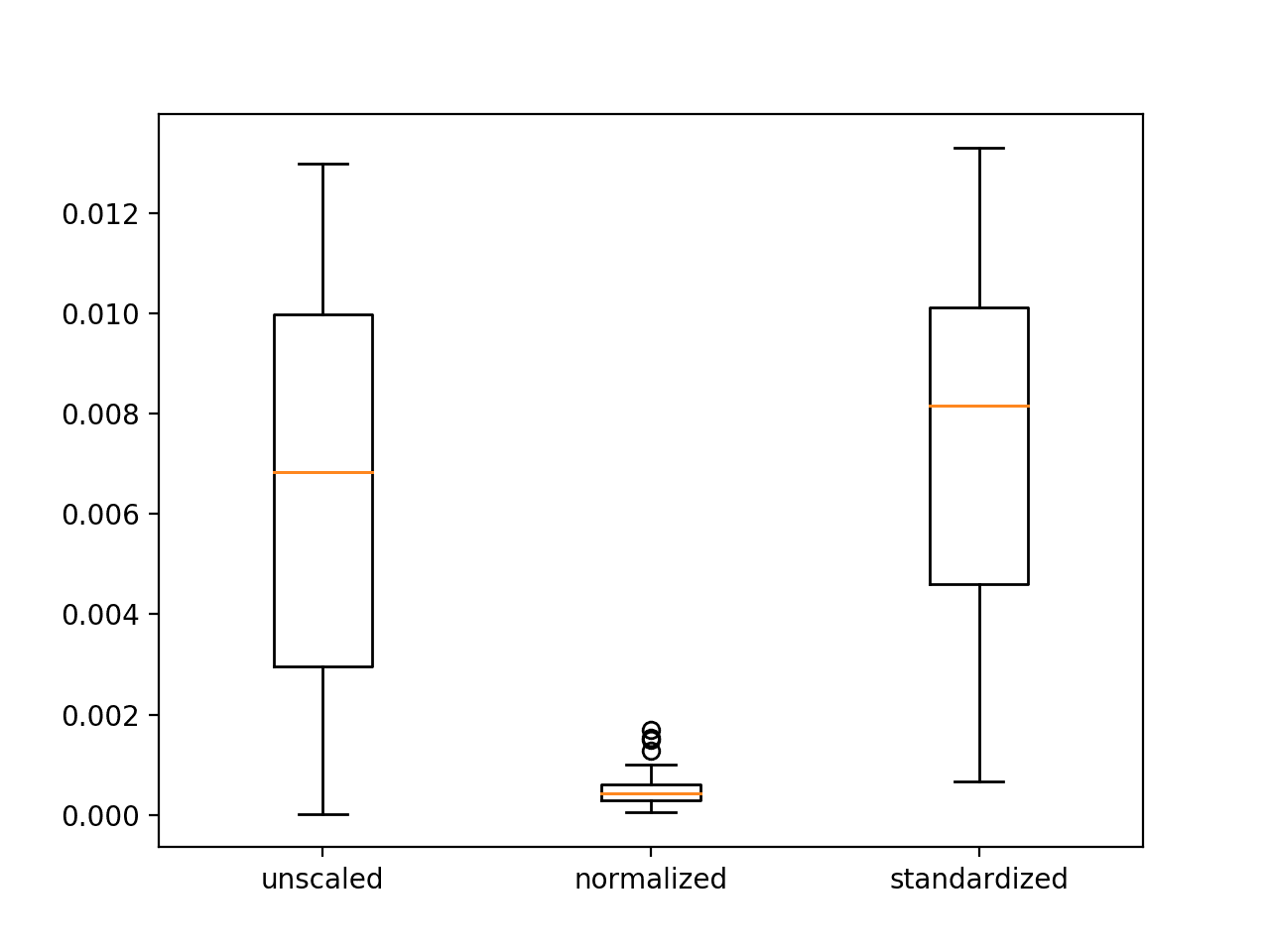

Transformations of training data can reduce the skewness of data as well as the prominence of outliers in the data. Many models expect data to be transformed before you can apply the algorithm.

- Centering: Transform the data so that it has a mean of zero. When the centered data is also divided by its standard deviation so that it has a standard deviation of one, this is typically called data standardization.

- Scaling: A standard scaling transformation is to map the data from the original scale to a scale between zero and one. This is typically called data normalization.

- Remove Skew: Skewed data has a distribution that is pushed to one side or the other (toward larger or smaller values) rather than being symmetric like a normal distribution. Some methods assume normally distributed data and can perform better if the skew is removed. Try replacing the attribute with the log, square root or inverse of the values.

- Box-Cox: The Box-Cox family of power transforms can be used to estimate a suitable transform from the data and reliably reduce skew. It requires strictly positive values.

- Binning: Numeric data can be made discrete by grouping values into bins. This is typically called data discretization. This process can be performed manually, although it is more reliable if performed systematically and automatically using a heuristic that makes sense in the domain.
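
The transforms above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions: the function names are hypothetical, the sample is synthetic log-normal data, the Box-Cox lambda is chosen by a simple grid search over the standard profile log-likelihood (a library such as SciPy would estimate it for you), and binning uses equal-width bins as one simple systematic heuristic.

```python
import numpy as np

def standardize(x):
    """Center to a mean of zero, then scale to a standard deviation of one."""
    return (x - x.mean()) / x.std()

def normalize(x):
    """Min-max scale values onto the range [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())

def box_cox(x, lam):
    """Box-Cox power transform; x must be strictly positive."""
    if abs(lam) < 1e-8:
        return np.log(x)  # the lambda = 0 member of the family is the log
    return (x ** lam - 1) / lam

def fit_box_cox(x, lambdas=None):
    """Pick lambda from a grid by maximizing the Box-Cox profile log-likelihood."""
    if lambdas is None:
        lambdas = np.linspace(-2, 2, 81)
    n, log_sum = len(x), np.log(x).sum()

    def log_likelihood(lam):
        return -n / 2 * np.log(box_cox(x, lam).var()) + (lam - 1) * log_sum

    best = max(lambdas, key=log_likelihood)
    return best, box_cox(x, best)

def equal_width_bins(x, n_bins):
    """Discretize values into n_bins equal-width bins labeled 0 .. n_bins - 1."""
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    return np.digitize(x, edges[1:-1])  # interior edges only, so the max lands in the last bin

rng = np.random.default_rng(1)
skewed = np.exp(rng.normal(size=500))  # log-normal sample: strongly right-skewed

z = standardize(skewed)
print(round(z.mean(), 6), round(z.std(), 6))
print(normalize(np.array([2.0, 4.0, 6.0])))  # [0.  0.5 1. ]

# Simple skew-reducing replacements to try
log_x, sqrt_x, inv_x = np.log(skewed), np.sqrt(skewed), 1.0 / skewed

lam, boxcox_x = fit_box_cox(skewed)
print(lam)  # expected to be near 0 (close to a log transform) for log-normal data

print(equal_width_bins(np.array([0.0, 1.0, 2.0, 3.0, 4.0]), 2))  # [0 0 1 1 1]
```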

Summary

Data pre-processing is an important step that can be required to prepare raw data for modeling and to meet the data expectations of a specific machine learning algorithm, and it can give unexpected boosts in model accuracy.

In this post we discovered three groups of data pre-processing methods:

- Adding Attributes

- Removing Attributes

- Transforming Attributes

The next time you are looking for a boost in model accuracy, consider what new perspectives you can engineer on your data for your models to explore and exploit.

Can you explain the concept of attributes and dummy attributes in more detail?

Sure Juhyoung Lee,

You can take a categorical attribute like “color” with the values “red” and “blue” and turn it into two binary attributes, has_red and has_blue.

These new binary variables are dummy variables.

You can learn more here:

https://en.wikipedia.org/wiki/Dummy_variable_(statistics)

It was a great article, although I had a question. Suppose there is a dataset consisting of means, modes, minimums, maximums, etc. How can we represent all those values on a common scale, or generalize the values? For example, let’s say the mean of heights in a group of people is x, the mode is y, and the minimum value is z, and there is a group 2 with the same kind of data. Can the values be represented on a common scale?

Hi Kay,

You can scale each column (data type or feature) separately to a range of 0-1.

You can use the formula:

y = (x - min) / (max - min)

Where x is a given value and min and max are the limits of values in the column.

I hope that helps.
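
As a minimal sketch of that answer, assuming NumPy and a hypothetical summary table with one row per group and one column per statistic (the height values are made up), the formula can be applied to each column separately:

```python
import numpy as np

# Hypothetical summary table: one row per group of people, with columns
# mean height, mode height and minimum height (values are made up).
stats = np.array([
    [170.0, 168.0, 150.0],
    [165.0, 172.0, 140.0],
    [180.0, 175.0, 155.0],
])

col_min = stats.min(axis=0)  # per-column minimum
col_max = stats.max(axis=0)  # per-column maximum
scaled = (stats - col_min) / (col_max - col_min)  # y = (x - min) / (max - min), column by column
print(scaled)
```

If the scaled values feed a model, compute min and max on the training data only and reuse those same limits when scaling new data.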

Thanks for the article, Jason!

If I have some normally distributed features and some skewed features, can I just transform the skewed features and leave the normally distributed ones untouched? For example, can I log transform some features and leave others unchanged?

Regards!

Absolutely.
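
A minimal NumPy sketch of transforming only the skewed columns. The helper names are hypothetical, the data is made up, the 0.75 skewness cutoff is an arbitrary illustrative choice, and `log1p` (log of 1 + x) is used instead of a plain log so that zero values are allowed.

```python
import numpy as np

def skewness(x):
    """Skewness as the mean of cubed z-scores (no small-sample correction)."""
    z = (x - x.mean()) / x.std()
    return (z ** 3).mean()

def log_transform_columns(X, columns):
    """Return a copy of X with log1p applied to the chosen columns only."""
    X = np.asarray(X, dtype=float).copy()
    X[:, columns] = np.log1p(X[:, columns])
    return X

# Made-up data: column 0 is roughly symmetric, column 1 is heavily right-skewed.
X = np.array([[1.2, 1.0], [0.8, 10.0], [1.0, 100.0], [1.1, 1000.0]])

# Hypothetical cutoff: treat |skewness| > 0.75 as "skewed".
skewed_cols = [j for j in range(X.shape[1]) if abs(skewness(X[:, j])) > 0.75]
X_new = log_transform_columns(X, skewed_cols)
print(skewed_cols)  # [1]
```

Column 0 passes through unchanged while column 1 is log transformed.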

Hi!

You could also add aggregation features, or statistical features in general (such as five-number summaries), and outlier removal to the list.

Great tip.

About “Correlated Attributes” that you mentioned in this post:

I was wondering if you have a post on using it (for example, in sklearn) so I can read more and understand it better.

I may have an example in the R book. Perhaps search the blog?

I have a question about scaling. If we have a binary classification target encoded as (0, 1), is it better to keep it as it is or change it to (-1, 1), for example? Or does it depend on the data?

When I read posts about machine learning, I am not sure whether the notes are always true or not.

I noticed that in many cases, the answer depends on the type of data. How can we tell whether a note/rule/point depends on the data type?

Really depends on the data and the algorithms being used.

When you say “Correlated Attributes: Some algorithms degrade in importance with the existence of highly correlated attributes. Pairwise attributes with high correlation can be identified and the most correlated attributes can be removed from the data.”

I was totally convinced of the opposite :O

I mean: I usually remove those attributes which are not correlated and keep those highly correlated… this can explain why I couldn’t optimise my models too much.

Do you have more documentation about this particular subject?

Thanks!

Highly correlated input features can cause problems for many models: at worst they result in worse skill, and at best they create a lot of redundancy in the model.

Formally, the problem is often referred to as multicollinearity:

https://en.wikipedia.org/wiki/Multicollinearity

Among all of these steps, which are the most important ones? For example, if I apply PCA for dimensionality reduction and create just two new features, can I expect that other problems in the data (e.g. outliers, multicollinearity, skewed distributions) will no longer exist?

For the Transformed Attribute item in the Add Data Attributes section, how can I choose which transformation is best among log, square and square root? Can I apply each of those transformations and keep all of them in the dataset? I feel like it may cause redundancy and multicollinearity.

Thank you for your great posts!!

The framing of the problem offers the biggest leverage.

Outliers can distort PCA, so it is better to remove them first.

Try maintaining multiple “views” of the data and try models on each to explore the best combination of view/model/config.

Hi Jason,

For pairwise attributes with high correlation, what is the accepted level? The correlation can be -1 to +1.

Perhaps below 0.5 (in absolute value). I’d recommend testing to see what works best for your data and algorithms.

Hi Jason,

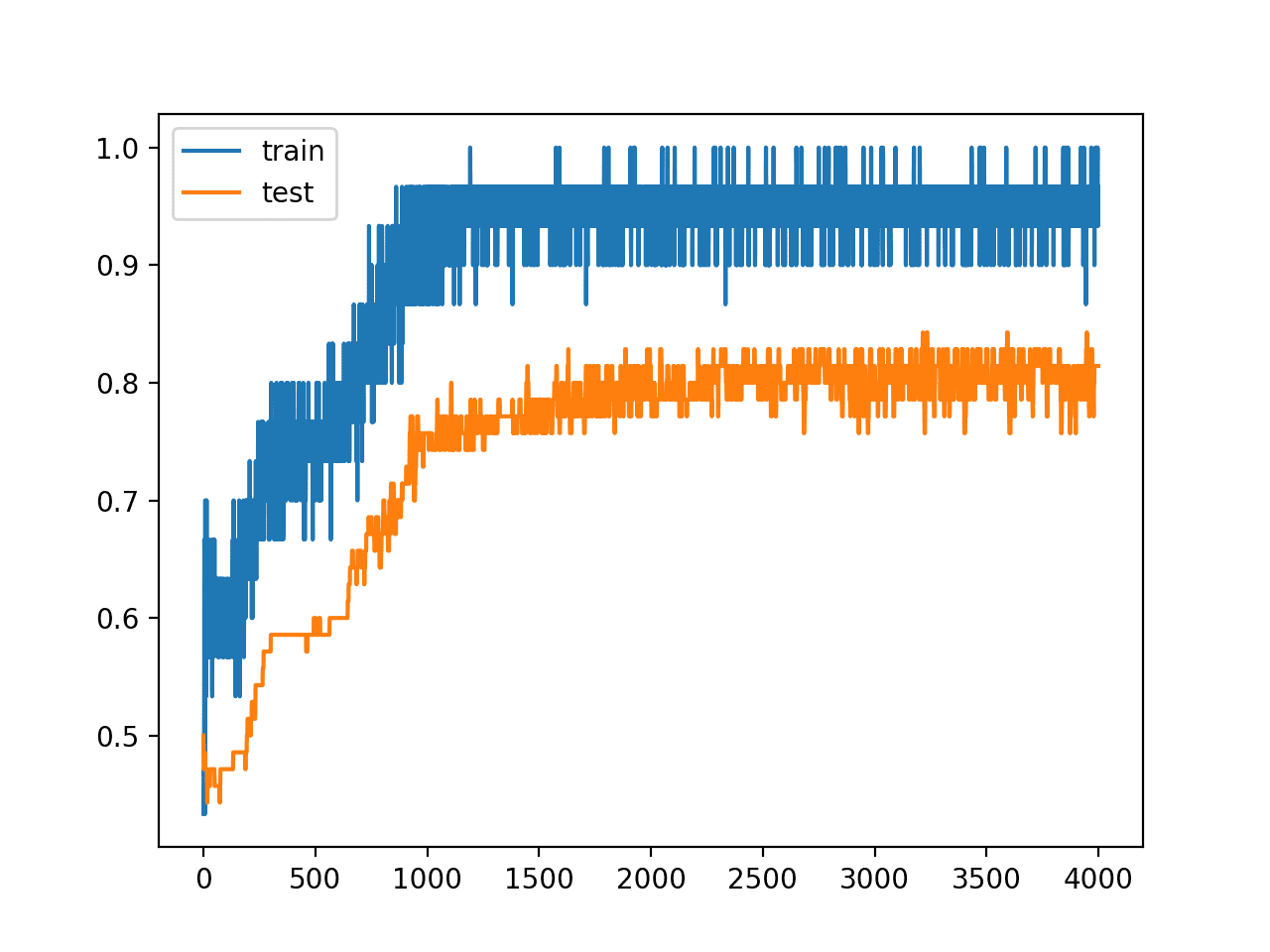

I have participated in a Kaggle competition in which I have to classify forest cover type.

I tried everything: stacking various models, feature engineering, feature extraction,

but my model accuracy is not increasing above 80%.

I also found that two of the cover types are really hard to separate, so I tried to build a model just to separate these two cover types.

Nothing is working, so I am frustrated. It feels like I am missing something that others know.

It would be really helpful if you could give some insight. I have been working on this for two weeks and am very frustrated now.

Sorry for asking such a stupid and long question.

I have some suggestions here that may help:

https://machinelearningmastery.com/machine-learning-performance-improvement-cheat-sheet/

And here:

https://machinelearningmastery.com/start-here/#better

Hi Jason,

I have a .csv file with 10 columns and roughly 6000 rows. My data is represented in the form of only 0 and 1. Each row represents a timeframe of a video.

Let’s say I want to bring the number of rows down from 6000 to 1000 without losing information. What method is reliable in my case? And how can it be done?

Without losing information? Not sure I can help sorry.

Hi Sir,

As kNN is a distance-based algorithm, data normalization may have an impact on it. But is it possible for normalization to negatively impact model accuracy with kNN?

(I am getting RMSE=50 without normalization and RMSE=70 with normalization in the kNN algorithm.)

Is this possible, or am I making a logical mistake?

It is possible that data scaling does not help your model.

Thanks for your reply.

You’re welcome.
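
To illustrate why scaling can make kNN better or worse, here is a toy NumPy sketch (the points are made up and the helper name is hypothetical). Min-max scaling changes which training point is nearest to a query, because it changes how much each feature contributes to the Euclidean distance. Whether the scaled or unscaled neighbor is the "right" one depends on how relevant each feature really is, which is why scaling can raise or lower RMSE.

```python
import numpy as np

def nearest_index(train, query):
    """Index of the training row closest to the query in Euclidean distance."""
    return int(np.argmin(np.linalg.norm(train - query, axis=1)))

# Made-up training points: feature 0 spans 0..1, feature 1 spans 0..100.
train = np.array([[0.0, 0.0], [1.0, 10.0], [0.0, 100.0]])
query = np.array([1.0, 3.0])

# Unscaled, the large-range feature 1 dominates the distance.
print(nearest_index(train, query))  # 0

# Min-max scale the training data and the query using the training min/max.
lo, hi = train.min(axis=0), train.max(axis=0)
scaled_train = (train - lo) / (hi - lo)
scaled_query = (query - lo) / (hi - lo)
print(nearest_index(scaled_train, scaled_query))  # 1
```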

I like what you write very much; it's very clear. Would you mind sending me a PDF version, please?

rustam@ui.ac.id

I believe you can print the web page into PDF using your browser.