Data can change over time. This can degrade the performance of predictive models that assume a static relationship between input and output variables.

This problem of the changing underlying relationships in the data is called concept drift in the field of machine learning.

In this post, you will discover the problem of concept drift and ways you may be able to address it in your own predictive modeling problems.

After completing this post, you will know:

- The problem of data changing over time.

- What is concept drift and how it is defined.

- How to handle concept drift in your own predictive modeling problems.


Let’s get started.

A Gentle Introduction to Concept Drift in Machine Learning

Photo by Joe Cleere, some rights reserved.

Overview

This post is divided into 3 parts; they are:

- Changes to Data Over Time

- What is Concept Drift?

- How to Address Concept Drift

Changes to Data Over Time

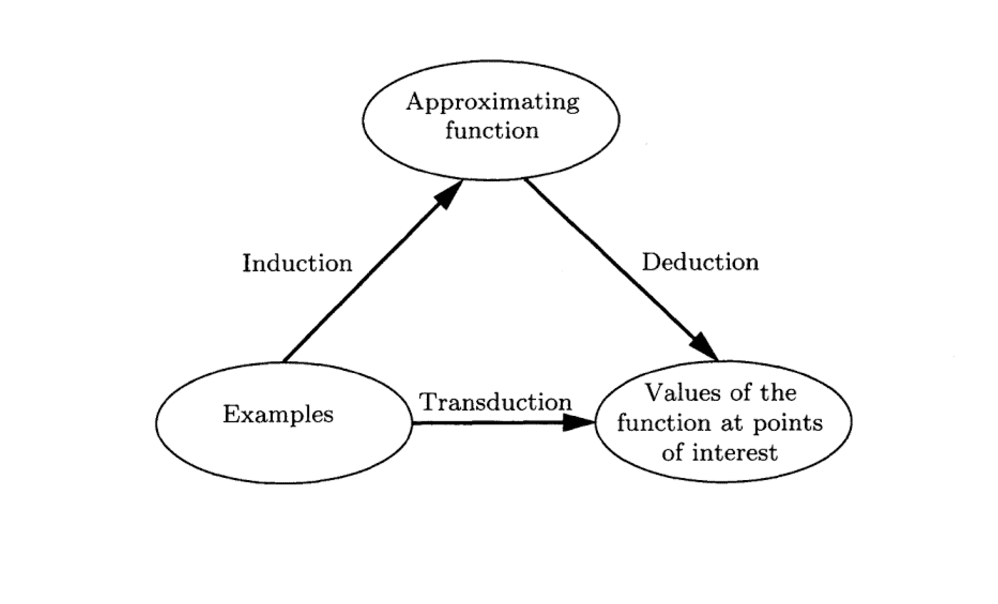

Predictive modeling is the problem of learning a model from historical data and using the model to make predictions on new data where we do not know the answer.

Technically, predictive modeling is the problem of approximating a mapping function (f) given input data (X) to predict an output value (y).

y = f(X)

Often, this mapping is assumed to be static, meaning that the mapping learned from historical data is just as valid in the future on new data and that the relationships between input and output data do not change.

This is true for many problems, but not all problems.

In some cases, the relationships between input and output data can change over time, meaning that in turn there are changes to the unknown underlying mapping function.

The changes may be consequential: predictions made by a model trained on older historical data may no longer be correct, or may be less correct than they would be if the model were trained on more recent historical data.

It may be possible to detect these changes and, once they are detected, to update the learned model to reflect them.
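To make the idea concrete, here is a minimal sketch on synthetic data. It assumes a hypothetical one-variable problem where the true mapping is y = 2x in the historical data and drifts to y = 3x in the new data; the helper names (`make_data`, `fit_slope`, `mse`) are made up for illustration and are not from any library.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n, slope):
    """Hypothetical data source: y = slope * x + noise."""
    X = rng.uniform(-1.0, 1.0, size=n)
    y = slope * X + rng.normal(0.0, 0.1, size=n)
    return X, y

def fit_slope(X, y):
    """Least-squares slope for a line through the origin."""
    return float((X @ y) / (X @ X))

def mse(slope, X, y):
    """Mean squared error of the model y_hat = slope * x."""
    return float(np.mean((y - slope * X) ** 2))

# Historical data: the true mapping is y = 2x (an assumption of this sketch).
X_old, y_old = make_data(500, slope=2.0)
# New data: the mapping has drifted to y = 3x.
X_new, y_new = make_data(500, slope=3.0)

static_slope = fit_slope(X_old, y_old)  # model learned once on old data
refit_slope = fit_slope(X_new, y_new)   # model learned on recent data

error_on_old = mse(static_slope, X_old, y_old)
error_on_new = mse(static_slope, X_new, y_new)
error_refit = mse(refit_slope, X_new, y_new)
print(error_on_old, error_on_new, error_refit)
```

The static model's error on the new data should be much larger than its error on the old data, while a model fit on the recent data recovers the original level of skill.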

… many data mining methods assume that discovered patterns are static. However, in practice patterns in the database evolve over time. This poses two important challenges. The first challenge is to detect when concept drift occurs. The second challenge is to keep the patterns up-to-date without inducing the patterns from scratch.

— Page 10, Data Mining and Knowledge Discovery Handbook, 2010.

What is Concept Drift?

Concept drift in machine learning and data mining refers to the change in the relationships between input and output data in the underlying problem over time.

In other domains, this change may be called “covariate shift,” “dataset shift,” or “nonstationarity.”

In most challenging data analysis applications, data evolve over time and must be analyzed in near real time. Patterns and relations in such data often evolve over time, thus, models built for analyzing such data quickly become obsolete over time. In machine learning and data mining this phenomenon is referred to as concept drift.

— An overview of concept drift applications, 2016.

A concept in “concept drift” refers to the unknown and hidden relationship between inputs and output variables.

For example, one concept in weather data may be the season that is not explicitly specified in temperature data, but may influence temperature data. Another example may be customer purchasing behavior over time that may be influenced by the strength of the economy, where the strength of the economy is not explicitly specified in the data. These elements are also called a “hidden context”.

A difficult problem with learning in many real-world domains is that the concept of interest may depend on some hidden context, not given explicitly in the form of predictive features. A typical example is weather prediction rules that may vary radically with the season. […] Often the cause of change is hidden, not known a priori, making the learning task more complicated.

— The problem of concept drift: definitions and related work, 2004.

The change to the data could take any form. It is conceptually easier to consider the case where there is some temporal consistency to the change such that data collected within a specific time period show the same relationship and that this relationship changes smoothly over time.

Note that this is not always the case and this assumption should be challenged. Some other types of changes may include:

- A gradual change over time.

- A recurring or cyclical change.

- A sudden or abrupt change.

Different concept drift detection and handling schemes may be required for each situation. Often, recurring change and long-term trends are considered systematic and can be explicitly identified and handled.
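If you want to experiment with these change types, one option is to generate synthetic streams. The sketch below assumes a hypothetical mapping y = slope * x whose slope follows a gradual, recurring, or abrupt schedule; the function names and the specific schedules are illustrative choices, not a standard benchmark.

```python
import numpy as np

def drifting_slope(t, kind, n_steps):
    """Hypothetical slope of the mapping y = slope * x at time step t."""
    if kind == "gradual":
        # Moves smoothly from 1.0 towards 2.0 over the whole stream.
        return 1.0 + t / n_steps
    if kind == "recurring":
        # Oscillates around 1.0 with a period of n_steps / 4.
        return 1.0 + 0.5 * np.sin(2.0 * np.pi * t / (n_steps / 4))
    if kind == "abrupt":
        # Jumps from 1.0 to 2.0 halfway through the stream.
        return 1.0 if t < n_steps // 2 else 2.0
    raise ValueError(f"unknown drift kind: {kind}")

def make_stream(kind, n_steps=400, noise=0.1, seed=0):
    """Return inputs, outputs, and the true slope at every time step."""
    rng = np.random.default_rng(seed)
    slopes = np.array([drifting_slope(t, kind, n_steps) for t in range(n_steps)])
    X = rng.uniform(-1.0, 1.0, size=n_steps)
    y = slopes * X + rng.normal(0.0, noise, size=n_steps)
    return X, y, slopes

streams = {kind: make_stream(kind) for kind in ("gradual", "recurring", "abrupt")}
```

Each stream can then be fed to a model in time order to see how a given detection or handling scheme copes with that type of change.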

Concept drift may be present in supervised learning problems where predictions are made and data is collected over time. These are traditionally called online learning problems, given the change expected in the data over time.

There are domains where predictions are ordered by time, such as time series forecasting and predictions on streaming data. In these domains, the problem of concept drift is more likely and should be explicitly tested for and addressed.

A common challenge when mining data streams is that the data streams are not always strictly stationary, i.e., the concept of data (underlying distribution of incoming data) unpredictably drifts over time. This has encouraged the need to detect these concept drifts in the data streams in a timely manner.

— Concept Drift Detection for Streaming Data, 2015.

Indre Zliobaite in the 2010 paper titled “Learning under Concept Drift: An Overview” provides a framework for thinking about concept drift and the decisions required by the machine learning practitioner, as follows:

- Future assumption: a designer needs to make an assumption about the future data source.

- Change type: a designer needs to identify possible change patterns.

- Learner adaptivity: based on the change type and the future assumption, a designer chooses the mechanisms which make the learner adaptive.

- Model selection: a designer needs a criterion to choose a particular parametrization of the selected learner at every time step (e.g. the weights for ensemble members, the window size for variable window method).

This framework may help in thinking about the decision points available to you when addressing concept drift on your own predictive modeling problems.

How to Address Concept Drift?

There are many ways to address concept drift; let’s take a look at a few.

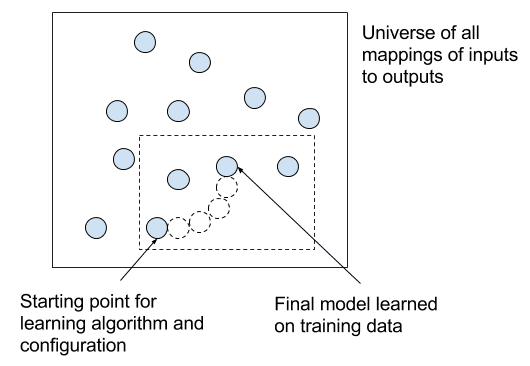

1. Do Nothing (Static Model)

The most common way is to not handle it at all and assume that the data does not change.

This allows you to develop a single “best” model once and use it on all future data.

This should be your starting point and baseline for comparison to other methods. If you believe your dataset may suffer concept drift, you can use a static model in two ways:

- Concept Drift Detection. Monitor the skill of the static model over time; if skill drops, concept drift may be occurring and some intervention may be required.

- Baseline Performance. Use the skill of the static model as a baseline to compare to any intervention you make.
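As a rough sketch of monitoring the skill of a static model, the code below averages squared errors over consecutive blocks of observations and flags the first block whose error exceeds a multiple of the first block's error. The block size, the tolerance of 3x, and the synthetic stream (slope changing from 2 to 3 at step 200) are all assumptions made for illustration; a real monitor would need thresholds tuned to your problem.

```python
import numpy as np

def block_means(values, window):
    """Mean of each consecutive block of `window` values (remainder dropped)."""
    values = np.asarray(values, dtype=float)
    n_blocks = len(values) // window
    return values[: n_blocks * window].reshape(n_blocks, window).mean(axis=1)

def first_drift_block(errors, window=100, tolerance=3.0):
    """Index of the first block whose mean error exceeds tolerance x the
    first block's mean error, or None if no block does."""
    means = block_means(errors, window)
    baseline = means[0]
    for i, value in enumerate(means):
        if value > tolerance * baseline:
            return i
    return None

# Synthetic stream: the true slope changes from 2.0 to 3.0 at step 200.
rng = np.random.default_rng(1)
n, change_point = 400, 200
X = rng.uniform(-1.0, 1.0, size=n)
true_slope = np.where(np.arange(n) < change_point, 2.0, 3.0)
y = true_slope * X + rng.normal(0.0, 0.1, size=n)

# Static model that was correct before the change.
static_slope = 2.0
squared_errors = (y - static_slope * X) ** 2

flagged = first_drift_block(squared_errors)
print("first block flagged:", flagged)
```

The same per-block errors also serve as the baseline against which any intervention can be compared.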

2. Periodically Re-Fit

A good first-level intervention is to periodically update your static model with more recent historical data.

For example, perhaps you can update the model each month or each year with the data collected from the prior period.

This may also involve back-testing the model in order to select a suitable amount of historical data to include when re-fitting the static model.

In some cases, it may be appropriate to only include a small portion of the most recent historical data to best capture the new relationships between inputs and outputs (e.g. the use of a sliding window).
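The sketch below shows one way this could look: back-test several candidate window sizes on a held-out slice of the most recent data, then re-fit on the best window. The stream (400 points with slope 2, then 150 points with slope 3), the candidate sizes, and the helper names are assumptions for illustration only.

```python
import numpy as np

def fit_slope(X, y):
    """Least-squares slope for a line through the origin."""
    return float((X @ y) / (X @ X))

def mse(slope, X, y):
    return float(np.mean((y - slope * X) ** 2))

def backtest_window(X, y, window, holdout):
    """Fit on the `window` points just before the hold-out, score on the hold-out."""
    X_train = X[-(holdout + window):-holdout]
    y_train = y[-(holdout + window):-holdout]
    slope = fit_slope(X_train, y_train)
    return mse(slope, X[-holdout:], y[-holdout:])

# Synthetic history: 400 points with slope 2.0, then 150 points with slope 3.0.
rng = np.random.default_rng(2)
true_slope = np.concatenate([np.full(400, 2.0), np.full(150, 3.0)])
X = rng.uniform(-1.0, 1.0, size=550)
y = true_slope * X + rng.normal(0.0, 0.1, size=550)

# Back-test each candidate amount of history on the 50 most recent points.
candidates = [50, 100, 200, 500]
scores = {w: backtest_window(X, y, w, holdout=50) for w in candidates}
best_window = min(scores, key=scores.get)

# Re-fit on the chosen sliding window versus on all available history.
refit_slope = fit_slope(X[-best_window:], y[-best_window:])
all_history_slope = fit_slope(X, y)
print(best_window, refit_slope, all_history_slope)
```

In this setup the shorter windows should win the back-test because they contain only data from the new relationship, whereas fitting on all history blends the old and new slopes.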

3. Periodically Update

Some machine learning models can be updated.

This is more efficient than the previous approach (periodically re-fit): instead of discarding the static model completely, the existing state is used as the starting point for a fit process that updates the model using a sample of the most recent historical data.

For example, this approach is suitable for most machine learning algorithms that use weights or coefficients such as regression algorithms and neural networks.
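Here is a minimal sketch of the idea using a linear model trained by gradient descent. The existing coefficients are used as the starting point for a few extra passes over recent data, and the result is compared with starting from zeros for the same number of passes. The `update_weights` helper, the learning rate, and the synthetic data are assumptions made for this example.

```python
import numpy as np

def update_weights(weights, X, y, learning_rate=0.5, epochs=5):
    """Continue gradient descent on mean squared error from the given weights."""
    w = np.array(weights, dtype=float)
    for _ in range(epochs):
        gradient = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= learning_rate * gradient
    return w

rng = np.random.default_rng(3)

def make_data(n, true_weights):
    """Hypothetical two-input linear problem with Gaussian noise."""
    X = rng.uniform(-1.0, 1.0, size=(n, 2))
    y = X @ true_weights + rng.normal(0.0, 0.1, size=n)
    return X, y

old_true = np.array([2.0, -1.0])  # relationship in the historical data
new_true = np.array([2.5, -1.0])  # relationship after drift

X_old, y_old = make_data(500, old_true)
X_new, y_new = make_data(200, new_true)

# The existing model: a full least-squares fit on the historical data.
fitted_old, *_ = np.linalg.lstsq(X_old, y_old, rcond=None)

# Update from the existing state versus starting from scratch.
warm = update_weights(fitted_old, X_new, y_new)
cold = update_weights(np.zeros(2), X_new, y_new)

warm_error = float(np.linalg.norm(warm - new_true))
cold_error = float(np.linalg.norm(cold - new_true))
print(warm_error, cold_error)
```

With the same small training budget, the updated model should end up closer to the new relationship than the model started from scratch, which is the efficiency being described.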

4. Weight Data

Some algorithms allow you to weigh the importance of input data.

In this case, you can use a weighting that is inversely proportional to the age of the data such that more attention is paid to the most recent data (higher weight) and less attention is paid to the least recent data (smaller weight).
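As a small illustration, the sketch below fits a weighted least-squares slope where each observation's weight is 1 / (1 + age), with the newest observation having age 0. The gradually drifting stream (slope moving from 2 to 3) and the helper names are assumptions for this example; other schemes, such as linear or exponential decay, could be swapped in.

```python
import numpy as np

def inverse_age_weights(n):
    """Weights inversely proportional to age; the last row is the newest (age 0)."""
    ages = np.arange(n - 1, -1, -1)
    return 1.0 / (1.0 + ages)

def weighted_slope(X, y, weights):
    """Weighted least-squares slope for a line through the origin."""
    return float(np.sum(weights * X * y) / np.sum(weights * X * X))

# Synthetic stream with gradual drift: the slope moves from 2.0 to 3.0.
rng = np.random.default_rng(4)
n = 500
true_slope = np.linspace(2.0, 3.0, n)
X = rng.uniform(-1.0, 1.0, size=n)
y = true_slope * X + rng.normal(0.0, 0.1, size=n)

weights = inverse_age_weights(n)
unweighted = weighted_slope(X, y, np.ones(n))
weighted = weighted_slope(X, y, weights)
print(unweighted, weighted)
```

The unweighted fit averages over the whole history, while the weighted fit should sit closer to the most recent slope. Note that this particular scheme concentrates most of the weight on a small number of recent points, so the estimate is noisier.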

5. Learn The Change

An ensemble approach can be used where the static model is left untouched, but a new model learns to correct the predictions from the static model based on the relationships in more recent data.

This may be thought of as a boosting type ensemble (in spirit only) where subsequent models correct the predictions from prior models. The key difference here is that subsequent models are fit on different and more recent data, as opposed to a weighted form of the same dataset, as in the case of AdaBoost and gradient boosting.
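One simple way to sketch this is to freeze the static model and fit a second model to its residuals on recent data, then add the two predictions together. The example below assumes a hypothetical drift from y = 2x to y = 2.5x + 0.3 and uses straight-line fits for both models; it is an illustration of the structure, not a reference implementation.

```python
import numpy as np

rng = np.random.default_rng(5)

# Static model: fit once on historical data where y = 2x, then frozen.
X_old = rng.uniform(-1.0, 1.0, size=500)
y_old = 2.0 * X_old + rng.normal(0.0, 0.1, size=500)
static_coefs = np.polyfit(X_old, y_old, deg=1)  # [slope, intercept]

def drifted_data(n):
    """Hypothetical recent data: the mapping has drifted to y = 2.5x + 0.3."""
    X = rng.uniform(-1.0, 1.0, size=n)
    y = 2.5 * X + 0.3 + rng.normal(0.0, 0.1, size=n)
    return X, y

# Correction model: fit on the static model's residuals over recent data.
X_recent, y_recent = drifted_data(200)
residuals = y_recent - np.polyval(static_coefs, X_recent)
correction_coefs = np.polyfit(X_recent, residuals, deg=1)

def predict(x):
    """Static prediction plus the learned correction."""
    return np.polyval(static_coefs, x) + np.polyval(correction_coefs, x)

# Compare on fresh data drawn from the drifted relationship.
X_test, y_test = drifted_data(200)
static_error = float(np.mean((y_test - np.polyval(static_coefs, X_test)) ** 2))
corrected_error = float(np.mean((y_test - predict(X_test)) ** 2))
print(static_error, corrected_error)
```

The static model is never modified; only the small correction model needs to be re-fit as new data arrives.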

6. Detect and Choose Model

For some problem domains it may be possible to design systems to detect changes and choose a specific and different model to make predictions.

This may be appropriate for domains that expect abrupt changes that may have occurred in the past and can be checked for in the future. It also assumes that it is possible to develop skillful models to handle each of the detectable changes to the data.

For example, the abrupt change may be a specific observation or observations in a range, or the change in the distribution of one or more input variables.
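A minimal sketch of this idea follows, assuming two hypothetical regimes that differ in the distribution of a single input variable. A window of recent inputs is compared with a stored reference sample for each regime using a two-sample Kolmogorov-Smirnov statistic (implemented by hand here), and the model for the closest regime is used. The regime names, reference samples, and per-regime slopes are invented for illustration.

```python
import numpy as np

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical CDFs of a and b."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return float(np.max(np.abs(cdf_a - cdf_b)))

rng = np.random.default_rng(6)

# Each hypothetical regime stores a reference sample of the input variable
# and the slope of the model developed for that regime.
regimes = {
    "normal": {"reference": rng.normal(0.0, 1.0, size=500), "slope": 2.0},
    "shifted": {"reference": rng.normal(3.0, 1.0, size=500), "slope": -1.0},
}

def choose_regime(window):
    """Pick the regime whose reference inputs look most like the window."""
    return min(regimes, key=lambda name: ks_statistic(window, regimes[name]["reference"]))

def predict(window):
    """Detect the regime for a window of inputs, then apply that regime's model."""
    window = np.asarray(window, dtype=float)
    regime = choose_regime(window)
    return regime, regimes[regime]["slope"] * window

regime_a, _ = predict(rng.normal(0.0, 1.0, size=100))
regime_b, _ = predict(rng.normal(3.0, 1.0, size=100))
print(regime_a, regime_b)
```

This only works when the change shows up in something you can observe at prediction time, such as the inputs, and when a skillful model exists for each regime.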

7. Data Preparation

In some domains, such as time series problems, the data may be expected to change over time.

In these types of problems, it is common to prepare the data in a way that removes systematic changes over time, such as trends and seasonality, for example by differencing.

This is so common that it is built into classical linear methods like the ARIMA model.
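Differencing is simple enough to sketch directly. The example below assumes a hypothetical series with a linear trend of 0.5 per step and a seasonal cycle of length 12; a lag-1 difference removes the trend, and a lag-12 difference removes the seasonal cycle (leaving only the constant trend contribution over 12 steps).

```python
import numpy as np

def difference(series, lag=1):
    """Subtract the value observed `lag` steps earlier from each value."""
    series = np.asarray(series, dtype=float)
    return series[lag:] - series[:-lag]

def invert_difference(earlier_values, differences):
    """Undo differencing by adding back the values from `lag` steps earlier."""
    return np.asarray(earlier_values, dtype=float) + differences

# Hypothetical series: linear trend plus a seasonal cycle of length 12.
t = np.arange(48)
trend = 0.5 * t
seasonal = 10.0 * np.sin(2.0 * np.pi * t / 12)
series = trend + seasonal

detrended = difference(trend, lag=1)         # constant step of the trend
deseasonalized = difference(series, lag=12)  # seasonal cycle cancels out

# Differencing is reversible, so forecasts can be mapped back to the original scale.
restored = invert_difference(series[:-12], deseasonalized)
```

A model can then be fit on the differenced series, with its predictions converted back to the original scale by inverting the difference.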

Typically, we do not consider systematic change to the data as a problem of concept drift because it can be dealt with directly. Rather, these examples may be a useful way of thinking about your problem and may help you anticipate change and prepare data in a specific way using standardization, scaling, projections, and more to mitigate or at least reduce the effects of change to input variables in the future.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- Learning in the Presence of Concept Drift and Hidden Contexts, 1996.

- The problem of concept drift: definitions and related work, 2004.

- Concept Drift Detection for Streaming Data, 2015.

- Learning under Concept Drift: an Overview, 2010.

- An overview of concept drift applications, 2016.

- What Is Concept Drift and How to Measure It?, 2010.

- Understanding Concept Drift, 2017.


Summary

In this post, you discovered the problem of concept drift in changing data for applied machine learning.

Specifically, you learned:

- The problem of data changing over time.

- What is concept drift and how it is defined.

- How to handle concept drift in your own predictive modeling problems.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thanks a lot for your blog, I always read it with a lot of attention. A related question for which I did not find an answer: how should we adapt a classification model when new outputs are required? A typical case is classification for face recognition, where new people (so new output classes) are added regularly. Can this be done without regenerating a model each time?

Great question!

You may need to train a new model, but use the existing model as a starting point.

Hey Jason, could you please let me know how to start my model using an existing model as a starting point?

It is called transfer learning, see these tutorials:

https://machinelearningmastery.com/?s=transfer+learning&post_type=post&submit=Search

Or model updating:

https://machinelearningmastery.com/update-neural-network-models-with-more-data/

Hi Jason,

Great post, as always!

Can you post some literature on Point 5: Learn The Change? The way I think of it is, you have a global baseline model which acts like a static model. Another model which is trained on more subsequent data, which essentially captures the recent trend.

Thanks

Sorry, I don’t have refs.

Hey, thanks for your blog, it was very interesting! How would you refer to the appearance of novel classes (which is usually addressed with novelty detection algorithms)? Is it also concept drift from your perspective?

Thanks

If you can anticipate them, you can leave room in the encoding.

If you can afford to refit, then refit a model once the problem has changed.

If you cannot, perhaps fit a new model that chooses to use the old model for the old classes and a new model for the new classes with a one-vs-rest type structure. This sounds terrible actually…

Hi, I am looking for a dataset for a regression problem that contains drift.

Do you have an idea of where I can find such a dataset, or how to generate one for each of the three types (gradual, abrupt, etc.)?

Thanks for your job and your help !

No sorry.

@sathouel you might consider financial data from around 2001 and 2008 where the global economy (and esp the US economy) had abrupt changes as well as some unusual leading indicator fluctuations leading up to the changes (2008 recession comes to mind) that break most traditional financial forecasting models.

Why?

Stock market data has a hidden context, “market sentiment”, which depends on many unknown parameters.

Any code example covering the “How to Address Concept Drift?” section would be great.

I have a little on updating LSTMs here that might be of interest:

https://machinelearningmastery.com/update-lstm-networks-training-time-series-forecasting/

Hi! Thanks for this post. I would love to find “algorithms that allow you to weigh the importance of input data” but have difficulties finding some. I found this paper ( https://research.uni-sofia.bg/bitstream/10506/57/1/ECAI2000_WSTR.pdf) but the implementation is not available, do you have examples of these algorithms and especially with some code available? Thanks a lot

A simple linear or exponential weighting that pays more attention to more recent data might be a good place to start.

Hi,

Great article!

I wanted to know, though, if non-parametric approaches (like clustering) help prevent the problem of Concept Drift from arising in the first place?

How so?

By implicitly adjusting cluster compositions based on the (new) observed patterns in the users’ behaviours.

I’m not claiming that it does. Just wanted to discuss if it’s a possibility.

Hi,

I am working on a domain that belongs to concept drift problem which is spam detection in twitter where the problem that is still yet to be solved properly is ‘spam drift’, Do you have any insights or ideas on how to proceed or any algorithm that might be suitable.

Appreciate your help

Measure the drift first, then propose and test methods to address it.

How do you measure drift? Can you please elaborate with an example?

A change in distribution would be a good start. Perhaps cross-entropy or KL divergence.

I’m trying to quantify the expense of concept drift. Can you please place a value in terms of time saved, processing power saved, accuracy improvement of the elimination of this problem?

I don’t follow sorry, what do you mean exactly?

Hi,

How can we justify Concept Drift in image classification?

What do you mean justify? Do you mean detect?

A good sign of drift is a decay in performance over time with new data.

Hi,

What is model tracking and how can it be done before deployment?

Thank you.

I don’t know, what is model tracking?

Do you mean model evaluation?

https://machinelearningmastery.com/faq/single-faq/how-do-i-evaluate-a-machine-learning-algorithm

Thanks for the lovely article. I am working on historical data from Nasdaq for stock prediction using ensemble methods. Does addressing concept drift improve model accuracy if I apply it? Or is it practical to address concept drift in stock prediction?

Thanks for your kind cooperation

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Thanks a lot for your kind reply. However, I have the following questions:

1- How many ensemble algorithms are currently available? Or which ones are considered ensembles?

2- Is it easy to modify one of the ensembles to achieve better accuracy?

thanks in advance

There are many ensemble algorithms, perhaps more than you can count.

There are maybe 3 that you should focus on to start with, e.g. stacking, bagging, boosting.

All models must be tuned to a specific dataset to improve model skill.

Can you please give simple real life example of concept drift with small dataset?

Sure: The level in a time series changes each day.

Sir, I need a mathematical example that shows a change in the probability distribution, i.e. P(Y|X), and so demonstrates concept drift. Can you please demonstrate concept drift on a small and simple dataset?

Thanks for the suggestion.

Dear Jason, thanks a lot for your extremely useful article. Currently I am working on an employee churn prediction model. Since our churn is limited to a maximum of 200 people a year, we have decided to use 5 years of churn data. In order to train our model, we need to include retained employees in the dataset as well.

Now there is a disagreement between me and my colleague. I think we need to use the retained employees of each year and add all their records, so someone who has stayed with the company for the last 5 years will have 5 different records with 5 different values (different age, tenure, performance score and so on). My colleague thinks counting 1 retained person 5 times in the dataset is wrong and that only their last status should be considered.

May I use this concept to tell him that my idea is correct?

You’re welcome.

I don’t know off the cuff sorry, you will need to dig into your data to discover what is true.

Dear Jason Brownlee ,

Your blog gives good information about drift detection. I have started my Ph.D. research and have chosen concept drift detection using ML as my problem. I need help choosing the methodology for this problem. Can you suggest a new methodology?

Defining a methodology sounds like something you should do as part of your research project.

Can anyone help me with a novel methodology for concept drift detection using ML?

Yes, but it would probably invalidate the idea of doing the phd in the first place. I recommend checking the literature and discussing with your research advisor.

Hi Mahshad,

In my view you are correct, each year priorities are different so good approach is use yearly data instead of complete 5 years data.

Hello, what is the difference between concept drift and noise in a dataset?

Noise is random, drift is in a specific direction, e.g. concepts like classes and decision boundaries change.

Drift could be a bias, it could be a type of noise.

Can the concept drift happen in the stream data of students’ performance? And how?

Sure, any change in data over time is an example of drift.

The specific cause for a domain may depend on many factors, perhaps discuss with a domain expert.

Hi Jason,

Thanks a lot for all of your blogs. I find them very interesting and helpful.

I have built a model which predicts a dependent variable based on a number of independent variables. In practice, new combinations of independent variables are supplied and decisions are based on the resulting prediction. I have received a lot of pushback from supervisor about what would happen if the new combinations are far removed from the population used in the training dataset. Naturally a prediction will still be made, but the validity of it is dubious. My question is: how can one assess the similarity of out-of-sample independent variables to those used during training? If this is possible, I could caveat predictions with warnings when this example is far removed from the distribution in the training dataset.

Another idea is to test where each new variable fits within the distribution of the same variable during training. I have a large dataset and this is doable. However, there is pushback about only using univariate distributions, and about the case when a combination is very unlikely or impossible. Any thoughts on these two methods? Are these methods named in the literature?

Thanks in advance and apologies if this does not make sense.

Regards,

Aidan

I think confidence can be provided with cross validation in your case. Please see this post: https://machinelearningmastery.com/training-validation-test-split-and-cross-validation-done-right/

At the end, you compare test set score with cross validation score. In this way, you can tell.

Thanks Adrian. I can certainly see the benefits of cross validation and it makes sense in general cases, but if the new combination of independent variables is far removed from the population this would not help. For instance, negative interest rates are a popular theme in economics and if these were supplied to my model, it would result in many independent variables changing their distribution from what was trained on.

I know this is a bad example, as the user should be aware of such a large change, but what if a user unknowingly supplies a combination of variables which is far removed from the training population? How would we alert them that the model may not be accurate? Is there a technique for such things, e.g. measuring the similarity of out-of-sample independent variables to those used during training?

The model will not know it is inaccurate because it will not see the correct prediction. But from your description, it seems to me that you’re looking for outlier detection. Try thinking along those lines.

Thanks for the article Adrian, we have different tests which can measure the data drift like population stability index(PSI), chi-square for categorical, k-s for continuous, but how can we quantify that data in the training set drifted by a certain value over the test set?

Do you think the sample mean and variance within test and training set can do the job?

Greeting Dr. Jason,

How to inject concept drift into the dataset for the purpose of a study?

It is best not to inject drift, but to collect data at different times and see how a model trained on one dataset performs on another. For example, in NLP problems, if you collect text from the 2000s for training and apply the model to text from the 1900s for testing, you can tell how the drift may affect the model. If you really want to inject drift, you need a model of how the data drifts before you can inject it.