Transduction or transductive learning are terms you may come across in applied machine learning.

The term appears in some applications of recurrent neural networks to sequence prediction problems, such as those in natural language processing.

In this post, you will discover what transduction is in machine learning.

After reading this post, you will know:

- The definition of transduction generally and in some specific fields of study.

- What transductive learning is in machine learning.

- What transduction means when talking about sequence prediction problems.


Let’s get started.

Overview

This tutorial is divided into 4 parts; they are:

- What Is Transduction?

- Transductive Learning

- Transduction in Linguistics

- Transduction in Sequence Prediction

What Is Transduction?

Let’s start with some basic dictionary definitions.

To transduce means to convert something into another form.

transduce: to convert (something, such as energy or a message) into another form; for example, “essentially sense organs transduce physical energy into a nervous signal”

— Merriam-Webster Dictionary (online), 2017

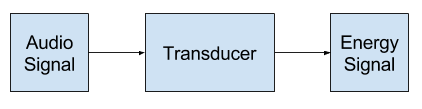

It is a popular term in electronics and signal processing, where a “transducer” is a general name for components or modules that convert one form of energy into another, such as sound into an electrical signal or vice versa.

All signal processing begins with an input transducer. The input transducer takes the input signal and converts it to an electrical signal. In signal-processing applications, the transducer can take many forms. A common example of an input transducer is a microphone.

— Digital Signal Processing Demystified, 1997

In biology, specifically genetics, transduction refers to the transfer of genetic material from one microorganism to another, typically carried by a virus.

transduction: the action or process of transducing; especially : the transfer of genetic material from one microorganism to another by a viral agent (such as a bacteriophage)

— Merriam-Webster Dictionary (online), 2017

So, generally, we can see that transduction is about converting a signal into another form.

The signal processing description is the most salient: sound waves are turned into electrical energy for some use within a system. Each sound is represented by an electrical signature, at some chosen level of sampling.

Example of Signal Processing Transducer

Transductive Learning

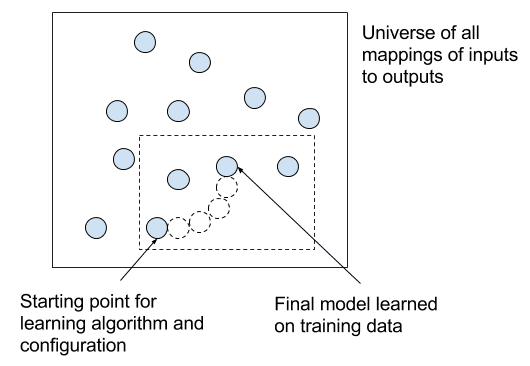

Transduction, or transductive learning, is used in the field of statistical learning theory to refer to predicting outputs for specific examples directly from other specific examples from a domain.

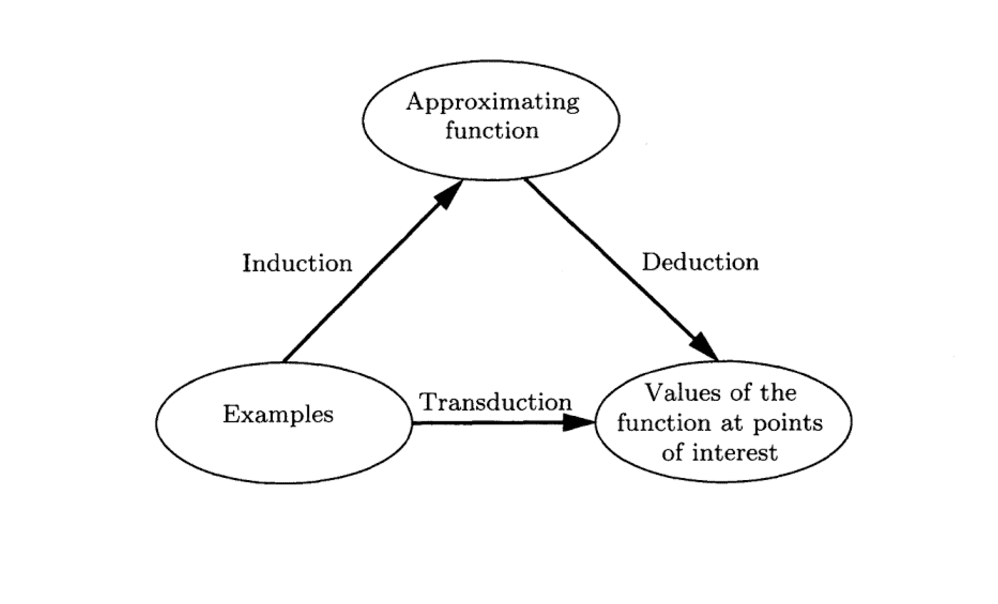

It is contrasted with other types of learning, such as inductive learning and deductive learning.

Induction, deriving the function from the given data. Deduction, deriving the values of the given function for points of interest. Transduction, deriving the values of the unknown function for points of interest from the given data.

— Page 169, The Nature of Statistical Learning Theory, 1995
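The three kinds of inference in the quote above can be sketched on a toy regression problem. This is only an illustration under stated assumptions: the data, the least-squares line used for induction, and the nearest-point average used for transduction are all choices made here, not methods taken from the book.

```python
# Toy data sampled from an unknown function (here it happens to be y = 2x + 1).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def induce_line(xs, ys):
    """Induction: derive a function (a least-squares line) from the given data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def deduce(f, points):
    """Deduction: evaluate a given function at the points of interest."""
    return [f(p) for p in points]


def transduce(xs, ys, points, k=2):
    """Transduction: estimate values at the points of interest directly
    from the data, here by averaging the k nearest observed values.
    No global function is ever built."""
    predictions = []
    for p in points:
        nearest = sorted(zip(xs, ys), key=lambda pair: abs(pair[0] - p))[:k]
        predictions.append(sum(y for _, y in nearest) / k)
    return predictions


points = [1.5, 2.5]
f = induce_line(xs, ys)              # data -> function
via_function = deduce(f, points)     # function -> values
direct = transduce(xs, ys, points)   # data -> values, skipping the function

print(via_function)
print(direct)
```

On this clean, linear data both routes give the same estimates. The difference is in what is computed along the way: the first route commits to a general function, while the second only answers the specific questions asked.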

Relationship between Induction, Deduction and Transduction

Taken from The Nature of Statistical Learning Theory.

It is an interesting framing of supervised learning where the classical problem of “approximating a mapping function from data and using it to make a prediction” is seen as more difficult than is required. Instead, specific predictions are made directly from the real samples from the domain. No function approximation is required.

The model of estimating the value of a function at a given point of interest describes a new concept of inference: moving from the particular to the particular. We call this type of inference transductive inference. Note that this concept of inference appears when one would like to get the best result from a restricted amount of information.

— Page 169, The Nature of Statistical Learning Theory, 1995

A classical example of a transductive algorithm is the k-Nearest Neighbors algorithm that does not model the training data, but instead uses it directly each time a prediction is required.

Transduction is naturally related to a set of algorithms known as instance-based, or case-based learning. Perhaps, the most well-known algorithm in this class is k-nearest neighbour algorithm.

— Learning by Transduction, 1998
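As a rough illustration of this instance-based idea, here is a minimal k-nearest neighbors classifier written from scratch. The training points, labels, and the `knn_predict` helper are hypothetical and exist only for this sketch; the point is that there is no training step, and the stored examples are consulted each time a prediction is needed.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Predict a label for `query` by majority vote among the k closest
    training examples. `train` is a list of ((x, y), label) pairs."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    top_labels = [label for _, label in by_distance[:k]]
    return Counter(top_labels).most_common(1)[0][0]


# Hypothetical training data: two well-separated groups of 2D points.
train = [
    ((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.9, 1.1), "a"),
    ((4.0, 4.0), "b"), ((4.2, 3.9), "b"), ((3.8, 4.1), "b"),
]

print(knn_predict(train, (1.1, 1.0)))  # prints "a"
print(knn_predict(train, (4.1, 4.0)))  # prints "b"
```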


Transduction in Linguistics

Classically, transduction has been used when talking about natural language, such as in the field of linguistics.

For example, there is the notion of a “transduction grammar” that refers to a set of rules for transforming examples of one language into another.

A transduction grammar describes a structurally correlated pair of languages. It generates sentence pairs, rather than sentences. The language-1 sentence is (intended to be) a translation of the language-2 sentence.

— Page 460, Handbook of Natural Language Processing, 2000.

There is also the concept of a “finite-state transducer” (FST) from the theory of computation that is invoked when talking about translation tasks for mapping one set of symbols to another. Importantly, each input produces one output.

A finite state transducer consists of a number of states. When transitioning between states an input symbol is consumed and an output symbol is emitted.

— Page 294, Statistical Machine Translation, 2010.
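To make the state, input symbol, and output symbol mechanics concrete, here is a small sketch of a finite-state transducer. The machine itself is an assumption chosen for illustration (it is not from the cited book): it reads a string of bits and emits the running parity of the 1s seen so far, consuming one input symbol and emitting one output symbol per transition.

```python
# Transition table: (state, input symbol) -> (next state, output symbol).
# The states "even" and "odd" track how many 1s have been read so far.
TRANSITIONS = {
    ("even", "0"): ("even", "0"),
    ("even", "1"): ("odd", "1"),
    ("odd", "0"): ("odd", "1"),
    ("odd", "1"): ("even", "0"),
}


def run_fst(symbols, start_state="even"):
    """Consume one input symbol per step and emit one output symbol per step."""
    state = start_state
    emitted = []
    for symbol in symbols:
        state, output = TRANSITIONS[(state, symbol)]
        emitted.append(output)
    return "".join(emitted)


print(run_fst("1101"))  # prints "1001"
```

The output string always has the same length as the input string, which is the one-output-per-input property noted above.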

This use of transduction in the theory of computation and in classical machine translation colors the usage of the term when talking about modern sequence prediction with recurrent neural networks on natural language processing tasks.

Transduction in Sequence Prediction

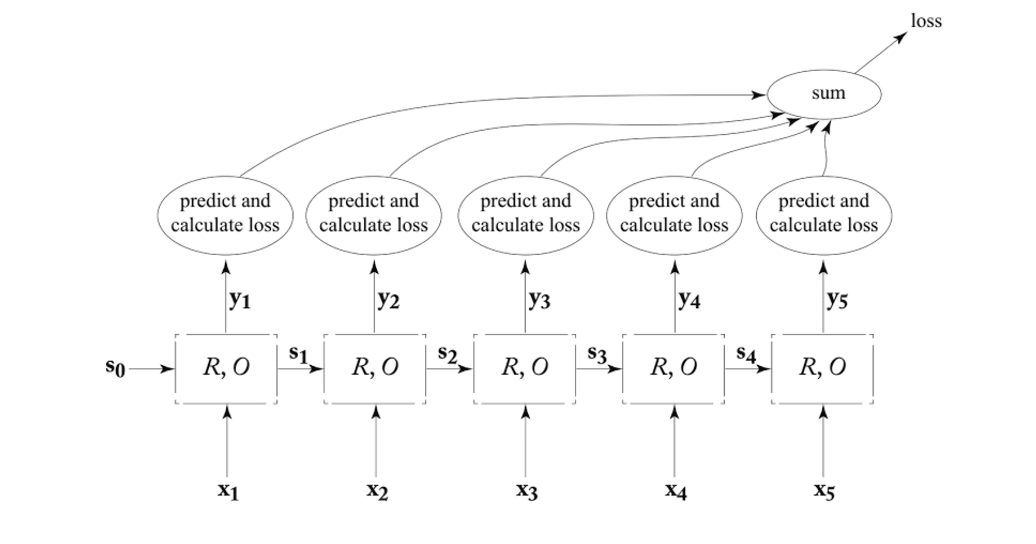

In his textbook on neural networks for language processing, Yoav Goldberg defines a transducer as a specific network model for NLP tasks.

A transducer is narrowly defined as a model that outputs one time step for each input time step provided. This maps to the linguistic usage, specifically with finite-state transducers.

Another option is to treat the RNN as a transducer, producing an output for each input it reads in.

— Page 168, Neural Network Methods in Natural Language Processing, 2017.
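The "one output per input time step" idea can be sketched with a tiny recurrent network. This is a toy under loud assumptions: a single scalar hidden unit and hand-picked weights (`w_in`, `w_rec`, `w_out`) stand in for a trained model, and none of it is taken from Goldberg's book.

```python
import math


def rnn_transduce(inputs, w_in=0.5, w_rec=0.8, w_out=1.0):
    """Run a one-unit RNN over `inputs` and emit an output at every time step.
    The weights are arbitrary illustrative values, not learned parameters."""
    hidden = 0.0
    outputs = []
    for x in inputs:
        # Update the hidden state from the current input and the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden)
        # Transducer usage: read out a prediction at this step, not only at the end.
        outputs.append(w_out * hidden)
    return outputs


inputs = [1.0, 0.0, -1.0, 2.0]
outputs = rnn_transduce(inputs)
print(len(inputs), len(outputs))  # prints "4 4"
```

In a sequence tagging setting, each of these per-step outputs would be turned into a tag prediction for the corresponding input token.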

He proposes this type of model for sequence tagging as well as language modeling. He goes on to indicate that conditioned-generation, such as with the Encoder-Decoder architecture, may be considered a special case of the RNN transducer.

This last point is surprising given that the Decoder in the Encoder-Decoder model architecture permits a varied number of outputs for a given input sequence, breaking “one output per input” in the definition.

Transducer RNN Training Graph.

Taken from “Neural Network Methods in Natural Language Processing.”

More generally, transduction is used in NLP sequence prediction tasks, specifically translation. The definitions seem more relaxed than the strict one-output-per-input of Goldberg and the FST.

For example, Ed Grefenstette, et al. describe transduction as mapping an input string to an output string.

Many natural language processing (NLP) tasks can be viewed as transduction problems, that is learning to convert one string into another. Machine translation is a prototypical example of transduction and recent results indicate that Deep RNNs have the ability to encode long source strings and produce coherent translations

— Learning to Transduce with Unbounded Memory, 2015.

They go on to provide a list of some specific NLP tasks that help to make this broad definition concrete.

String transduction is central to many applications in NLP, from name transliteration and spelling correction, to inflectional morphology and machine translation

Alex Graves also uses transduction as a synonym for transformation and usefully also provides a list of example NLP tasks that meet the definition.

Many machine learning tasks can be expressed as the transformation—or transduction—of input sequences into output sequences: speech recognition, machine translation, protein secondary structure prediction and text-to-speech to name but a few.

— Sequence Transduction with Recurrent Neural Networks, 2012.

To summarize, we can restate a list of transductive natural language processing tasks as follows:

- Transliteration, producing words in a target form given examples in a source form.

- Spelling Correction, producing correct word spelling given incorrect word spelling.

- Inflectional Morphology, producing inflected word forms given a source form and context.

- Machine Translation, producing sequences of words in a target language given examples in a source language.

- Speech Recognition, producing sequences of text given sequences of audio.

- Protein Secondary Structure Prediction, predicting local structural elements (such as helices and sheets) given input sequences of amino acids (not NLP).

- Text-to-Speech, or speech synthesis, producing audio given text sequences.

Finally, in addition to the notion of transduction referring to broad classes of NLP problems and RNN sequence prediction models, some new methods are explicitly being named as such. Navdeep Jaitly, et al. refer to their new RNN sequence-to-sequence prediction method as a “Neural Transducer”, although technically any RNN used for sequence-to-sequence prediction would also qualify as one.

we present a Neural Transducer, a more general class of sequence-to-sequence learning models. Neural Transducer can produce chunks of outputs (possibly of zero length) as blocks of inputs arrive – thus satisfying the condition of being “online”. The model generates outputs for each block by using a transducer RNN that implements a sequence-to-sequence model.

— A Neural Transducer, 2016
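The block-wise, online behavior described in the quote can be pictured with a toy interface. This sketch is not the Neural Transducer itself: `toy_block_model` is a hypothetical hand-written rule standing in for the learned transducer RNN, and unlike the real model it does not condition on earlier blocks or earlier outputs. It only shows the shape of the computation, where each arriving block of inputs yields a chunk of outputs that may be empty.

```python
def split_into_blocks(sequence, block_size):
    """Yield consecutive blocks of the input as they would 'arrive'."""
    for start in range(0, len(sequence), block_size):
        yield sequence[start:start + block_size]


def toy_block_model(block):
    """Hypothetical stand-in for the learned model: emit an uppercase copy
    of each vowel in the block. A block with no vowels emits nothing."""
    return [ch.upper() for ch in block if ch in "aeiou"]


def online_transduce(sequence, block_size=3):
    """Emit a (block, outputs) pair as soon as each block has been read."""
    for block in split_into_blocks(sequence, block_size):
        yield block, toy_block_model(block)


results = list(online_transduce("transducer"))
for block, outputs in results:
    print(block, outputs)
```

With a block size of 3, the second block ("nsd") produces an empty chunk, while the third ("uce") produces two outputs, so the number of outputs per block is not fixed.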

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Definitions

- Merriam-Webster Dictionary definition of transduce

- Digital Signal Processing Demystified, 1997

- Transduction in Genetics on Wikipedia

Learning Theory

- The Nature of Statistical Learning Theory, 1995

- Learning by Transduction, 1998

- Transduction (machine learning) on Wikipedia

Linguistics

- Handbook of Natural Language Processing, 2000.

- Finite-state transducer on Wikipedia

- Statistical Machine Translation, 2010.

Sequence Prediction

- Neural Network Methods in Natural Language Processing, 2017.

- Learning to Transduce with Unbounded Memory, 2015.

- Sequence Transduction with Recurrent Neural Networks, 2012.

- A Neural Transducer, 2016

Summary

In this post, you discovered transduction in applied machine learning.

Specifically, you learned:

- The definition of transduction generally and in some specific fields of study.

- What transductive learning is in machine learning.

- What transduction means when talking about sequence prediction problems.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

I have a set of points on the x,y plane and I find they correspond to a sine wave with a certain period, phase and amplitude: Induction.

I have a sine wave with a certain period, phase and amplitude and I’m interested to know the values of y for certain values of x: Deduction.

I have a set of points on the x,y plane and I’m interested to know the values of y for certain values of x: Interpolation.

Is there anything in transduction that can’t be called interpolation?

In the signal processing sense (and natural language processing), transduction is best thought of as a transform.

In the statistical machine learning sense, it is a type of just-in-time instance based prediction. Interpolation sounds fair.

Good, explained very well.

Thanks.

Hello sir

I would like to know how to implement seq2seq in Keras with Python. Can you provide some resources, books, or examples for that?

best wishes

See this tutorial:

https://machinelearningmastery.com/encoder-decoder-long-short-term-memory-networks/

Nice summary, thank you.

I’ve noticed that many transduction explanations make the point that it is about avoiding learning a function, and instead making predictions directly for test cases. I get the idea but I find it a bit imprecise.

Take, for example, k-means. Given a set of training cases and k-means, there *is* a function mapping each test case to its cluster, namely the function defined by using those training examples with k-means. In other words, k-means itself is a function.

So it seems to me what is really meant by “not learning a function” is actually “not performing much a priori processing, and not representing a function in a compact, abstract form, and instead representing it in terms of training examples.”

This reminds me of the misnomer “non-parametric probabilistic models”, which are actually parametric but have an unbounded rather than fixed number of parameters, typically expressed in terms of training data as well.

Yes, I completely agree.

You’re probably looking for “Abduction,” which already means what this post says “transduction” means

Can you elaborate please David?

Thanks a lot for this article. I get lost in all the definitions of this word.

So, can we say transduction in Sequence prediction doesn’t apply the transductive learning types of prediction? Or are they both related?

I think you can consider that as related. After all, transduction is a concept of what you want to do. Implementation can be different in a specific problem.

Helpful explanation of the word transduction which is the first word I come across as I set out to grok the “Attention is all you need” Transformer paper. Thank you.

You are very welcome Alexander! We appreciate the feedback and support.