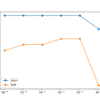

Dropout regularization is a computationally cheap way to regularize a deep neural network. Dropout works by probabilistically removing, or “dropping out,” inputs to a layer, which may be input variables in the data sample or activations from a previous layer. It has the effect of simulating a large number of networks with very different network […]