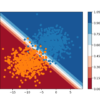

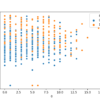

Classification algorithms learn how to assign class labels to examples, although their decisions can appear opaque. A popular diagnostic for understanding the decisions made by a classification algorithm is the decision surface. This is a plot that shows the class label a fitted model predicts at each point of a grid spanning the input feature space. A decision […]
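To make the idea concrete, here is a minimal sketch of a decision surface using only the Python standard library. The dataset, the nearest-centroid classifier, and the function names (`fit_centroids`, `predict`, `decision_surface`) are all illustrative assumptions, not anything from a specific library. The model stands in for whatever classifier you have fit, and the text rendering stands in for a real plot.

```python
# Toy 2-D dataset: two features per example, two classes (values are made up).
X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.2), (4.0, 4.5), (4.5, 3.8), (5.0, 5.0)]
y = [0, 0, 0, 1, 1, 1]


def fit_centroids(X, y):
    """Fit a nearest-centroid classifier: store the mean point of each class."""
    sums = {}
    for (x1, x2), label in zip(X, y):
        s = sums.setdefault(label, [0.0, 0.0, 0])
        s[0] += x1
        s[1] += x2
        s[2] += 1
    return {label: (s[0] / s[2], s[1] / s[2]) for label, s in sums.items()}


def predict(centroids, point):
    """Return the label whose centroid is closest to the point."""
    def sq_dist(c):
        return (c[0] - point[0]) ** 2 + (c[1] - point[1]) ** 2
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def decision_surface(centroids, x1_range, x2_range, steps):
    """Predict a label at every point of a steps x steps grid."""
    (x1_min, x1_max), (x2_min, x2_max) = x1_range, x2_range
    grid = []
    for i in range(steps):
        # Row 0 is the top of the picture, so it holds the largest x2 value.
        x2 = x2_max - i * (x2_max - x2_min) / (steps - 1)
        row = []
        for j in range(steps):
            x1 = x1_min + j * (x1_max - x1_min) / (steps - 1)
            row.append(predict(centroids, (x1, x2)))
        grid.append(row)
    return grid


centroids = fit_centroids(X, y)
surface = decision_surface(centroids, (0.0, 6.0), (0.0, 6.0), steps=13)

# Render the surface as text: '.' marks class 0, '#' marks class 1.
for row in surface:
    print("".join(".#"[label] for label in row))
```

Each character in the printed grid is one prediction, and the line where the characters switch is the decision boundary. In practice you would typically build the grid with NumPy, predict with your fitted model, and draw the result as a filled contour plot (for example with Matplotlib's `contourf`), but the steps are the same: define a grid over the feature space, predict every grid point, and color by predicted class.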