Datasets from real-world scenarios are important for building and testing machine learning models. You may just want to have some data to experiment with an algorithm. You may also want to evaluate your model by setting up a benchmark or determining its weaknesses using different sets of data. Sometimes, you may also want to create synthetic datasets, where you can test your algorithms under controlled conditions by adding noise, correlations, or redundant information to the data.

In this post, we’ll illustrate how you can use Python to fetch some real-world time-series data from different sources. We’ll also create synthetic time-series data using Python’s libraries.

After completing this tutorial, you will know:

- How to use the pandas_datareader library
- How to call a web data server’s APIs using the requests library
- How to generate synthetic time-series data


Let’s get started.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Using pandas_datareader
- Using the requests library to fetch data using the remote server’s APIs
- Generate synthetic time-series data

Loading Data Using pandas-datareader

This post depends on a few libraries. If you haven’t installed them on your system, you can install them using pip:

```
pip install pandas_datareader requests
```

The pandas_datareader library allows you to fetch data from different sources, including Yahoo Finance for financial market data, World Bank for global development data, and St. Louis Fed for economic data. In this section, we’ll show how you can load data from different sources.

Behind the scenes, pandas_datareader pulls the data you want from the web in real time and assembles it into a pandas DataFrame. Because web pages are structured very differently from one another, each data source needs its own reader. Hence, pandas_datareader only supports reading from a limited number of sources, mostly related to financial and economic time series.

Fetching data is simple. For example, we know that the stock ticker for Apple is AAPL, so we can get the daily historical prices of Apple stock from Yahoo Finance as follows:

```python
import pandas_datareader as pdr

# Reading Apple shares from yahoo finance server
shares_df = pdr.DataReader('AAPL', 'yahoo', start='2021-01-01', end='2021-12-31')
# Look at the data read
print(shares_df)
```

The call to DataReader() requires the first argument to specify the ticker and the second argument the data source. The above code prints the DataFrame:

```
                  High         Low        Open       Close       Volume   Adj Close
Date
2021-01-04  133.610001  126.760002  133.520004  129.410004  143301900.0  128.453461
2021-01-05  131.740005  128.429993  128.889999  131.009995   97664900.0  130.041611
2021-01-06  131.050003  126.379997  127.720001  126.599998  155088000.0  125.664215
2021-01-07  131.630005  127.860001  128.360001  130.919998  109578200.0  129.952271
2021-01-08  132.630005  130.229996  132.429993  132.050003  105158200.0  131.073914
...                ...         ...         ...         ...          ...         ...
2021-12-27  180.419998  177.070007  177.089996  180.330002   74919600.0  180.100540
2021-12-28  181.330002  178.529999  180.160004  179.289993   79144300.0  179.061859
2021-12-29  180.630005  178.139999  179.330002  179.380005   62348900.0  179.151749
2021-12-30  180.570007  178.089996  179.470001  178.199997   59773000.0  177.973251
2021-12-31  179.229996  177.259995  178.089996  177.570007   64062300.0  177.344055

[252 rows x 6 columns]
```

We may also fetch the stock price history from multiple companies with the tickers in a list:

```python
companies = ['AAPL', 'MSFT', 'GE']
shares_multiple_df = pdr.DataReader(companies, 'yahoo', start='2021-01-01', end='2021-12-31')
print(shares_multiple_df.head())
```

and the result would be a DataFrame with multi-level columns:

```
Attributes   Adj Close                              Close              \
Symbols           AAPL        MSFT         GE        AAPL        MSFT
Date
2021-01-04  128.453461  215.434982  83.421600  129.410004  217.690002
2021-01-05  130.041611  215.642776  85.811905  131.009995  217.899994
2021-01-06  125.664223  210.051315  90.512833  126.599998  212.250000
2021-01-07  129.952286  216.028732  89.795753  130.919998  218.289993
2021-01-08  131.073944  217.344986  90.353485  132.050003  219.619995

...

Attributes       Volume
Symbols            AAPL        MSFT          GE
Date
2021-01-04  143301900.0  37130100.0   9993688.0
2021-01-05   97664900.0  23823000.0  10462538.0
2021-01-06  155088000.0  35930700.0  16448075.0
2021-01-07  109578200.0  27694500.0   9411225.0
2021-01-08  105158200.0  22956200.0   9089963.0
```
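To see how the two-level columns behave without calling any data server, here is a small sketch that builds a toy DataFrame with the same (Attributes, Symbols) column layout. The numbers are placeholders rather than real prices, and the variable names are made up for illustration; only the selection syntax is the point.

```python
import pandas as pd

# Toy frame mimicking the (Attributes, Symbols) column layout; values are placeholders
dates = pd.date_range("2021-01-04", periods=3, freq="D")
columns = pd.MultiIndex.from_product(
    [["Close", "Volume"], ["AAPL", "MSFT"]], names=["Attributes", "Symbols"]
)
toy_df = pd.DataFrame(
    [[1.0, 2.0, 10.0, 20.0],
     [1.5, 2.5, 11.0, 21.0],
     [2.0, 3.0, 12.0, 22.0]],
    index=dates, columns=columns,
)

# Selecting a top-level name returns one column per symbol
close = toy_df["Close"]
print(list(close.columns))

# An (attribute, symbol) tuple selects a single series
aapl_close = toy_df[("Close", "AAPL")]

# Date strings slice the DatetimeIndex, with the end point included
subset = toy_df.loc["2021-01-05":"2021-01-06", "Close"]
print(subset.shape)
```

The same indexing should apply to a frame with this column structure regardless of where the data came from.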

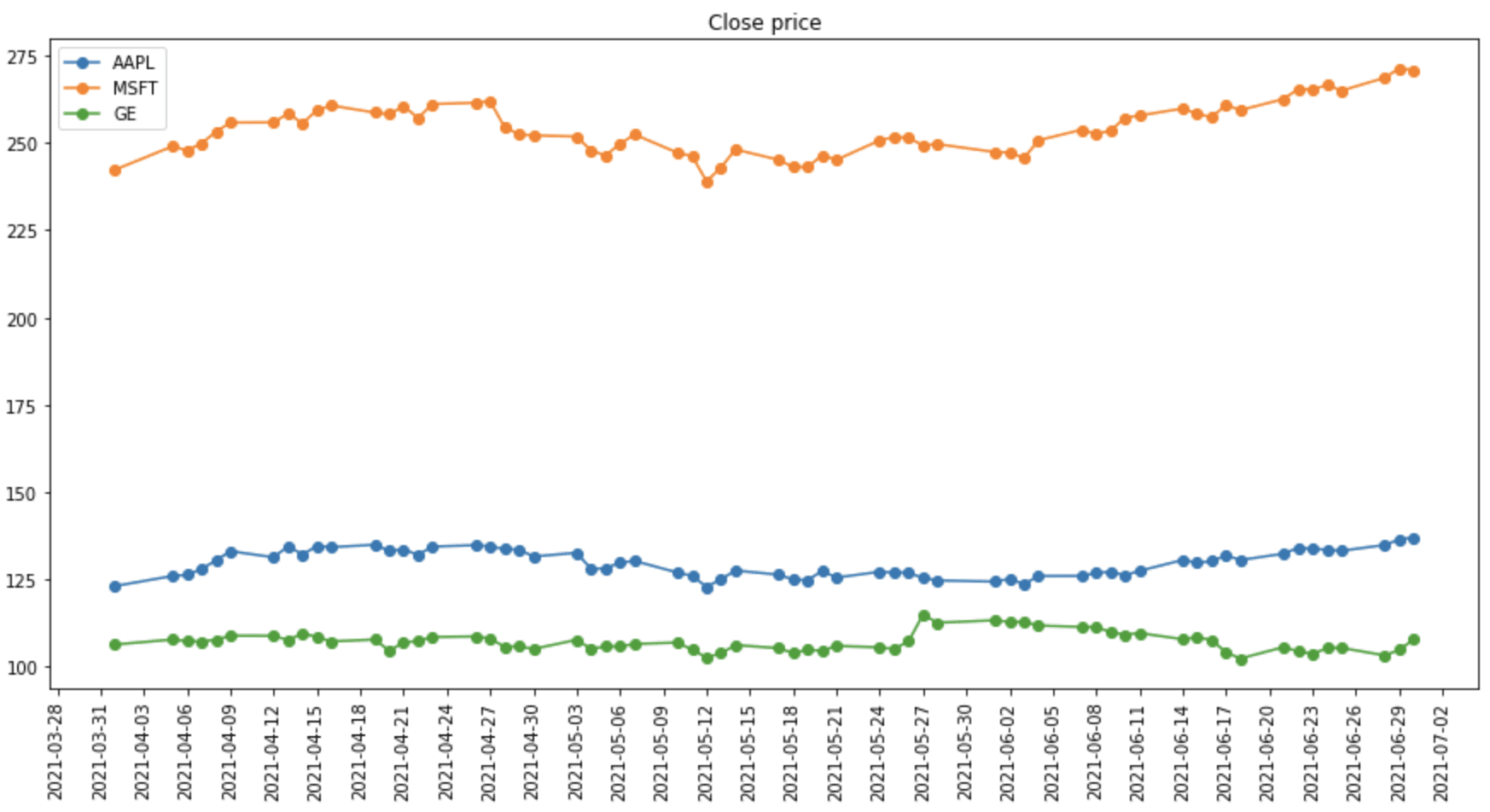

Because of the structure of DataFrames, it is convenient to extract part of the data. For example, we can plot only the daily close price on some dates using the following:

```python
import matplotlib.pyplot as plt
import matplotlib.ticker as ticker

# General routine for plotting time series data
def plot_timeseries_df(df, attrib, ticker_loc=1, title='Timeseries', legend=''):
    fig = plt.figure(figsize=(15,7))
    plt.plot(df[attrib], 'o-')
    _ = plt.xticks(rotation=90)
    plt.gca().xaxis.set_major_locator(ticker.MultipleLocator(ticker_loc))
    plt.title(title)
    plt.gca().legend(legend)
    plt.show()

plot_timeseries_df(shares_multiple_df.loc["2021-04-01":"2021-06-30"], "Close",
                   ticker_loc=3, title="Close price", legend=companies)
```

Multiple shares fetched from Yahoo Finance

The complete code is as follows:

```python
import pandas_datareader as pdr
import matplotlib.pyplot as plt
import matplotlib.ticker as ticker

companies = ['AAPL', 'MSFT', 'GE']
shares_multiple_df = pdr.DataReader(companies, 'yahoo', start='2021-01-01', end='2021-12-31')
print(shares_multiple_df)

def plot_timeseries_df(df, attrib, ticker_loc=1, title='Timeseries', legend=''):
    "General routine for plotting time series data"
    fig = plt.figure(figsize=(15,7))
    plt.plot(df[attrib], 'o-')
    _ = plt.xticks(rotation=90)
    plt.gca().xaxis.set_major_locator(ticker.MultipleLocator(ticker_loc))
    plt.title(title)
    plt.gca().legend(legend)
    plt.show()

plot_timeseries_df(shares_multiple_df.loc["2021-04-01":"2021-06-30"], "Close",
                   ticker_loc=3, title="Close price", legend=companies)
```

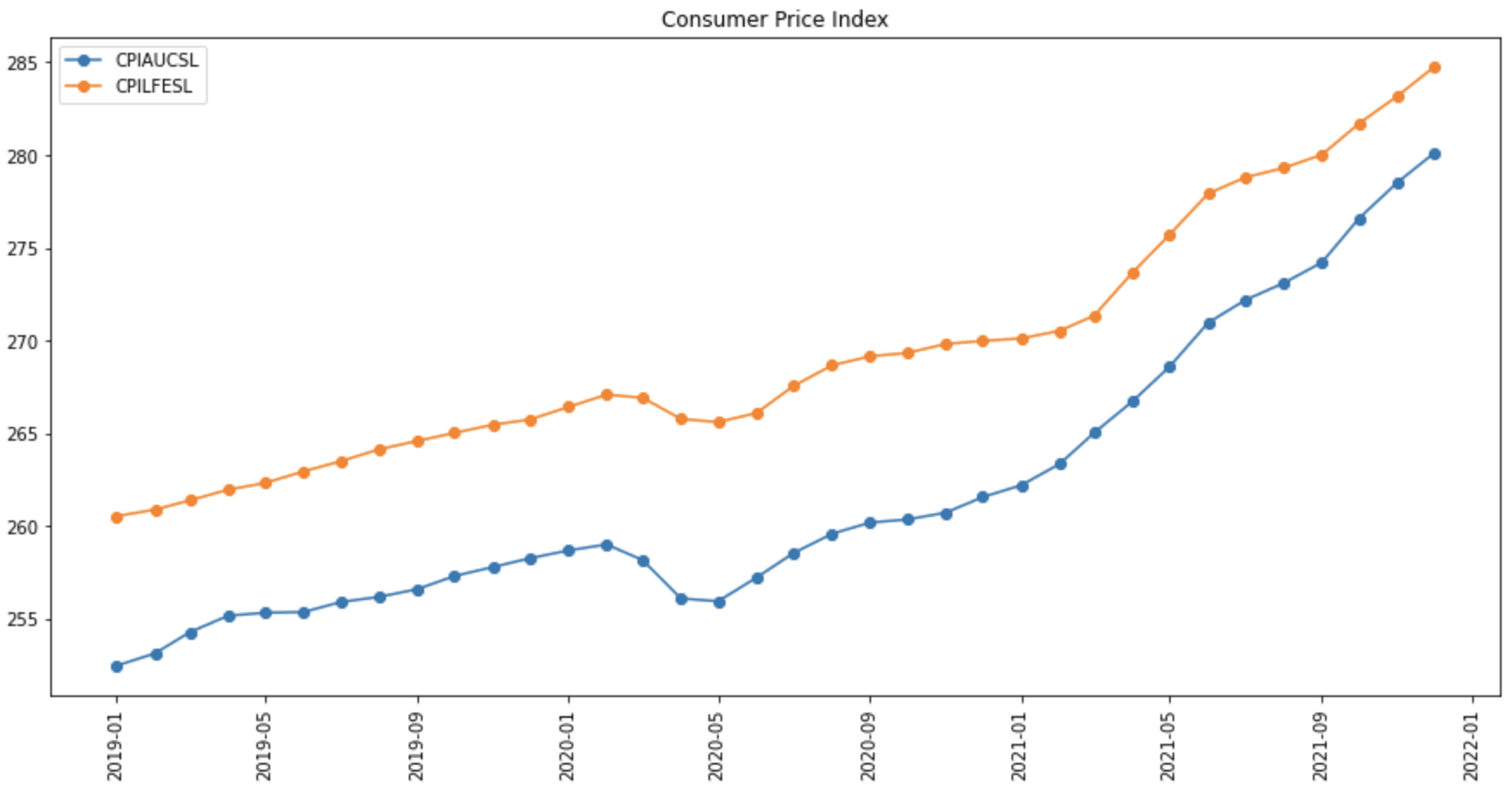

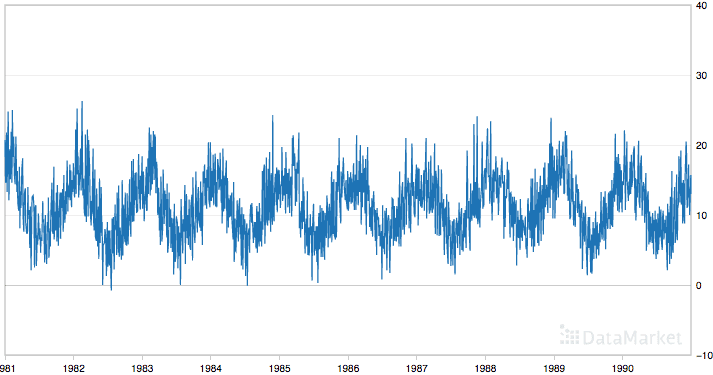

The syntax for reading from another data source using pandas-datareader is similar. For example, we can read an economic time series from the Federal Reserve Economic Data (FRED). Every time series in FRED is identified by a symbol. For example, the consumer price index for all urban consumers is CPIAUCSL, the consumer price index for all items less food and energy is CPILFESL, and personal consumption expenditure is PCE. You can search and look up the symbols from FRED’s webpage.

Below is how we can obtain two consumer price indices, CPIAUCSL and CPILFESL, and show them in a plot:

```python
import pandas_datareader as pdr
import matplotlib.pyplot as plt

# Read data from FRED and print
fred_df = pdr.DataReader(['CPIAUCSL','CPILFESL'], 'fred', "2010-01-01", "2021-12-31")
print(fred_df)

# Show in plot the data of 2019-2021
fig = plt.figure(figsize=(15,7))
plt.plot(fred_df.loc["2019":], 'o-')
plt.xticks(rotation=90)
plt.legend(fred_df.columns)
plt.title("Consumer Price Index")
plt.show()
```

Plot of Consumer Price Index

Obtaining data from the World Bank is similar, but its data is more complicated. A data series such as population is a time series that also has a country dimension. Therefore, we need to specify more parameters to obtain the data.

Using pandas_datareader, we have a specific set of APIs for the World Bank. The symbol for an indicator can be looked up from World Bank Open Data or searched using the following:

```python
from pandas_datareader import wb

matches = wb.search('total.*population')
print(matches[["id","name"]])
```

The search() function accepts a regular expression string (e.g., .* above means string of any length). This will print:

```
                               id                                               name
24     1.1_ACCESS.ELECTRICITY.TOT      Access to electricity (% of total population)
164            2.1_ACCESS.CFT.TOT  Access to Clean Fuels and Technologies for coo...
1999              CC.AVPB.PTPI.AI  Additional people below $1.90 as % of total po...
2000              CC.AVPB.PTPI.AR  Additional people below $1.90 as % of total po...
2001              CC.AVPB.PTPI.DI  Additional people below $1.90 as % of total po...
...                           ...                                                ...
13908           SP.POP.TOTL.FE.ZS         Population, female (% of total population)
13912           SP.POP.TOTL.MA.ZS           Population, male (% of total population)
13938              SP.RUR.TOTL.ZS           Rural population (% of total population)
13958           SP.URB.TOTL.IN.ZS           Urban population (% of total population)
13960              SP.URB.TOTL.ZS  Percentage of Population in Urban Areas (in % ...

[137 rows x 2 columns]
```

where the id column is the symbol for the time series.
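If regular expressions are unfamiliar, the following sketch shows how a pattern such as total.*population matches indicator names. It uses Python’s re module on three names taken from the output above, plus "Population, total" as a name that should not match. It assumes case-insensitive matching and only illustrates the pattern; it is not meant to reproduce how search() works internally.

```python
import re

# ".*" matches any run of characters between "total" and "population"
pattern = re.compile(r"total.*population", re.IGNORECASE)

names = [
    "Access to electricity (% of total population)",
    "Population, female (% of total population)",
    "Rural population (% of total population)",
    "Population, total",   # "total" comes last, so the pattern does not match
]

matched = [name for name in names if pattern.search(name)]
for name in matched:
    print(name)
```

The order of the words in the pattern matters, so it can be worth trying more than one pattern when looking for an indicator.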

We can read data for specific countries by specifying the ISO-3166-1 country code. But World Bank also contains non-country aggregates (e.g., South Asia), so while pandas_datareader allows us to use the string “all” for all countries, usually we do not want to use it. Below is how we can get a list of all countries and aggregates from the World Bank:

```python
import pandas_datareader.wb as wb

countries = wb.get_countries()
print(countries)
```

```
    iso3c iso2c                 name               region          adminregion          incomeLevel     lendingType capitalCity  longitude  latitude
0     ABW    AW                Aruba  Latin America & ...                               High income  Not classified  Oranjestad   -70.0167   12.5167
1     AFE    ZH  Africa Eastern a...           Aggregates                                Aggregates      Aggregates                    NaN       NaN
2     AFG    AF          Afghanistan           South Asia           South Asia           Low income             IDA       Kabul    69.1761   34.5228
3     AFR    A9               Africa           Aggregates                                Aggregates      Aggregates                    NaN       NaN
4     AFW    ZI  Africa Western a...           Aggregates                                Aggregates      Aggregates                    NaN       NaN
..    ...   ...                  ...                  ...                  ...                  ...             ...         ...        ...       ...
294   XZN    A5  Sub-Saharan Afri...           Aggregates                                Aggregates      Aggregates                    NaN       NaN
295   YEM    YE          Yemen, Rep.  Middle East & No...  Middle East & No...           Low income             IDA      Sana'a    44.2075   15.3520
296   ZAF    ZA         South Africa   Sub-Saharan Africa  Sub-Saharan Afri...  Upper middle income            IBRD    Pretoria    28.1871  -25.7460
297   ZMB    ZM               Zambia   Sub-Saharan Africa  Sub-Saharan Afri...  Lower middle income             IDA      Lusaka    28.2937  -15.3982
298   ZWE    ZW             Zimbabwe   Sub-Saharan Africa  Sub-Saharan Afri...  Lower middle income           Blend      Harare    31.0672  -17.8312
```

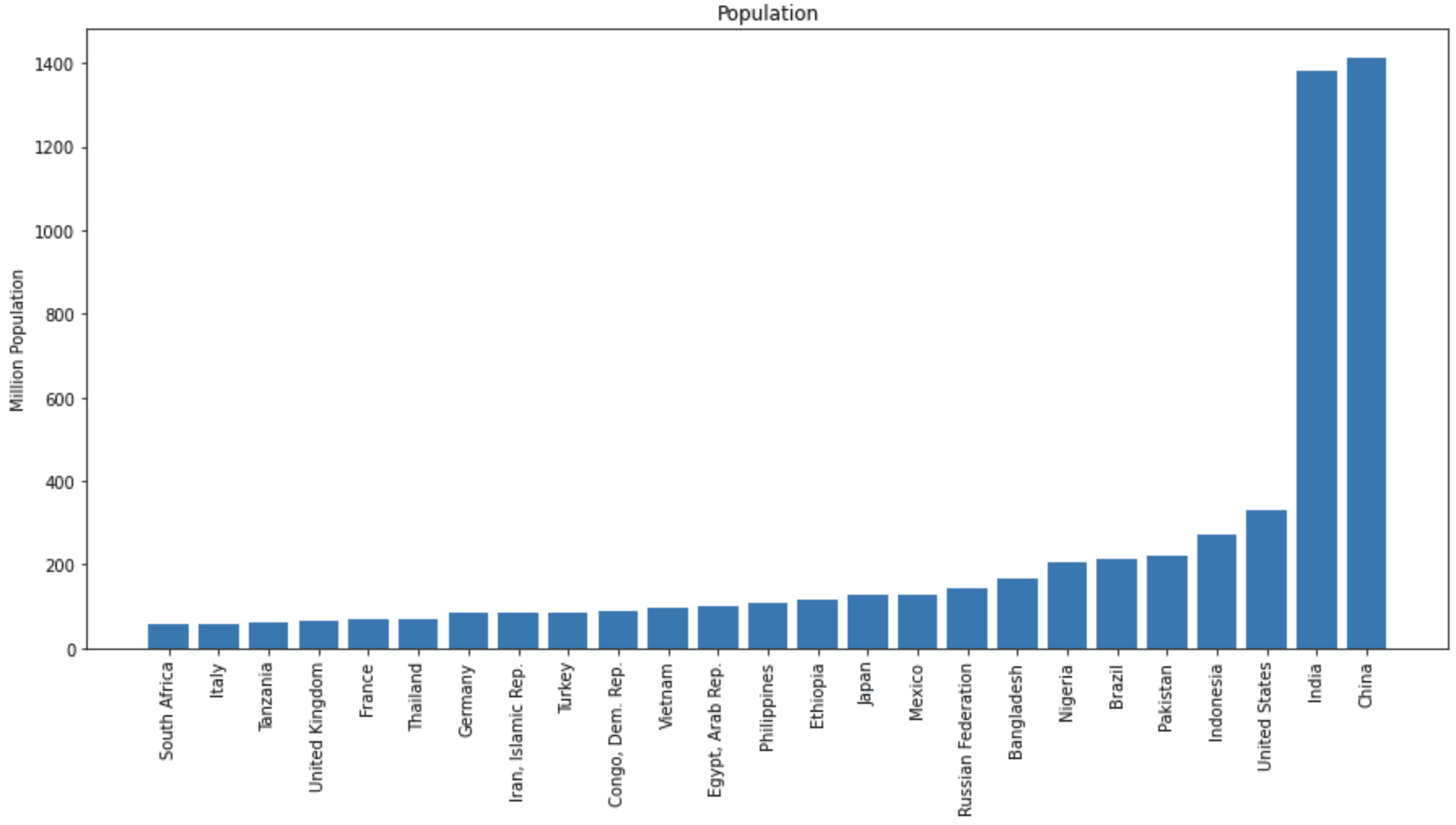

Below is how we can get the population of all countries in 2020 and show the top 25 countries in a bar chart. Certainly, we can also get the population data across years by specifying a different start and end year:

```python
import pandas_datareader.wb as wb
import pandas as pd
import matplotlib.pyplot as plt

# Get a list of 2-letter country code excluding aggregates
countries = wb.get_countries()
countries = list(countries[countries.region != "Aggregates"]["iso2c"])

# Read countries' total population data (SP.POP.TOTL) in year 2020
population_df = wb.download(indicator="SP.POP.TOTL", country=countries, start=2020, end=2020)

# Sort by population, then take top 25 countries, and make the index (i.e., countries) as a column
population_df = (population_df.dropna()
                              .sort_values("SP.POP.TOTL")
                              .iloc[-25:]
                              .reset_index())

# Plot the population, in millions
fig = plt.figure(figsize=(15,7))
plt.bar(population_df["country"], population_df["SP.POP.TOTL"]/1e6)
plt.xticks(rotation=90)
plt.ylabel("Million Population")
plt.title("Population")
plt.show()
```

Bar chart of total population of different countries
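When the start and end years differ, the result covers several years per country. The sketch below works offline on a toy frame and assumes the downloaded DataFrame is indexed by a (country, year) pair with years stored as strings; that layout is an assumption worth checking against your own output. The country names and numbers are placeholders, not real data.

```python
import pandas as pd

# Toy stand-in for a multi-year download; names and numbers are placeholders
index = pd.MultiIndex.from_product(
    [["Country A", "Country B"], ["2020", "2019"]], names=["country", "year"]
)
toy_df = pd.DataFrame({"SP.POP.TOTL": [110.0, 100.0, 55.0, 50.0]}, index=index)

# Pivot so that each country is a column and each year is a row
by_year = toy_df["SP.POP.TOTL"].unstack("country").sort_index()
print(by_year)

# Year-over-year growth per country
growth = by_year.pct_change().dropna()
print(growth)
```

With one column per country, the frame can be passed directly to plt.plot() to draw one line per country.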


Fetching Data Using Web APIs

Instead of using the pandas_datareader library, sometimes you have the option to fetch data directly from a web data server by calling its web APIs without any authentication needed. It can be done in Python using the standard library module urllib.request, or you may use the requests library for an easier interface.

World Bank is an example where web APIs are freely available, so we can easily read data in different formats, such as JSON, XML, or plain text. The page on the World Bank data repository’s API describes various APIs and their respective parameters. To repeat what we did in the previous example without using pandas_datareader, we first construct a URL to read a list of all countries so we can find the country code that is not an aggregate. Then, we can construct a query URL with the following arguments:

- country argument with value = all
- indicator argument with value = SP.POP.TOTL
- date argument with value = 2020
- format argument with value = json
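As a rough sketch, the arguments listed above could be assembled into a query URL using only the standard library. The URL layout follows the World Bank examples used later in this post, and per_page is the page-size parameter from those same examples. No request is sent here; the code only builds the string.

```python
from urllib.parse import urlencode

# Path arguments and query arguments, as listed above
country = "all"
indicator = "SP.POP.TOTL"
query = {"date": 2020, "format": "json", "per_page": 500}

base = "http://api.worldbank.org/v2/country/{country}/indicator/{indicator}"
url = base.format(country=country, indicator=indicator) + "?" + urlencode(query)
print(url)
```

Using urlencode() rather than string concatenation also takes care of escaping any special characters in the argument values.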

Of course, you can experiment with different indicators. By default, the World Bank returns 50 items on a page, and we need to query for one page after another to exhaust the data. We can enlarge the page size to get all data in one shot. Below is how we get the list of countries in JSON format and collect the country codes:

```python
import requests

# Create query URL for list of countries, by default only 50 entries returned per page
url = "http://api.worldbank.org/v2/country/all?format=json&per_page=500"
response = requests.get(url)
# Expects HTTP status code 200 for correct query
print(response.status_code)
# Get the response in JSON
header, data = response.json()
print(header)
# Collect a list of 3-letter country code excluding aggregates
countries = [item["id"]
             for item in data
             if item["region"]["value"] != "Aggregates"]
print(countries)
```

It will print the HTTP status code, the header, and the list of country codes as follows:

```
200
{'page': 1, 'pages': 1, 'per_page': '500', 'total': 299}
['ABW', 'AFG', 'AGO', 'ALB', ..., 'YEM', 'ZAF', 'ZMB', 'ZWE']
```

From the header, we can verify that we exhausted the data (page 1 out of 1). Then we can get all population data as follows:

```python
...

# Create query URL for total population from all countries in 2020
arguments = {
    "country": "all",
    "indicator": "SP.POP.TOTL",
    "date": "2020:2020",
    "format": "json"
}
url = "http://api.worldbank.org/v2/country/{country}/" \
      "indicator/{indicator}?date={date}&format={format}&per_page=500"
query_population = url.format(**arguments)
response = requests.get(query_population)
# Get the response in JSON
header, population_data = response.json()
```

You should check the World Bank API documentation for details on how to construct the URL. For example, the date syntax of 2020:2021 would mean the start and end years, and the extra parameter page=3 will give you the third page in a multi-page result. With the data fetched, we can filter for only those non-aggregate countries, make it into a pandas DataFrame for sorting, and then plot the bar chart:

```python
...

# Filter for countries, not aggregates
population = []
for item in population_data:
    if item["countryiso3code"] in countries:
        name = item["country"]["value"]
        population.append({"country":name, "population": item["value"]})
# Create DataFrame for sorting and filtering
population = pd.DataFrame.from_dict(population)
population = population.dropna().sort_values("population").iloc[-25:]
# Plot bar chart
fig = plt.figure(figsize=(15,7))
plt.bar(population["country"], population["population"]/1e6)
plt.xticks(rotation=90)
plt.ylabel("Million Population")
plt.title("Population")
plt.show()
```

The figure should be precisely the same as before. But as you can see, using pandas_datareader helps make the code more concise by hiding the low-level operations.

Putting everything together, the following is the complete code:

```python
import pandas as pd
import matplotlib.pyplot as plt
import requests

# Create query URL for list of countries, by default only 50 entries returned per page
url = "http://api.worldbank.org/v2/country/all?format=json&per_page=500"
response = requests.get(url)
# Expects HTTP status code 200 for correct query
print(response.status_code)
# Get the response in JSON
header, data = response.json()
print(header)
# Collect a list of 3-letter country code excluding aggregates
countries = [item["id"]
             for item in data
             if item["region"]["value"] != "Aggregates"]
print(countries)

# Create query URL for total population from all countries in 2020
arguments = {
    "country": "all",
    "indicator": "SP.POP.TOTL",
    "date": 2020,
    "format": "json"
}
url = "http://api.worldbank.org/v2/country/{country}/" \
      "indicator/{indicator}?date={date}&format={format}&per_page=500"
query_population = url.format(**arguments)
response = requests.get(query_population)
print(response.status_code)
# Get the response in JSON
header, population_data = response.json()
print(header)

# Filter for countries, not aggregates
population = []
for item in population_data:
    if item["countryiso3code"] in countries:
        name = item["country"]["value"]
        population.append({"country":name, "population": item["value"]})
# Create DataFrame for sorting and filtering
population = pd.DataFrame.from_dict(population)
population = population.dropna().sort_values("population").iloc[-25:]
# Plot bar chart
fig = plt.figure(figsize=(15,7))
plt.bar(population["country"], population["population"]/1e6)
plt.xticks(rotation=90)
plt.ylabel("Million Population")
plt.title("Population")
plt.show()
```
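The code in this section avoids paging by enlarging the page size. If a result does not fit in one page, a loop over pages is needed instead. Below is a minimal sketch of such a loop, written against a hypothetical fetch_page() callable that takes a page number and returns a (header, items) pair, with "page" and "pages" keys in the header as in the output shown earlier. Both fetch_all() and fake_fetch_page() are names made up for this illustration; the fake function stands in for the real HTTP call so the sketch runs offline.

```python
def fetch_all(fetch_page):
    """Collect items from every page.

    fetch_page is a hypothetical callable: it takes a 1-based page number
    and returns (header, items), where header has a "pages" key.
    """
    items = []
    page = 1
    while True:
        header, data = fetch_page(page)
        items.extend(data or [])
        if page >= int(header["pages"]):
            break
        page += 1
    return items


# Stand-in for the real HTTP call so that the sketch runs offline
def fake_fetch_page(page, per_page=2, records=("a", "b", "c", "d", "e")):
    pages = -(-len(records) // per_page)   # ceiling division
    start = (page - 1) * per_page
    header = {"page": page, "pages": pages, "per_page": per_page, "total": len(records)}
    return header, list(records[start:start + per_page])


result = fetch_all(fake_fetch_page)
print(result)
```

With the requests library, fetch_page() could plausibly wrap a call such as requests.get() on the query URL with the extra page parameter appended, followed by response.json().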

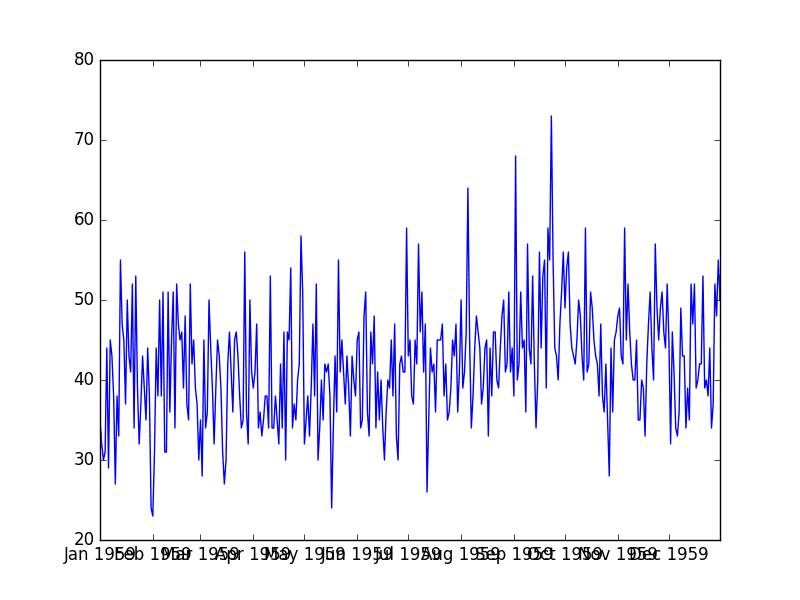

Creating Synthetic Data Using NumPy

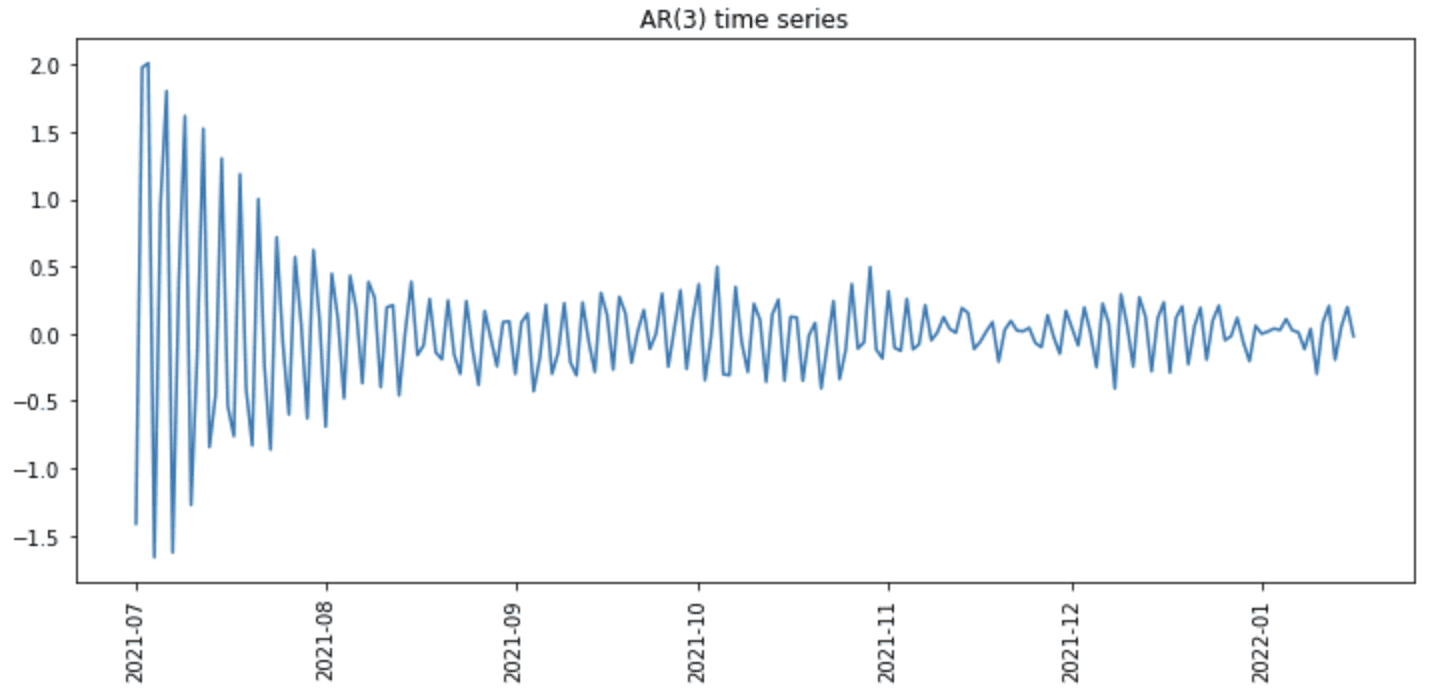

Sometimes, we may not want to use real-world data for our project because we need something specific that may not happen in reality. One particular example is to test out a model with ideal time-series data. In this section, we will see how we can create synthetic autoregressive (AR) time-series data.

The numpy.random module can be used to create random samples from different distributions. Its randn() function generates data from a standard normal distribution with zero mean and unit variance.
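As a quick check of the zero-mean, unit-variance property, the sketch below draws a large sample and prints its mean and standard deviation. It uses NumPy’s newer seeded Generator interface (default_rng() and standard_normal()) so that the result is reproducible; this is a substitution for illustration, as the rest of this post uses randn(), which draws from the same distribution.

```python
import numpy as np

# A seeded generator makes the sample reproducible
rng = np.random.default_rng(42)
samples = rng.standard_normal(100_000)

print(samples.mean())   # should be close to 0
print(samples.std())    # should be close to 1

# Scale and shift to obtain a different standard deviation and mean
shifted = 5.0 + 2.0 * samples
print(shifted.mean(), shifted.std())
```

Scaling a standard normal sample in this way is the same idea the AR example uses when it multiplies the noise by a noise level.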

In the AR($n$) model of order $n$, the value $x_t$ at time step $t$ depends upon the values at the previous $n$ time steps. That is,

$$
x_t = b_1 x_{t-1} + b_2 x_{t-2} + \dots + b_n x_{t-n} + e_t
$$

where the model parameters $b_i$ are the coefficients of the different lags of $x_t$, and the error term $e_t$ is assumed to follow a normal distribution.

With this formula, we can generate an AR(3) time series as in the example below. We first use randn() to generate the first 3 values of the series and then iteratively apply the formula to generate each subsequent data point. At each step, an error term is drawn using randn() again and scaled by the predefined noise_level:

```python
import numpy as np
import matplotlib.pyplot as plt

# Predefined parameters
ar_n = 3                     # Order of the AR(n) data
ar_coeff = [0.7, -0.3, -0.1] # Coefficients b_3, b_2, b_1
noise_level = 0.1            # Noise added to the AR(n) data
length = 200                 # Number of data points to generate

# Random initial values
ar_data = list(np.random.randn(ar_n))

# Generate the rest of the values from the last ar_n points
for i in range(length - ar_n):
    next_val = (np.array(ar_coeff) @ np.array(ar_data[-ar_n:])) + np.random.randn() * noise_level
    ar_data.append(next_val)

# Plot the time series
fig = plt.figure(figsize=(12,5))
plt.plot(ar_data)
plt.show()
```

The code above will create the following plot:

But we can further add the time axis by first converting the data into a pandas DataFrame and then adding the time as an index:

```python
...
import pandas as pd

# Convert the data into a pandas DataFrame
synthetic = pd.DataFrame({"AR(3)": ar_data})
synthetic.index = pd.date_range(start="2021-07-01", periods=len(ar_data), freq="D")

# Plot the time series
fig = plt.figure(figsize=(12,5))
plt.plot(synthetic.index, synthetic)
plt.xticks(rotation=90)
plt.title("AR(3) time series")
plt.show()
```

after which we will have the following plot instead:

Plot of synthetic time series

Using similar techniques, we can generate pure random noise (i.e., AR(0) series), ARIMA time series (i.e., with coefficients to error terms), or Brownian motion time series (i.e., running sum of random noise) as well.
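As a minimal sketch of these variations, the code below generates pure random noise, a random walk as a discrete stand-in for Brownian motion, and an ARMA(1,1) series, which adds one coefficient on the previous error term. The coefficients phi and theta are arbitrary illustrative values, and a seeded generator is used for reproducibility; neither comes from the example above. A full ARIMA series would additionally integrate (cumulatively sum) the ARMA output, which is not shown here.

```python
import numpy as np

rng = np.random.default_rng(0)   # seeded for reproducibility
length = 200

# Pure random noise: every point is an independent standard normal draw
white_noise = rng.standard_normal(length)

# Random walk (discrete Brownian motion): running sum of the noise
random_walk = np.cumsum(white_noise)

# ARMA(1,1): one lag of the series plus one lag of the error term
phi, theta = 0.6, 0.4            # illustrative coefficients, chosen arbitrarily
errors = rng.standard_normal(length)
arma = np.zeros(length)
arma[0] = errors[0]
for t in range(1, length):
    arma[t] = phi * arma[t - 1] + errors[t] + theta * errors[t - 1]

print(white_noise[:3], random_walk[:3], arma[:3])
```

Each of these arrays can be wrapped in a pandas DataFrame with a date index in the same way as the AR(3) series.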

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Libraries

Data source

- Yahoo! Finance

- St. Louis Fed Federal Reserve Economic Data

- World Bank Open Data

- World Bank Data API documentation

Books

- Think Python: How to Think Like a Computer Scientist by Allen B. Downey

- Programming in Python 3: A Complete Introduction to the Python Language by Mark Summerfield

- Python for Data Analysis, 2nd edition, by Wes McKinney

Summary

In this tutorial, you discovered various options for fetching data or generating synthetic time-series data in Python.

Specifically, you learned:

- How to use pandas_datareader and fetch financial data from different data sources
- How to call APIs to fetch data from different web servers using the requests library
- How to generate synthetic time-series data using NumPy’s random number generator

Do you have any questions about the topics discussed in this post? Ask your questions in the comments below, and I will do my best to answer.

Mehreen,

Good evening! This was fantastic, especially the last bit about developing synthetic time series data. I deal with sequences of time series data and have been using a far inferior method to develop synthetic data. While time-series data by itself is complicated to handle, sequences of time series bring additional complexities to the problem and I can use this as a guide for making my data a bit more robust. Thank you very much for posting this!

Take care,

Jeremy

version info for previous error about adding time as the index

Python: 3.9.7 (default, Sep 16 2021, 13:09:58)

[GCC 7.5.0]

scipy: 1.7.3

numpy: 1.21.2

matplotlib: 3.5.1

pandas: 1.4.1

statsmodels: 0.13.2

sklearn: 1.0.2

theano: 1.0.5

tensorflow: 2.4.1

keras: 2.4.3

Hello… Please specify the code listing on which you are experiencing an error.

hi , am getting a remotedata error message !

can i share the log somewhere ?

Hi Amit…Could you post the exact error message?

Hello,

When try to get the data i get following error messages.

Could you support?

Error message:ConnectionError: HTTPSConnectionPool(host=’finance.yahoo.com’, port=443): Max retries exceeded with url: /quote/AAPL/history?period1=1609462800&period2=1640998799&interval=1d&frequency=1d&filter=history (Caused by NewConnectionError(‘: Failed to establish a new connection: [Errno 11001] getaddrinfo failed’))

Hi F.S…The following discussion may be of interest:

https://stackoverflow.com/questions/63881566/python-connectionerror-httpsconnectionpoolhost-finance-yahoo-com-port-443