It is important that beginner machine learning practitioners practice on small real-world datasets.

So-called standard machine learning datasets contain actual observations, fit into memory, and are well studied and well understood. As such, they can be used by beginner practitioners to quickly test, explore, and practice data preparation and modeling techniques.

A practitioner can confirm whether they have the data skills required to achieve a good result on a standard machine learning dataset. A good result is a result that is above the 80th or 90th percentile result of what may be technically possible for a given dataset.

The skills developed by practitioners on standard machine learning datasets can provide the foundation for tackling larger, more challenging projects.

In this post, you will discover standard machine learning datasets for classification and regression and the baseline and good results that one may expect to achieve on each.

After reading this post, you will know:

- The importance of standard machine learning datasets.

- How to systematically evaluate a model on a standard machine learning dataset.

- Standard datasets for classification and regression and the baseline and good performance expected on each.


Let’s get started.

- Update Jun/2020: Added improved results for the glass and horse colic dataset.

- Update Aug/2020: Added better results for horse colic, housing, and auto imports (thanks Dragos Stan)

Results for Standard Classification and Regression Machine Learning Datasets


Overview

This tutorial is divided into seven parts; they are:

- Value of Small Machine Learning Datasets

- Definition of a Standard Machine Learning Dataset

- Standard Machine Learning Datasets

- Good Results for Standard Datasets

- Model Evaluation Methodology

- Results for Classification Datasets

    - Binary Classification Datasets

        - Ionosphere

        - Pima Indian Diabetes

        - Sonar

        - Wisconsin Breast Cancer

        - Horse Colic

    - Multiclass Classification Datasets

        - Iris Flowers

        - Glass

        - Wine

        - Wheat Seeds

- Results for Regression Datasets

    - Housing

    - Auto Insurance

    - Abalone

    - Auto Imports

Value of Small Machine Learning Datasets

There are a number of small machine learning datasets for classification and regression predictive modeling problems that are frequently reused.

Sometimes the datasets are used as the basis for demonstrating a machine learning or data preparation technique. Other times, they are used as a basis for comparing different techniques.

These datasets were collected and made publicly available in the early days of applied machine learning, when data and real-world datasets were scarce. As such, they have become standard, or canonized, through wide adoption and reuse alone, not because the problems are intrinsically interesting.

Finding a good model on one of these datasets does not mean you have “solved” the general problem, which is why they are sometimes referred to as “toy” datasets. Also, some of the datasets may contain names or indicators that might be considered questionable or culturally insensitive (which was very likely not the intent when the data was collected).

Such datasets are not really useful as points of comparison for machine learning algorithms, as most empirical experiments are nearly impossible to reproduce.

Nevertheless, such datasets are valuable in the field of applied machine learning today, even in the era of standard machine learning libraries, big data, and the abundance of data.

There are three main reasons why they are valuable; they are:

- The datasets are real.

- The datasets are small.

- The datasets are understood.

Real datasets are useful as compared to contrived datasets because they are messy. They contain measurement errors, missing values, mislabeled examples, and more. Some or all of these issues must be searched for and addressed, and they are the same issues we may encounter when working on our own projects.

Small datasets are useful as compared to large datasets that may be many gigabytes in size. Small datasets can easily fit into memory and allow for the testing and exploration of many different data visualization, data preparation, and modeling algorithms easily and quickly. Speed of testing ideas and getting feedback is critical for beginners, and small datasets facilitate exactly this.

Understood datasets are useful as compared to new or newly created datasets. The features are well defined, the units of the features are specified, the source of the data is known, and the dataset has been well studied in tens, hundreds, and in some cases, thousands of research projects and papers. This provides a context in which results can be compared and evaluated, a property not available in entirely new domains.

Given these properties, I strongly recommend that machine learning beginners (and practitioners who are new to a specific technique) start with standard machine learning datasets.

Definition of a Standard Machine Learning Dataset

I would like to go one step further and define some more specific properties of a “standard” machine learning dataset.

A standard machine learning dataset has the following properties.

- Less than 10,000 rows (samples).

- Less than 100 columns (features).

- Last column is the target variable.

- Stored in a single file with CSV format and without header line.

- Missing values marked with a question mark character (‘?’)

- It is possible to achieve a better than naive result.
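The properties above that concern file layout can be checked mechanically. The sketch below is only an illustration using the Python standard library: the function name `check_standard_dataset` and the tiny inline sample are made up for this example, and the last property (beating a naive result) is not checked because it requires fitting models.

```python
import csv
import io

def check_standard_dataset(csv_text, max_rows=10000, max_cols=100, missing_marker='?'):
    """Check headerless CSV text against the size and layout properties listed above."""
    rows = [row for row in csv.reader(io.StringIO(csv_text)) if row]
    n_rows = len(rows)
    # the last column is assumed to be the target, so it is not counted as a feature
    n_features = len(rows[0]) - 1
    n_missing = sum(cell.strip() == missing_marker for row in rows for cell in row)
    return {
        'rows': n_rows,
        'features': n_features,
        'rows_ok': n_rows < max_rows,
        'features_ok': n_features < max_cols,
        'missing_values': n_missing,
    }

# made-up sample in the expected layout: no header, target last, '?' for missing
sample = "5.1,3.5,?,a\n4.9,?,1.4,b\n6.2,2.9,4.3,a\n"
report = check_standard_dataset(sample)
print(report)
```

To run the same check on a downloaded file, read the file contents into a string and pass it in place of `sample`.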

Now that we have a clear definition of a standard dataset, let’s look at where to find such datasets.

Standard Machine Learning Datasets

A dataset is a standard machine learning dataset if it is frequently used in books, research papers, tutorials, presentations, and more.

The best repository for these so-called classical or standard machine learning datasets is the University of California at Irvine (UCI) machine learning repository. This website categorizes datasets by type and provides a download of the data and additional information about each dataset and references relevant papers.

I have chosen five or fewer datasets for each problem type as a starting point.

All standard datasets used in this post are available on GitHub, in the jbrownlee/Datasets repository that the code examples download from.

Download links are also provided for each dataset and for additional details about the dataset (the so-called “.names” file).

Each code example will automatically download a given dataset for you. If this is a problem, you can download the CSV file manually, place it in the same directory as the code example, then change the code example to use the filename instead of the URL.

For example:

```python
...
# load dataset
dataframe = read_csv('ionosphere.csv', header=None)
```

Good Results for Standard Datasets

A challenge for beginners when working with standard machine learning datasets is knowing what represents a good result.

In general, a model is skillful if it can demonstrate a performance that is better than a naive method, such as predicting the majority class in classification or the mean value in regression. This naive method is called a baseline model, and its score is a baseline of performance that provides a reference point specific to a dataset.
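To make the idea of a naive method concrete, here is a minimal sketch in plain Python. The helper names and the sample values are made up for illustration, and the scores are computed on the same data rather than with cross-validation, so this is simpler than the evaluation used later in the post.

```python
from collections import Counter
from statistics import mean

def majority_class_accuracy(labels):
    """Accuracy of always predicting the most common label."""
    _, count = Counter(labels).most_common(1)[0]
    return count / len(labels)

def mean_baseline_mae(values):
    """Mean absolute error of always predicting the mean value."""
    centre = mean(values)
    return mean(abs(v - centre) for v in values)

# made-up labels and targets, for illustration only
labels = ['g', 'g', 'g', 'b', 'g', 'b', 'g', 'b']
targets = [10.0, 12.0, 14.0, 20.0]

baseline_acc = majority_class_accuracy(labels)
baseline_mae = mean_baseline_mae(targets)
print('Baseline accuracy: %.3f' % baseline_acc)
print('Baseline MAE: %.3f' % baseline_mae)
```

A model has skill on these made-up samples only if its accuracy is above the first number or its error is below the second.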

Given that we now have a method for determining whether a model has skill on a dataset, beginners remain interested in the upper limits of performance for a given dataset. This is required information to know whether you are “getting good” at the process of applied machine learning.

Good does not mean perfect predictions. All models will have prediction errors, and perfect predictions are not possible (tractable?) on real-world datasets.

Defining “good” or “best” results for a dataset is challenging because it is dependent generally on the model evaluation methodology, and specifically on the versions of the dataset and libraries used in the evaluation.

Good means “good-enough” given available resources. Often, this means a skill score that is above the 80th or 90th percentile of what might be possible for a dataset given unbounded skill, time, and computational resources.

In this tutorial, you will discover how to calculate the baseline performance and “good” (near-best) performance that is possible on each dataset. You will also discover how to specify the data preparation and model used to achieve the performance.

Rather than explain how to do this, a short Python code example is given that you can use to reproduce the baseline and good result.

Model Evaluation Methodology

The evaluation methodology is simple and fast, and generally recommended when working with small predictive modeling problems.

The evaluation procedure is as follows:

- A model is evaluated using 10-fold cross-validation.

- The evaluation procedure is repeated three times.

- The random seed for the cross-validation splits is fixed (random_state=1).

This results in 30 estimates of model performance from which a mean and standard deviation can be calculated to summarize the performance of a given model.

Using the same fixed seed ensures that each algorithm evaluated on the dataset gets the same splits of the data, ensuring a fair direct comparison.
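The arithmetic behind the 30 estimates, and the reason a seed gives every model the same splits, can be sketched in plain Python. This is an illustrative re-implementation and not how scikit-learn generates its splits: the function name is hypothetical, the folds are not stratified, and it assumes one fixed seed for the whole procedure.

```python
import random

def repeated_kfold_indices(n_samples, n_splits=10, n_repeats=3, seed=1):
    """Yield (train, test) index lists for repeated k-fold cross-validation."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        # reshuffle the row indices once per repeat
        indices = list(range(n_samples))
        rng.shuffle(indices)
        for fold in range(n_splits):
            # every n_splits-th shuffled index goes to this fold's test set
            test = indices[fold::n_splits]
            test_set = set(test)
            train = [i for i in indices if i not in test_set]
            yield train, test

splits_a = list(repeated_kfold_indices(50))
splits_b = list(repeated_kfold_indices(50))
print(len(splits_a))         # 10 folds x 3 repeats
print(splits_a == splits_b)  # same seed, same splits
```

Each of the train/test pairs yields one score, which is where the 30 values used for the mean and standard deviation come from.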

Using the scikit-learn Python machine learning library, the example below can be used to evaluate a given model (or Pipeline). The RepeatedStratifiedKFold class defines the number of folds and repeats for classification, and the cross_val_score() function defines the score and performs the evaluation and returns a list of scores from which a mean and standard deviation can be calculated.

```python
...
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
```

For regression we can use the RepeatedKFold class and the MAE score.

```python
...
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
```
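The 'neg_mean_absolute_error' score is the MAE with its sign flipped, because scikit-learn scorers follow a higher-is-better convention. The regression examples in this post therefore take the absolute value of the scores before summarizing them. The plain-Python sketch below illustrates the idea with made-up targets and predictions for three folds; `pstdev` is used because it matches the default behavior of NumPy's `std`.

```python
from statistics import mean, pstdev

def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))

# made-up (targets, predictions) pairs, one per fold
folds = [
    ([3.0, 5.0], [2.0, 7.0]),
    ([10.0, 8.0], [9.0, 8.0]),
    ([1.0, 4.0], [3.0, 4.0]),
]

# negated scores, as a higher-is-better scorer would report them
neg_scores = [-mean_absolute_error(t, p) for t, p in folds]
# flip the sign back before reporting
mae_scores = [abs(s) for s in neg_scores]
print('MAE: %.3f (%.3f)' % (mean(mae_scores), pstdev(mae_scores)))
```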

The “good” scores reported are the best that I can get out of my own personal set of “get a good result fast on a given dataset” scripts. I believe the scores represent good scores that can be achieved on each dataset, perhaps in the 90th or 95th percentile of what is possible for each dataset, if not better.

That being said, I am not claiming that they are the best possible scores, as I have not performed hyperparameter tuning for the well-performing models. I leave this as an exercise for interested practitioners. The best score is not required: achieving a top-percentile score on a given dataset is more than sufficient to demonstrate competence.

Note: I will update the results and models as I improve my own personal scripts and achieve better scores.

Can you get a better score for a dataset?

I would love to know. Share your model and score in the comments below and I will try to reproduce it and update the post (and give you full credit!)

Let’s dive in.

Results for Classification Datasets

Classification is a predictive modeling problem that predicts one label given one or more input variables.

The baseline model for classification tasks is a model that predicts the majority label. This can be achieved in scikit-learn using the DummyClassifier class with the 'most_frequent' strategy; for example:

```python
...
model = DummyClassifier(strategy='most_frequent')
```

The standard evaluation for classification models is classification accuracy, although this is not ideal for imbalanced and some multi-class problems. Nevertheless, for better or worse, this score will be used (for now).

Accuracy is reported as a fraction between 0 (0% or no skill) and 1 (100% or perfect skill).

There are two main types of classification tasks: binary and multi-class classification, divided based on the number of labels to be predicted for a given dataset as two or more than two respectively. Given the prevalence of classification tasks in machine learning, we will treat these two subtypes of classification problems separately.

Binary Classification Datasets

In this section, we will review the baseline and good performance on the following binary classification predictive modeling datasets:

- Ionosphere

- Pima Indian Diabetes

- Sonar

- Wisconsin Breast Cancer

- Horse Colic

Ionosphere

- Download: ionosphere.csv

- Details: ionosphere.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Ionosphere
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import MinMaxScaler
from sklearn.dummy import DummyClassifier
from sklearn.svm import SVC
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/ionosphere.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = SVC(kernel='rbf', gamma='scale', C=10)
steps = [('s',StandardScaler()), ('n',MinMaxScaler()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (351, 34), (351,)
Baseline: 0.641 (0.006)
Good: 0.948 (0.033)
```

Pima Indian Diabetes

- Download: pima-indians-diabetes.csv

- Details: pima-indians-diabetes.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Pima Indian Diabetes
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = LogisticRegression(solver='newton-cg',penalty='l2',C=1)
m_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (768, 8), (768,)
Baseline: 0.651 (0.003)
Good: 0.774 (0.055)
```

Sonar

- Download: sonar.csv

- Details: sonar.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Sonar
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PowerTransformer
from sklearn.dummy import DummyClassifier
from sklearn.neighbors import KNeighborsClassifier
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/sonar.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = KNeighborsClassifier(n_neighbors=2, metric='minkowski', weights='distance')
steps = [('p',PowerTransformer()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (208, 60), (208,)
Baseline: 0.534 (0.012)
Good: 0.882 (0.071)
```

Wisconsin Breast Cancer

- Download: breast-cancer-wisconsin.csv

- Details: breast-cancer-wisconsin.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Wisconsin Breast Cancer
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PowerTransformer
from sklearn.impute import SimpleImputer
from sklearn.dummy import DummyClassifier
from sklearn.svm import SVC
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/breast-cancer-wisconsin.csv'
dataframe = read_csv(url, header=None, na_values='?')
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = SVC(kernel='sigmoid', gamma='scale', C=0.1)
steps = [('i',SimpleImputer(strategy='median')), ('p',PowerTransformer()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (699, 9), (699,)
Baseline: 0.655 (0.003)
Good: 0.973 (0.019)
```

Horse Colic

- Download: horse-colic.csv

- Details: horse-colic.names

The complete code example for achieving baseline and a good result on this dataset is listed below (credit to Dragos Stan).

```python
# baseline and good result for Horse Colic
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.impute import SimpleImputer
from sklearn.dummy import DummyClassifier
from xgboost import XGBClassifier
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/horse-colic.csv'
dataframe = read_csv(url, header=None, na_values='?')
data = dataframe.values
ix = [i for i in range(data.shape[1]) if i != 23]
X, y = data[:, ix], data[:, 23]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = XGBClassifier(colsample_bylevel=0.9, colsample_bytree=0.9, importance_type='gain', learning_rate=0.01, max_depth=4, n_estimators=200, reg_alpha=0.1, reg_lambda=0.5, subsample=1.0)
imputer = SimpleImputer(strategy='median')
pipeline = Pipeline(steps=[('i', imputer), ('m', model)])
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (300, 27), (300,)
Baseline: 0.637 (0.010)
Good: 0.893 (0.057)
```

Multiclass Classification Datasets

In this section, we will review the baseline and good performance on the following multiclass classification predictive modeling datasets:

- Iris Flowers

- Glass

- Wine

- Wheat Seeds

Iris Flowers

- Download: iris.csv

- Details: iris.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Iris
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PowerTransformer
from sklearn.dummy import DummyClassifier
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = LinearDiscriminantAnalysis()
steps = [('p',PowerTransformer()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (150, 4), (150,)
Baseline: 0.333 (0.000)
Good: 0.980 (0.039)
```

Glass

- Download: glass.csv

- Details: glass.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

Note: The test harness was changed from 10-fold to 5-fold cross-validation to ensure each fold had examples of all classes and avoid warning messages.

```python
# baseline and good result for Glass
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/glass.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
weights = {0:1.0, 1:1.0, 2:2.0, 3:2.0, 4:2.0, 5:2.0}
model = RandomForestClassifier(n_estimators=1000, class_weight=weights, max_features=2)
m_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (214, 9), (214,)
Baseline: 0.355 (0.009)
Good: 0.815 (0.048)
```
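The switch to 5-fold cross-validation for this dataset comes down to simple counting: a stratified split can only place an example of a class in every fold if that class has at least as many examples as there are folds. The sketch below shows the check in plain Python. The helper name is hypothetical, and the class counts are an assumption (six classes summing to 214 rows, the smallest with 9 examples) that you may want to verify against the downloaded file.

```python
from collections import Counter

def classes_too_small(labels, n_splits):
    """Return the classes that have fewer examples than the number of folds."""
    counts = Counter(labels)
    return {label: n for label, n in counts.items() if n < n_splits}

# assumed label column after encoding: six classes, 214 rows in total
labels = [0] * 70 + [1] * 76 + [2] * 17 + [3] * 13 + [4] * 9 + [5] * 29

print(classes_too_small(labels, n_splits=10))  # classes that cannot fill 10 folds
print(classes_too_small(labels, n_splits=5))   # classes that cannot fill 5 folds
```

Under these assumed counts, one class is too small for 10 folds and none is too small for 5.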

Wine

- Download: wine.csv

- Details: wine.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Wine
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import MinMaxScaler
from sklearn.dummy import DummyClassifier
from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/wine.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = QuadraticDiscriminantAnalysis()
steps = [('s',StandardScaler()), ('n',MinMaxScaler()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (178, 13), (178,)
Baseline: 0.399 (0.017)
Good: 0.992 (0.020)
```

Wheat Seeds

- Download: wheat-seeds.csv

- Details: wheat-seeds.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Wheat Seeds
from numpy import mean
from numpy import std
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import RidgeClassifier
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/wheat-seeds.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = LabelEncoder().fit_transform(y.astype('str'))
# evaluate naive
naive = DummyClassifier(strategy='most_frequent')
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = RidgeClassifier(alpha=0.2)
steps = [('s',StandardScaler()), ('m',model)]
pipeline = Pipeline(steps=steps)
m_scores = cross_val_score(pipeline, X, y, scoring='accuracy', cv=cv, n_jobs=-1, error_score='raise')
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (210, 7), (210,)
Baseline: 0.333 (0.000)
Good: 0.973 (0.036)
```

Results for Regression Datasets

Regression is a predictive modeling problem that predicts a numerical value given one or more input variables.

The baseline model for regression tasks is a model that predicts the mean or median value. This can be achieved in scikit-learn using the DummyRegressor class with the 'median' strategy; for example:

```python
...
model = DummyRegressor(strategy='median')
```

The standard evaluation for regression models is mean absolute error (MAE), although this is not ideal for all regression problems. Nevertheless, for better or worse, this score will be used (for now).

MAE is reported as an error score between 0 (perfect skill) and a very large number or infinity (no skill).
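As a quick illustration of how the score is calculated, the sketch below computes MAE by hand and with scikit-learn's mean_absolute_error() function. The expected and predicted values are made up for the example.

```python
# minimal sketch: calculating mean absolute error on made-up values
import numpy as np
from sklearn.metrics import mean_absolute_error

# made-up expected and predicted values (illustrative only)
y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 8.0])

# MAE is the average of the absolute differences
mae_manual = np.mean(np.abs(y_true - y_pred))
# the same calculation using scikit-learn
mae_sklearn = mean_absolute_error(y_true, y_pred)
print('MAE: %.3f' % mae_manual)
```

When MAE is used with cross_val_score(), the scoring name is 'neg_mean_absolute_error' and the returned scores are negated so that larger is better. This is why the examples in this section pass the scores through absolute() before reporting them.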

In this section, we will review the baseline and good performance on the following regression predictive modeling datasets:

- Housing

- Auto Insurance

- Abalone

- Auto Imports

Housing

- Download: housing.csv

- Details: housing.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Housing
from numpy import mean
from numpy import std
from numpy import absolute
from pandas import read_csv
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
from sklearn.dummy import DummyRegressor
from xgboost import XGBRegressor
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = y.astype('float32')
# evaluate naive
naive = DummyRegressor(strategy='median')
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
n_scores = absolute(n_scores)
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = XGBRegressor(colsample_bylevel=0.4, colsample_bynode=0.6, colsample_bytree=1.0, learning_rate=0.06, max_depth=5, n_estimators=700, subsample=0.8)
m_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
m_scores = absolute(m_scores)
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (506, 13), (506,)
Baseline: 6.544 (0.754)
Good: 1.928 (0.292)
```

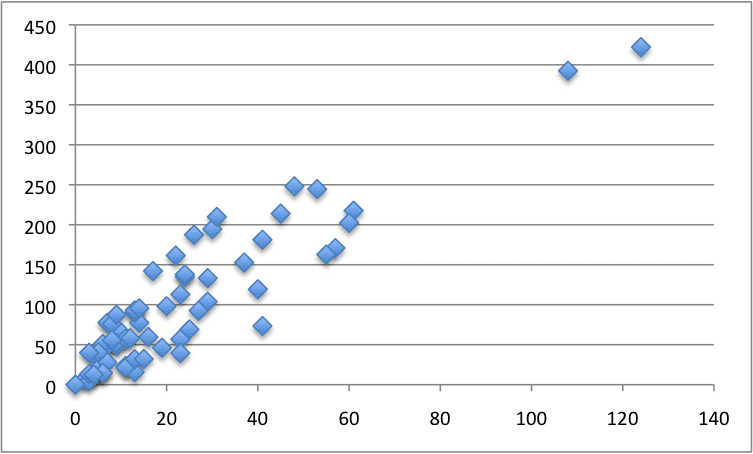

Auto Insurance

- Download: auto-insurance.csv

- Details: auto-insurance.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Auto Insurance
from numpy import mean
from numpy import std
from numpy import absolute
from pandas import read_csv
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
from sklearn.pipeline import Pipeline
from sklearn.compose import TransformedTargetRegressor
from sklearn.preprocessing import PowerTransformer
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import HuberRegressor
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/auto-insurance.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
X = X.astype('float32')
y = y.astype('float32')
# evaluate naive
naive = DummyRegressor(strategy='median')
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(naive, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
n_scores = absolute(n_scores)
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = HuberRegressor(epsilon=1.0, alpha=0.001)
steps = [('p',PowerTransformer()), ('m',model)]
pipeline = Pipeline(steps=steps)
target = TransformedTargetRegressor(regressor=pipeline, transformer=PowerTransformer())
m_scores = cross_val_score(target, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
m_scores = absolute(m_scores)
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (63, 1), (63,)
Baseline: 66.624 (19.303)
Good: 28.358 (9.747)
```

Abalone

- Download: abalone.csv

- Details: abalone.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Abalone
from numpy import mean
from numpy import std
from numpy import absolute
from pandas import read_csv
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
from sklearn.pipeline import Pipeline
from sklearn.compose import TransformedTargetRegressor
from sklearn.preprocessing import OneHotEncoder
from sklearn.preprocessing import PowerTransformer
from sklearn.compose import ColumnTransformer
from sklearn.dummy import DummyRegressor
from sklearn.svm import SVR
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/abalone.csv'
dataframe = read_csv(url, header=None)
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
# minimally prepare dataset
y = y.astype('float32')
# evaluate naive
naive = DummyRegressor(strategy='median')
transform = ColumnTransformer(transformers=[('c', OneHotEncoder(), [0])], remainder='passthrough')
pipeline = Pipeline(steps=[('ColumnTransformer',transform), ('Model',naive)])
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(pipeline, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
n_scores = absolute(n_scores)
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = SVR(kernel='rbf',gamma='scale',C=10)
target = TransformedTargetRegressor(regressor=model, transformer=PowerTransformer(), check_inverse=False)
pipeline = Pipeline(steps=[('ColumnTransformer',transform), ('Model',target)])
m_scores = cross_val_score(pipeline, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
m_scores = absolute(m_scores)
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (4177, 8), (4177,)
Baseline: 2.363 (0.116)
Good: 1.460 (0.075)
```

Auto Imports

- Download: auto_imports.csv

- Details: auto_imports.names

The complete code example for achieving baseline and a good result on this dataset is listed below.

```python
# baseline and good result for Auto Imports
from numpy import mean
from numpy import std
from numpy import absolute
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.dummy import DummyRegressor
from xgboost import XGBRegressor
# load dataset
url = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/auto_imports.csv'
dataframe = read_csv(url, header=None, na_values='?')
data = dataframe.values
X, y = data[:, :-1], data[:, -1]
print('Shape: %s, %s' % (X.shape,y.shape))
y = y.astype('float32')
# evaluate naive
naive = DummyRegressor(strategy='median')
cat_ix = [2,3,4,5,6,7,8,14,15,17]
num_ix = [0,1,9,10,11,12,13,16,18,19,20,21,22,23,24]
steps = [('c', Pipeline(steps=[('s',SimpleImputer(strategy='most_frequent')),('oe',OneHotEncoder(handle_unknown='ignore'))]), cat_ix), ('n', SimpleImputer(strategy='median'), num_ix)]
transform = ColumnTransformer(transformers=steps, remainder='passthrough')
pipeline = Pipeline(steps=[('ColumnTransformer',transform), ('Model',naive)])
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(pipeline, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
n_scores = absolute(n_scores)
print('Baseline: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
# evaluate model
model = XGBRegressor(colsample_bylevel=0.2, colsample_bytree=0.6, learning_rate=0.05, max_depth=6, n_estimators=200, subsample=0.8)
pipeline = Pipeline(steps=[('ColumnTransformer',transform), ('Model',model)])
m_scores = cross_val_score(pipeline, X, y, scoring='neg_mean_absolute_error', cv=cv, n_jobs=-1, error_score='raise')
m_scores = absolute(m_scores)
print('Good: %.3f (%.3f)' % (mean(m_scores), std(m_scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example, you should see the following results.

```
Shape: (201, 25), (201,)
Baseline: 5509.486 (1440.942)
Good: 1361.965 (290.236)
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Articles

- UCI Machine Learning Repository

- Statlog Datasets: comparison of results, Włodzisław Duch.

- Datasets used for classification: comparison of results, Włodzisław Duch.

- Machine Learning, Neural and Statistical Classification, 1994.

- Machine Learning, Neural and Statistical Classification, Homepage, 1994.

- Dataset loading utilities, scikit-learn.

Summary

In this post, you discovered standard machine learning datasets for classification and regression and the baseline and good results that one may expect to achieve on each.

Specifically, you learned:

- The importance of standard machine learning datasets.

- How to systematically evaluate a model on a standard machine learning dataset.

- Standard datasets for classification and regression and the baseline and good performance expected on each.

Did I miss your favorite dataset?

Let me know in the comments and I will calculate a score for it, or perhaps even add it to this post.

Can you get a better score for a dataset?

I would love to know; share your model and score in the comments below and I will try to reproduce it and update the post (and give you full credit!)

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Changing the C parameter to 20 for the ionosphere dataset increases the score a little bit (0.95). It is a minor increase from 0.948 to 0.950.

Should we do this, or is it overfitting? I would love to hear about that.

Should we do a grid search and try to get the best accuracy?

model = SVC(kernel='rbf', gamma='scale', C=20)

Very nice!

No, the robust test harness suggests it is probably not overfitting.

Yes, grid searching is a good idea!
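As a rough sketch of what that grid search might look like, the example below tunes C for an SVC using the same repeated stratified k-fold test harness as the post. The synthetic dataset from make_classification() and the candidate values for C are assumptions chosen for illustration; they stand in for the ionosphere data and are not tuned results.

```python
# minimal sketch: grid searching the C parameter of an SVC
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.svm import SVC

# synthetic stand-in dataset (an assumption, not the ionosphere data)
X, y = make_classification(n_samples=200, n_features=10, n_informative=5, random_state=1)

# define the model and the candidate values for C (illustrative choices)
model = SVC(kernel='rbf', gamma='scale')
grid = {'C': [0.1, 1, 10, 20]}

# use the same style of test harness as the post
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
search = GridSearchCV(model, grid, scoring='accuracy', cv=cv)
search.fit(X, y)
print('Best: %.3f using %s' % (search.best_score_, search.best_params_))
```

Small differences between configurations, such as 0.948 versus 0.950, are often within the standard deviation of the cross-validation scores, so it can help to compare the spread of the scores as well as the mean.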

Why should we use these code lines?

# minimally prepare dataset

X = X.astype('float32')

y = LabelEncoder().fit_transform(y.astype('str'))

Also, it looks like all these datasets require regular techniques (encoding, hyperparameter tuning, imputation), but there is no need for any feature engineering. Do you believe this conclusion holds for most datasets (e.g. Kaggle's datasets)?

Thanks in advance,

Moshik

It forces inputs to be float (in case they were ints) and forces label encoding of class labels – regardless of their format.
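The effect of those two lines can be seen in the small sketch below. The arrays are made up for illustration and mimic what dataframe.values can return when a file mixes numbers and strings (an object array).

```python
# minimal sketch: what the "minimally prepare dataset" lines do
import numpy as np
from sklearn.preprocessing import LabelEncoder

# made-up data (illustrative only): integer inputs and string class labels
X = np.array([[1, 2], [3, 4], [5, 6]], dtype=object)
y = np.array(['g', 'b', 'g'], dtype=object)

# force the inputs to be floating point values
X = X.astype('float32')
# force the labels to strings, then encode them as integers 0, 1, ...
y_enc = LabelEncoder().fit_transform(y.astype('str'))
print(X.dtype, y_enc)
```

LabelEncoder assigns integers in sorted order of the class labels, so 'b' becomes 0 and 'g' becomes 1 in this example.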

Not in all cases, but you can get “good” results very quickly by pushing the complexity of the solution to the model rather than the data prep.

Hi, I noticed a small typo in the link to the housing dataset (it refers to the wine data).

Correct link is as follows:

https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.csv

I would also like to take this opportunity to ask whether you know where I can find more complex problems with the best solution obtained to date, along with the possibility of downloading exactly the same dataset that was used.

A kind of state of the art but with the dataset used for training their model.

Thanks,

Gioel

Thanks, fixed!

Perhaps Kaggle?

For categorical variables can we use SimpleImputer before LabelEncoder?

If we do not convert the categorical to numbers is imputation possible?

I tried to run the code below, but it gives me errors. Could you please help me out? I would be much obliged.

numerical_x = reduced_df.select_dtypes(include=['int64', 'float64']).columns

categorical_x = reduced_df.select_dtypes(include=['object', 'bool']).columns

steps = [('c', Pipeline(steps=[('catimp',SimpleImputer(strategy='most_frequent'),

('ohe',OneHotEncoder(handle_unknown='ignore')),)]), categorical_x),

('n', Pipeline(steps=[('numimp',SimpleImputer(strategy='median')),('sts',StandardScaler())]), numerical_x)]

col_transform = ColumnTransformer(transformers=steps,remainder='passthrough')

dt= DecisionTreeClassifier()

pl= Pipeline(steps=[('prep',col_transform), ('dt', dt)])

pl.fit(reduced_df,y_train)

ERROR:

fit_params_steps = {name: {} for name, step in self.steps

ValueError: too many values to unpack (expected 2)

Yes.

You can also treat missing values as a label and encode them.
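The ValueError in the snippet above is consistent with a misplaced parenthesis: the 'catimp' and 'ohe' entries end up inside a single three-element tuple, whereas each Pipeline step must be a (name, transform) pair. The sketch below shows one way the steps could be written so that each is a two-element tuple. The toy DataFrame, its column names, and the labels are hypothetical and stand in for reduced_df and y_train.

```python
# minimal sketch: impute then encode categorical columns in a pipeline
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# hypothetical toy data with missing values in both column types
df = pd.DataFrame({
    'color': pd.Series(['red', 'blue', np.nan, 'red', 'blue', 'red'], dtype=object),
    'size': [1.0, 2.0, 3.0, np.nan, 5.0, 6.0],
})
y = np.array([0, 1, 0, 0, 1, 0])
categorical_x = ['color']
numerical_x = ['size']

# each step is a (name, transform) pair; imputation runs before encoding
cat_steps = Pipeline(steps=[
    ('catimp', SimpleImputer(strategy='most_frequent')),
    ('ohe', OneHotEncoder(handle_unknown='ignore')),
])
num_steps = Pipeline(steps=[
    ('numimp', SimpleImputer(strategy='median')),
    ('sts', StandardScaler()),
])
col_transform = ColumnTransformer(
    transformers=[('c', cat_steps, categorical_x), ('n', num_steps, numerical_x)],
    remainder='passthrough')

# fit the full pipeline and make predictions
pl = Pipeline(steps=[('prep', col_transform), ('dt', DecisionTreeClassifier(random_state=1))])
pl.fit(df, y)
yhat = pl.predict(df)
print(yhat)
```

To treat missing values as their own label instead, SimpleImputer(strategy='constant', fill_value='missing') could be swapped in for the most-frequent imputer in the categorical steps.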

Hi Jason!

For the horse-colic dataset with SimpleImputer(strategy='median'), it seems that XGBClassifier gives slightly better accuracy; the model is below.

For all the other classification datasets, the results with XGBoost are either the same or extremely close, except for Ionosphere, where I tried almost everything but couldn't exceed 93.8%.

Horse-colic:

Accuracy: 89.22% (5.94%)

XGBClassifier(base_score=None, booster=None, colsample_bylevel=0.9,

colsample_bynode=None, colsample_bytree=0.9, gamma=None,

gpu_id=None, importance_type='gain', interaction_constraints=None,

learning_rate=0.01, max_delta_step=None, max_depth=4,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=200, n_jobs=None, num_parallel_tree=None,

random_state=None, reg_alpha=0.1, reg_lambda=0.5,

scale_pos_weight=None, subsample=1.0, tree_method=None,

validate_parameters=None, verbosity=None)

Very nice work! Thank you for sharing your findings.

I have updated the example and give you full credit.

Hi Jason,

I've found some small improvements for the regression datasets, using XGBoost and hyperparameter tuning with GridSearchCV (7 parameters) and cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)!

1. Housing: Result: 1.917 (0.297)

XGBRegressor(base_score=None, booster=None, colsample_bylevel=0.4,

colsample_bynode=0.6, colsample_bytree=1.0, gamma=None,

gpu_id=None, importance_type='gain', interaction_constraints=None,

learning_rate=0.06, max_delta_step=None, max_depth=5,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=700, n_jobs=None, num_parallel_tree=None,

random_state=None, reg_alpha=0.0, reg_lambda=1.0,

scale_pos_weight=None, subsample=0.8, tree_method=None,

validate_parameters=None, verbosity=None)

2. auto_imports:

- NaNs: SimpleImputer(strategy='most_frequent')

- Encoder for categorical: LabelEncoder()

- One Hot Encoding for categorical: pd.get_dummies(…., drop_first = True)

Result: 1376.289 (304.522)

XGBRegressor(base_score=None, booster=None, colsample_bylevel=0.2,

colsample_bynode=1.0, colsample_bytree=0.6, gamma=None,

gpu_id=None, importance_type='gain', interaction_constraints=None,

learning_rate=0.05, max_delta_step=None, max_depth=6,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=200, n_jobs=None, num_parallel_tree=None,

random_state=None, reg_alpha=0.0, reg_lambda=1.0,

scale_pos_weight=None, subsample=0.8, tree_method=None,

validate_parameters=None, verbosity=None)

kind regards,

Dragos

Very cool, well done!

Update: Added to the post.

Hello,

For sonar.csv, I got Good: 0.899 (0.068) when I used SVC instead of KNeighborsClassifier, which gave Good: 0.893 (0.049).

I have no clue what model I have to use for a particular case or type of data. Could you please explain or point to a resource that does?

Thanks,

Anand