The key to getting good at applied machine learning is practicing on lots of different datasets.

This is because each problem is different, requiring subtly different data preparation and modeling methods.

In this post, you will discover 10 top standard machine learning datasets that you can use for practice.

Let’s dive in.

- Update Mar/2018: Added alternate link to download the Pima Indians and Boston Housing datasets as the originals appear to have been taken down.

- Update Feb/2019: Minor update to the expected default RMSE for the insurance dataset.

- Update Oct/2021: Minor update to the description of wheat seed dataset.

Overview

A Structured Approach

Each dataset is summarized in a consistent way. This makes the datasets easy to compare and lets you quickly find one suited to practicing a specific data preparation technique or modeling method.

The aspects that you need to know about each dataset are:

- Name: How to refer to the dataset.

- Problem Type: Whether the problem is regression or classification.

- Inputs and Outputs: The number and known names of input and output features.

- Performance: Baseline performance for comparison using the Zero Rule algorithm, as well as best known performance (if known).

- Sample: A snapshot of the first 5 rows of raw data.

- Links: Where you can download the dataset and learn more.
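The Zero Rule baselines quoted for each dataset can be reproduced along the following lines. This is a minimal sketch in plain Python, not the code used to produce the numbers in this post: the function names are illustrative, the toy values are made up, and for brevity the baseline is scored on the same values it was fit on rather than on a held-out split.

```python
from collections import Counter
from math import sqrt


def zero_rule_classification(train_labels, test_labels):
    """Predict the most common training label for every test row; return accuracy."""
    majority = Counter(train_labels).most_common(1)[0][0]
    correct = sum(1 for label in test_labels if label == majority)
    return correct / len(test_labels)


def zero_rule_regression(train_targets, test_targets):
    """Predict the training mean for every test row; return the RMSE."""
    mean = sum(train_targets) / len(train_targets)
    squared_errors = [(y - mean) ** 2 for y in test_targets]
    return sqrt(sum(squared_errors) / len(test_targets))


# Toy values for illustration only (not taken from any dataset in this post).
labels = ["a", "a", "a", "b"]
targets = [1.0, 2.0, 3.0, 6.0]

print(zero_rule_classification(labels, labels))  # 0.75
print(zero_rule_regression(targets, targets))    # square root of 3.5
```

Scores obtained with a resampling scheme such as k-fold cross-validation will usually differ a little from this same-data calculation.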

Standard Datasets

Below is a list of the 10 datasets we’ll cover.

Each dataset is small enough to fit into memory and to review in a spreadsheet. All datasets consist of tabular data and have no (explicitly) missing values.

- Swedish Auto Insurance Dataset.

- Wine Quality Dataset.

- Pima Indians Diabetes Dataset.

- Sonar Dataset.

- Banknote Dataset.

- Iris Flowers Dataset.

- Abalone Dataset.

- Ionosphere Dataset.

- Wheat Seeds Dataset.

- Boston House Price Dataset.

1. Swedish Auto Insurance Dataset

The Swedish Auto Insurance Dataset involves predicting the total payment for all claims in thousands of Swedish Kronor, given the total number of claims.

It is a regression problem. It comprises 63 observations with 1 input variable and 1 output variable. The variable names are as follows:

- Number of claims.

- Total payment for all claims in thousands of Swedish Kronor.

The baseline performance of predicting the mean value is an RMSE of approximately 81 thousand Kronor.

A sample of the first 5 rows is listed below.

    108,392.5
    19,46.2
    13,15.7
    124,422.2
    40,119.4

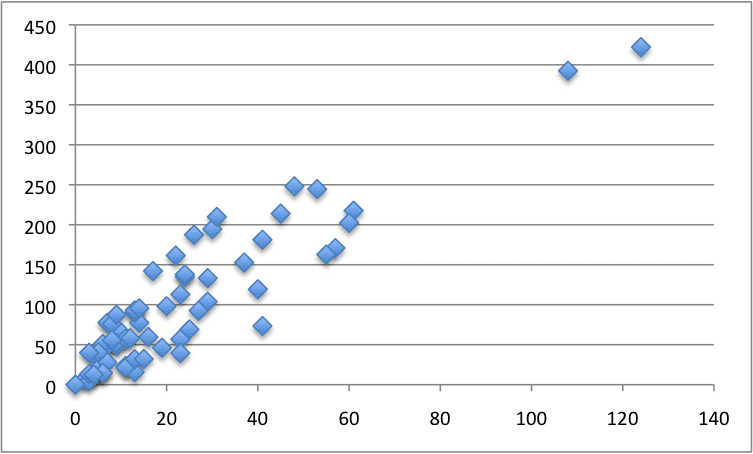

Below is a scatter plot of the entire dataset.

Swedish Auto Insurance Dataset
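With a single input, this dataset is a natural fit for simple linear regression. The sketch below fits a least-squares line to only the five sample rows listed in this section, so the coefficients are illustrative and will differ from a fit on all 63 observations. The variable and function names are assumptions made for the example.

```python
# The five sample rows from this section: (number of claims, total payment).
rows = [(108, 392.5), (19, 46.2), (13, 15.7), (124, 422.2), (40, 119.4)]

xs = [x for x, _ in rows]
ys = [y for _, y in rows]
mean_x = sum(xs) / len(xs)
mean_y = sum(ys) / len(ys)

# Ordinary least squares for one input: slope = covariance(x, y) / variance(x).
covariance = sum((x - mean_x) * (y - mean_y) for x, y in rows)
variance = sum((x - mean_x) ** 2 for x in xs)
slope = covariance / variance
intercept = mean_y - slope * mean_x


def predict(claims):
    """Predicted total payment (thousands of Kronor) for a number of claims."""
    return intercept + slope * claims


print(f"payment = {intercept:.2f} + {slope:.2f} * claims")
```

To judge the model fairly, compare its RMSE on held-out rows against the mean-prediction baseline quoted above.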

2. Wine Quality Dataset

The Wine Quality Dataset involves predicting the quality of white wines on a scale given chemical measures of each wine.

It is a multi-class classification problem, but could also be framed as a regression problem. The number of observations for each class is not balanced. There are 4,898 observations with 11 input variables and one output variable. The variable names are as follows:

- Fixed acidity.

- Volatile acidity.

- Citric acid.

- Residual sugar.

- Chlorides.

- Free sulfur dioxide.

- Total sulfur dioxide.

- Density.

- pH.

- Sulphates.

- Alcohol.

- Quality (score between 0 and 10).

The baseline performance of predicting the mean value is an RMSE of approximately 0.148 quality points.

A sample of the first 5 rows is listed below.

    7,0.27,0.36,20.7,0.045,45,170,1.001,3,0.45,8.8,6
    6.3,0.3,0.34,1.6,0.049,14,132,0.994,3.3,0.49,9.5,6
    8.1,0.28,0.4,6.9,0.05,30,97,0.9951,3.26,0.44,10.1,6
    7.2,0.23,0.32,8.5,0.058,47,186,0.9956,3.19,0.4,9.9,6
    7.2,0.23,0.32,8.5,0.058,47,186,0.9956,3.19,0.4,9.9,6

3. Pima Indians Diabetes Dataset

The Pima Indians Diabetes Dataset involves predicting the onset of diabetes within 5 years in Pima Indians given medical details.

It is a binary (2-class) classification problem. The number of observations for each class is not balanced. There are 768 observations with 8 input variables and 1 output variable. Missing values are believed to be encoded with zero values. The variable names are as follows:

- Number of times pregnant.

- Plasma glucose concentration at 2 hours in an oral glucose tolerance test.

- Diastolic blood pressure (mm Hg).

- Triceps skinfold thickness (mm).

- 2-Hour serum insulin (mu U/ml).

- Body mass index (weight in kg/(height in m)^2).

- Diabetes pedigree function.

- Age (years).

- Class variable (0 or 1).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 65%. Top results achieve a classification accuracy of approximately 77%.

A sample of the first 5 rows is listed below.

    6,148,72,35,0,33.6,0.627,50,1
    1,85,66,29,0,26.6,0.351,31,0
    8,183,64,0,0,23.3,0.672,32,1
    1,89,66,23,94,28.1,0.167,21,0
    0,137,40,35,168,43.1,2.288,33,1
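Because missing values in this dataset are believed to be encoded as zeros, a common first preparation step is to mark them explicitly. The sketch below does this for the five sample rows in this section using only the standard library. Which columns treat zero as "missing" is an assumption made here (glucose, blood pressure, skinfold thickness, insulin, and BMI), not something stated by the dataset itself.

```python
import csv
import io

# The five sample rows from this section, in the column order listed above.
sample = """6,148,72,35,0,33.6,0.627,50,1
1,85,66,29,0,26.6,0.351,31,0
8,183,64,0,0,23.3,0.672,32,1
1,89,66,23,94,28.1,0.167,21,0
0,137,40,35,168,43.1,2.288,33,1
"""

# Assumption: zero is implausible only for these column indexes
# (glucose, blood pressure, skinfold thickness, insulin, BMI).
# Zero pregnancies and class 0 are legitimate values and are left alone.
ZERO_MEANS_MISSING = {1, 2, 3, 4, 5}

rows = []
for record in csv.reader(io.StringIO(sample)):
    values = [float(v) for v in record]
    cleaned = [None if (i in ZERO_MEANS_MISSING and v == 0) else v
               for i, v in enumerate(values)]
    rows.append(cleaned)

# Count the values marked as missing in each of the 9 columns.
missing_per_column = [sum(row[i] is None for row in rows) for i in range(9)]
print(missing_per_column)  # [0, 0, 0, 1, 3, 0, 0, 0, 0]
```

Once marked, the missing entries can be imputed or the affected rows dropped, and the effect on model accuracy compared against the baseline.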

4. Sonar Dataset

The Sonar Dataset involves predicting whether an object is a mine or a rock given the strength of sonar returns at different angles.

It is a binary (2-class) classification problem. The number of observations for each class is not balanced. There are 208 observations with 60 input variables and 1 output variable. The variable names are as follows:

- Sonar returns at different angles.

- …

- Class (M for mine and R for rock).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 53%. Top results achieve a classification accuracy of approximately 88%.

A sample of the first 5 rows is listed below.

    0.0200,0.0371,0.0428,0.0207,0.0954,0.0986,0.1539,0.1601,0.3109,0.2111,0.1609,0.1582,0.2238,0.0645,0.0660,0.2273,0.3100,0.2999,0.5078,0.4797,0.5783,0.5071,0.4328,0.5550,0.6711,0.6415,0.7104,0.8080,0.6791,0.3857,0.1307,0.2604,0.5121,0.7547,0.8537,0.8507,0.6692,0.6097,0.4943,0.2744,0.0510,0.2834,0.2825,0.4256,0.2641,0.1386,0.1051,0.1343,0.0383,0.0324,0.0232,0.0027,0.0065,0.0159,0.0072,0.0167,0.0180,0.0084,0.0090,0.0032,R
    0.0453,0.0523,0.0843,0.0689,0.1183,0.2583,0.2156,0.3481,0.3337,0.2872,0.4918,0.6552,0.6919,0.7797,0.7464,0.9444,1.0000,0.8874,0.8024,0.7818,0.5212,0.4052,0.3957,0.3914,0.3250,0.3200,0.3271,0.2767,0.4423,0.2028,0.3788,0.2947,0.1984,0.2341,0.1306,0.4182,0.3835,0.1057,0.1840,0.1970,0.1674,0.0583,0.1401,0.1628,0.0621,0.0203,0.0530,0.0742,0.0409,0.0061,0.0125,0.0084,0.0089,0.0048,0.0094,0.0191,0.0140,0.0049,0.0052,0.0044,R
    0.0262,0.0582,0.1099,0.1083,0.0974,0.2280,0.2431,0.3771,0.5598,0.6194,0.6333,0.7060,0.5544,0.5320,0.6479,0.6931,0.6759,0.7551,0.8929,0.8619,0.7974,0.6737,0.4293,0.3648,0.5331,0.2413,0.5070,0.8533,0.6036,0.8514,0.8512,0.5045,0.1862,0.2709,0.4232,0.3043,0.6116,0.6756,0.5375,0.4719,0.4647,0.2587,0.2129,0.2222,0.2111,0.0176,0.1348,0.0744,0.0130,0.0106,0.0033,0.0232,0.0166,0.0095,0.0180,0.0244,0.0316,0.0164,0.0095,0.0078,R
    0.0100,0.0171,0.0623,0.0205,0.0205,0.0368,0.1098,0.1276,0.0598,0.1264,0.0881,0.1992,0.0184,0.2261,0.1729,0.2131,0.0693,0.2281,0.4060,0.3973,0.2741,0.3690,0.5556,0.4846,0.3140,0.5334,0.5256,0.2520,0.2090,0.3559,0.6260,0.7340,0.6120,0.3497,0.3953,0.3012,0.5408,0.8814,0.9857,0.9167,0.6121,0.5006,0.3210,0.3202,0.4295,0.3654,0.2655,0.1576,0.0681,0.0294,0.0241,0.0121,0.0036,0.0150,0.0085,0.0073,0.0050,0.0044,0.0040,0.0117,R
    0.0762,0.0666,0.0481,0.0394,0.0590,0.0649,0.1209,0.2467,0.3564,0.4459,0.4152,0.3952,0.4256,0.4135,0.4528,0.5326,0.7306,0.6193,0.2032,0.4636,0.4148,0.4292,0.5730,0.5399,0.3161,0.2285,0.6995,1.0000,0.7262,0.4724,0.5103,0.5459,0.2881,0.0981,0.1951,0.4181,0.4604,0.3217,0.2828,0.2430,0.1979,0.2444,0.1847,0.0841,0.0692,0.0528,0.0357,0.0085,0.0230,0.0046,0.0156,0.0031,0.0054,0.0105,0.0110,0.0015,0.0072,0.0048,0.0107,0.0094,R

5. Banknote Dataset

The Banknote Dataset involves predicting whether a given banknote is authentic given a number of measures taken from a photograph.

It is a binary (2-class) classification problem. The number of observations for each class is not balanced. There are 1,372 observations with 4 input variables and 1 output variable. The variable names are as follows:

- Variance of Wavelet Transformed image (continuous).

- Skewness of Wavelet Transformed image (continuous).

- Kurtosis of Wavelet Transformed image (continuous).

- Entropy of image (continuous).

- Class (0 for authentic, 1 for inauthentic).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 50%.

A sample of the first 6 rows is listed below.

    3.6216,8.6661,-2.8073,-0.44699,0
    4.5459,8.1674,-2.4586,-1.4621,0
    3.866,-2.6383,1.9242,0.10645,0
    3.4566,9.5228,-4.0112,-3.5944,0
    0.32924,-4.4552,4.5718,-0.9888,0
    4.3684,9.6718,-3.9606,-3.1625,0

6. Iris Flowers Dataset

The Iris Flowers Dataset involves predicting the flower species given measurements of iris flowers.

It is a multi-class classification problem. The number of observations for each class is balanced. There are 150 observations with 4 input variables and 1 output variable. The variable names are as follows:

- Sepal length in cm.

- Sepal width in cm.

- Petal length in cm.

- Petal width in cm.

- Class (Iris Setosa, Iris Versicolour, Iris Virginica).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 26%.

A sample of the first 5 rows is listed below.

    5.1,3.5,1.4,0.2,Iris-setosa
    4.9,3.0,1.4,0.2,Iris-setosa
    4.7,3.2,1.3,0.2,Iris-setosa
    4.6,3.1,1.5,0.2,Iris-setosa
    5.0,3.6,1.4,0.2,Iris-setosa

7. Abalone Dataset

The Abalone Dataset involves predicting the age of abalone given objective measures of individuals.

It is a multi-class classification problem, but can also be framed as a regression problem. The number of observations for each class is not balanced. There are 4,177 observations with 8 input variables and 1 output variable. The variable names are as follows:

- Sex (M, F, I).

- Length.

- Diameter.

- Height.

- Whole weight.

- Shucked weight.

- Viscera weight.

- Shell weight.

- Rings.

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 16%. The baseline performance of predicting the mean value is an RMSE of approximately 3.2 rings.

A sample of the first 5 rows is listed below.

    M,0.455,0.365,0.095,0.514,0.2245,0.101,0.15,15
    M,0.35,0.265,0.09,0.2255,0.0995,0.0485,0.07,7
    F,0.53,0.42,0.135,0.677,0.2565,0.1415,0.21,9
    M,0.44,0.365,0.125,0.516,0.2155,0.114,0.155,10
    I,0.33,0.255,0.08,0.205,0.0895,0.0395,0.055,7
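Unlike the other datasets here, Abalone has a categorical input (Sex), which most algorithms need converted to numbers. The sketch below one-hot encodes it for the five sample rows in this section. The helper name `encode` and the category order are assumptions made for the example.

```python
# The five sample rows from this section.
sample = [
    "M,0.455,0.365,0.095,0.514,0.2245,0.101,0.15,15",
    "M,0.35,0.265,0.09,0.2255,0.0995,0.0485,0.07,7",
    "F,0.53,0.42,0.135,0.677,0.2565,0.1415,0.21,9",
    "M,0.44,0.365,0.125,0.516,0.2155,0.114,0.155,10",
    "I,0.33,0.255,0.08,0.205,0.0895,0.0395,0.055,7",
]

# Assumed column order for the one-hot encoding of Sex.
SEX_CATEGORIES = ["M", "F", "I"]


def encode(line):
    """Return (features, rings) with Sex replaced by three 0/1 columns."""
    fields = line.split(",")
    sex, measurements, rings = fields[0], fields[1:-1], int(fields[-1])
    one_hot = [1.0 if sex == category else 0.0 for category in SEX_CATEGORIES]
    return one_hot + [float(v) for v in measurements], rings


encoded = [encode(line) for line in sample]
features, rings = encoded[0]
print(len(features), rings)  # 10 15

# The UCI description of the dataset states that rings + 1.5 gives the age in years.
print(rings + 1.5)  # 16.5
```

Treating Rings as a number gives the regression framing, while treating each distinct ring count as a label gives the multi-class framing.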

8. Ionosphere Dataset

The Ionosphere Dataset requires the prediction of structure in the atmosphere given radar returns targeting free electrons in the ionosphere.

It is a binary (2-class) classification problem. The number of observations for each class is not balanced. There are 351 observations with 34 input variables and 1 output variable. The variable names are as follows:

- 17 pairs of radar return data.

- …

- Class (g for good and b for bad).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 64%. Top results achieve a classification accuracy of approximately 94%.

A sample of the first 5 rows is listed below.

    1,0,0.99539,-0.05889,0.85243,0.02306,0.83398,-0.37708,1,0.03760,0.85243,-0.17755,0.59755,-0.44945,0.60536,-0.38223,0.84356,-0.38542,0.58212,-0.32192,0.56971,-0.29674,0.36946,-0.47357,0.56811,-0.51171,0.41078,-0.46168,0.21266,-0.34090,0.42267,-0.54487,0.18641,-0.45300,g
    1,0,1,-0.18829,0.93035,-0.36156,-0.10868,-0.93597,1,-0.04549,0.50874,-0.67743,0.34432,-0.69707,-0.51685,-0.97515,0.05499,-0.62237,0.33109,-1,-0.13151,-0.45300,-0.18056,-0.35734,-0.20332,-0.26569,-0.20468,-0.18401,-0.19040,-0.11593,-0.16626,-0.06288,-0.13738,-0.02447,b
    1,0,1,-0.03365,1,0.00485,1,-0.12062,0.88965,0.01198,0.73082,0.05346,0.85443,0.00827,0.54591,0.00299,0.83775,-0.13644,0.75535,-0.08540,0.70887,-0.27502,0.43385,-0.12062,0.57528,-0.40220,0.58984,-0.22145,0.43100,-0.17365,0.60436,-0.24180,0.56045,-0.38238,g
    1,0,1,-0.45161,1,1,0.71216,-1,0,0,0,0,0,0,-1,0.14516,0.54094,-0.39330,-1,-0.54467,-0.69975,1,0,0,1,0.90695,0.51613,1,1,-0.20099,0.25682,1,-0.32382,1,b
    1,0,1,-0.02401,0.94140,0.06531,0.92106,-0.23255,0.77152,-0.16399,0.52798,-0.20275,0.56409,-0.00712,0.34395,-0.27457,0.52940,-0.21780,0.45107,-0.17813,0.05982,-0.35575,0.02309,-0.52879,0.03286,-0.65158,0.13290,-0.53206,0.02431,-0.62197,-0.05707,-0.59573,-0.04608,-0.65697,g

9. Wheat Seeds Dataset

The Wheat Seeds Dataset involves the prediction of species given measurements of seeds from different varieties of wheat.

It is a multiclass (3-class) classification problem. The number of observations for each class is balanced. There are 210 observations with 7 input variables and 1 output variable. The variable names are as follows:

- Area.

- Perimeter.

- Compactness.

- Length of kernel.

- Width of kernel.

- Asymmetry coefficient.

- Length of kernel groove.

- Class (1, 2, 3).

The baseline performance of predicting the most prevalent class is a classification accuracy of approximately 28%.

A sample of the first 5 rows is listed below.

    15.26,14.84,0.871,5.763,3.312,2.221,5.22,1
    14.88,14.57,0.8811,5.554,3.333,1.018,4.956,1
    14.29,14.09,0.905,5.291,3.337,2.699,4.825,1
    13.84,13.94,0.8955,5.324,3.379,2.259,4.805,1
    16.14,14.99,0.9034,5.658,3.562,1.355,5.175,1

10. Boston House Price Dataset

The Boston House Price Dataset involves the prediction of a house price in thousands of dollars given details of the house and its neighborhood.

It is a regression problem. There are 506 observations with 13 input variables and 1 output variable. The variable names are as follows:

- CRIM: per capita crime rate by town.

- ZN: proportion of residential land zoned for lots over 25,000 sq.ft.

- INDUS: proportion of nonretail business acres per town.

- CHAS: Charles River dummy variable (= 1 if tract bounds river; 0 otherwise).

- NOX: nitric oxides concentration (parts per 10 million).

- RM: average number of rooms per dwelling.

- AGE: proportion of owner-occupied units built prior to 1940.

- DIS: weighted distances to five Boston employment centers.

- RAD: index of accessibility to radial highways.

- TAX: full-value property-tax rate per $10,000.

- PTRATIO: pupil-teacher ratio by town.

- B: 1000(Bk – 0.63)^2 where Bk is the proportion of blacks by town.

- LSTAT: % lower status of the population.

- MEDV: Median value of owner-occupied homes in $1000s.

The baseline performance of predicting the mean value is an RMSE of approximately 9.21 thousand dollars.

A sample of the first 5 rows is listed below.

    0.00632 18.00 2.310 0 0.5380 6.5750 65.20 4.0900 1 296.0 15.30 396.90 4.98 24.00
    0.02731 0.00 7.070 0 0.4690 6.4210 78.90 4.9671 2 242.0 17.80 396.90 9.14 21.60
    0.02729 0.00 7.070 0 0.4690 7.1850 61.10 4.9671 2 242.0 17.80 392.83 4.03 34.70
    0.03237 0.00 2.180 0 0.4580 6.9980 45.80 6.0622 3 222.0 18.70 394.63 2.94 33.40
    0.06905 0.00 2.180 0 0.4580 7.1470 54.20 6.0622 3 222.0 18.70 396.90 5.33 36.20

- Download (update: download from here)

- More Information

Summary

In this post, you discovered 10 top standard datasets that you can use to practice applied machine learning.

Here is your next step:

- Pick one dataset.

- Grab your favorite tool (like Weka, scikit-learn or R).

- See how much you can beat the standard scores.

- Report your results in the comments below.

Thanks Jason. I will use these Datasets for practice.

Let me know how you go Benson.

Thanks. Please mention some datasets that have more than one output variable.

The format of the Swedish Auto Insurance data has changed. It is no longer in CSV format, and there are extra rows at the beginning of the data.

You can copy and paste the data from this page into a file, load it in Excel, then convert it to CSV:

https://www.math.muni.cz/~kolacek/docs/frvs/M7222/data/AutoInsurSweden.txt

Your posts have been a big help. Could you recommend a dataset that I can use to practice clustering and PCA on?

Thanks.

Perhaps something where all features have the same units, like the iris flowers dataset?

Hello, in reference to the Swedish auto data, is it not possible to use Scikit-Learn to perform linear regression? I get deprecation errors that request that I reshape the data. When I reshape, I get the error that the samples are different sizes. What am I missing please. Thank you.

Sorry, I don’t know Joe. Perhaps try posting your code and errors to stackoverflow?

Sir, for the wheat dataset I got a result like this:

    0.97619047619

    [[ 9  0  1]
     [ 0 20  0]
     [ 0  0 12]]

                 precision    recall  f1-score   support
            1.0       1.00      0.90      0.95        10
            2.0       1.00      1.00      1.00        20
            3.0       0.92      1.00      0.96        12
    avg / total       0.98      0.98      0.98        42

is it correct sir?

What do you mean by correct?

Sir, the confusion matrix and the accuracy I got, are they acceptable? Is that right?

It really depends on the problem. Sorry, I don’t know the problem well enough, perhaps compare it to the confusion matrix of other algorithms.

Thank you sir

I will do it

Thank you very much.

You’re welcome.

Thanks for the datasets, they are going to help me as I learn ML.

You’re welcome.

What is the difference between numeric and clinical cancer? Or are both the same? I need leukemia, lung, and colon datasets for my work. I also need an image dataset for my research. Where can I get the datasets?

Perhaps try a google search?

Thanks a lot for sharing Jason!

I applied sklearn random forest and SVM classifiers to the wheat seed dataset in my very first Python notebook! 😀 The error oscillates between 10% and 20% from one execution to another. I can share it if anyone is interested.

Bye

Nice work!

I’m interested in the SVM classifier for the wheat seed dataset. You said you’re happy to share.

for sonar dataset got 90.47% accuracy

Well done!

Thanks Jason

I tried decision tree classifier with 70% training and 30% testing on Banknote dataset.

Achieved accuracy of 99%.

[Accuracy: 0.9902912621359223]

Well done!

used k- nearest neighbors classifier with 75% training & 25% testing on the iris data set. Achieved 0.973684 accuracy.

Well done!

used k- nearest neighbors classifier with 75% training & 25% testing on the iris data set. Achieved 0.9970845481049563 accuracy.

99.71%

Excellent.

Do you have any of these solved that I can reference back to?

Yes, I have solutions to most of them on the blog, you can try a blog search.

Hi, I used Support Vector Classifier and KNN classifier on the Wheat Seeds Dataset (80% train data, 20% test data )

Accuracy Score of SVC : 0.9047619047619048

Accuracy Score of KNN : 0.8809523809523809

Well done!

Hiya! Found some incredible topological trends in Iris that I am looking to replicate in another multi-class problem.

Are people typically classifying the gender of the species, or the ring number as a discrete output?

In the Abalone dataset*

The age is the target on that dataset, but you can frame any predictive modeling problem you like with the dataset for practice.

Hi sir, I am looking for datasets on wheat production to use with the SVM regression algorithm. Please suggest suitable datasets for machine learning.

Search for datasets here:

https://machinelearningmastery.com/faq/single-faq/where-can-i-get-a-dataset-on-___

Hi guys, I am new to ML.

Thanks for this set of data!

Has anyone beaten the wine quality problem?

My results are so bad.

Thanks

I did, see this:

https://machinelearningmastery.com/results-for-standard-classification-and-regression-machine-learning-datasets/

I need a dataset that:

- Contains at least 5 dimensions/features, including at least one categorical and one numerical dimension.

- Contains a clear class label attribute (binary or multi-label).

- Has a simple tabular structure (i.e., no time series, multimedia, etc.).

- Is of reasonable size, with at least 2K tuples.

This might help:

https://machinelearningmastery.com/faq/single-faq/where-can-i-get-a-dataset-on-___

Also this:

https://machinelearningmastery.com/generate-test-datasets-python-scikit-learn/

Where can I find the default result for the problems so I can compare with my result?

This has many of them:

https://machinelearningmastery.com/results-for-standard-classification-and-regression-machine-learning-datasets/

Thanks for the post, it is very helpful!

I would like to know if anyone knows of a classification dataset where the importance of each feature for the output classes is known. For example: Feature 1 is a good indicator for class 1, or Features 3, 4, and 5 are good indicators for class 2, …

Hope someone can help 😉

You’re welcome.

Generally, we let the model discover the importance and how best to use input features.

Thank you very much for your answer. I was asking because I want to validate my approach to assessing feature importance via global sensitivity analysis (Sobol indices). To do so, I am searching for a dataset (or a dummy dataset) with the described properties.

What are “Sobol Indices”?

It’s a variance-based global sensitivity analysis (ANOVA). It is quite similar to permutation-importance ranking but can reveal cross-correlations of features by calculating the so-called “total effect index”. If you are interested in the topic, I can recommend the following paper:

https://www.researchgate.net/publication/306326267_Global_Sensitivity_Estimates_for_Neural_Network_Classifiers

Some Python code for straightforward calculation of sobol indices is provided here:

https://salib.readthedocs.io/en/latest/api.html#sobol-sensitivity-analysis

Coming back to my first question: Do you know about a dataset with those properties or do you have any idea how I can build up a dummy dataset with known feature importance for each output?

Thanks.

Yes, you can contrive a dataset with relevant/irrelevant inputs via the make_classification() function. I use it all the time.

Beyond that, you will have to contrive your own problem I would expect. Feature importance is not objective!

Day 4 lesson

Code:

    import pandas as pd
    url = "https://goo.gl/bDdBiA"
    names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
    data = pd.read_csv(url, names=names)
    description = data.describe()
    print(description)

Output:

                 preg        plas        pres        skin        test        mass        pedi         age       class
    count  768.000000  768.000000  768.000000  768.000000  768.000000  768.000000  768.000000  768.000000  768.000000
    mean     3.845052  120.894531   69.105469   20.536458   79.799479   31.992578    0.471876   33.240885    0.348958
    std      3.369578   31.972618   19.355807   15.952218  115.244002    7.884160    0.331329   11.760232    0.476951
    min      0.000000    0.000000    0.000000    0.000000    0.000000    0.000000    0.078000   21.000000    0.000000
    25%      1.000000   99.000000   62.000000    0.000000    0.000000   27.300000    0.243750   24.000000    0.000000
    50%      3.000000  117.000000   72.000000   23.000000   30.500000   32.000000    0.372500   29.000000    0.000000
    75%      6.000000  140.250000   80.000000   32.000000  127.250000   36.600000    0.626250   41.000000    1.000000
    max     17.000000  199.000000  122.000000   99.000000  846.000000   67.100000    2.420000   81.000000    1.000000

The output does not fit properly in the comment section.

Well done!

Hi there,

Thanks a lot for the post!

I’m quite a beginner and there is something I’m not sure about. I’ve fit the data with a straight line (first dataset), but how do we measure accuracy?

Should we leave out some data points and use them for testing, or what?

Good question, this may help:

https://machinelearningmastery.com/regression-metrics-for-machine-learning/

Thanks, following the post, but with my own code:

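    import numpy as np

    # Y is assumed to be a 1 x n row vector holding the target values.
    mean = np.mean(Y)
    l = Y.shape[1]  # number of observations
    res = Y - mean
    rmse = np.sqrt(np.dot(res, res.T) / l)

I calculated the RMSE, and it yields:

    >>> rmse
    array([[86.63170447]])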

Also the values of wine quality have a max of 8 not 10, at least that’s what I get.

Thanks for the help.

The RMSE above is for the Swedish Kronor dataset.

Would you please tell me where 1.48 comes from for the wine dataset? I calculated the mean squared error, but it yields 0.034, using

np.sqrt(np.mean(Y)/len(Y)). Or is it sqrt(sum^m (y-y_i)*(y-y_i)/m)?

It is probably the RMSE of a model that predicts the mean value from the training dataset.

The calculation for RMSE is here:

https://machinelearningmastery.com/regression-metrics-for-machine-learning/
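For reference, the second expression in the question matches the usual definition. Written out, with $y_i$ the observed values, $\hat{y}_i$ the predictions, and $m$ the number of observations (symbols chosen here for illustration):

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2}
```

For a baseline that always predicts the mean, $\hat{y}_i = \bar{y}$ for every $i$, so when it is scored on the same data the RMSE equals the population standard deviation of the target. The first expression in the question takes the root of the mean divided by the count, which is not an error measure.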

Hi Jason,

The Wine quality dataset poses a multi-class classification problem. How could we have RMSE as a metric? Is that a mistake or am I missing something?

Hi Amit…yes the dataset can be utilized for classification, however in order to get that point the RMSE can be used to determine how accurate the predictions are based upon comparing averages of each quantity represented in the features.

With a linear model coded from scratch got

naive estimation rmse: [[86.63170447]]

model rmse: [[35.44597361]]

I’ve also done a simple visual of the model’s evolution here:

https://imgur.com/1X7h7gC

Nice work!

Thank you so much, Jason. Was really looking for these datasets for practice today.

You’re welcome.

Thanks a lot! I’ll use some of these for practice

Btw, the Wheat Seeds Dataset section says that it is a binary classification problem; however, 3 classes are given. Is this a mistake? Thank you.

Yes, that was a mistake. Thanks for pointing out.

I just found that the wine dataset used for evaluation isn’t the same as the one here:

https://machinelearningmastery.com/results-for-standard-classification-and-regression-machine-learning-datasets/#:~:text=https%3A//raw.githubusercontent.com/jbrownlee/Datasets/master/wine.csv

Thank you for the feedback Yao!