In the previous post, you learned some basic feature extraction algorithms in OpenCV. Those features are extracted by classifying pixels. They do abstract the image, because you no longer need to consider the separate color channels of each pixel, only a single value. In this post, you will learn some other feature extraction algorithms that can describe an image more concisely.

After completing this tutorial, you will know:

- What are keypoints in an image

- What are the common algorithms available in OpenCV for extracting keypoints

Kick-start your project with my book Machine Learning in OpenCV. It provides self-study tutorials with working code.

Let’s get started.

Image Feature Extraction in OpenCV: Keypoints and Description Vectors

Photo by Silas Köhler, some rights reserved.

Overview

This post is divided into two parts; they are:

- Keypoint Detection with SIFT and SURF in OpenCV

- Keypoint Detection using ORB in OpenCV

Prerequisites

For this tutorial, we assume that you are already familiar with:

Keypoint Detection with SIFT and SURF in OpenCV

Scale-Invariant Feature Transform (SIFT) and Speeded-Up Robust Features (SURF) are powerful algorithms for detecting and describing local features in images. They are called scale-invariant and robust because, compared to Harris Corner Detection, for example, their results remain predictable even after some changes to the image.

The SIFT algorithm applies Gaussian blur to the image at multiple scales and computes the differences between the blurred versions. Intuitively, such a difference will be zero if your entire image is a single flat color. Hence, this kind of algorithm is called keypoint detection: it identifies the places in the image with the most significant changes in pixel values, such as corners.
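To make this intuition concrete, here is a minimal NumPy-only sketch of a difference of Gaussians. It is not OpenCV's SIFT implementation: the helper names, the synthetic images, and the two sigma values are assumptions chosen for illustration only.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Return a normalized 1-D Gaussian kernel covering about +/- 3 sigma."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image, sigma):
    """Separable Gaussian blur; borders are handled by edge replication."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image.astype(np.float64), radius, mode="edge")
    # Blur along each row, then along each column
    rows = np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)

def difference_of_gaussians(image, sigma=1.0, ratio=1.6):
    """Subtract a lightly blurred image from a more strongly blurred one."""
    return gaussian_blur(image, ratio * sigma) - gaussian_blur(image, sigma)

# A flat gray image, and the same image with a bright square in the middle
flat = np.full((32, 32), 128, dtype=np.uint8)
square = flat.copy()
square[12:20, 12:20] = 255

dog_flat = difference_of_gaussians(flat)
dog_square = difference_of_gaussians(square)
print("max |DoG| on the flat image:", np.abs(dog_flat).max())
print("max |DoG| on the image with a square:", np.abs(dog_square).max())
```

The flat image gives a response that is zero up to floating-point error, while the edges and corners of the square produce a clear response. SIFT looks for extrema of such responses across several scales.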

The SIFT algorithm derives certain “orientation” values for each keypoint and outputs a vector representing the histogram of the orientation values.

The SIFT algorithm is quite slow to run. Hence, there is a sped-up version, SURF. Describing the SIFT and SURF algorithms in detail would be lengthy, but luckily, you do not need to understand too much to use them with OpenCV.

Let’s look at an example using the following image:

Similar to the previous post, SIFT and SURF algorithms assume a grayscale image. This time, you need to create a detector first and apply it to the image:

```python
import cv2

# Load the image and convert to grayscale
img = cv2.imread('image.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Initialize SIFT and SURF detectors
sift = cv2.SIFT_create()
surf = cv2.xfeatures2d.SURF_create()

# Detect keypoints and compute descriptors on the grayscale image
keypoints_sift, descriptors_sift = sift.detectAndCompute(gray, None)
keypoints_surf, descriptors_surf = surf.detectAndCompute(gray, None)
```

NOTE: You may have difficulty running the above code with your OpenCV installation. To make it run, you may need to compile your own OpenCV module from scratch. This is because SIFT and SURF were patented, so OpenCV considered them “non-free”. Since the SIFT patent has already expired (the SURF patent is still in effect), you may find that SIFT works fine if you download a newer version of OpenCV.

The output of the SIFT or SURF algorithm is a list of keypoints and a NumPy array of descriptors. For SIFT, the descriptor array is N×128 for N keypoints, each represented by a vector of length 128 (SURF descriptors have length 64 by default). Each keypoint is an object with several attributes, such as the orientation angle.

By default, a lot of keypoints can be detected. This helps with one of the best uses of detected keypoints: finding associations between distorted images.

To reduce the number of detected keypoints in the output, you can set a higher “contrast threshold” and a lower “edge threshold” (the defaults are 0.04 and 10 respectively) in SIFT, or increase the “Hessian threshold” (default 100) in SURF. These can be adjusted on the detector object using sift.setContrastThreshold(0.04), sift.setEdgeThreshold(10), and surf.setHessianThreshold(100) respectively.

To draw the keypoints on the image, you can use the cv2.drawKeypoints() function and pass the list of all keypoints to it. The complete code, using only the SIFT algorithm and setting a very high threshold to keep only a few keypoints, is as follows:

```python
import cv2

# Load the image and convert to grayscale
img = cv2.imread('image.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Initialize SIFT detector
sift = cv2.SIFT_create()
sift.setContrastThreshold(0.25)
sift.setEdgeThreshold(5)

# Detect keypoints and compute descriptors on the grayscale image
keypoints, descriptors = sift.detectAndCompute(gray, None)
for x in keypoints:
    print("({:.2f},{:.2f}) = size {:.2f} angle {:.2f}".format(
        x.pt[0], x.pt[1], x.size, x.angle))

# Draw the keypoints on a copy of the original color image
img_kp = cv2.drawKeypoints(img, keypoints, None,
                           flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
cv2.imshow("Keypoints", img_kp)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

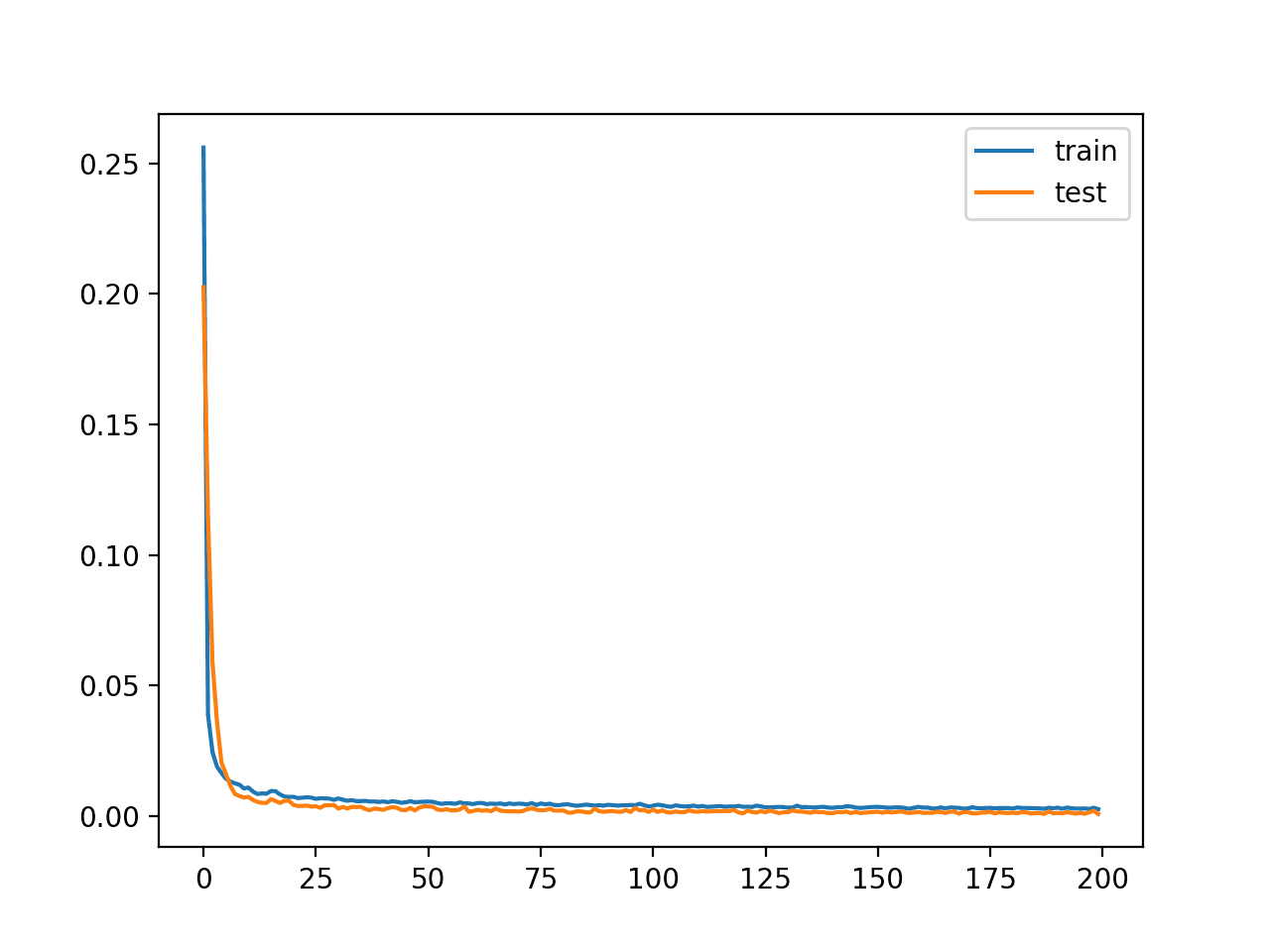

The image created is as follows:

Keypoints detected by the SIFT algorithm (zoomed in)

Original photo by Gleren Meneghin, some rights reserved.

The function cv2.drawKeypoints() will not modify your original image, but returns a new one. In the picture above, you can see the keypoints drawn as circles proportional to their “size”, with a stroke indicating the orientation. There are keypoints on the number “17” on the door as well as on the mail slots, but there are more than those. From the output of the for loop above, you can see that some keypoints overlap because multiple orientation angles are found at the same location.

In showing the keypoints on the image, you used the keypoint objects returned. However, you may find the feature vectors stored in descriptors useful if you want to further process the keypoints, such as running a clustering algorithm. But note that you still need the list of keypoints for information, such as the coordinates, to match the feature vectors.

Keypoint Detection using ORB in OpenCV

Since the SIFT and SURF algorithms were patented, there was an incentive to develop a free alternative that doesn’t need to be licensed. ORB is such an alternative, and it is a product of the OpenCV developers themselves.

ORB stands for Oriented FAST and Rotated BRIEF. It is a combination of two other algorithms, FAST and BRIEF, with modifications to match the performance of SIFT and SURF. You do not need to understand the algorithm details to use it, and its output is also a list of keypoint objects, as follows:

```python
import cv2

# Load the image and convert to grayscale
img = cv2.imread('image.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Initialize ORB detector, keeping at most 30 keypoints
orb = cv2.ORB_create(30)

# Detect keypoints and compute descriptors on the grayscale image
keypoints, descriptors = orb.detectAndCompute(gray, None)
for x in keypoints:
    print("({:.2f},{:.2f}) = size {:.2f} angle {:.2f}".format(
        x.pt[0], x.pt[1], x.size, x.angle))

# Draw the keypoints on a copy of the original color image
img_kp = cv2.drawKeypoints(img, keypoints, None,
                           flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
cv2.imshow("Keypoints", img_kp)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

In the above, you set the ORB to generate the top 30 keypoints when you created the detector. By default, this number will be 500.

The detector returns a list of keypoints and a NumPy array of descriptors (the feature vector of each keypoint) exactly as before. However, the descriptor of each keypoint is now of length 32 instead of 128.

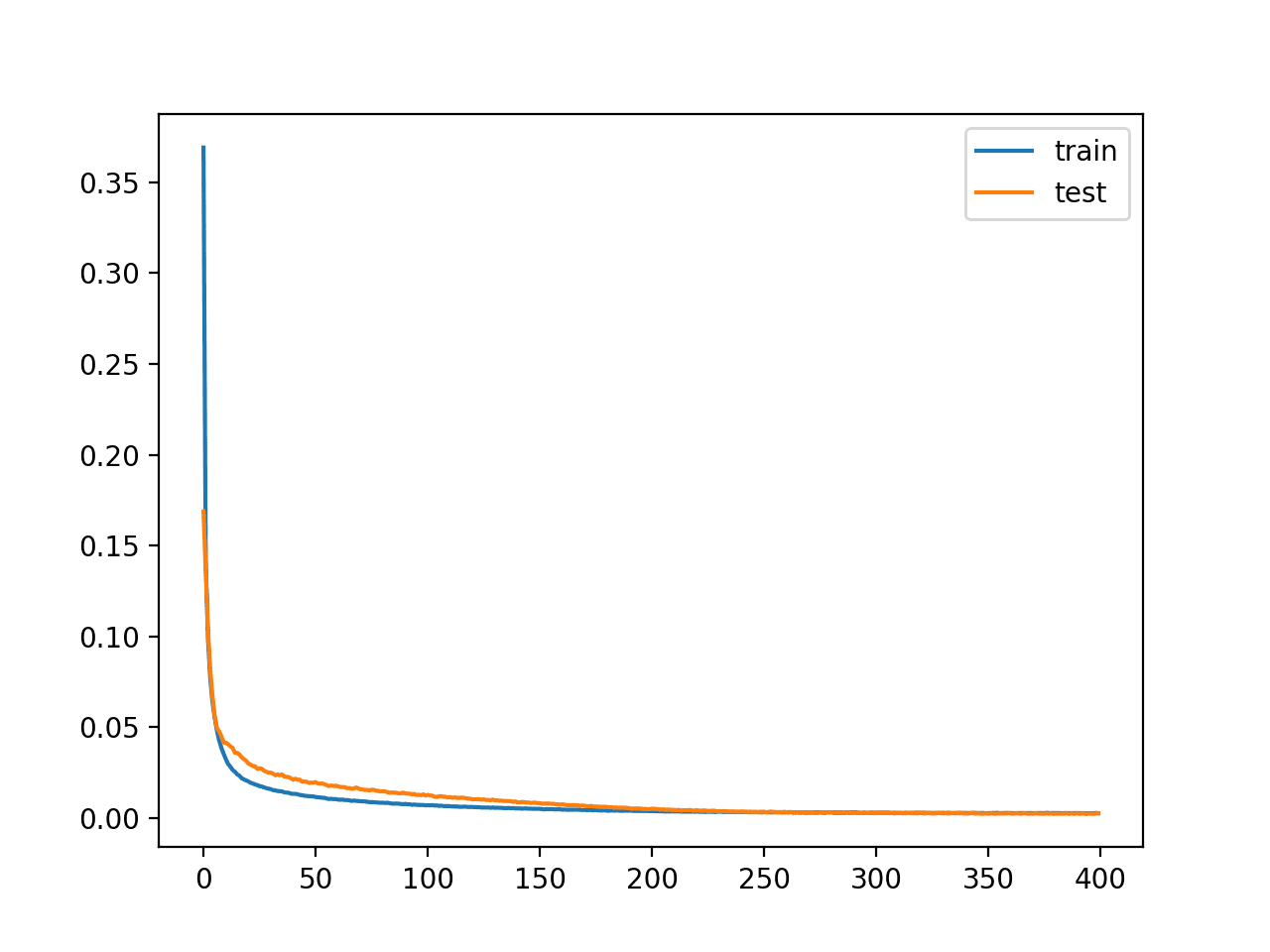

The generated keypoints are as follows:

Keypoints detected by ORB algorithm

Original photo by Gleren Meneghin, some rights reserved.

You can see that keypoints are generated at roughly the same locations. The results are not exactly the same because there are overlapping keypoints (or keypoints offset by a very small distance), so the ORB algorithm easily reaches the maximum count of 30. Moreover, the sizes are not comparable between different algorithms.


Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

Websites

- OpenCV, https://opencv.org/

- OpenCV Feature Detection and Description, https://docs.opencv.org/4.x/db/d27/tutorial_py_table_of_contents_feature2d.html

Summary

In this tutorial, you learned how to apply OpenCV’s keypoint detection algorithms, SIFT, SURF, and ORB.

Specifically, you learned:

- What is a keypoint in an image

- How to find the keypoints and the associated description vectors using OpenCV functions.

If you have any questions, please leave a comment below.
