The predictions you make with a predictive model do not matter; it is the use of those predictions that matters.

Jeremy Howard was the President and Chief Scientist of Kaggle, the competitive machine learning platform. In 2012 he presented at the O’Reilly Strata conference on what he called the Drivetrain Approach for building “data products” that go beyond just predictions.

In this post you will discover Howard’s Drivetrain Approach and how you can use it to structure the development of systems rather than just make predictions.

The Drivetrain Approach

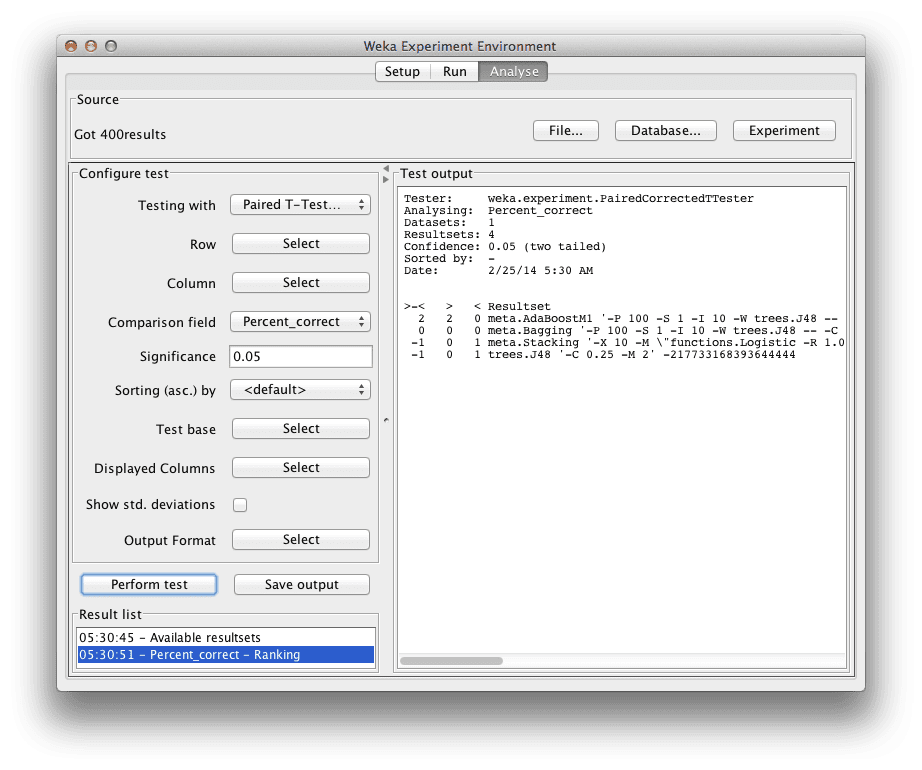

Image from O’Reilly, all rights reserved

Motivating the Approach

Jeremy Howard was a top Kaggle participant before investing in and joining the company. In talks like “Getting In Shape For The Sport Of Data Science” you get a deep insight into Howard’s keen ability to dive into data and build effective models.

By the 2012 Strata talk, Howard had been at Kaggle for a year or two and had seen a lot of competitions and a lot of competitive data scientists. You can’t help but think that his pitch for a more rounded methodology was born out of frustration with the focus on just predictions and their accuracy.

The predictions are the accessible piece, so it makes sense that they are the focus of competitions. I see his Drivetrain Approach as him throwing down the gauntlet and challenging the community to strive for more.

The Drivetrain Approach

Howard delivered a 35-minute talk on the approach at Strata 2012, titled “From Predictive Modelling to Optimization: The Next Frontier”.

The approach is also described in a blog post, “Designing great data products: The Drivetrain Approach: A four-step process for building data products”, which is also available as a free standalone ebook (with the same content, as far as I can tell).

In the talk he presents a four-step process for his Drivetrain Approach:

- Define Objectives: What outcome am I trying to achieve?

- Levers: What inputs can we control?

- Data: What data can we collect?

- Models: How do the levers influence the objectives?

He talks about the data we can collect because what he is really referring to is the need for causal data, which most organizations do not collect. This data must be collected by performing a large number of randomized experiments.

This is key. It goes beyond merely A/B testing a new page title; it involves evaluating unbiased behaviour, such as the response to randomly selected recommendations.
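To make the idea of causal data concrete, here is a minimal sketch of a randomized experiment on recommendations. All of the numbers are hypothetical and the data is synthetic: we assume a customer buys with probability 0.05 without a recommendation and 0.08 with one. Because a coin flip, not the customer’s history, decides who sees the recommendation, the simple difference in purchase rates between the two groups estimates the causal effect (the uplift). Comparing customers who happened to be shown recommendations in ordinary logs would not, since those customers may already be more likely to buy.

```python
import random

# Hypothetical ground truth, used only to generate synthetic data.
BASE_RATE = 0.05     # P(buy) without the recommendation
TREATED_RATE = 0.08  # P(buy) with the recommendation -> true uplift 0.03


def run_randomized_experiment(n_customers, seed=42):
    """Randomly assign each customer to see the recommendation or not,
    and record (treated, purchased) pairs."""
    rng = random.Random(seed)
    records = []
    for _ in range(n_customers):
        treated = rng.random() < 0.5  # coin flip removes selection bias
        p_buy = TREATED_RATE if treated else BASE_RATE
        purchased = rng.random() < p_buy
        records.append((treated, purchased))
    return records


def estimate_uplift(records):
    """Difference in purchase rates between the randomized groups."""
    treated = [bought for was_treated, bought in records if was_treated]
    control = [bought for was_treated, bought in records if not was_treated]
    return sum(treated) / len(treated) - sum(control) / len(control)


records = run_randomized_experiment(200_000)
uplift = estimate_uplift(records)
print(f"estimated uplift: {uplift:.3f}")  # should be close to 0.03
```

The large number of customers matters: with small uplifts like this one, only many randomized trials shrink the noise enough to see the effect.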

The fourth step, Models, is a pipeline comprising the following sub-processes:

- Objective: What outcome am I trying to achieve?

- Raw data: Unbiased causal data

- Modeller: Statistical model of the causal relationships in the data.

- Simulator: The ability to plug in ad hoc inputs (move the levers) and evaluate the effects on the objective.

- Optimizer: The search over inputs (lever values), using the simulator, for the values that maximize (or minimize) a desired outcome.

- Actionable outcome: Achieving the objective with the result
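The modeller, simulator and optimizer can be sketched in a few lines. This is a toy illustration under stated assumptions, not Howard’s implementation: the lever is a product’s price, the objective is profit, the raw data is synthetic demand observed at randomly chosen prices (hypothetical true curve `100 - 8 * price`), and the unit cost of 4.0 is made up. The modeller is an ordinary least squares line, the simulator turns a lever value into predicted profit, and the optimizer is a simple grid search.

```python
import random

UNIT_COST = 4.0  # hypothetical cost per unit


def collect_raw_data(n=500, seed=0):
    """Raw data: demand observed at randomly chosen prices (synthetic).
    Hypothetical true demand curve: 100 - 8 * price, plus noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        price = rng.uniform(5.0, 11.0)  # randomized lever setting
        demand = 100.0 - 8.0 * price + rng.gauss(0.0, 3.0)
        data.append((price, demand))
    return data


def fit_modeller(data):
    """Modeller: least squares fit of demand = a + b * price."""
    n = len(data)
    mean_x = sum(p for p, _ in data) / n
    mean_y = sum(d for _, d in data) / n
    sxy = sum((p - mean_x) * (d - mean_y) for p, d in data)
    sxx = sum((p - mean_x) ** 2 for p, _ in data)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def simulate_profit(model, price):
    """Simulator: predicted objective (profit) for one lever setting."""
    a, b = model
    demand = max(a + b * price, 0.0)  # demand cannot be negative
    return (price - UNIT_COST) * demand


def optimize(model, candidates):
    """Optimizer: search lever values for the highest simulated profit."""
    return max(candidates, key=lambda price: simulate_profit(model, price))


model = fit_modeller(collect_raw_data())
grid = [5.0 + 0.01 * i for i in range(601)]  # prices 5.00 .. 11.00
best_price = optimize(model, grid)
print(f"recommended price: {best_price:.2f}")
```

For the assumed demand curve the true profit-maximizing price is 8.25, so the recommended price should land near that value. Keeping the simulator separate from the optimizer means the search strategy can be swapped (for example, for a smarter optimizer) without touching the model.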

Case Studies

The approach is a little abstract and needs some examples to make it concrete.

In the presentation, Howard uses Google search as an example:

- Objective: What webpage do you want to read?

- Levers: The ordering of the sites you could visit on the search engine results page (SERP).

- Data: The link network between pages.

- Model: Not discussed, but presumably the ongoing experimentation with, and refinement of, the authority indicators for pages.

Extending this example, Google is very likely performing random experiments in the SERP by injecting other results and observing how users behave. This would permit a predictive model of the likelihood of clicking to be constructed, user clicks to be simulated, and the entries in the SERP to be optimized for clicks for a given user. I expect an approach just like this is used for Google’s advertising, which would have made a clearer example.
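The click model, simulator and optimizer described above can be sketched for a tiny results page. This assumes a simple position-based click model (an assumption for illustration, not Google’s actual method): the probability of a click is the probability that the user examines a slot times the probability they click the result once they see it. The slot and per-result probabilities are hypothetical stand-ins for values that might be estimated from randomly injected results.

```python
from itertools import permutations

# Hypothetical position-based click model:
# P(click) = P(user examines the slot) * P(click | result examined)
EXAMINE_PROB = [0.9, 0.6, 0.4, 0.25]  # by slot, top to bottom
# Hypothetical per-result attractiveness, e.g. from injection experiments.
ATTRACTIVENESS = {"a": 0.20, "b": 0.55, "c": 0.35, "d": 0.10}


def expected_clicks(ordering):
    """Simulator: expected number of clicks for one ordering of results."""
    return sum(EXAMINE_PROB[slot] * ATTRACTIVENESS[doc]
               for slot, doc in enumerate(ordering))


def best_ordering(docs):
    """Optimizer: exhaustive search over orderings (fine for a handful)."""
    return max(permutations(docs), key=expected_clicks)


best = best_ordering(list(ATTRACTIVENESS))
print(best, round(expected_clicks(best), 3))  # ('b', 'c', 'a', 'd') 0.81
```

Under this particular model the optimum is simply the results sorted by attractiveness, because examination probability falls down the page. The brute-force search is shown to mirror the simulator/optimizer split; it would still apply if the click model included interactions between results, where sorting is no longer enough.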

Howard also suggests marketing as an area for improvement. He comments that the objective is the maximization of customer lifetime value (CLTV). Levers include product recommendations, offers, discounts and customer care calls. The causal relationships that could be collected as raw data include the probability of purchase and the probability that a customer would like a product but does not yet know about it.
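The reason the causal quantities matter for choosing a lever can be shown with a small sketch. The products, probabilities and margins below are hypothetical, standing in for estimates from randomized recommendation experiments. Ranking by the plain probability of purchase picks the product the customer would probably buy anyway; ranking by the incremental profit the recommendation causes picks the product they would like but do not yet know about.

```python
# Hypothetical causal estimates for one customer:
# (P(buy | recommended), P(buy | not recommended), profit margin)
ESTIMATES = {
    "bestseller":  (0.30, 0.28, 10.0),  # likely bought anyway
    "niche_title": (0.12, 0.02, 10.0),  # liked, but not yet known
    "accessory":   (0.20, 0.15, 4.0),
}


def incremental_value(p_rec, p_no_rec, margin):
    """Expected extra profit caused by making the recommendation."""
    return (p_rec - p_no_rec) * margin


def choose_recommendation(estimates):
    """Pick the lever setting (product to recommend) with the largest uplift."""
    return max(estimates, key=lambda name: incremental_value(*estimates[name]))


naive = max(ESTIMATES, key=lambda name: ESTIMATES[name][0])  # highest P(buy)
causal = choose_recommendation(ESTIMATES)
print(naive, causal)  # bestseller niche_title
```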

He also gives the example of his earlier start-up, the Optimal Decisions Group, which worked on maximizing profit in insurance. He touches on the Google Self-Driving Car as another example, one that goes beyond merely finding a route as current GPS displays do.

I feel there is an opportunity to elaborate further on these ideas. I think that if the methodology had been presented more clearly, with a step-by-step example, it would have received a greater response.

Summary

The notion of going beyond predictions needs to be repeated often. It is easy to get caught up in the details of a given problem. We talk a lot about defining the problem up front as a way to reduce this effect.

Howard’s Drivetrain Approach is a tool you can use to design a system that uses machine learning to solve a complex problem, rather than using machine learning to make predictions and calling it a day.

There is a lot of overlap between these ideas and Response Surface Methodology (RSM). Although it is not explicitly spelled out, the link is hinted at in a related post from around the same time, Irfan Ahmad’s “Taxonomy of Predictive Modeling”, which helps to clarify some of Howard’s terms.
