Do you have questions like:

- What data is best for my problem?

- What algorithm is best for my data?

- How do I best configure my algorithm?

Why can’t a machine learning expert just give you a straight answer to your question?

In this post, I want to help you see why no one can ever tell you what algorithm to use or how to configure it for your specific dataset.

I want to help you see that finding a good combination of data, algorithm, and configuration is, in fact, the hard part of applied machine learning and the only part you need to focus on solving.

Let’s get started.

Analytical vs Numerical Solutions in Machine Learning

Photo by dr_tr, some rights reserved.

Analytical vs Numerical Solutions

In mathematics, some problems can be solved analytically, others numerically, and some both ways.

- An analytical solution involves framing the problem in a well-understood form and calculating the exact solution.

- A numerical solution means making guesses at the solution and testing whether the problem is solved well enough to stop.

An example is the square root, which can be solved both ways.
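To make the contrast concrete, here is a small Python sketch (an illustration, not from the original post). `math.isqrt` gives the exact integer square root of a perfect square directly, while the hypothetical helper `newton_sqrt` uses Newton's (Babylonian) method: it guesses, tests whether the guess squared is close enough to the input, and refines the guess if not. The tolerance and iteration cap are arbitrary assumptions.

```python
import math


def newton_sqrt(value, tolerance=1e-12, max_iterations=100):
    """Approximate sqrt(value) by guessing and refining (Newton's/Babylonian method)."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return 0.0
    # Any positive starting guess works; start at or above the true root.
    guess = float(value) if value >= 1 else 1.0
    for _ in range(max_iterations):
        # Test the current guess: is it "good enough" to stop?
        if abs(guess * guess - value) <= tolerance * value:
            break
        # Refine the guess by averaging it with value / guess.
        guess = 0.5 * (guess + value / guess)
    return guess


# Exact answer for a perfect square (integer arithmetic, no guessing).
print(math.isqrt(144))  # 12
# Approximate answer found by iterative guessing, with the library result for comparison.
print(newton_sqrt(2.0))
print(math.sqrt(2.0))
```

The stopping test is what makes this a numerical method: we stop when the answer is good enough, not when it is exact.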

We prefer the analytical method in general because it is faster and because the solution is exact. Nevertheless, sometimes we must resort to a numerical method due to limitations of time or hardware capacity.

A good example is finding the coefficients of a linear regression equation. They can be calculated analytically (e.g. using linear algebra), but they can also be found numerically (e.g. via gradient descent) when we cannot fit all the data into the memory of a single computer to perform the analytical calculation.
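A minimal NumPy sketch of both routes, assuming a small synthetic dataset (the coefficients 3, 2, and -1 are made up for illustration): the normal equations give the coefficients in one linear-algebra step, and batch gradient descent reaches approximately the same values through repeated guess-and-improve updates. For simplicity the whole dataset sits in memory here; in the out-of-memory case you would instead stream mini-batches, which this sketch does not show.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic data: y = 3 + 2*x1 - 1*x2 + noise (values chosen for illustration).
n_samples = 200
X = rng.normal(size=(n_samples, 2))
y = 3.0 + X @ np.array([2.0, -1.0]) + rng.normal(scale=0.1, size=n_samples)

# Add a column of ones so the intercept is learned as an ordinary coefficient.
X_b = np.column_stack([np.ones(n_samples), X])

# Analytical: solve the normal equations (X^T X) w = X^T y in one step.
w_exact = np.linalg.solve(X_b.T @ X_b, X_b.T @ y)

# Numerical: batch gradient descent on the mean squared error.
w_gd = np.zeros(X_b.shape[1])
learning_rate = 0.1
for _ in range(1000):
    gradient = (2.0 / n_samples) * X_b.T @ (X_b @ w_gd - y)
    w_gd -= learning_rate * gradient

print(w_exact)
print(w_gd)
```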

Sometimes, the analytical solution is unknown and all we have to work with is the numerical approach.

Analytical Solutions

Many problems have well-defined solutions that are obvious once the problem has been defined: a set of logical steps that we can follow to calculate an exact outcome.

For example, you know what operation to use given a specific arithmetic task such as addition or subtraction.

In linear algebra, there is a suite of methods that you can use to factorize a matrix, depending on the properties of your matrix: whether it is square or rectangular, whether it contains real or complex values, and so on.
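As a rough illustration of choosing a factorization from a matrix's properties, the hypothetical `factorize` helper below applies a few simplified rules using standard `numpy.linalg` routines: SVD for rectangular matrices, Cholesky for Hermitian positive definite matrices, `eigh` for other Hermitian matrices, and `eig` otherwise. Real numerical libraries weigh more factors (sparsity, conditioning, what the factorization is for), so treat these rules as an assumption, not a recommendation.

```python
import numpy as np


def factorize(matrix):
    """Choose a factorization from the matrix's properties (simplified, illustrative rules)."""
    rows, cols = matrix.shape
    if rows != cols:
        # Rectangular (real or complex): singular value decomposition, A = U diag(s) Vh.
        return "svd", np.linalg.svd(matrix, full_matrices=False)
    if np.allclose(matrix, matrix.conj().T):
        try:
            # Hermitian (symmetric if real) and positive definite: Cholesky, A = L L^H.
            return "cholesky", np.linalg.cholesky(matrix)
        except np.linalg.LinAlgError:
            # Hermitian but not positive definite: use the Hermitian eigensolver.
            return "eigh", np.linalg.eigh(matrix)
    # General square matrix: eigendecomposition (may be complex even for real input).
    return "eig", np.linalg.eig(matrix)


spd = np.array([[4.0, 2.0], [2.0, 3.0]])
name, L = factorize(spd)
print(name)                       # cholesky
print(np.allclose(L @ L.T, spd))  # True
print(factorize(np.arange(6.0).reshape(3, 2))[0])  # svd
```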

We can stretch this more broadly to software engineering, where problems that turn up again and again can be solved with a design pattern that is known to work well, regardless of the specifics of your application; for example, the visitor pattern for performing an operation on each element of an object structure.

Some problems in applied machine learning are well defined and have an analytical solution.

For example, the method for transforming a categorical variable into a one hot encoding is simple, repeatable, and (practically) always the same, regardless of the number of distinct values (categories) the variable can take.
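A minimal pure-Python sketch of one hot encoding (the helper name `one_hot_encode` and the choice to sort the categories are assumptions; libraries such as scikit-learn provide their own encoders). The procedure is the same whether the variable has three categories or three hundred.

```python
def one_hot_encode(values):
    """Map each categorical value to a binary vector containing a single 1."""
    # Fix an ordering of the distinct categories (sorted here for repeatability).
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1  # switch on the position for this category
        encoded.append(row)
    return categories, encoded


categories, rows = one_hot_encode(["red", "green", "blue", "green"])
print(categories)  # ['blue', 'green', 'red']
print(rows)        # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```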

Unfortunately, most of the problems that we care about solving in machine learning do not have analytical solutions.

Numerical Solutions

There are many problems that we are interested in that do not have analytical solutions, or at least none that we have figured out yet.

We have to make guesses at solutions and test them to see how good the solution is. This involves framing the problem and using trial and error across a set of candidate solutions.

In essence, the process of finding a numerical solution can be described as a search.

These types of solutions have some interesting properties:

- We can often easily tell a good solution from a bad one.

- We often don’t objectively know what a “good” solution looks like; we can only compare the goodness between candidate solutions that we have tested.

- We are often satisfied with an approximate or “good enough” solution rather than the single best solution.

This last point is key, because the problems we try to solve numerically are often challenging (we have no easy way to solve them), so any “good enough” solution is useful. It also highlights that a given problem has many solutions, and that many of them may be good enough to be usable.
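The search framing, and the idea of stopping at a “good enough” solution, can be sketched as a simple random search. The hypothetical `random_search` function below treats the scoring function as a black box, keeps the best candidate seen so far (it can only compare candidates against each other), and stops once the best score passes a chosen threshold or the trial budget runs out. The toy objective, sampling range, and threshold are made-up assumptions.

```python
import random


def random_search(score, sample_candidate, good_enough, max_trials=10_000, seed=0):
    """Trial-and-error search: keep the best candidate seen, stop once it is good enough."""
    rng = random.Random(seed)
    best, best_score, trial = None, float("inf"), 0
    for trial in range(1, max_trials + 1):
        candidate = sample_candidate(rng)
        candidate_score = score(candidate)  # lower is better
        # A candidate can only be judged relative to those already tested.
        if candidate_score < best_score:
            best, best_score = candidate, candidate_score
        if best_score <= good_enough:
            break  # "good enough": stop instead of hunting for the single best
    return best, best_score, trial


# Toy problem: find x where (x - 3.7)^2 is small, treating the function as a black box.
best_x, best_score, trials_used = random_search(
    score=lambda x: (x - 3.7) ** 2,
    sample_candidate=lambda rng: rng.uniform(-10.0, 10.0),
    good_enough=1e-2,
)
print(best_x, best_score, trials_used)
```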

Most of the problems that we are interested in solving in applied machine learning require a numerical solution.

It’s worse than this.

The numerical solution to each sub-problem along the way influences the space of possible solutions for subsequent sub-problems.

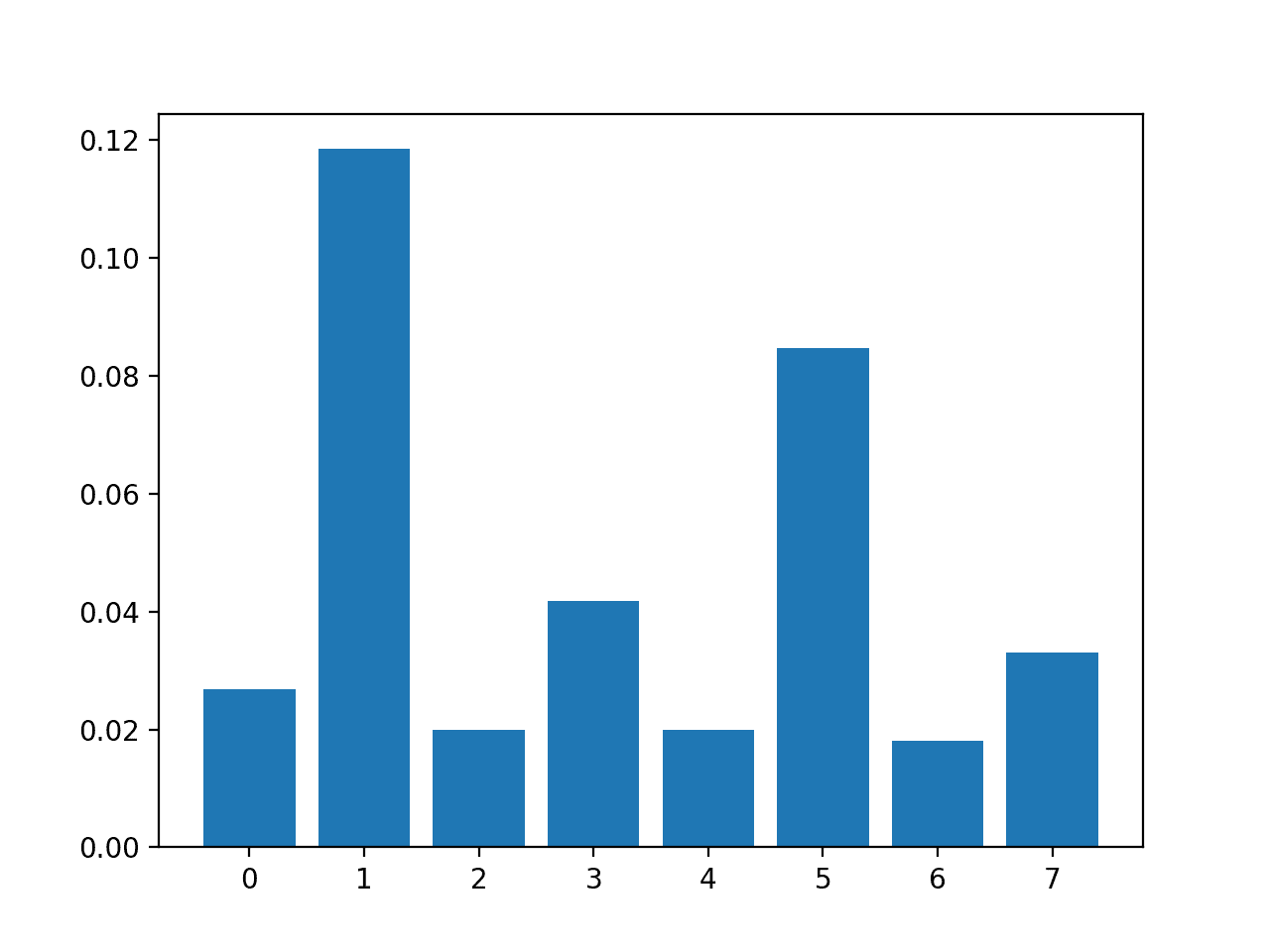

Numerical Solutions in Machine Learning

Applied machine learning is a numerical discipline.

The core of a given machine learning model is an optimization problem, which is really a search for a set of terms with unknown values needed to fill an equation. Each algorithm has a different “equation” and “terms”, using this terminology loosely.

Given a set of terms, the equation is easy to calculate to make a prediction, but we don’t know which terms will give a “good” or even the “best” set of predictions on a given set of data.

This is the numerical optimization problem that we always seek to solve.

It’s numerical because we are trying to solve the optimization problem with limited samples of noisy, incomplete, and error-prone observations from our domain. The model is trying hard to interpret the data and create a map between the inputs and the outputs of these observations.
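As a small illustration, logistic regression is a model whose “equation” is trivial to evaluate but whose “terms” (coefficients) have no closed-form solution in general, so they are found numerically. The sketch below, assuming synthetic data with deliberately noisy labels, fits the terms by plain gradient descent on the log loss; the learning rate and iteration count are arbitrary choices, and practical libraries use more sophisticated optimizers.

```python
import numpy as np


def predict_proba(X, weights, bias):
    """The 'equation': easy to evaluate once the terms (weights, bias) are known."""
    return 1.0 / (1.0 + np.exp(-(X @ weights + bias)))


def log_loss(X, y, weights, bias):
    """Average negative log-likelihood; lower means better predictions."""
    p = np.clip(predict_proba(X, weights, bias), 1e-12, 1 - 1e-12)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))


def fit(X, y, learning_rate=0.5, n_iterations=2000):
    """Search for the terms numerically by gradient descent on the log loss."""
    weights, bias = np.zeros(X.shape[1]), 0.0
    for _ in range(n_iterations):
        error = predict_proba(X, weights, bias) - y
        weights -= learning_rate * X.T @ error / len(y)
        bias -= learning_rate * error.mean()
    return weights, bias


rng = np.random.default_rng(0)
X = rng.normal(size=(300, 2))
# Labels from a hypothetical "true" rule plus noise, so the data never fits perfectly.
y = ((X[:, 0] - 0.5 * X[:, 1] + rng.normal(scale=0.5, size=300)) > 0).astype(float)

weights, bias = fit(X, y)
print("initial loss:", log_loss(X, y, np.zeros(2), 0.0))
print("fitted loss: ", log_loss(X, y, weights, bias))
```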

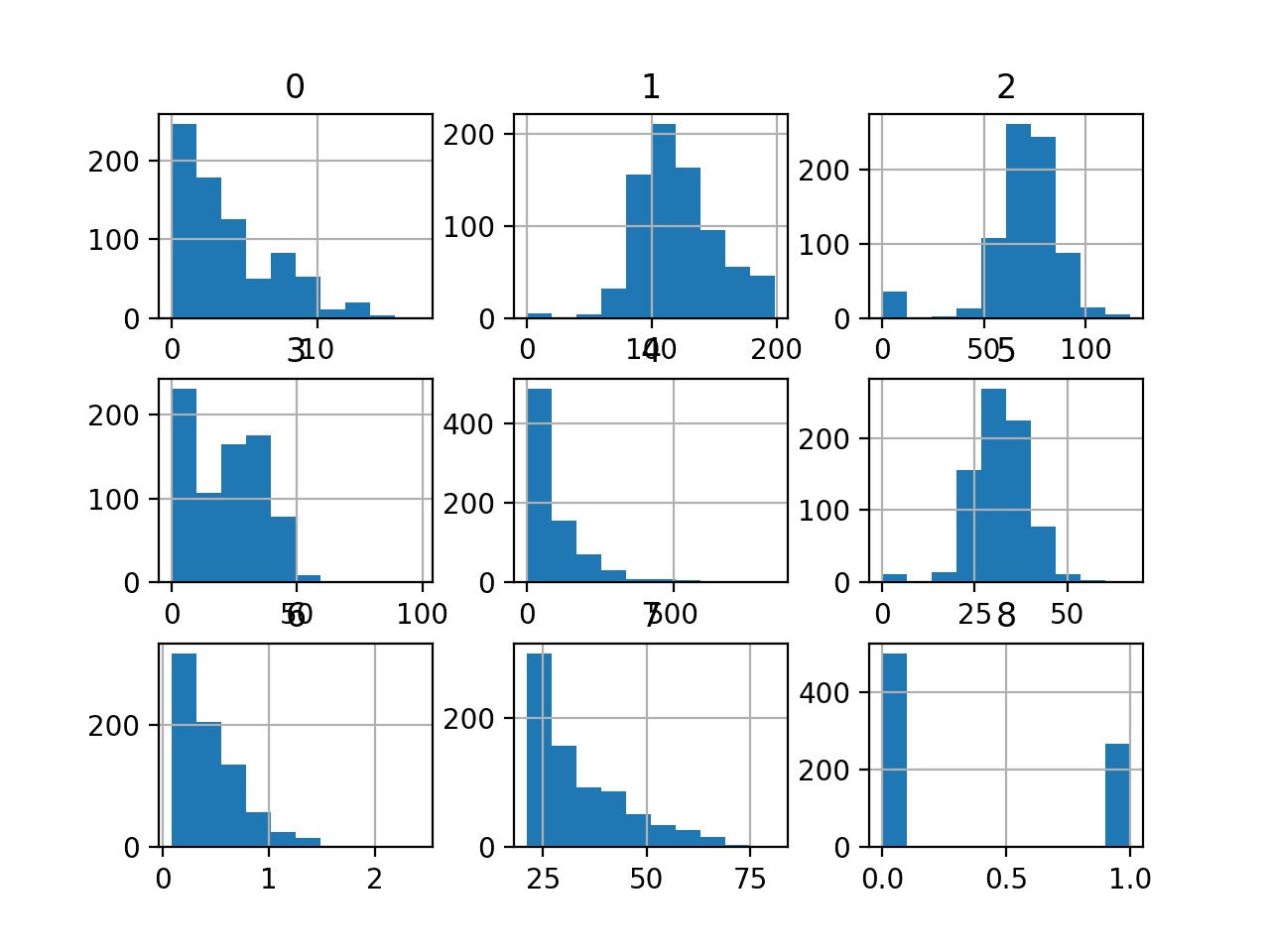

Broader Empirical Solution in Machine Learning

The numerical optimization problem at the core of a chosen machine learning algorithm is nested in a broader problem.

The specific optimization problem is influenced by many factors, all of which contribute greatly to the “goodness” of the ultimate solution, and none of which have analytical solutions.

For example:

- What data to use.

- How much data to use.

- How to treat the data prior to modeling.

- What modeling algorithm or algorithms to use.

- How to configure the algorithms.

- How to evaluate machine learning algorithms.

Taken together, these choices are all part of the open problem that your specific predictive modeling problem represents.

There is no analytical solution; you must discover what combination of these elements works best for your specific problem.

It is one big search problem where combinations of elements are trialed and evaluated.

You only really know what a good score is relative to the scores of other candidate solutions that you have tried.

There is no objective path through this maze other than trial and error, and perhaps borrowing ideas from related problems that do have known “good enough” solutions.
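To make the “search” concrete, the sketch below trials every combination from a tiny, hypothetical grid of choices (whether to standardize the inputs, and the ridge regression penalty `alpha`) and scores each one with 5-fold cross-validation on synthetic data. Everything here (the data, the grid, the model) is an assumption for illustration; the point is only that each combination is a candidate solution judged by its score relative to the others. In practice, a library tool such as scikit-learn's `GridSearchCV` handles this bookkeeping.

```python
import itertools

import numpy as np

rng = np.random.default_rng(42)

# Synthetic regression data (a stand-in for "your" dataset); feature scales differ widely.
n = 300
X = rng.normal(size=(n, 3)) * np.array([1.0, 100.0, 0.01])
y = X @ np.array([1.5, 0.02, 50.0]) + rng.normal(scale=0.5, size=n)

# Fixed folds so every candidate is evaluated on exactly the same splits.
folds = np.array_split(np.random.default_rng(0).permutation(n), 5)


def standardize(train, test):
    """Scale using statistics from the training fold only."""
    mean, std = train.mean(axis=0), train.std(axis=0)
    return (train - mean) / std, (test - mean) / std


def fit_ridge(X, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept (via centering)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def cv_mse(scale, alpha):
    """Mean squared error averaged over the folds for one candidate configuration."""
    errors = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        X_tr, X_te = X[train_idx], X[test_idx]
        if scale:
            X_tr, X_te = standardize(X_tr, X_te)
        w, b = fit_ridge(X_tr, y[train_idx], alpha)
        errors.append(np.mean((X_te @ w + b - y[test_idx]) ** 2))
    return float(np.mean(errors))


# Every combination of choices is a candidate solution to be trialed and evaluated.
grid = {"scale": [False, True], "alpha": [0.01, 1.0, 100.0]}
results = [(cv_mse(s, a), s, a) for s, a in itertools.product(grid["scale"], grid["alpha"])]

for mse, scale, alpha in sorted(results):
    print(f"scale={scale!s:5}  alpha={alpha:<6}  cv_mse={mse:.3f}")
```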

This great empirical approach to applied machine learning is often referred to as “machine learning as search” and is described further in the post:

This is also covered in the post:

Answering Your Question

Let’s bring this back to the question you have: what data, algorithm, or configuration will work best for your specific predictive modeling problem?

No one can look at your data or a description of your problem and tell you how to solve it best, or even well.

Experience may inform an expert on areas to start looking, and some of those early guesses may pay off, but more often than not, early guesses are too complicated or plain wrong.

A predictive modeling problem must be worked in order to find a good-enough solution and it is your job as the machine learning practitioner to work it.

This is the hard work of applied machine learning and it is the area to practice and get good at to be considered competent in the field.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- A Data-Driven Approach to Choosing Machine Learning Algorithms

- A Gentle Introduction to Applied Machine Learning as a Search Problem

- Why Applied Machine Learning Is Hard

- What’s the difference between analytical and numerical approaches to problems?

Summary

In this post, you discovered the difference between analytical and numerical solutions and the empirical nature of applied machine learning.

Specifically, you learned:

- Analytical solutions are logical procedures that yield an exact solution.

- Numerical solutions are trial-and-error procedures that are slower and result in approximate solutions.

- Applied machine learning has a numerical solution at its core, nested within a broader empirical search for the data, algorithms, and configurations that work for a specific predictive modeling problem.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

What about the backpropagation in deep learning? I always thought it was done numerically, but then some of the libraries talk about analytical or symbolic computations for the backprop. How does this fit in with the topic of analytical vs numerical?

Here’s my off-the-cuff riff on the topic (happy to be corrected):

Backprop is the calculus of updating the weights with the error gradient.

In almost all cases, the gradient is calculated on a sample (batch) of the training data, so it is an estimate of the true error gradient. Sometimes we want to calculate complicated error gradients, and rather than deriving them by hand, we can use symbolic or automatic differentiation libraries like Theano/TensorFlow, which apply the chain rule to the model definition for us. Under the covers, the resulting gradient calculations are evaluated numerically at execution time.

The iterative process of these two elements (gradient estimates and weight updates) is batch/mini-batch/stochastic gradient descent, which is a numerical optimization procedure.
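One way to see where the analytical and numerical parts sit is a gradient check, sketched below for a single sigmoid neuron with squared error (a toy stand-in for a network; the weights and inputs are made up). `analytical_gradient` applies the chain rule, which is what backpropagation and automatic differentiation do, while `numerical_gradient` estimates the same quantity with central finite differences. The two should agree closely; the gradient descent loop that consumes such gradients is the numerical optimization part.

```python
import numpy as np


def loss(w, x, y):
    """Squared error of a single sigmoid neuron (a tiny stand-in for a network)."""
    prediction = 1.0 / (1.0 + np.exp(-x @ w))
    return 0.5 * (prediction - y) ** 2


def analytical_gradient(w, x, y):
    """Gradient derived with the chain rule, as backpropagation does."""
    prediction = 1.0 / (1.0 + np.exp(-x @ w))
    return (prediction - y) * prediction * (1.0 - prediction) * x


def numerical_gradient(w, x, y, eps=1e-6):
    """Central finite differences: nudge each weight and measure the change in loss."""
    grad = np.zeros_like(w)
    for i in range(len(w)):
        step = np.zeros_like(w)
        step[i] = eps
        grad[i] = (loss(w + step, x, y) - loss(w - step, x, y)) / (2 * eps)
    return grad


w = np.array([0.3, -0.2, 0.5])
x = np.array([1.0, 2.0, -1.0])
y = 1.0
print(analytical_gradient(w, x, y))
print(numerical_gradient(w, x, y))
```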

Sir, please send me a post on the LDA and PCA techniques for dimensionality reduction. My research field is networking, and I am now interested in machine learning.

Here is information on PCA:

https://machinelearningmastery.com/calculate-principal-component-analysis-scratch-python/

Dear Jason, would you please suggest a topic where ML could be applied to contemporary high-voltage products or high-voltage power system research?

I cannot. Perhaps search Google Scholar?

Dear Jason, would you please send me an example of a numerical problem solved with Keras functions?

What do you mean exactly? Regression?

Here is a regression example in Keras:

https://machinelearningmastery.com/regression-tutorial-keras-deep-learning-library-python/

Dear Jason,

I am new to machine learning and want to solve the problem Ax = b and A'x ≈ b' + e. Given A, A', b, and b', can we recover x correctly, where x is a binary vector?

Perhaps post on Cross Validated or MathOverflow?