Whether it is a supervised learning problem or an unsupervised problem, there will be some optimization algorithm working in the background. Almost any classification, regression or clustering problem can be cast as an optimization problem.

In this tutorial, you will discover what is optimization and concepts related to it.

After completing this tutorial, you will know:

- What is Mathematical programming or optimization

- Difference between a maximization and minimization problems

- Difference between local and global optimal solutions

- Difference between constrained and unconstrained optimization

- Difference between linear and non-linear programming

- Examples of optimization

Let’s get started.

Tutorial Overview

This tutorial is divided into two parts; they are:

- Various introductory topics related to optimization

- Constrained vs. unconstrained optimization

- Equality vs. inequality constraints

- Feasible region

- Examples of optimization in machine learning

What Is Optimization or Mathematical Programming?

In calculus and mathematics, the optimization problem is also termed as mathematical programming. To describe this problem in simple words, it is the mechanism through which we can find an element, variable or quantity that best fits a set of given criterion or constraints.

Maximization Vs. Minimization Problems

The simplest cases of optimization problems are minimization or maximization of scalar functions. If we have a scalar function of one or more variables, f(x_1, x_2, … x_n) then the following is an optimization problem:

Find x_1, x_2, …, x_n where f(x) is minimum

Or we can have an equivalent maximization problem.

When we define functions quantifying errors or penalties, we apply a minimization problem. On the other hand, if a learning algorithm constructs a function modeling the accuracy of a method, we would maximize this function.

Many automated software tools for optimization, generally implement either a maximization problem or a minimization task but not both. Hence, we can convert a maximization problem to a minimization problem (and vice versa) by adding a negative sign to f(x), i.e.,

Maximize f(x) w.r.r x is equivalent to Minimize -f(x) w.r.t. x

As the two problems are equivalent, we’ll only talk about either minimization or maximization problems in the rest of the tutorial. The same rules and definitions apply to its equivalent.

Want to Get Started With Calculus for Machine Learning?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

Global Vs. Local Optimum Points

In machine learning, we often encounter functions, which are highly non-linear with a complex landscape. It is possible that there is a point where the function has the lowest value within a small or local region around that point. Such a point is called a local minimum point.

This is opposed to global minimum point, which is a point where the function has the least value over its entire domain. The following figure shows local and global maximum points.

Unconstrained Vs. Constrained Optimization

There are many problems in machine learning, where we are interested in finding the global optimum point without any constraints or restrictions on the region in space. Such problems are called unconstrained optimization problems.

At times we have to solve an optimization problem subject to certain constraints. Such optimization problems are termed as constrained optimization problems. For example:

Minimize x^2 + y^2 subject to. x + y <= 1

Examples of constrained optimization are:

- Find minimum of a function when the sum of variables in the domain must sum to one

- Find minimum of a function such that certain vectors are normal to each other

- Find minimum of a function such that certain domain variables lie in a certain range.

Feasible Region

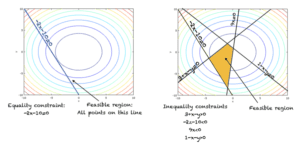

All the points in space where the constraints on the problem hold true comprise the feasible region. An optimization algorithm searches for optimal points in the feasible region. The feasible region for the two types of constraints is shown in the figure of the next section.

For an unconstrained optimization problem, the entire domain of the function is a feasible region.

Equality Vs. Inequality Constraints

The constraints imposed in an optimization problem could be equality constraints or inequality constraints. The figure below shows the two types of constraints.

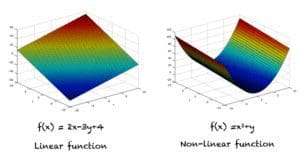

Linear Vs. Non-linear Programming

An optimization problem where the function is linear and all equality or inequality constraints are also linear constraints is called a linear programming problem.

If either the objective function is non-linear or one or more than one constraints is non-linear, then we have a non-linear programming problem.

To visualize the difference between linear and non-linear functions you can check out the figure below.

Examples of Optimization in Machine Learning

Listed below are some well known machine learning algorithms that employ optimization. You should keep in mind that almost all machine learning algorithms employ some kind of optimization.

- Gradient descent in neural networks (unconstrained optimization).

- Method of Lagrange multipliers in support vector machines (constrained optimization).

- Principal component analysis (constrained optimization)

- Clustering via expectation maximization algorithm (constrained optimization)

- Logistic regression (unconstrained optimization)

- Genetic algorithms in evolutionary learning algorithms (different variants exist to solve both constrained and unconstrained optimization problems).

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Method of Lagrange multipliers

- Non-linear optimization techniques

- The simplex method

If you explore any of these extensions, I’d love to know. Post your findings in the comments below.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

- Function of several variables and gradient vectors

- Gradient descent for machine learning

- Why optimization is important in machine learning

- How to choose an optimization algorithm

Resources

- Additional resources on Calculus Books for Machine Learning

Books

- Thomas’ Calculus, 14th edition, 2017. (based on the original works of George B. Thomas, revised by Joel Hass, Christopher Heil, Maurice Weir)

- Calculus, 3rd Edition, 2017. (Gilbert Strang)

- Calculus, 8th edition, 2015. (James Stewart)

Summary

In this tutorial, you discovered what is mathematical programming or optimization problem. Specifically, you learned:

- Maximization vs. minimization

- Constrained vs. unconstrained optimization

- Why optimization is important in machine learning

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer

Nice article. Can you write an article with source code regarding Interpolation in Machine Learning?

Thanks for your suggestion.

I love the math and optimization tutorials. Very nicely done!

Glad you like it!

Sir. Please explain all the six algorithm with example

We have most of these in our blog. Please use the search function and you should find them easily.

Hi, how can I subscribe to this network. I’m an engineer and decide to branch out, excuse the pun, into ML. Thx, Sean.

You can subscribe to our mailing list! Go to our blog (https://machinelearningmastery.com/blog/) and click open the tab at bottom right corner to enter your email address. Wish you will enjoy that.