In the literature, the term Jacobian is often interchangeably used to refer to both the Jacobian matrix or its determinant.

Both the matrix and the determinant have useful and important applications: in machine learning, the Jacobian matrix aggregates the partial derivatives that are necessary for backpropagation; the determinant is useful in the process of changing between variables.

In this tutorial, you will review a gentle introduction to the Jacobian.

After completing this tutorial, you will know:

- The Jacobian matrix collects all first-order partial derivatives of a multivariate function that can be used for backpropagation.

- The Jacobian determinant is useful in changing between variables, where it acts as a scaling factor between one coordinate space and another.

Let’s get started.

A Gentle Introduction to the Jacobian

Photo by Simon Berger, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Partial Derivatives in Machine Learning

- The Jacobian Matrix

- Other Uses of the Jacobian

Partial Derivatives in Machine Learning

We have thus far mentioned gradients and partial derivatives as being important for an optimization algorithm to update, say, the model weights of a neural network to reach an optimal set of weights. The use of partial derivatives permits each weight to be updated independently of the others, by calculating the gradient of the error curve with respect to each weight in turn.

Many of the functions that we usually work with in machine learning are multivariate, vector-valued functions, which means that they map multiple real inputs, n, to multiple real outputs, m:

For example, consider a neural network that classifies grayscale images into several classes. The function being implemented by such a classifier would map the n pixel values of each single-channel input image, to m output probabilities of belonging to each of the different classes.

In training a neural network, the backpropagation algorithm is responsible for sharing back the error calculated at the output layer, among the neurons comprising the different hidden layers of the neural network, until it reaches the input.

The fundamental principle of the backpropagation algorithm in adjusting the weights in a network is that each weight in a network should be updated in proportion to the sensitivity of the overall error of the network to changes in that weight.

– Page 222, Deep Learning, 2019.

This sensitivity of the overall error of the network to changes in any one particular weight is measured in terms of the rate of change, which, in turn, is calculated by taking the partial derivative of the error with respect to the same weight.

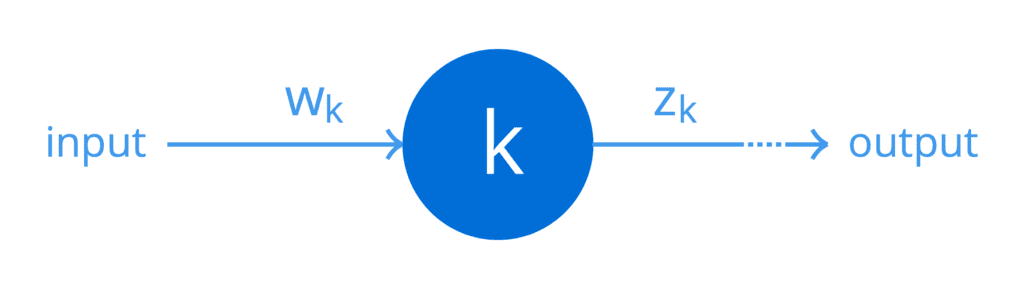

For simplicity, suppose that one of the hidden layers of some particular network consists of just a single neuron, k. We can represent this in terms of a simple computational graph:

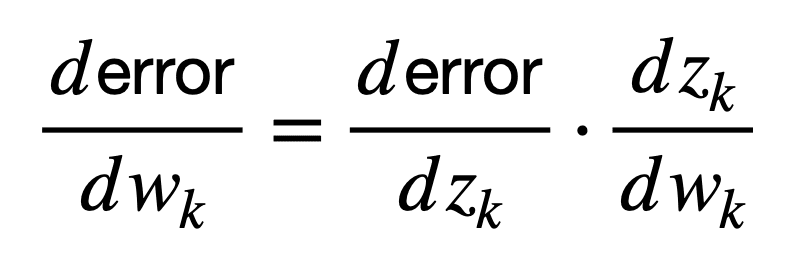

Again, for simplicity, let’s suppose that a weight, wk, is applied to an input of this neuron to produce an output, zk, according to the function that this neuron implements (including the nonlinearity). Then, the weight of this neuron can be connected to the error at the output of the network as follows (the following formula is formally known as the chain rule of calculus, but more on this later in a separate tutorial):

Here, the derivative, dzk / dwk, first connects the weight, wk, to the output, zk, while the derivative, derror / dzk, subsequently connects the output, zk, to the network error.

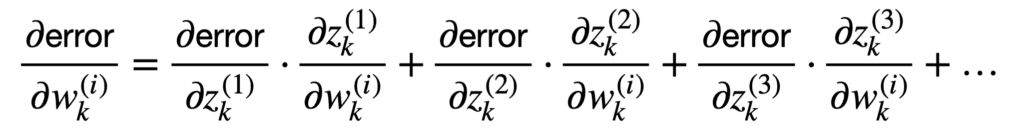

It is more often the case that we’d have many connected neurons populating the network, each attributed a different weight. Since we are more interested in such a scenario, then we can generalise beyond the scalar case to consider multiple inputs and multiple outputs:

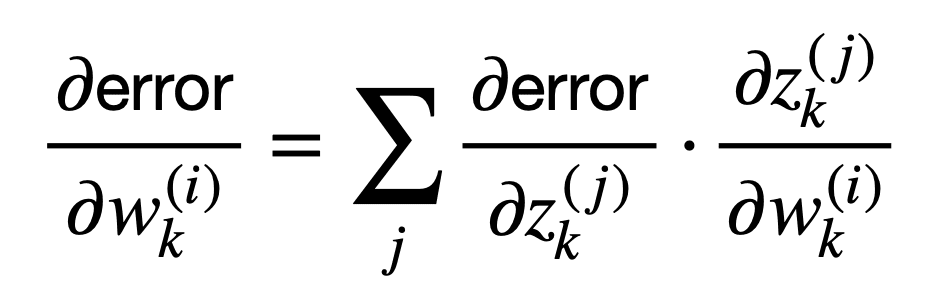

This sum of terms can be represented more compactly as follows:

Or, equivalently, in vector notation using the del operator, ∇, to represent the gradient of the error with respect to either the weights, wk, or the outputs, zk:

The back-propagation algorithm consists of performing such a Jacobian-gradient product for each operation in the graph.

– Page 207, Deep Learning, 2017.

This means that the backpropagation algorithm can relate the sensitivity of the network error to changes in the weights, through a multiplication by the Jacobian matrix, (∂zk / ∂wk)T.

Hence, what does this Jacobian matrix contain?

The Jacobian Matrix

The Jacobian matrix collects all first-order partial derivatives of a multivariate function.

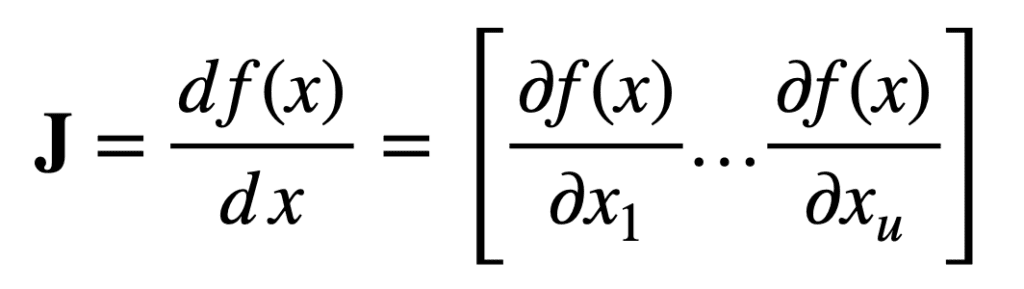

Specifically, consider first a function that maps u real inputs, to a single real output:

Then, for an input vector, x, of length, u, the Jacobian vector of size, 1 × u, can be defined as follows:

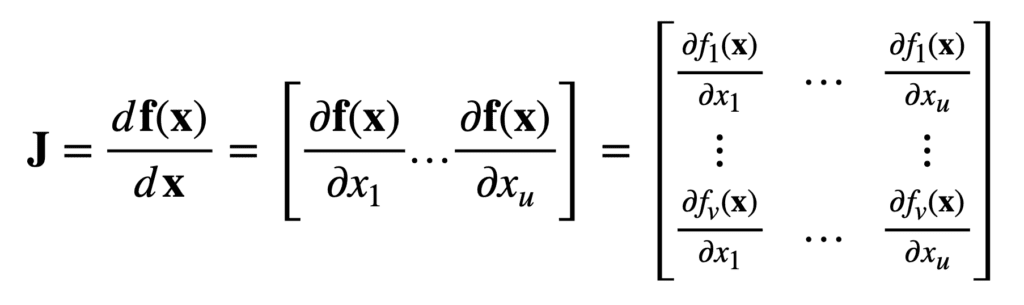

Now, consider another function that maps u real inputs, to v real outputs:

Then, for the same input vector, x, of length, u, the Jacobian is now a v × u matrix, J ∈ ℝv×u, that is defined as follows:

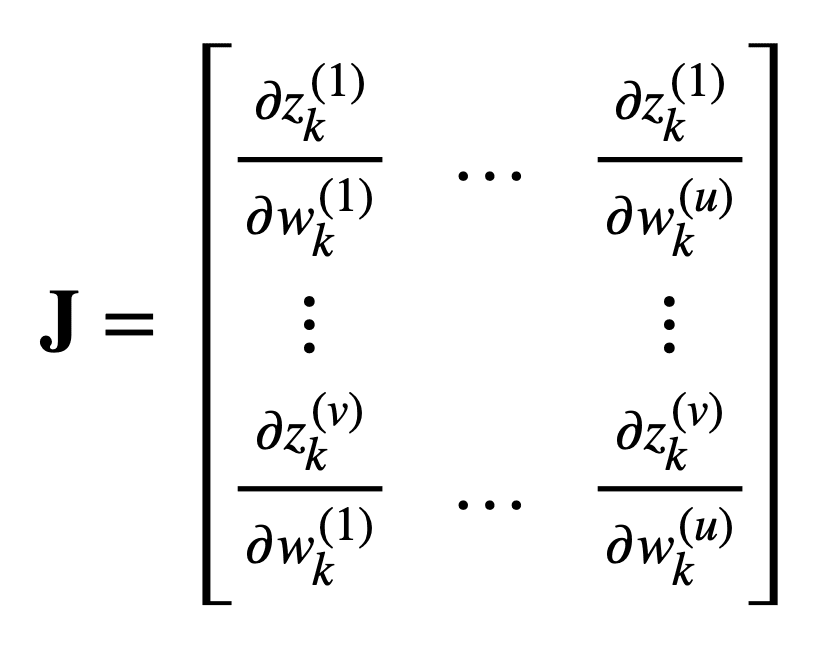

Reframing the Jacobian matrix into the machine learning problem considered earlier, while retaining the same number of u real inputs and v real outputs, we find that this matrix would contain the following partial derivatives:

Want to Get Started With Calculus for Machine Learning?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

Other Uses of the Jacobian

An important technique when working with integrals involves the change of variables (also referred to as, integration by substitution or u-substitution), where an integral is simplified into another integral that is easier to compute.

In the single variable case, substituting some variable, x, with another variable, u, can transform the original function into a simpler one for which it is easier to find an antiderivative. In the two variable case, an additional reason might be that we would also wish to transform the region of terms over which we are integrating, into a different shape.

In the single variable case, there’s typically just one reason to want to change the variable: to make the function “nicer” so that we can find an antiderivative. In the two variable case, there is a second potential reason: the two-dimensional region over which we need to integrate is somehow unpleasant, and we want the region in terms of u and v to be nicer—to be a rectangle, for example.

– Page 412, Single and Multivariable Calculus, 2020.

When performing a substitution between two (or possibly more) variables, the process starts with a definition of the variables between which the substitution is to occur. For example, x = f(u, v) and y = g(u, v). This is then followed by a conversion of the integral limits depending on how the functions, f and g, will transform the u–v plane into the x–y plane. Finally, the absolute value of the Jacobian determinant is computed and included, to act as a scaling factor between one coordinate space and another.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning, 2017.

- Mathematics for Machine Learning, 2020.

- Single and Multivariable Calculus, 2020.

- Deep Learning, 2019.

Articles

Summary

In this tutorial, you discovered a gentle introduction to the Jacobian.

Specifically, you learned:

- The Jacobian matrix collects all first-order partial derivatives of a multivariate function that can be used for backpropagation.

- The Jacobian determinant is useful in changing between variables, where it acts as a scaling factor between one coordinate space and another.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

very interesting

Thank you!

So great, Stefania. Thanks!!!

You’re welcome!

Very interesting, l am working on the application of Jacobian matrix to algebra of order 4

What are the uses of these in simultaneous equations in applied side.

If you would like to solve a system of nonlinear equations by Newton’s method, this is how the Jacobian would be used.

Well explained

Thank you!

Great tutorial about math compact notations! Thank you!

I did not know that Jacobian matrix was behind backpropagation algorithm!

so it is one of the main pillars of machine learning …

because of first order derivatives are behind the way to distribute the cost function (error) into each of the neuron layers’s weights ..as some kind of error sensitivity to all weighs !

Anyway, many times math scares people because of symbols or compact notation …where so many things are expressed in a “simple” expression …they call it an elegant way …

So I like the way where such compact functions are explained in detail, or break-down into an operative way …an later on rebuilt the expression with your own invented symbols :-))

Thank you for the insight!

Nicely explain

Thank you.

Is the lower left term in this formula a typo?

Should it be ∂zk(v) / ∂wk(1) instead?

https://machinelearningmastery.com/wp-content/uploads/2021/07/jacobian_10.png

Indeed, thank you for that!

Thanks Stephanie for a nice article.

Just one small comment that while you defined Jacobian matrix, I guess you want to say $J \in \R^{v\times u}$

Indeed, thank you for this!

Great article, Stefania! Shouldn’t the Jacobian for the first function be of size 1 x u and not u x 1?

Hi Atul…Please clarify which function you are referring to so that I may better assist you.

There is an error in the article. It states that f:R^u -> R^v produces v*u matrix, hence f:R^u -> R^1 should produce 1*u matrix. Previous paragraph however states that f:R^u -> R produces a u*1 matrix. This is an error because 1*u matrix and u*1 matrix are mathematically different objects.

Thank you for the feedback Risto! We will review the items you noted.

Thank you for the article, very clear !

I want to know how can we compute uncertainty of the output of the input from the jacobian? Thank you in advance.

Hi Sarah…The following resource may be of interest to you:

https://www.cambridge.org/core/journals/design-science/article/uncertainty-quantification-and-reduction-using-jacobian-and-hessian-information/957A5E1284BB22E1DC734187E9625396