When it comes to machine learning tasks such as classification or regression, approximation techniques play a key role in learning from the data. Many machine learning methods approximate a function or a mapping between the inputs and outputs via a learning algorithm.

In this tutorial, you will discover what approximation is and why it is important in machine learning and pattern recognition.

After completing this tutorial, you will know:

- What approximation is

- Why approximation is important in machine learning

Let’s get started.

Tutorial Overview

This tutorial is divided into 3 parts; they are:

- What is approximation?

- Approximation when the form of the function is known

- Approximation when the form of the function is unknown

What Is Approximation?

We come across approximation very often. For example, the irrational number π can be approximated by 3.14. A more accurate value is 3.141593, which is still an approximation. Similarly, you can approximate the value of any irrational number, such as sqrt(3) or sqrt(7).
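As a quick illustration of how the quality of an approximation can be measured, this short Python sketch computes the absolute error of the two approximations of π quoted above. It uses `math.pi` as the reference value; that constant is itself a double-precision approximation, but it is far more accurate than either value being tested.

```python
import math

# The two approximations of pi mentioned in the text
approximations = {"3.14": 3.14, "3.141593": 3.141593}

# Absolute error of each approximation relative to math.pi
errors = {label: abs(math.pi - value) for label, value in approximations.items()}

for label, error in errors.items():
    print(f"pi ~ {label}: absolute error = {error:.1e}")
```

The error shrinks from about 1.6e-03 to about 3.5e-07, but it never reaches zero: any finite decimal expansion of π is only an approximation.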

Approximation is used whenever a numerical value, a model, a structure or a function is either unknown or difficult to compute. In this article we’ll focus on function approximation and describe its application to machine learning problems. There are two different cases:

- The function is known, but it is difficult or numerically expensive to compute its exact value. In this case, approximation methods are used to find values that are close to the function's actual values.

- The function itself is unknown, and hence a model or learning algorithm is used to find a function whose outputs are close to those of the unknown function.

Approximation When the Form of the Function Is Known

If the form of a function is known, a well-known method in calculus and mathematics is approximation via a Taylor series. The Taylor series of a function is an infinite sum of terms computed from the function's derivatives at a single point; truncating it after a finite number of terms gives an approximation of the function near that point. The Taylor series expansion of a function is discussed in more detail in a separate tutorial.
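To make the idea concrete, here is a minimal Python sketch that truncates the Taylor series of $e^x$ about a point $a$. Because every derivative of $e^x$ is $e^x$, the first $N$ terms are:

$$e^x \approx \sum_{k=0}^{N-1} \frac{e^a}{k!}(x-a)^k$$

The function name `taylor_exp` and its parameters are illustrative choices, not a library API.

```python
import math


def taylor_exp(x, n_terms, a=0.0):
    """Approximate exp(x) with the first n_terms of its Taylor series about a.

    Every derivative of exp is exp itself, so f^(k)(a) = exp(a) for all k.
    """
    return sum(math.exp(a) * (x - a) ** k / math.factorial(k) for k in range(n_terms))


# More terms give a closer approximation of e = exp(1)
for n in (2, 4, 8):
    approx = taylor_exp(1.0, n)
    print(f"{n} terms: {approx:.6f} (error {abs(approx - math.e):.1e})")
```

With `a=0.0` this is the Maclaurin series. Choosing `a` close to `x`, for example `taylor_exp(2.1, 4, a=2.0)`, keeps the truncation error small even with few terms.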

Another well-known approximation method in calculus and mathematics is Newton's method. It approximates the roots of a differentiable function, such as a polynomial, by repeatedly refining an initial guess. This makes it useful for approximating quantities such as the square root or the reciprocal of a number.
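The following minimal Python sketch applies Newton's update, $x_{n+1} = x_n - f(x_n)/f'(x_n)$, to the two examples just mentioned. The square root of $a$ is the positive root of $f(x) = x^2 - a$, and the reciprocal $1/a$ is the root of $f(x) = 1/x - a$. For $a > 0$, the reciprocal iteration only converges when the starting guess satisfies $0 < a x_0 < 2$. The helper names `newton`, `approx_sqrt` and `approx_reciprocal`, the tolerance and the starting points are illustrative assumptions, not a standard API.

```python
import math


def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Approximate a root of f with Newton's method, starting from x0."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)  # Newton update: x_{n+1} = x_n - f(x_n) / f'(x_n)
        x -= step
        if abs(step) < tol:  # stop once the updates become negligible
            break
    return x


def approx_sqrt(a):
    """Square root of a > 0 as the positive root of f(x) = x**2 - a."""
    return newton(lambda x: x * x - a, lambda x: 2 * x, x0=max(a, 1.0))


def approx_reciprocal(a, x0):
    """Reciprocal 1/a as the root of f(x) = 1/x - a; needs 0 < a * x0 < 2 for a > 0."""
    return newton(lambda x: 1.0 / x - a, lambda x: -1.0 / (x * x), x0=x0)


print(approx_sqrt(3.0), math.sqrt(3.0))
print(approx_reciprocal(3.0, x0=0.1), 1 / 3)
```

Only a handful of iterations are needed in both cases, because Newton's method converges quadratically once the guess is close to the root.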

Approximation When the Form of the Function Is Unknown

In data science and machine learning, it is assumed that an underlying function describes the relationship between the inputs and outputs, but the form of this function is unknown. Here, we discuss several machine learning problems that use approximation in this setting.

Approximation in Regression

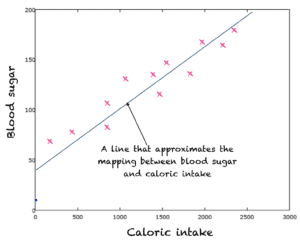

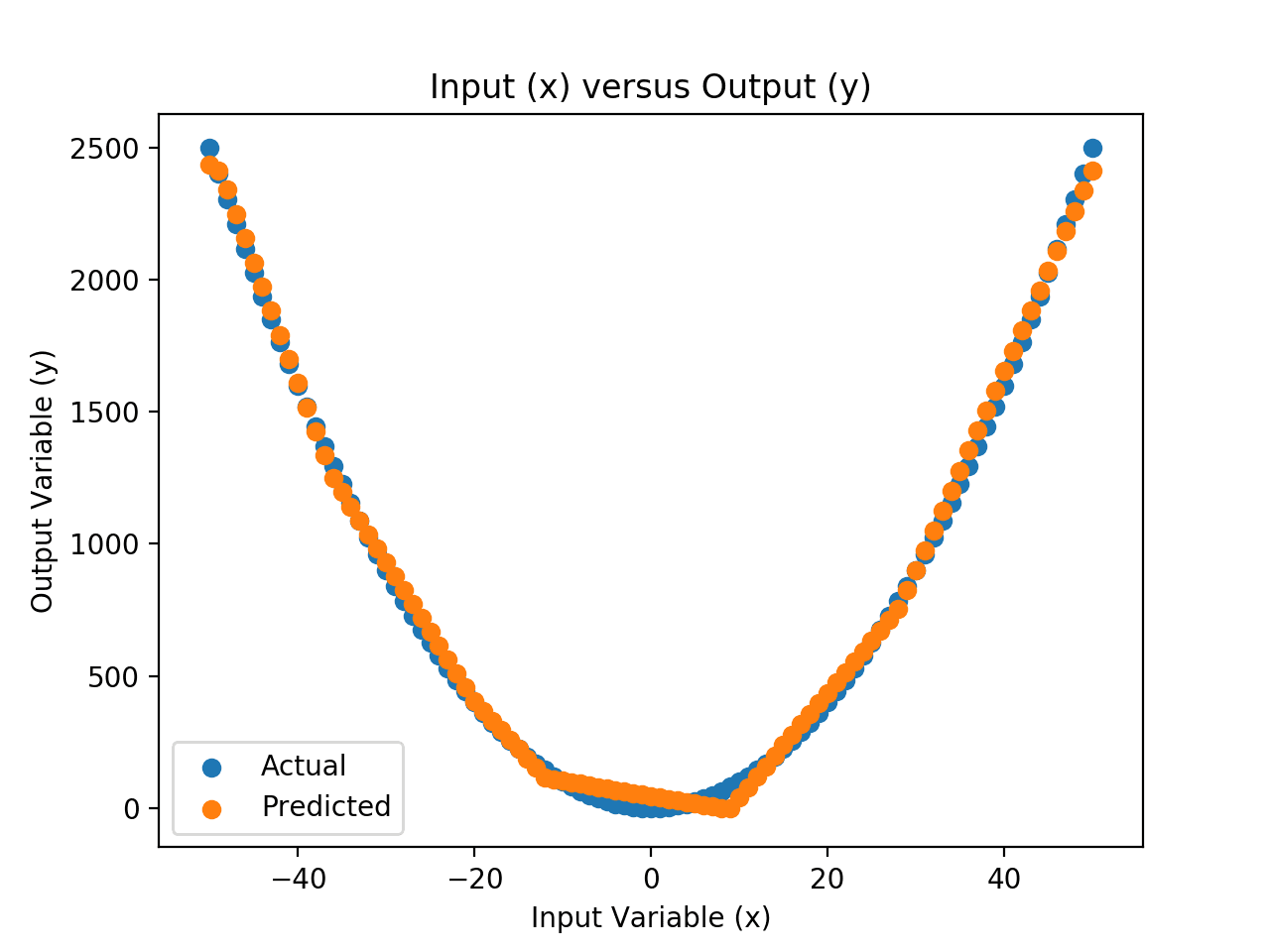

Regression involves the prediction of an output variable when given a set of inputs. In regression, the function that truly maps the input variables to outputs is not known. It is assumed that some linear or non-linear regression model can approximate the mapping of inputs to outputs.

For example, we may have data on daily calorie intake and the corresponding blood sugar level. To describe the relationship between the calorie input and the blood sugar output, we can assume a straight-line mapping. The straight line is then an approximation of the true mapping from inputs to outputs, and a learning method such as the method of least squares is used to find it.
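A minimal NumPy sketch of the method of least squares for a straight line follows. The data are synthetic values generated from a noisy line purely for illustration. They are not real calorie or blood-sugar measurements, and the slope, intercept, noise level and sample size are arbitrary assumptions.

```python
import numpy as np

# Synthetic, made-up data (NOT real measurements): a noisy straight line
rng = np.random.default_rng(seed=0)
calories = rng.uniform(1500, 3000, size=50)                      # hypothetical inputs
blood_sugar = 0.02 * calories + 60 + rng.normal(0, 5, size=50)   # hypothetical outputs

# Design matrix with a column of ones so the model is y ~ slope * x + intercept
X = np.column_stack([calories, np.ones_like(calories)])

# Least squares: pick (slope, intercept) that minimize the sum of squared residuals
(slope, intercept), *_ = np.linalg.lstsq(X, blood_sugar, rcond=None)

print(f"fitted line: y = {slope:.4f} * x + {intercept:.2f}")
```

`np.polyfit(calories, blood_sugar, 1)` solves the same least-squares problem and returns the same slope and intercept, with the highest-degree coefficient first.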

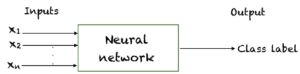

Approximation in Classification

Neural networks are a classic example of models that approximate functions in classification problems. It is assumed that the neural network as a whole can approximate the true function that maps the inputs to the class labels. Gradient descent or another learning algorithm is then used to learn this approximation by adjusting the weights of the neural network.
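The NumPy sketch below trains a tiny neural network with plain full-batch gradient descent. The network has one hidden layer of 8 tanh units and a sigmoid output, and the data form a synthetic XOR-style dataset whose classes cannot be separated by a straight line. The architecture, learning rate, number of epochs and data are illustrative assumptions; practical code would normally rely on a deep learning library rather than hand-written gradients.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic XOR-style data: four blobs of 25 points each.
# Label 1 when both coordinates have the same sign, label 0 otherwise.
centers = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0], [-1.0, 1.0]])
X = np.vstack([c + 0.2 * rng.standard_normal((25, 2)) for c in centers])
y = np.repeat([1.0, 1.0, 0.0, 0.0], 25).reshape(-1, 1)

# Weights of a 2-8-1 network: tanh hidden layer, sigmoid output
W1 = rng.standard_normal((2, 8))
b1 = 0.5 * rng.standard_normal(8)
W2 = 0.5 * rng.standard_normal((8, 1))
b2 = np.zeros(1)


def forward(X):
    """Return hidden activations and predicted probabilities of class 1."""
    h = np.tanh(X @ W1 + b1)
    p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    return h, p


def bce(p, y, eps=1e-12):
    """Mean binary cross-entropy loss."""
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


learning_rate = 0.3
initial_loss = bce(forward(X)[1], y)

for epoch in range(5000):
    h, p = forward(X)
    # Backpropagation: gradient of the mean loss w.r.t. each parameter
    d_out = (p - y) / len(X)                  # dLoss / d(output pre-activation)
    dW2, db2 = h.T @ d_out, d_out.sum(axis=0)
    d_hid = (d_out @ W2.T) * (1.0 - h ** 2)   # tanh'(z) = 1 - tanh(z)**2
    dW1, db1 = X.T @ d_hid, d_hid.sum(axis=0)
    # Gradient descent step: move every weight against its gradient
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

final_loss = bce(forward(X)[1], y)
accuracy = float(np.mean((forward(X)[1] > 0.5) == (y == 1.0)))
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, training accuracy {accuracy:.2f}")
```

The hidden layer is what lets the network approximate a non-linear decision boundary here; a single sigmoid unit could only draw a straight line.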

Approximation in Unsupervised Learning

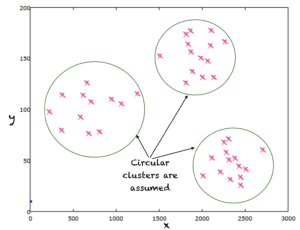

The figure shows a typical example of unsupervised learning: points in 2D space, none of which is labeled. A clustering algorithm generally assumes a model according to which each point can be assigned to a class or label. For example, k-means assigns each point to its nearest cluster centroid, which implicitly assumes that clusters are roughly circular (roughly spherical, or n-spheres, for multi-dimensional data); points lying in the same circle therefore receive the same label or class. In the figure, the relationship between the points and their labels is approximated via these circular regions.

A clustering algorithm approximates a model that determines clusters or unknown labels of input points
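The assignment and update steps of k-means (Lloyd's algorithm) can be sketched in a few lines of NumPy. Each point is labeled with its nearest centroid, each centroid then moves to the mean of its points, and the two steps repeat until the centroids stop moving. The three-blob dataset is synthetic. The deterministic farthest-point seeding in the hypothetical helper `farthest_point_init` is a simple choice made here for reproducibility; library implementations such as scikit-learn's `KMeans` default to k-means++ seeding instead.

```python
import numpy as np


def farthest_point_init(X, k):
    """Hypothetical seeding helper: start at X[0], then repeatedly add the
    point farthest from all centroids chosen so far."""
    centroids = [X[0]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        sq_dists = ((X[:, None, :] - chosen[None, :, :]) ** 2).sum(axis=2)
        centroids.append(X[sq_dists.min(axis=1).argmax()])
    return np.array(centroids)


def kmeans(X, k, max_iter=100):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean update."""
    centroids = farthest_point_init(X, k)
    for _ in range(max_iter):
        # Assignment step: label each point with its nearest centroid
        sq_dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = sq_dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


# Synthetic unlabeled data: three round blobs in 2D
rng = np.random.default_rng(seed=0)
true_centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
X = np.vstack([c + 0.5 * rng.standard_normal((50, 2)) for c in true_centers])

labels, centroids = kmeans(X, k=3)
print(np.round(centroids, 2))
```

k-means works best when the true clusters are roughly round and similar in size, which is exactly the circular model it assumes.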

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Maclaurin series

- Taylor series

If you explore any of these extensions, I’d love to know. Post your findings in the comments below.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Resources

- Jason Brownlee’s excellent resource on Calculus Books for Machine Learning

Books

- Pattern Recognition and Machine Learning by Christopher M. Bishop, 2006.

- Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville, 2016.

- Thomas’ Calculus, 14th edition, based on the original works of George B. Thomas, revised by Joel Hass, Christopher Heil, and Maurice Weir, 2017.

Summary

In this tutorial, you discovered approximation and its role in machine learning. Specifically, you learned:

- What approximation is

- Approximation when the form of a function is known

- Approximation when the form of a function is unknown

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.