It is hyperbole to say deep learning is achieving state-of-the-art results across a range of difficult problem domains. A fact, but also hyperbole.

There is a lot of excitement around artificial intelligence, machine learning and deep learning at the moment. It is also an amazing opportunity to get in on the ground floor of some really powerful tech.

I try hard to convince friends, colleagues and students to get started in deep learning and bold statements like the above are not enough. It requires stories, pictures and research papers.

In this post you will discover amazing and recent applications of deep learning that will inspire you to get started in deep learning.

Getting started in deep learning does not have to mean studying the equations for the next 2-3 years. It could mean downloading Keras and running your first model in 5 minutes flat. Start with applied deep learning. Build things. Get excited and turn it into code and systems.

Kick-start your project with my new book Deep Learning With Python, including step-by-step tutorials and the Python source code files for all examples.

I have been wanting to write this post for a while. Let’s get started.

Inspirational Applications of Deep Learning

Photo by Nick Kenrick, some rights reserved.

Overview

Below is the list of the specific examples we are going to look at in this post.

Not all of the examples are technology that is ready for prime time, but guaranteed, they are all examples that will get you excited.

Some are examples that seem ho hum if you have been around the field for a while. In the broader context, they are not ho hum. Not at all.

Frankly, to an old AI hacker like me, some of these examples are a slap in the face. Problems that I simply did not think we could tackle for decades, if at all.

I’ve focused on visual examples because we can look at screenshots and videos to immediately get an idea of what the algorithm is doing, but there are just as many if not more examples in natural language with text and audio data that are not listed.

Here’s the list:

- Colorization of Black and White Images.

- Adding Sounds To Silent Movies.

- Automatic Machine Translation.

- Object Classification in Photographs.

- Automatic Handwriting Generation.

- Character Text Generation.

- Image Caption Generation.

- Automatic Game Playing.


1. Automatic Colorization of Black and White Images

Image colorization is the problem of adding color to black and white photographs.

Traditionally this was done by hand with human effort because it is such a difficult task.

Deep learning can use the objects and their context within the photograph to color the image, much like a human operator might approach the problem.

A visual and highly impressive feat.

This capability leverages the high-quality, very large convolutional neural networks trained for ImageNet, co-opted for the problem of image colorization.

Generally the approach involves the use of very large convolutional neural networks and supervised layers that recreate the image with the addition of color.
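To make the supervised setup a little more concrete, here is a minimal sketch of how a training pair could be built: the color photo is the target and a grayscale copy of it is the input. The luma weights (ITU-R BT.601) and the function names are assumptions for illustration only, and published systems may work in a different color space.

```python
# Sketch only: build (input, target) pairs for supervised colorization.
# The BT.601 luma weights and the function names are illustrative assumptions.

def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) pixels (0-255) into rows of gray values."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]

def make_training_pair(rgb_image):
    """The network sees the grayscale image and must recreate the color one."""
    return to_grayscale(rgb_image), rgb_image

# A tiny made-up 2x2 "photo": red, green, blue and white pixels.
color = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]

gray_input, color_target = make_training_pair(color)
print(gray_input)  # [[76, 150], [29, 255]]
```

Because any color photo can be turned into such a pair automatically, no hand labeling is needed to create the training data.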

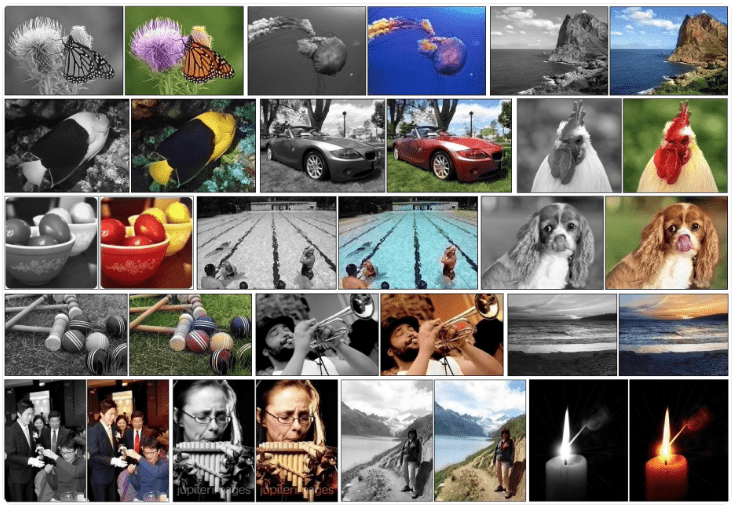

Colorization of Black and White Photographs

Image taken from Richard Zhang, Phillip Isola and Alexei A. Efros.

Impressively, the same approach can be used to colorize still frames of black and white movies:

https://www.youtube.com/watch?v=_MJU8VK2PI4

Further Reading

Papers

- Deep Colorization [pdf], 2015

- Colorful Image Colorization [pdf] (website), 2016

- Learning Representations for Automatic Colorization [pdf] (website), 2016

- Image Colorization with Deep Convolutional Neural Networks [pdf], 2016

2. Automatically Adding Sounds To Silent Movies

In this task the system must synthesize sounds to match a silent video.

The system is trained using 1000 examples of video with sound of a drum stick striking different surfaces and creating different sounds. A deep learning model associates the video frames with a database of pre-recorded sounds in order to select a sound to play that best matches what is happening in the scene.
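The selection step can be pictured as a nearest-neighbor lookup. The sketch below assumes the model has already turned the video frames into a feature vector, and that each stored sound has a feature vector of its own. The sound names, the vectors and the use of cosine similarity are all made up for illustration and are not taken from the paper.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def select_sound(predicted_features, sound_bank):
    """Return the name of the stored sound closest to the predicted features."""
    return max(
        sound_bank,
        key=lambda name: cosine_similarity(predicted_features, sound_bank[name]),
    )

# Hypothetical database of pre-recorded sounds and their feature vectors.
sound_bank = {
    "wood_tap": [0.9, 0.1, 0.0],
    "metal_clang": [0.1, 0.9, 0.2],
    "leaf_rustle": [0.0, 0.2, 0.9],
}

# Hypothetical features predicted by the model for one moment in the video.
predicted = [0.2, 0.8, 0.1]
best = select_sound(predicted, sound_bank)
print(best)  # metal_clang
```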

The system was then evaluated using a Turing-test-like setup where humans had to determine which video had the real sounds and which had the fake (synthesized) sounds.

A very cool application of both convolutional neural networks and LSTM recurrent neural networks.

Further Reading

- Artificial intelligence produces realistic sounds that fool humans

- Machines can generate sound effects that fool humans

Papers

- Visually Indicated Sounds (webpage), 2015

3. Automatic Machine Translation

This is a task where, given a word, phrase or sentence in one language, the system must automatically translate it into another language.

Automatic machine translation has been around for a long time, but deep learning is achieving top results in two specific areas:

- Automatic Translation of Text.

- Automatic Translation of Images.

Text translation can be performed without any preprocessing of the sequence, allowing the algorithm to learn the dependencies between words and their mapping to a new language. Stacked networks of large LSTM recurrent neural networks are used to perform this translation.

As you would expect, convolutional neural networks are used to identify images that have letters and where the letters are in the scene. Once identified, they can be turned into text, translated and the image recreated with the translated text. This is often called instant visual translation.

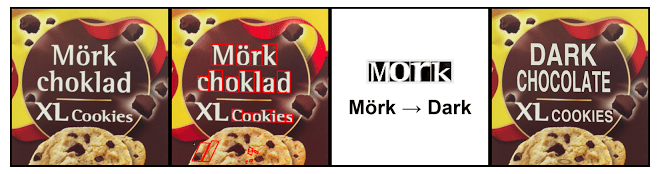

Instant Visual Translation

Example of instant visual translation, taken from the Google Blog.

Further Reading

It’s hard to find good resources for this example. If you know of any, please leave a comment.

Papers

- Sequence to Sequence Learning with Neural Networks [pdf], 2014

- Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation [pdf], 2014

- Deep Neural Networks in Machine Translation: An Overview [pdf], 2015

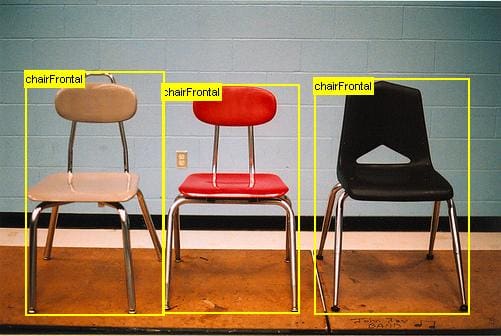

4. Object Classification and Detection in Photographs

This task requires the classification of objects within a photograph as one of a set of previously known objects.

State-of-the-art results have been achieved on benchmark examples of this problem using very large convolutional neural networks. A breakthrough on this problem was the result by Alex Krizhevsky et al. on the ImageNet classification problem, using a network called AlexNet.
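A classifier of this kind finishes by turning one score per known class into probabilities and reporting the most likely labels. The sketch below shows only that last step, a softmax followed by a top-k ranking. The labels and scores are invented, and in a real system the scores would come from the network.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(labels, scores, k=5):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Made-up class scores for a single photograph.
labels = ["leopard", "jaguar", "cheetah", "snow leopard", "Egyptian cat", "mushroom"]
scores = [4.0, 2.5, 2.0, 1.5, 0.5, -1.0]

for label, prob in top_k(labels, scores, k=3):
    print(f"{label}: {prob:.3f}")
```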

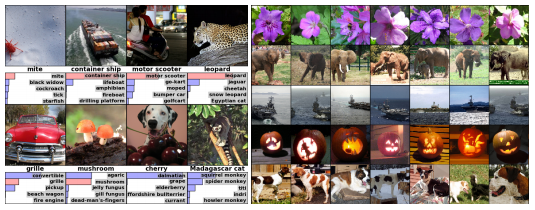

Example of Object Classification

Taken from ImageNet Classification with Deep Convolutional Neural Networks

A more complex variation of this task called object detection involves specifically identifying one or more objects within the scene of the photograph and drawing a box around them.

Example of Object Detection within Photographs

Taken from the Google Blog.

Further Reading

Papers

- ImageNet Classification with Deep Convolutional Neural Networks [pdf], 2012

- Some Improvements on Deep Convolutional Neural Network Based Image Classification [pdf], 2013

- Scalable Object Detection using Deep Neural Networks [pdf], 2013

- Deep Neural Networks for Object Detection [pdf], 2013

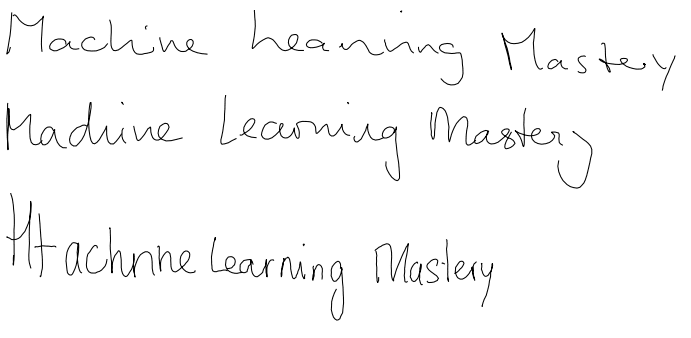

5. Automatic Handwriting Generation

This is a task where, given a corpus of handwriting examples, the system must generate new handwriting for a given word or phrase.

The handwriting is provided as a sequence of coordinates used by a pen when the handwriting samples were created. From this corpus the relationship between the pen movement and the letters is learned and new examples can be generated ad hoc.
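One common way to feed pen data like this to a sequence model is as offsets from the previous point, plus a flag marking where the pen lifts off the page. The sketch below assumes that representation. The function name and the sample strokes are hypothetical.

```python
def to_offsets(strokes):
    """Flatten strokes into (dx, dy, end_of_stroke) steps.

    strokes: a list of strokes, each a list of (x, y) pen positions.
    The first offset is measured from the origin (0, 0).
    """
    steps = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            end_of_stroke = 1 if i == len(stroke) - 1 else 0
            steps.append((x - prev_x, y - prev_y, end_of_stroke))
            prev_x, prev_y = x, y
    return steps

# Two made-up strokes: the pen lifts after the third point.
strokes = [[(0, 0), (2, 3), (4, 3)], [(6, 0), (6, 5)]]
steps = to_offsets(strokes)
print(steps)
# [(0, 0, 0), (2, 3, 0), (2, 0, 1), (2, -3, 0), (0, 5, 1)]
```

A model trained on such steps predicts the next offset and pen flag given everything written so far, which is what lets it produce new pen movements.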

What is fascinating is that different styles can be learned and then mimicked. I would love to see this work combined with some forensic handwriting analysis expertise.

Sample of Automatic Handwriting Generation

Further Reading

Papers

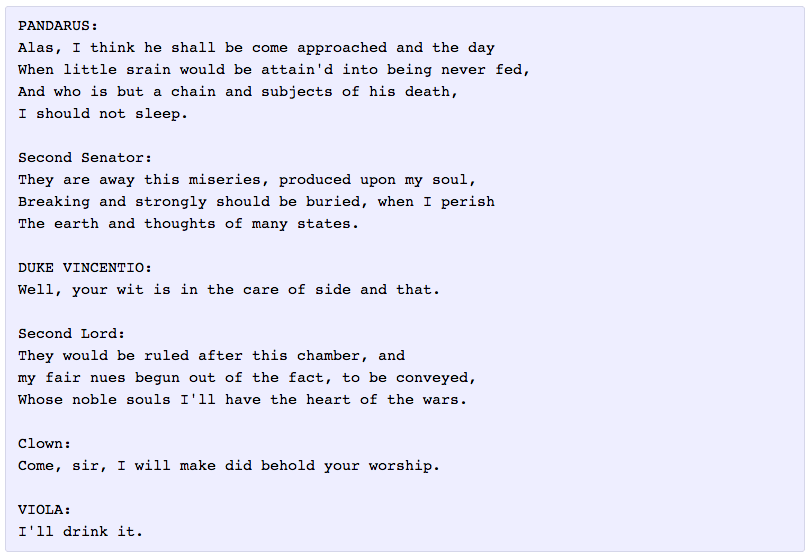

6. Automatic Text Generation

This is an interesting task, where a corpus of text is learned and from this model new text is generated, word-by-word or character-by-character.

The model is capable of learning how to spell, punctuate, form sentences and even capture the style of the text in the corpus.

Large recurrent neural networks are used to learn the relationship between items in the sequences of input strings and then generate text. More recently LSTM recurrent neural networks are demonstrating great success on this problem using a character-based model, generating one character at a time.
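The generate-one-character-at-a-time loop is easy to show without a neural network. The sketch below swaps the LSTM for a much simpler stand-in, a lookup table of which characters followed each short context in the corpus, so it illustrates the sampling loop rather than the model itself. The tiny corpus and the function names are made up.

```python
import random
from collections import defaultdict

def train_char_model(text, order=3):
    """Map each context of `order` characters to the characters that followed it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model

def generate(model, seed, length, rng):
    """Extend `seed` one character at a time by sampling from the model.

    `seed` must be as long as the order the model was trained with.
    """
    order = len(seed)
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])
        if not choices:  # context never seen in the corpus, so stop early
            break
        out += rng.choice(choices)
    return out

corpus = "to be or not to be that is the question "
model = train_char_model(corpus, order=3)
print(generate(model, "to ", 30, random.Random(0)))
```

An LSTM replaces the lookup table with a learned function of the whole preceding sequence, which is why it can pick up longer-range structure such as markup and style.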

Andrej Karpathy provides many examples in his popular blog post on the topic including:

- Paul Graham essays

- Shakespeare

- Wikipedia articles (including the markup)

- Algebraic Geometry (with LaTeX markup)

- Linux Source Code

- Baby Names

Automatic Text Generation Example of Shakespeare

Example taken from Andrej Karpathy’s blog post

Further Reading

- The Unreasonable Effectiveness of Recurrent Neural Networks

- Auto-Generating Clickbait With Recurrent Neural Networks

Papers

- Generating Text with Recurrent Neural Networks [pdf], 2011

- Generating Sequences With Recurrent Neural Networks [pdf], 2013

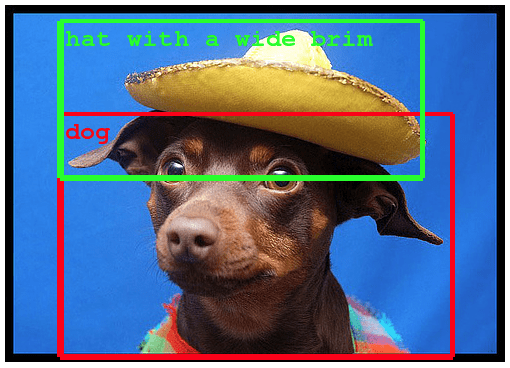

7. Automatic Image Caption Generation

Automatic image captioning is the task where given an image the system must generate a caption that describes the contents of the image.

In 2014, there was an explosion of deep learning algorithms achieving very impressive results on this problem, leveraging the work from top models for object classification and object detection in photographs.

Once you can detect objects in photographs and generate labels for those objects, you can see that the next step is to turn those labels into a coherent sentence description.

This is one of those results that knocked my socks off and still does. Very impressive indeed.

Generally, the systems involve the use of very large convolutional neural networks for the object detection in the photographs and then a recurrent neural network like an LSTM to turn the labels into a coherent sentence.

Automatic Image Caption Generation

Sample taken from Andrej Karpathy, Li Fei-Fei

These techniques have also been expanded to automatically caption video.

Further Reading

- A picture is worth a thousand (coherent) words: building a natural description of images

- Rapid Progress in Automatic Image Captioning

Papers

- Deep Visual-Semantic Alignments for Generating Image Descriptions [pdf] (and website), 2015

- Explain Images with Multimodal Recurrent Neural Networks [pdf], 2014

- Long-term Recurrent Convolutional Networks for Visual Recognition and Description [pdf], 2014

- Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models [pdf], 2014

- Sequence to Sequence — Video to Text [pdf], 2015

8. Automatic Game Playing

This is a task where a model learns how to play a computer game based only on the pixels on the screen.

This very difficult task is the domain of deep reinforcement learning models and is the breakthrough that DeepMind (now part of Google) is renowned for achieving.

This work was expanded and culminated in Google DeepMind’s AlphaGo that beat the world master at the game Go.
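The DeepMind Atari work builds on Q-learning, with a deep network estimating the value of each action from the screen pixels. As a rough illustration of the underlying update only, the sketch below uses a small lookup table in place of the network. The states, actions and hyperparameter values are made up.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one Q-learning update in place and return the new value.

    q: dict mapping state -> dict of action -> estimated value.
    alpha is the learning rate and gamma the discount factor (assumed values).
    """
    # Value of the best action available from the next state (0 if unknown).
    best_next = max(q[next_state].values()) if next_state in q else 0.0
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]

# Hypothetical two-state table: moving right from s1 is already known to pay off.
q = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 0.0, "right": 1.0},
}

new_value = q_update(q, "s0", "right", reward=0.0, next_state="s1")
print(new_value)  # 0.45
```

Repeating this update over many played frames is what lets value flow backwards from rewarding moments to the actions that led up to them.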

Further Reading

- Deep Reinforcement Learning

- DeepMind YouTube Channel

- Deep Q Learning Demo

- DeepMind’s AI is an Atari gaming pro now

Papers

- Playing Atari with Deep Reinforcement Learning [pdf], 2013

- Human-level control through deep reinforcement learning, 2015

- Mastering the game of Go with deep neural networks and tree search, 2016

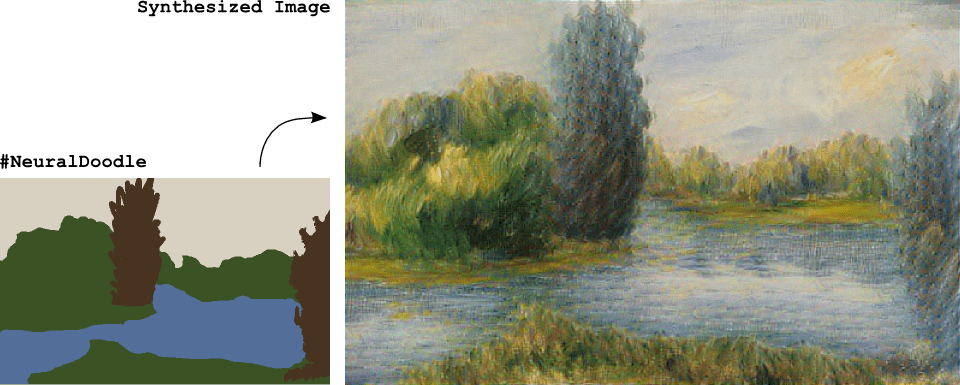

Additional Examples

Below are some additional examples to those listed above.

- Automatic speech recognition.

- Automatic speech understanding.

- Automatically focus attention on objects in images.

- Recurrent Models of Visual Attention [pdf], 2014

- Automatically answer questions about objects in a photograph.

- Automatically turning sketches into photos.

- Convolutional Sketch Inversion [pdf], 2016

- Automatically create stylized images from rough sketches.

Automatically Create Styled Image From Sketch

Image taken from NeuralDoodle

More Resources

There are a lot of great resources, talks and more to help you get excited about the capabilities and potential for deep learning.

Below are a few additional resources to help get you excited.

- The Unreasonable Effectiveness of Deep Learning, talk by Yann LeCun in 2014

- Awesome Deep Vision List of top deep learning computer vision papers

- The wonderful and terrifying implications of computers that can learn, TED talk by Jeremy Howard

- Which algorithm has achieved the best results, list of top results on computer vision datasets

- How Neural Networks Really Work, Geoffrey Hinton 2016

Summary

In this post you have discovered 8 applications of deep learning that are intended to inspire you.

This show-rather-than-tell approach is expected to cut through the hyperbole and give you a clearer idea of the current and future capabilities of deep learning technology.

Do you know of any inspirational examples of deep learning not listed here? Let me know in the comments.

Fantastic !!

I’m glad you found it useful Nader.

Hi Jason, lovely examples, great links 🙂 This is an awesome post. Thank you!

I’m glad you found the post useful Saty.

Hi Jason, Nice article.

lately there has been lots of talk of deep learning applied to create tools which can generate

requirements – designs – software code – create builds – test builds as well help with deploying builds to various environments.

Is it really possible to map creative functionality of human brain with ml?

Interesting, I have not seen that.

I’m not sure about mapping creative functions of the brain, but deep learning and other AI methods can be creative (stochastic within the bounds of what we think as aesthetically pleasing).

Thank you for the examples. I found the automatic colorization so remarkable that I might start working on a project with it.

Thanks, I’m glad to hear that Arthur.

Very nice and useful article, thanks a lot

Thanks Rodolphe.

You know what, Jason Brownlee, I started my PhD this year in August. I was stressing over finding a good path for research. I eventually decided to work on deep learning, and after a lot of searching on the internet I found your post, which cleared the stress clouds in my brain. Thank you so much Jason 🙂

Charan Gudla

Hang in there Charan Gudla, let me know how you go with your research.

hi brother.. i am doing my M tech,and i want do my project in this area..could you please suggest any problem

Perhaps one of the examples in the above post?

Thank you. This post is among the best posts on deep learning applications and abilities.

Thanks Farhad.

Very informative . Thx.

Thanks Satis.

Many thanks dear prof.

Could you please add codes for these applications

Hi Mustafa, great idea! Many of these projects are academic and the code is open source.

Perhaps you could help to track down the github repositories?

Hi dear jason

Tnx for great article, i have a question that how can i use deep learning for recommender system?

Hi hamid, I don’t have an example of deep learning for recommender systems.

I don’t see why you couldn’t slot a deep learning algorithm in for a model of item-based or user-based collaborative filtering.

Hey Jason,

Just a quick question: I noticed that the examples provided are geared more towards image and audio applications. Just wondering if deep learning is just as applicable in traditional areas such as business data analysis?

Thanks

Deep learning is best suited to analog type data like text, images and audio.

It can be used on standard tabular data, but you will very likely do better using xgboost or more traditional machine learning methods.

Hey Jason,

I see you have covered Automatic Image Caption generation, you could add a 9th application of automatic image generation based on the caption or rather text. It comes under the concept of generative modelling and has received many compelling results using GANS.

Papers : https://arxiv.org/abs/1406.2661, https://arxiv.org/abs/1605.05396

Thanks

Thanks Tejas.

Hi Jason

There is a very nice app called Deep Art Effects that uses Deep Learning algorithms to create art. You upload a photo, choose an art style and a neural network interprets it and turns your photo into a “painting” in this particular style. A fun aspect of Deep Learning!

Thanks for the note Christian.

Thank you…Your blog is very interesting.. I like to do my research in deep learning… can you note me the research areas…

Thanks Aruna.

Sorry, I am no longer an academic, my focus is industrial machine learning. My best advice is to talk to your advisor.

Very nice post. Do you think machine learning and time series methods are better suited to prediction/forecasting problems involving regression?

I am talking about problems not involving vision and audio.

I’m not sure I follow your question, perhaps you can restate it?

Are deep learning methods suited for non-vision non-audio problems?

Say for a typical time series, do you think deep learning outperforms traditional time series and machine learning methods?

I am talking about time series like financial time series, electricity demand etc. etc.

Deep learning can be used for a wide range of problems.

Is deep learning state of the art for finance? I don’t know. I expect the people exploring this question are keeping findings secret for obvious reasons.

I have seen some promising results for LSTMs for time series forecasting, but they take a lot of training.

Great thanks it really inspires me.

Thanks Jerry, I’m glad to hear that.

I am waooed. I have being searching for a topic and here comes the ONE STOP SHOP. Imagine this fantastic site after a years search, How I wish I found it earlier. Any ways, better late than never. Thank u Dr.

Thanks.

Very informative and easy to understand. Thanks Jason!!

Thanks Krishna, I’m glad it helped.

Wonderful!!..Excellent..Thank you so much jason.

Thanks, I’m glad it helped.

Thanks for very informative article

I’m glad it helped.

Many thanks for examples. Some components and the ideas were extremely useful to the project of the self-organized adaptive systems of control of arbitrary engineering systems. Once again thanks.

I’m glad to hear that.

An interesting post. Jason, thanks for the wide list of examples and links. I have started following you.

Thanks.

Hello Jason,

Very Interesting and useful list of applications.

This post dates back to 2016, and a lot of advances in ML/DL have been made since then. Do you have an updated list of apps or resources for solving the applications mentioned above?

It might be time for me to create a new list, thanks for the ping.

What is the difference between deep learning and zero-shot learning ? what is the challenges of deep learning that solved with zero-shot learning?

Zero shot learning is learning with a model (any ML model, not just deep learning) without the model having seen any examples before.

Hi Jason,

This is very useful and interesting. I am also very interested in applying Deep Learning especially image recognition into diagnosis field. Do you have any examples? I am very curious about this field.

I don’t have examples of medical diagnosis sorry.

This might be a good place to start:

https://machinelearningmastery.com/start-here/#deeplearning

Thank you for the information. Deep Learning is also known as deep structured learning and is a subfield of machine learning methods based on learning data representations, concerned with algorithms inspired by the structure and function of the brain called artificial neural networks.

Where did you pick-up “deep structured learning” from?

finally i have come to the right place

I’m glad to hear that.

Nice post! Found the image caption generator pretty cool would work on something similar soon!

Thanks.

Awesome post.

Also, here is a list of deep learning projects sorted into their respective categories. The list is constantly updated too.

https://deeplink.ml

Thanks for sharing.

Thanks for this informative article on deep learning. All the applications mentioned are very innovative.

Thanks.

I found Automatic Game playing amazing!

I read about Deep Learning Technologies and wanted to read about its applications, thank for providing it Jason.

It is an interesting area, but not really useful at work.

hello

Your book on deep learning is very good, but I can’t find it in my country and I can’t buy it because we are sanctioned (I live in Iran).

How can we download it?

Thanks.

My books can be purchased and downloaded directly from my website:

https://machinelearningmastery.com/products/

Thank you Jason! The show rather than tell is always a good approach to convince people and specially when it’s about technology. In an era where AI and deep learning are being developed and implemented every single day to make life easier, it shall always be a curious subject to get started with. The 8 applications should change the mind of many. But I believe you missed out “self driven cars”- one of my favorites. Nonetheless, good job!

Thanks, great example!

I am new in EEG signal analysis. I would like to Cellular neural network. I would like to know from starting of the Cellular neural network. How cellular neural network is working? Can you please guide me?

Thank you

What is a cellular neural network?

Thanks, for this really helped with my project

You’re welcome!

Dear sir Iam very much interesting to learn machine and deep learning and wants to do some real time projects for the purpose of software job company oriented.Please guide me what are the skills need to learn and how can i learn real time projects on ML and DL?

You can get started here:

https://machinelearningmastery.com/start-here/

Dear Jason this is one of best post I have gone through and the topics are quite wide which further can be divided to many research projects, I feel you should give us some insights in healthcare.

Thanks for the suggestion.

Your suggestion is really good

Thanks.

Thank you for the examples. This really helped with my project.

You’re welcome.

Now I feel like AI market is dominated by big tech firms (ChatGPT, Bard etc.) which have access to large resources. So it seems like there’s no room for small players to do anything useful or profitable in AI market.

Hi Abdullah..What would you consider “useful” or “profitable”? The same could be said for designing circuits and other electrical devices, however there are many opportunities for software and hardware engineers and technicians.