In their book Applied Predictive Modeling, Kuhn and Johnson comment early on the trade-off of model prediction accuracy versus model interpretation.

For a given problem, it is critical to have a clear idea of which is the priority, accuracy or explainability, so that this trade-off can be made explicitly rather than implicitly.

In this post you will discover and consider this important trade-off.

Accuracy and Explainability

Model performance is estimated in terms of a model's accuracy in predicting the occurrence of an event on unseen data. A more accurate model is seen as a more valuable model.
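As a minimal sketch of what "accuracy on unseen data" means in practice, the Python below holds out part of a toy dataset, fits a simple threshold rule on the remaining rows, and scores the rule only on the held-out rows. The data, the one-feature threshold classifier and all function names are hypothetical, chosen only to illustrate the idea.

```python
import random

def train_test_split(rows, test_fraction=0.3, seed=1):
    """Shuffle (x, label) pairs and split them into train and test lists."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]

def fit_threshold(train):
    """Pick the threshold t for the rule 'x >= t -> 1' with the best training accuracy."""
    best_t, best_acc = None, -1.0
    for t in sorted({x for x, _ in train}):
        acc = sum((x >= t) == bool(y) for x, y in train) / len(train)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

def accuracy(threshold, rows):
    """Fraction of rows whose label matches the rule's prediction."""
    return sum((x >= threshold) == bool(y) for x, y in rows) / len(rows)

# Hypothetical toy data: the label is 1 when x is at least 5.
data = [(x, int(x >= 5)) for x in range(10)] * 3
train, test = train_test_split(data)
t = fit_threshold(train)
# Only the held-out score estimates performance on unseen data.
print("threshold:", t, "held-out accuracy:", accuracy(t, test))
```

The training accuracy of such a rule is optimistic by construction; the held-out figure is the one that corresponds to the "unseen data" estimate described above.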

Model interpretability provides insight into the relationship between the inputs and the output. An interpretable model can answer questions about why the independent features predict the dependent attribute.

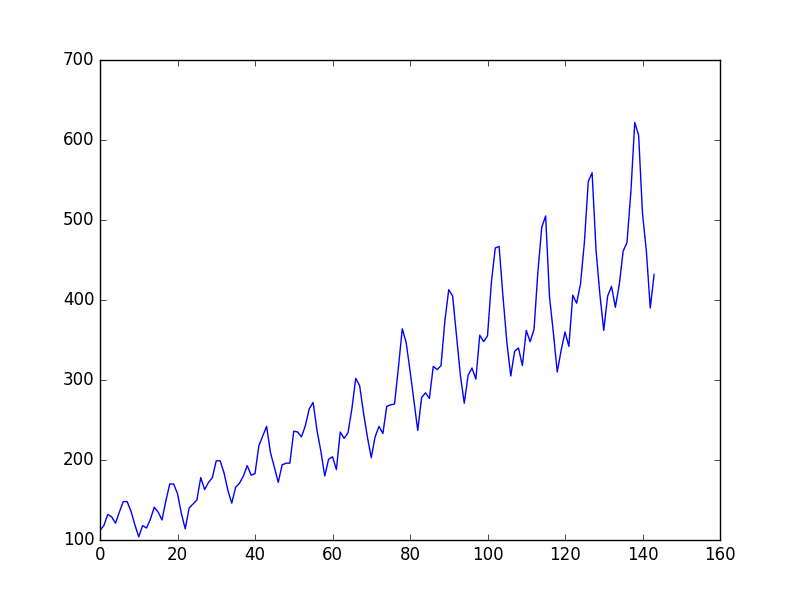

The issue arises because, as model accuracy increases, model complexity usually increases too, at the cost of interpretability.

Model Complexity

A model with higher accuracy can mean more opportunities, benefits, time or money for a company, and so prediction accuracy is what gets optimized.

The optimization of accuracy leads to further increases in the complexity of models in the form of additional model parameters (and resources required to tune those parameters).

“Unfortunately, the predictive models that are most powerful are usually the least interpretable.”

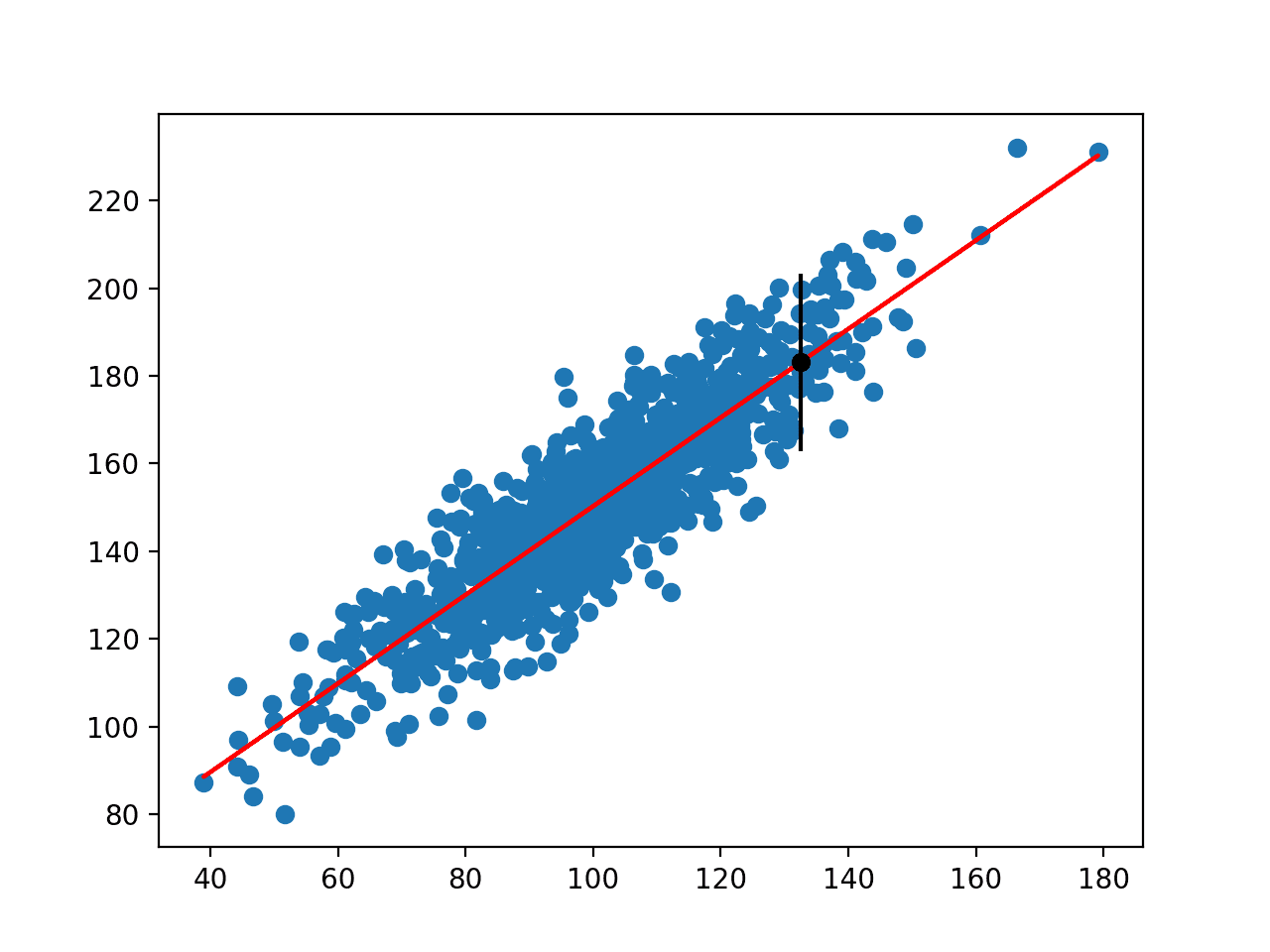

A model with fewer parameters is easier to interpret. This is intuitive. A linear regression model has one coefficient per input feature plus an intercept term, so you can look at each term and understand how it contributes to the output. Moving to logistic regression gives more power in terms of the underlying relationships that can be modeled, at the expense of a transform function (the logistic function) applied to the output, which must now be understood along with the coefficients.
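To make the contrast concrete, here is a minimal Python sketch using only the standard library and toy data. It fits a one-feature linear regression by ordinary least squares, where the slope reads directly as "change in output per unit of input", and then passes a linear score through the logistic function, where a coefficient instead means a change in log-odds. The function names and the logistic coefficients `w0` and `w1` are hypothetical; in practice both models would typically be fit with a library.

```python
import math

def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def logistic(z):
    """Logistic (sigmoid) function mapping a linear score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

# Linear regression: each coefficient is read directly off the output scale.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data lying exactly on y = 1 + 2x
b0, b1 = fit_simple_linear_regression(xs, ys)
print(f"intercept={b0:.2f}, slope={b1:.2f}")  # one unit of x adds b1 to y

# Logistic regression: the same kind of linear score goes through logistic(),
# so the effect of x on P(y=1) depends on where you are on the curve.
w0, w1 = -2.0, 1.0  # hypothetical fitted coefficients
for x in (0.0, 2.0, 4.0):
    print(f"x={x}: P(y=1)={logistic(w0 + w1 * x):.3f}")
# exp(w1) is the factor by which the odds multiply per unit increase in x.
print(f"odds ratio per unit of x: {math.exp(w1):.3f}")
```

The extra step of reasoning about log-odds and odds ratios is exactly the "function transform" that must be understood alongside the coefficients.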

A decision tree of modest size may be understandable, whereas a bagged ensemble of decision trees requires a different perspective to interpret why an event is predicted to occur. Pushing further, an optimized blend of multiple models into a single prediction may be beyond meaningful or timely interpretation.
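A rough way to see this difference is the plain-Python sketch below, built on a hypothetical one-feature toy dataset. A depth-1 decision tree (a "stump") reduces to a single readable rule, while a bagged ensemble of stumps, each fit to a bootstrap resample of the data, can only explain a prediction as a majority vote over many slightly different rules. Stumps stand in for full decision trees to keep the example short, and all function names are illustrative assumptions rather than any library's API.

```python
import random
from collections import Counter

def fit_stump(rows):
    """Depth-1 tree: threshold t for the rule 'x >= t -> 1' that best fits rows."""
    best_t, best_acc = None, -1.0
    for t in sorted({x for x, _ in rows}):
        acc = sum((x >= t) == bool(y) for x, y in rows) / len(rows)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

def fit_bagged_stumps(rows, n_models=25, seed=0):
    """Bagging: fit one stump per bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(rows) for _ in rows]) for _ in range(n_models)]

def predict_bagged(stumps, x):
    """Majority vote over the individual stumps' predictions."""
    votes = Counter(int(x >= t) for t in stumps)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: label is 1 when x >= 5, plus one mislabeled point.
data = [(x, int(x >= 5)) for x in range(10)] + [(6, 0)]

tree = fit_stump(data)
print(f"single tree: predict 1 if x >= {tree}")  # one rule you can read directly

ensemble = fit_bagged_stumps(data)
# The ensemble's "explanation" is a distribution of thresholds plus a vote.
print("bagged thresholds:", sorted(Counter(ensemble).items()))
print("bagged prediction for x=6:", predict_bagged(ensemble, 6))
```

Even in this tiny case the ensemble's answer has to be explained in terms of how many members voted which way, rather than by one rule.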

Accuracy Trumps Explainability

In their book, Kuhn and Johnson are concerned with model accuracy at the expense of interpretation.

They comment:

“As long as complex models are properly validated, it may be improper to use a model that is built for interpretation rather than predictive performance.”

Interpretation is secondary to model accuracy, and they cite examples such as sorting email into spam and non-spam and the valuation of a house as problems where this is the case. Medical examples are touched on twice, and in both cases they are used to defend the absolute need and desirability for accuracy over explainability, as long as the models are appropriately validated.

I’m sure that “but I validated my model” would be no defense at an inquest when a model makes predictions that result in loss of life. Nevertheless, there is no doubt that this is an important issue that requires careful consideration.

Summary

Whenever you are modeling a problem, you are making a decision on the trade-off between model accuracy and model interpretation.

You can use knowledge of this trade-off when selecting the methods you use to model your problem, and to be clear about your objectives when presenting results.
