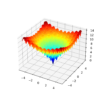

Convergence refers to the limit of a process and can be a useful analytical tool when evaluating the expected performance of an optimization algorithm. It can also be a useful empirical tool when exploring the learning dynamics of an optimization algorithm, and machine learning algorithms trained using an optimization algorithm, such as deep learning neural […]