For as long as I have been participating in data mining and machine learning competitions, I have thought about automating my participation. Maybe it shows that I want to solve the problem of building the tool more than I want to solve the problem at hand.

When working on a dataset, I typically spend a disproportionate amount of time thinking about algorithm tuning and running tuning experiments. I am prone to post-competition analysis in which I regret my allocation of time, almost always concluding that more of it should have gone into feature engineering, exploring different models and hypotheses, and blending.

Automatic Machine Learner from Hell

More than a few times I have sketched the “perfect” system where model running, tuning and result blending are automated and human effort is focused on defining different perspectives on the problem.

I have even built imperfect variations of this system a few times in a few different languages for competitions. The first version was in Java more than 10 years ago. The most recent version was in R with make files and bash scripts about 6 months ago.

Machine Learning Pipeline

Photo by Seattle Municipal Archives, some rights reserved.

System Design

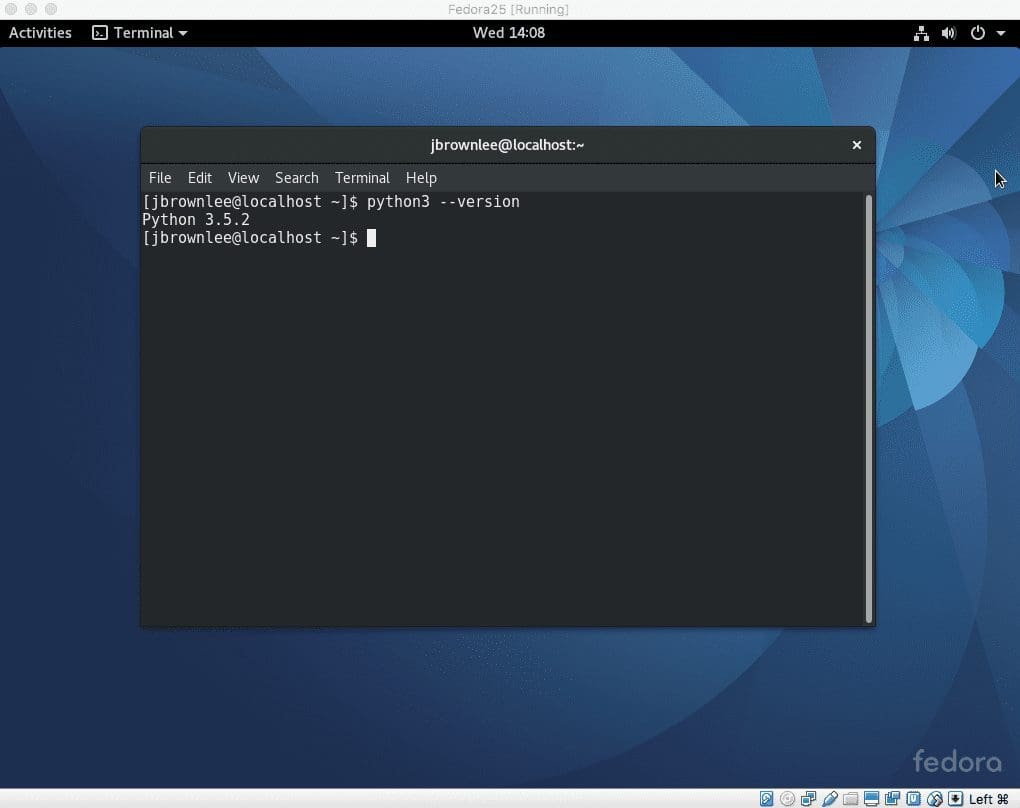

Every time, the design ends up looking like a pipeline, and each version improves on the last by overcoming a fatal flaw in the previous iteration. The gist of the pipeline design looks like the following:

- Raw Data: The raw data source (database access) or files

- Data Views: Views on the problem defined as queries or flat files

- Data Partitions: Splitting of data views into cross-validation folds, test/train folds and any other folds needed to evaluate models and make predictions for the competition.

- Analysis Reports: Summarization of a data view using descriptive statistics and plots.

- Models: Machine learning algorithm and configuration that together with input data is used to construct a model just-in-time.

- Model Outputs: The raw results for models on all data partitions for all data views.

- Blends: Ensemble algorithms and configurations designed to create blends of model outputs for all data partitions on all data views.

- Scoreboard: Local scoreboard that describes all completed runs and their scores that can be sorted and summarized.

- Predictions: Submittable predictions for uploading to competition leaderboards, etc.
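The Model Outputs, Blends and Scoreboard stages can be illustrated with a minimal Python sketch. This is an assumption-laden illustration rather than code from any of the versions described here: the `Run` class, the choice of RMSE as the score and the unweighted-mean blend are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Run:
    """One completed run: a model applied to a data view (hypothetical structure)."""
    view: str
    model: str
    predictions: tuple  # predictions on a held-out data partition


def rmse(predictions, targets):
    """Root mean squared error; lower is better."""
    return mean((p - t) ** 2 for p, t in zip(predictions, targets)) ** 0.5


def scoreboard(runs, targets):
    """Return (score, run) pairs sorted best-first."""
    scored = ((rmse(r.predictions, targets), r) for r in runs)
    return sorted(scored, key=lambda pair: pair[0])


def blend(runs):
    """Simplest possible blend: the unweighted mean of the runs' predictions."""
    columns = zip(*(r.predictions for r in runs))
    return tuple(mean(col) for col in columns)
```

A blend is itself just another set of predictions, so it can be scored and placed on the same scoreboard as the individual models.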

The system is focused on classification and regression problems, because most problems I look at can be reduced to a problem in this form.

With this model, operators spend most of their time defining new data views to plug into the “Data Views” section. These are human-defined or automatically generated ways of describing the problem to algorithms. Automatic views can include feature subsets chosen by feature selection algorithms and transforms of the attributes such as standardization, normalization and squaring.
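As a rough sketch of what automatically generated views could look like, the Python below derives one new view per transform from a base view. The dictionary-of-columns representation and the function names are assumptions made for illustration only.

```python
from statistics import mean, pstdev


def standardize(column):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(column), pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(x - mu) / sigma for x in column]


def normalize(column):
    """Rescale to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(x - lo) / (hi - lo) for x in column]


def square(column):
    """Square every value."""
    return [x * x for x in column]


# Hypothetical registry: adding a transform here adds a view automatically.
TRANSFORMS = {"standardize": standardize, "normalize": normalize, "square": square}


def automatic_views(base_view):
    """Derive one view per transform from a base view (column name -> values)."""
    return {
        name: {col: fn(values) for col, values in base_view.items()}
        for name, fn in TRANSFORMS.items()
    }
```

Each derived view can then be fed to every model configuration in exactly the same way as a hand-built view.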

The system is data- or configuration-driven. For example, a “run” is an algorithm configuration combined with a data view. If the results don’t exist for that combination, they are created on the next run. The same goes for the reports, blends, predictions, etc. This provides natural check-pointing, and at any time the “state” of the system is defined by the work product that has been created from the configurations that have been defined. I recently modeled this with files on disk, but it could just as easily be modeled in a database.
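The check-pointing idea can be sketched in a few lines of Python, assuming one JSON result file per run on disk. The file naming scheme and the `compute` callback are hypothetical; the real system could lay things out quite differently.

```python
import json
from pathlib import Path


def run_id(view_name, config_name):
    """A run is identified by the (data view, algorithm configuration) pair."""
    return f"{view_name}__{config_name}"


def ensure_run(results_dir, view_name, config_name, compute):
    """Return the stored result for a run, computing it only if it is missing."""
    path = Path(results_dir) / f"{run_id(view_name, config_name)}.json"
    if path.exists():
        return json.loads(path.read_text())  # checkpoint hit: no work needed
    result = compute(view_name, config_name)
    path.write_text(json.dumps(result))
    return result


def run_all(results_dir, views, configs, compute):
    """Bring the results directory up to date with the declared views and configs."""
    return {
        (view, config): ensure_run(results_dir, view, config, compute)
        for view in views
        for config in configs
    }
```

Calling `run_all` repeatedly is cheap: only combinations without a result file trigger any computation, so a crashed or interrupted session simply resumes where it stopped.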

System Benefits

As stated, the pipeline design is intended to let the computer(s) automate the procedures, allowing the operator to focus on coming up with new perspectives on the problem at hand. Some benefits of the approach include:

- Algorithms fall over all the time and it is important to be able to fix and tweak and re-run.

- A limiting factor in a competition is typically fresh perspectives on the problem, not CPU time or algorithm tuning. A continuous integration system can re-run the main script all day looking for new results to integrate, new configs to use and new blends to promote.

- The design allows ideas to be added with wild abandon in the form of new data views and new model configuration files.

Additional reports can be plugged into the “Analysis Reports” section, the results of which are designed to provoke more ideas for the “Data Views” section.

Additional models are added to the “Models” section in the form of algorithms and algorithm configurations. Very quickly you can build up a corpus of standard algorithms and standard configuration profiles for algorithms. Grid searching and random searching of algorithm parameters can add new configurations en masse or as the results of the searching process.
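Generating configurations en masse might look like the following Python sketch. The parameter space is a plain dictionary of candidate values, which is an assumption for illustration, and the parameter names in the usage example are made up.

```python
import itertools
import random


def grid_configs(space):
    """Yield every combination of the listed parameter values (grid search)."""
    names = sorted(space)
    for values in itertools.product(*(space[name] for name in names)):
        yield dict(zip(names, values))


def random_configs(space, n, seed=0):
    """Return n configurations sampled uniformly from the listed values (random search)."""
    rng = random.Random(seed)  # seeded so the same configurations can be regenerated
    names = sorted(space)
    return [{name: rng.choice(space[name]) for name in names} for _ in range(n)]


# Hypothetical parameter space for a k-nearest-neighbours style algorithm.
space = {"k": [1, 3, 5], "weights": ["uniform", "distance"]}
all_configs = list(grid_configs(space))         # 3 * 2 = 6 configurations
sampled_configs = random_configs(space, n=4)    # 4 randomly chosen configurations
```

Each generated dictionary could be written out as a configuration file, at which point it becomes just another model for the pipeline to run against every data view.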

It’s pluggable, and you can see how you can keep adding to the framework so that the lessons learned aggregate across projects. My vision is to have the framework as public open source and quickly accrue algorithms and reports at all levels, exploiting multiple machine learning libraries and distributed computing infrastructure where required.

Exercise in Futility

The system as stated is a seductive trap. It abdicates the responsibility of designing a solution to a data problem, of intelligently selecting attributes and algorithms based on domain knowledge and expertise. It treats the problem as a search problem and unleashes the CPU hounds.

I think building a production-grade version of this system is an exercise in futility. I think this because it is designed to solve a contrived problem: machine learning competitions.

I mostly believe this, like 99.99%. I keep probing the idea though just in case I’m wrong. This post is an example of me pushing the boundaries on this belief.

If such a system as I describe existed, would I use it or would I still want to build my own version of the system? What would you do?

Machine Learning as a Service (MLaaS)

I think flavours of such a system do exist, or I can fool myself into thinking they exist for the sake of argument. I think the business-friendly version of this vision already exists: it is commoditized machine learning, or machine learning as a service (MLaaS).

The system I described above is focused on one problem: given a dataset, what are the best predictions that can be generated? The Google Prediction API could be an example of this type of system (if I close one eye and squint with the other). I don’t care how I get the best results (or good enough results), just give them to me, damn it.

I differentiate this from a so-called “business-friendly” MLaaS. I think BigML is an example of this type of service. They are what I would imagine such a service would look like if it were dressed up nicely to be sold into the enterprise. A key point of differentiation is the ability for model introspection at the expense of prediction accuracy.

You don’t just want “the best predictions ever, damn it”, you want to know how. The numbers need a narrative around them. The business needs this information so they can internalize and transfer it to other problems and to the same problem in the future after the concepts have drifted. That knowledge is the secret sauce, not the model that created the knowledge.

I think this also explains why the results of machine learning competitions are of limited value to the businesses involved. At best the results of a competition can inject new ideas for methods and processes into the business, but the actual predictions and even the actual models are throw-away.

Black-box Machine Learning

There may be a place for black-box machine learning, and that is problems where the models don’t matter. An example that comes to mind is gambling (like horse racing or the stock market). The models don’t matter because they become stale very quickly. As such, only the next batch of predictions and the process of building models matter.

Black Box Machine Learning

Photo by Abode of Chaos, some rights reserved

I think of black-box machine learning like I do automatic programming. Automatic programming can give you a program that solves a well-defined problem, but you have no idea how ugly that program is under the covers, and you probably don’t want to know. The very idea of this is repulsive to programmers, for the very same reason that a magic black-box machine learning system is repulsive to a machine learning practitioner (data scientist?). The details, the how, really matter for most problems.

I have never disagreed more with a blog post.

Keen to hear your thoughts.

Great blog. It sheds light on lesser-known territory in the ML space. I see this as a trend, as some data scientists don’t have a sound programming background.

I’d always said that black box models have limited usefulness to me as an analyst because they are impossible to interpret and don’t expose their knowledge to me. This is before I started exploring the world of machine learning myself.

I am now starting to believe that a black box model is ok as long as you can open it up. And that can be done with sensitivity analysis/simulations. Ultimately the business I work for does not care about the predictions themselves; they want to know what levers they can play with and be able to formulate hypotheses and tests around pulling those levers.

This is how I am getting into machine learning as a beginner practitioner, with the firm idea that the model is simply a platform for me to run simulations on, e.g. if we increase x by 10% or if customers do y 5% more often, then our desired outcome z will likely increase by between 3% and 10%.

I have been surprised to see how accuracy-driven the machine learning community is vs the above approach. What are your thoughts on this, Jason?

I may be late to this party but better late than never! I totally agree with Jason!! If some of the benefits Jason mentioned matter less, then by all means go ahead and adopt the silver-valley model; hire-and-fire!

Why do we need more than just black box models/predictions?

We want to:

1. Build theory

2. Improve practice

3. Be prescriptive

4. Build trust

5. etc…

A model is a representation with a specific purpose. Its value is far more than just “predictions”. Mere predictions in themselves may not always be the ultimate need of a business. Understanding “causality”, for example, could be more informative than the actual predictions. But in order to understand causality, one needs more than just black box knowledge of an algorithm, for example its underlying assumptions or its search behavior. In short, how an algorithm arrived at a given decision is important! This calls for decoding an algorithm, understanding the underlying math etc…

Thanks Ed.

I see the usefulness of black-box machine learning systems in a similar way to webpage builders such as Wix, WordPress.com or Squarespace: If your needs can be satisfied within a strict set of boundaries, then you’re good to go. But if you need a bit more room for experimentation or insight, you’ll have to implement a custom solution.

Interesting way to think about it.

Sir, please tell me how deep learning performance can be further improved after grid search optimization.

Yes, see this:

https://machinelearningmastery.com/start-here/#better