In a recent presentation at MLConf, Xavier Amatriain described 10 lessons that he has learned about building machine learning systems as the Research/Engineering Manager at Netflix.

In this post you will discover these 10 lessons, summarized from his talk and slides.

Lessons Learned from Building Machine Learning Systems Taken from Xavier’s presentation

10 Lessons Learned

The 10 lessons that Xavier presents can be summarized as follows:

- More data vs./and Better Models

- You might not need all your Big Data

- The fact that a more complex model does not improve things does not mean you don’t need one

- Be thoughtful about your training data

- Learn to deal with (The curse of) Presentation Bias

- The UI is the algorithm’s only communication channel with that which matters most: the users

- Data and Models are great. You know what’s even better? The right evaluation approach

- Distributing algorithms is important, but knowing at what level to do it is even more important

- It pays off to be smart about choosing your hyperparameters

- There are things you can do offline and there are things you can’t… and there is nearline for everything in between

We will look at each in turn through the rest of the post.

1. More data vs better models

Xavier questions the oft-quoted “more data beats better models”. He points to Anand Rajaraman’s post “More data usually beats better algorithms”, which can be summarized by this quote:

To sum up, if you have limited resources, add more data rather than fine-tuning the weights on your fancy machine-learning algorithm.

He also points to Norvig’s 2009 talk to Facebook Engineering on More Data vs Better Algorithms.

He then points to the paper “Recommending new movies: even a few ratings are more valuable than metadata”, which makes the seemingly obvious point that less data that is highly predictive is better than more data that is not.

It is not or, it’s and. You need more data and better algorithms.

2. You might not need all your big data

In this lesson he points out that just because you have big data does not mean that you should use it.

He comments that samples of big data can give good results, and smarter sampling (such as stratified or balanced sampling) can lead to better results.
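As a rough illustration of the stratified sampling mentioned above, here is a minimal sketch using only the Python standard library. The `stratified_sample` helper, the `segment` field, and the user data are all hypothetical and are not taken from the talk.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(rows, key, fraction, seed=0):
    """Sample roughly `fraction` of the rows from each stratum defined by `key`."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for row in rows:
        strata[key(row)].append(row)
    sample = []
    for members in strata.values():
        # Keep at least one row per stratum so rare groups are not lost.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical data: 10 "heavy" users and 990 "casual" users.
users = [{"id": i, "segment": "heavy" if i < 10 else "casual"} for i in range(1000)]
subset = stratified_sample(users, key=lambda u: u["segment"], fraction=0.1)
counts = Counter(u["segment"] for u in subset)
print(counts)
```

A plain 10% random sample could easily miss the small "heavy" segment altogether, whereas sampling within each segment keeps both groups represented in proportion.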

3. Complex data may need complex models

This next lesson is a subtle but important reminder about feature engineering.

He comments that adding more complex features to a linear model may not show an improvement. Conversely, using a complex model with simple features may also not lead to an improvement.

His point is that sometimes a complex model is required to model complex features.

I would also point out that complex features may be able to be decomposed into simple features for use by simple linear models.

4. Be thoughtful about your training data

Xavier comments on the difficulty that can exist in denormalizing user behavior data. He points to the problem of selecting positive and negative cases where decisions of where to draw the line have to be made thoughtfully up front before modeling the problem. It’s a data representation problem that has an enormous impact on the results that you can achieve.

I would suggest you generate ideas for many such possible lines and test them all, or the most promising.

He also cautions that if you see great results in an offline experiment, you should check for time traveling, where a prediction made use of out-of-sample information, such as a summary that includes a user’s future behavior.
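One simple guard against time traveling is to split the data by time and to compute every feature only from events before the cutoff. The sketch below assumes a hypothetical event log with `user` and `day` fields; the helper names are illustrative only.

```python
from datetime import date

def temporal_split(events, cutoff):
    """Split events so that all training data strictly precedes the test period."""
    train = [e for e in events if e["day"] < cutoff]
    test = [e for e in events if e["day"] >= cutoff]
    return train, test

def plays_before(events, user, cutoff):
    """Feature: number of plays by `user` strictly before `cutoff`."""
    return sum(1 for e in events if e["user"] == user and e["day"] < cutoff)

# Hypothetical event log.
events = [
    {"user": "a", "day": date(2014, 1, 5)},
    {"user": "a", "day": date(2014, 2, 1)},
    {"user": "a", "day": date(2014, 3, 9)},
    {"user": "b", "day": date(2014, 3, 2)},
]
cutoff = date(2014, 3, 1)
train, test = temporal_split(events, cutoff)

# A summary over the whole log silently includes plays from the test period.
leaky_feature = sum(1 for e in events if e["user"] == "a")
# Restricting the summary to the past keeps the feature honest.
safe_feature = plays_before(events, "a", cutoff)
print(len(train), len(test), leaky_feature, safe_feature)
```

The leaky count includes the March play that the model is supposed to predict, which is exactly the kind of summary that can make offline results look better than they are.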

5. Learn to deal with presentation bias

Lesson five is about the problem that the choices presented to the user do not all have the same probability of being selected.

The user interface and human user behavior influence the probability that a presented item will be selected. Those items that were predicted but not presented may not have failed and should not be modeled as such.

This is a complex lesson about the need to model the click behavior in order to tease out the actual performance of model predictions.
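As a very small sketch of the point about items that were predicted but not presented, the function below labels only the items the user actually saw and leaves the rest as unknown rather than as negatives. This is an illustrative assumption about how one might prepare training labels, not the click model described in the talk, and all names are hypothetical.

```python
def label_impressions(ranked_items, shown_items, clicked_items):
    """Map each ranked item to 1 (clicked), 0 (shown, not clicked) or None (never shown)."""
    shown = set(shown_items)
    clicked = set(clicked_items)
    labels = {}
    for item in ranked_items:
        if item not in shown:
            labels[item] = None  # outcome unknown: the user never saw it
        elif item in clicked:
            labels[item] = 1
        else:
            labels[item] = 0
    return labels

# Hypothetical session: five items were ranked, only three fit on the screen.
ranked = ["m1", "m2", "m3", "m4", "m5"]
labels = label_impressions(ranked, shown_items=["m1", "m2", "m3"], clicked_items=["m2"])
training_examples = {item: y for item, y in labels.items() if y is not None}
print(training_examples)
```

Even among the shown items, position on the screen still affects the chance of a click, which is why the lesson calls for modeling click behavior rather than relying on filtering alone.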

6. UI <=> algorithms via users

Related to lesson 5, this is the observation that the modeling algorithms and the user interface are tightly coupled.

A change to the user interface will likely require a change to the algorithms.

7. Use right evaluation approach

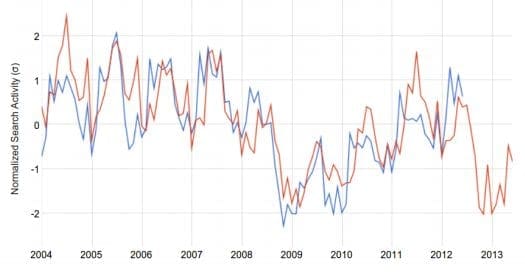

Xavier gives a great summary of the online-offline test process that is used.

Offline Online Training Process

Taken from Xavier’s presentation

The image shows the back-testing of models offline to test a hypothesis and the verification of those results with A/B testing online. This is a valuable slide.

He points out that a model can be optimized for short-term goals like clicks or watches, but that user retention is the one true goal, called the overall evaluation criterion.

He advises using long-term metrics whenever possible, and short-term metrics only when they align with the long-term goal.

Related to their offline-online approach, Xavier comments on the open problem of relating offline metrics to online A/B tests.

8. Choose the right level

He points to three levels at which a given experiment can be divided in order to test a hypothesis, and notes that each level has a different set of requirements.

- population subset

- combination of hyper-parameters

- subset of the training data

Choose carefully.

9. It pays off to be smart about choosing your hyperparameters

Xavier cautions that it is important to choose the right metric when tuning models. He also mentions that including something like model complexity is an important concern.

In addition to grid or random parameter searches, Xavier reminds us to look at probabilistic methods that can decrease search time.
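For reference, the two baseline strategies mentioned above can be sketched in a few lines of standard-library Python. The `validation_loss` function is a hypothetical stand-in for training a model and scoring it on held-out data, and the parameter names and ranges are assumptions. The probabilistic methods Xavier refers to are not shown here.

```python
import itertools
import random

def validation_loss(learning_rate, regularization):
    """Hypothetical stand-in for training a model and scoring it on held-out data."""
    return (learning_rate - 0.1) ** 2 + (regularization - 0.01) ** 2

def grid_search(grid):
    """Evaluate every combination of the listed values and return the best one."""
    candidates = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]
    return min(candidates, key=lambda p: validation_loss(**p))

def random_search(bounds, trials, seed=0):
    """Evaluate `trials` points drawn uniformly from the bounds and return the best one."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.uniform(low, high) for name, (low, high) in bounds.items()}
        for _ in range(trials)
    ]
    return min(candidates, key=lambda p: validation_loss(**p))

grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "regularization": [0.0, 0.01, 0.1]}
bounds = {"learning_rate": (0.001, 1.0), "regularization": (0.0, 0.1)}

best_grid = grid_search(grid)                   # 12 evaluations
best_random = random_search(bounds, trials=12)  # same budget, different points
print(best_grid, best_random)
```

Both strategies spend the same budget of 12 evaluations here. A probabilistic method would instead use the results of earlier evaluations to decide where to look next, which is how it can reduce search time.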

10. Offline, online and nearline

The final lesson advises spending time thinking about when each element of a model needs to be computed, and computing those things as early as possible. He points out that in addition to offline and online computing, you can do near-time computing (he calls it nearline).

Summary

It’s a great set of lessons that you can apply to your own modeling.

You can review Xavier’s slide deck here: “10 Lessons Learned from Building Machine Learning Systems”

Xavier’s presentation was recorded and you can watch the whole thing here: “Xavier Amatriain, Director of Algorithms Engineering, Netflix @ MLconf SF”
