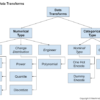

Data preparation is an important part of a predictive modeling project. Applied correctly, it transforms raw data into a representation that lets learning algorithms get the most out of the data and make skillful predictions. The challenge is choosing a transform, or sequence of transforms, that results in a useful representation […]