In the literature on language models, you will often encounter the terms “zero-shot prompting” and “few-shot prompting.” To make sense of them, it helps to understand how a large language model generates its output. In this post, you will learn:

- What are zero-shot and few-shot prompting?

- How to experiment with them in GPT4All

What Are Zero-Shot Prompting and Few-Shot Prompting

Picture generated by the author using Stable Diffusion. Some rights reserved.


Let’s get started.

Overview

This post is divided into three parts; they are:

- How Do Large Language Models Generate Output?

- Zero-Shot Prompting

- Few-Shot Prompting

How Do Large Language Models Generate Output?

Large language models are trained on massive amounts of text data to predict the next word given the input. It has been found that, when the model is large enough, it learns not only the grammar of human languages but also the meaning of words, common knowledge, and primitive logic.

Therefore, if you give the model the sentence fragment “My neighbor’s dog is” as input (also known as the prompt), it may predict “smart” or “small” as the next word but is unlikely to predict “sequential,” although all of these are adjectives. Similarly, if you provide a complete sentence, you can expect the model’s output to be a sentence that follows naturally from it. Repeatedly appending the model’s output to the original input and invoking the model again lets the model generate a lengthy response.
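The append-and-predict-again loop described above can be sketched with a toy stand-in for the model. This is only an illustration: the tiny corpus, the word-pair counting, and the names `predict_next` and `generate` are assumptions made for this sketch, and a real large language model is far more sophisticated than a table of word pairs.

```python
from collections import Counter, defaultdict

# Tiny toy corpus (an assumption for illustration; real models see vastly more text).
corpus = (
    "my neighbor's dog is smart and friendly . "
    "my neighbor's dog is smart and loud . "
    "my neighbor's dog is small . "
    "my dog is smart and friendly ."
).split()

# Count which word follows each word; this table stands in for the trained model.
next_word_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_word_counts[current][following] += 1


def predict_next(words):
    """Return the most frequent follower of the last word, or None if unseen."""
    followers = next_word_counts.get(words[-1])
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(prompt, max_new_words=5):
    """Repeatedly append the predicted word to the input and predict again."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        word = predict_next(words)
        if word is None or word == ".":
            break  # stop at an unknown continuation or the end of a sentence
        words.append(word)
    return " ".join(words)


print(generate("My neighbor's dog is"))
```

Because “sequential” never follows “is” in the toy corpus, it is never predicted, which mirrors the point that likely continuations come from patterns in the training text.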

Zero-Shot Prompting

In natural language processing, zero-shot prompting means giving the model a prompt that was not part of its training data and still getting the result you want, without supplying any examples of the task. This promising technique makes large language models useful for many tasks.

To understand why this is useful, consider sentiment analysis. You can collect paragraphs expressing different opinions and label each with a sentiment class. Then you can train a machine learning model (e.g., an RNN on text data) to take a paragraph as input and produce a classification as output. But such a model is not adaptive. If you add a new class, or ask it to summarize the paragraph instead of classifying it, the model must be modified and retrained.

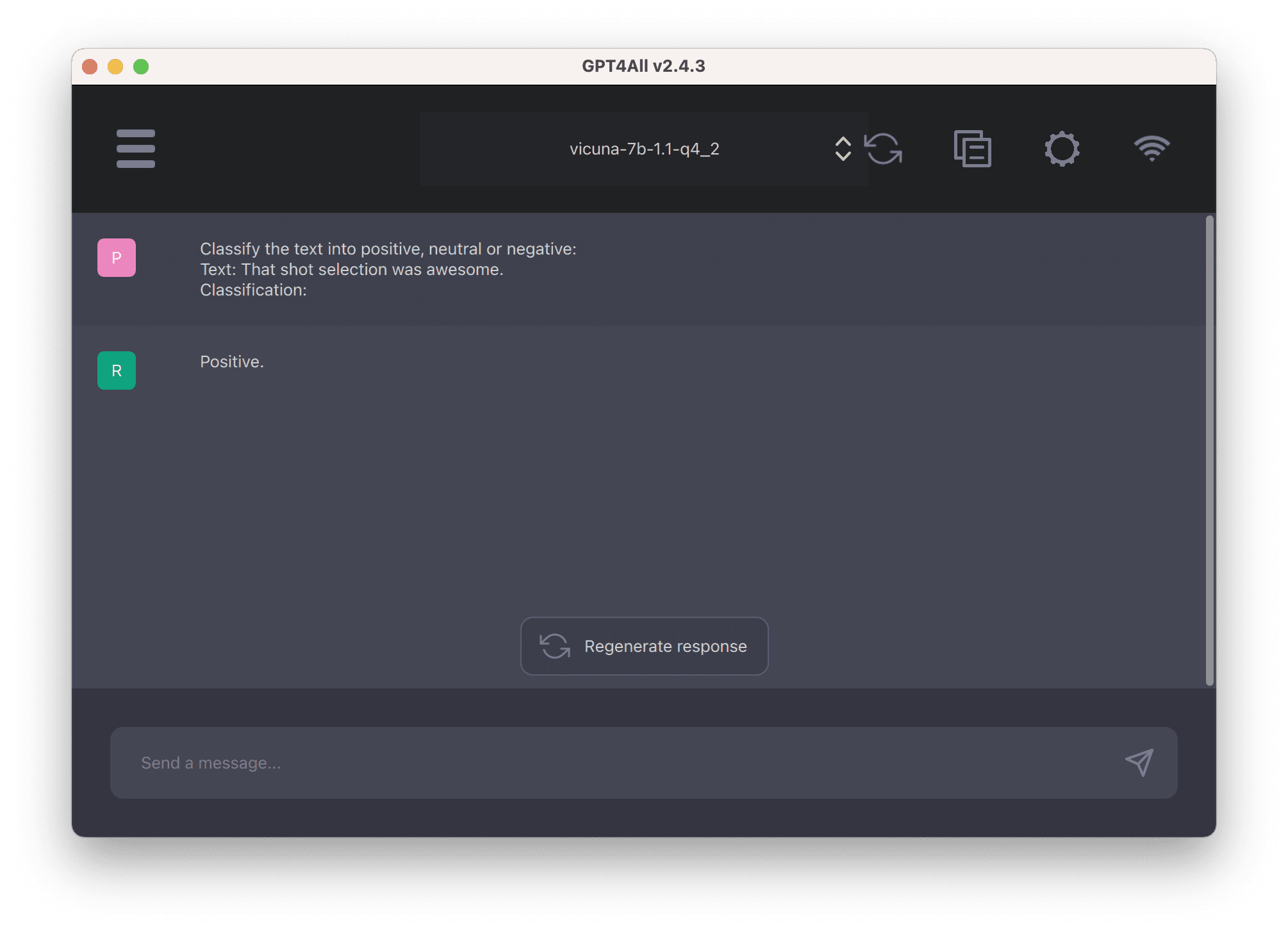

A large language model, however, need not be retrained. You can ask the model to classify a paragraph or to summarize it, provided you know how to ask. The model probably cannot classify a paragraph into categories “A” or “B,” because the meaning of “A” and “B” is unclear. It can, however, classify into “positive sentiment” or “negative sentiment,” because it knows what “positive” and “negative” mean. This works because, during training, the model learned the meaning of these words and acquired the ability to follow simple instructions. The following example was produced using GPT4All with the Vicuna-7B model:

The prompt provided was:

    Classify the text into positive, neutral or negative:
    Text: That shot selection was awesome.
    Classification:

The response was a single word, “positive.” This is correct and concise. The model evidently understands that “awesome” expresses a positive sentiment, but it knows to identify the sentiment only because of the instruction at the beginning, “Classify the text into positive, neutral or negative.”

In this example, you found that the model responded because it understood your instruction.
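If you want to assemble such a prompt in code rather than typing it by hand, a minimal sketch might look like the following. The function name `build_zero_shot_prompt` is hypothetical. The commented-out lines at the end assume the `gpt4all` Python package and a placeholder model file name; they are not part of the original experiment, which used the GPT4All application.

```python
def build_zero_shot_prompt(text, labels=("positive", "neutral", "negative")):
    """Build an instruction-only prompt: no solved examples are included."""
    # Join the labels as "positive, neutral or negative".
    label_list = ", ".join(labels[:-1]) + " or " + labels[-1]
    return (
        f"Classify the text into {label_list}:\n"
        f"Text: {text}\n"
        "Classification:"
    )


prompt = build_zero_shot_prompt("That shot selection was awesome.")
print(prompt)

# Assumed usage with the gpt4all Python package (not run here; the model file
# name is a placeholder you would replace with a model you have installed):
# from gpt4all import GPT4All
# model = GPT4All("<model-file>.gguf")
# print(model.generate(prompt, max_tokens=5))
```

Keeping the instruction in one place makes it easy to change the label set, which is exactly the flexibility a retrained classifier lacks.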

Few-Shot Prompting

If you cannot describe what you want but still want a language model to give you answers, you can provide some examples. It is easier to demonstrate this with the following example:

We are still using the Vicuna-7B model in GPT4All, but this time the prompt is:

    Text: Today the weather is fantastic
    Classification: Pos
    Text: The furniture is small.
    Classification: Neu
    Text: I don't like your attitude
    Classification: Neg
    Text: That shot selection was awful
    Classification:

Here you can see that no instruction on what to do is provided, but with some examples, the model can figure out how to respond. Also, note that the model responds with “Neg” rather than “Negative” since it is what is provided in the examples.

Note: Due to the model’s random nature, you may be unable to reproduce the exact result. You may also find a different output produced each time you run the model.
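One common source of this randomness is that the next word is sampled from a probability distribution rather than always being the single most likely word. The sketch below illustrates the idea with made-up scores. The numbers, the `temperature` parameter, and the function name `sample_next` are assumptions for illustration, not values or settings taken from Vicuna-7B or GPT4All.

```python
import math
import random

# Hypothetical scores (logits) a model might assign to candidate next words.
logits = {"Neg": 2.0, "Negative": 1.0, "Neu": 0.2}


def sample_next(logits, temperature=1.0, rng=random):
    """Sample one word from the softmax of logits / temperature."""
    words = list(logits)
    scaled = [logits[w] / temperature for w in words]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random()  # unseeded, so results differ between runs

# At temperature 1.0, less likely words are picked some of the time.
print([sample_next(logits, temperature=1.0, rng=rng) for _ in range(10)])

# A very low temperature concentrates almost all probability on the top word.
print([sample_next(logits, temperature=0.01, rng=rng) for _ in range(10)])
```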

Guiding the model to respond with examples is called few-shot prompting.
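A few-shot prompt like the one above is just labeled examples followed by an unlabeled query, so it is easy to build programmatically. The following is a minimal sketch; the function name `build_few_shot_prompt` and the list-of-pairs format are assumptions chosen for illustration.

```python
def build_few_shot_prompt(examples, query):
    """Format (text, label) pairs followed by an unlabeled query."""
    lines = []
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Classification: {label}")
    # The final entry has no label; the model is expected to fill it in.
    lines.append(f"Text: {query}")
    lines.append("Classification:")
    return "\n".join(lines)


examples = [
    ("Today the weather is fantastic", "Pos"),
    ("The furniture is small.", "Neu"),
    ("I don't like your attitude", "Neg"),
]
prompt = build_few_shot_prompt(examples, "That shot selection was awful")
print(prompt)
```

Because the labels in the examples are “Pos,” “Neu,” and “Neg,” the model tends to answer in the same abbreviated form, so changing the examples is how you change the output format.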

Summary

In this post, you learned some examples of prompting. Specifically, you learned:

- What are zero-shot and few-shot prompting

- How a model works with zero-shot and few-shot prompting

- How to test out these prompting techniques with GPT4All

Very clear explanation that makes me want to follow you again!

Thank you. It is very helpful for me. But I also have two questions.

Firstly, what are zero-shot learning, few-shot learning, and one-shot learning? Can you give me some examples?

Secondly, what are the differences between one-shot learning and one-shot prompting?

This has troubled me for a long time, please help me to solve it.

Hi Summer…The following resource may be of interest:

https://towardsdatascience.com/zero-and-few-shot-learning-c08e145dc4ed