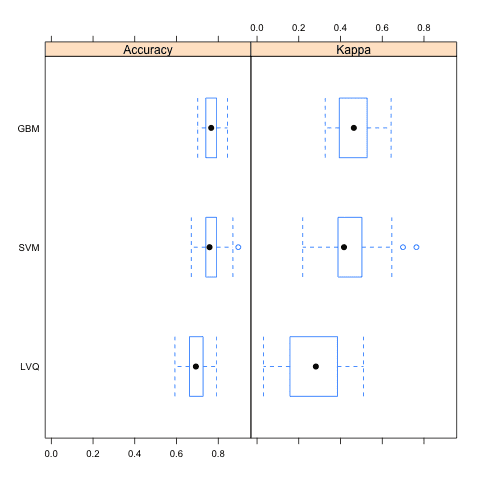

The choice of a statistical hypothesis test is a challenging open problem for interpreting machine learning results.

In his widely cited 1998 paper, Thomas Dietterich recommended the McNemar’s test in those cases where it is expensive or impractical to train multiple copies of classifier models.

This describes the current situation with deep learning models, which are both very large and trained and evaluated on large datasets, often requiring days or weeks to train a single model.

In this tutorial, you will discover how to use the McNemar’s statistical hypothesis test to compare machine learning classifier models on a single test dataset.

After completing this tutorial, you will know:

- The recommendation of the McNemar’s test for models that are expensive to train, which suits large deep learning models.

- How to transform prediction results from two classifiers into a contingency table and how the table is used to calculate the statistic in the McNemar’s test.

- How to calculate the McNemar’s test in Python and interpret and report the result.


Let’s get started.

How to Calculate McNemar’s Test for Two Machine Learning Classifiers

Photo by Mark Kao, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Statistical Hypothesis Tests for Deep Learning

- Contingency Table

- McNemar’s Test Statistic

- Interpret the McNemar’s Test for Classifiers

- McNemar’s Test in Python


Statistical Hypothesis Tests for Deep Learning

In his important and widely cited 1998 paper on the use of statistical hypothesis tests to compare classifiers, titled “Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms”, Thomas Dietterich recommends the use of the McNemar’s test.

Specifically, the test is recommended in those cases where the algorithms that are being compared can only be evaluated once, e.g. on one test set, as opposed to repeated evaluations via a resampling technique, such as k-fold cross-validation.

For algorithms that can be executed only once, McNemar’s test is the only test with acceptable Type I error.

— Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms, 1998.

Specifically, Dietterich’s study was concerned with the evaluation of different statistical hypothesis tests, some operating upon the results from resampling methods. The study focused on keeping the Type I error low, where a Type I error is the statistical test reporting an effect when in fact no effect was present (false positive).

Statistical tests that can compare models based on a single test set are an important consideration for modern machine learning, specifically in the field of deep learning.

Deep learning models are often large and operate on very large datasets. Together, these factors can mean that the training of a model can take days or even weeks on fast modern hardware.

This precludes the practical use of resampling methods to compare models and suggests the need to use a test that can operate on the results of evaluating trained models on a single test dataset.

The McNemar’s test may be a suitable test for evaluating these large and slow-to-train deep learning models.

Contingency Table

The McNemar’s test operates upon a contingency table.

Before we dive into the test, let’s take a moment to understand how the contingency table for two classifiers is calculated.

A contingency table is a tabulation or count of two categorical variables. In the case of the McNemar’s test, we are interested in binary variables, such as correct/incorrect or yes/no, recorded for a control and a treatment or for two cases. With two binary variables, this is called a 2×2 contingency table.

The contingency table may not be intuitive at first glance. Let’s make it concrete with a worked example.

Consider that we have two trained classifiers. Each classifier makes a binary class prediction for each of the 10 examples in a test dataset. The predictions are evaluated and determined to be correct or incorrect.

We can then summarize these results in a table, as follows:

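| Instance | Classifier1 Correct | Classifier2 Correct |
|----------|---------------------|---------------------|
| 1        | Yes                 | No                  |
| 2        | No                  | No                  |
| 3        | No                  | Yes                 |
| 4        | No                  | No                  |
| 5        | Yes                 | Yes                 |
| 6        | Yes                 | Yes                 |
| 7        | Yes                 | Yes                 |
| 8        | No                  | No                  |
| 9        | Yes                 | No                  |
| 10       | Yes                 | Yes                 |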

We can see that Classifier1 got 6 correct, or an accuracy of 60%, and Classifier2 got 5 correct, or 50% accuracy on the test set.

The table can now be reduced to a contingency table.

The contingency table relies on the fact that both classifiers were trained on exactly the same training data and evaluated on exactly the same test data instances.

The contingency table has the following structure:

|                       | Classifier2 Correct | Classifier2 Incorrect |
|-----------------------|---------------------|-----------------------|
| Classifier1 Correct   | ??                  | ??                    |
| Classifier1 Incorrect | ??                  | ??                    |

In the case of the first cell in the table, we must sum the total number of test instances that Classifier1 got correct and Classifier2 got correct. For example, the first instance that both classifiers predicted correctly was instance number 5. The total number of instances that both classifiers predicted correctly was 4.

Another more programmatic way to think about this is to sum each combination of Yes/No in the results table above.

|                       | Classifier2 Correct | Classifier2 Incorrect |
|-----------------------|---------------------|-----------------------|
| Classifier1 Correct   | Yes/Yes             | Yes/No                |
| Classifier1 Incorrect | No/Yes              | No/No                 |

The results organized into a contingency table are as follows:

|                       | Classifier2 Correct | Classifier2 Incorrect |
|-----------------------|---------------------|-----------------------|
| Classifier1 Correct   | 4                   | 2                     |
| Classifier1 Incorrect | 1                   | 3                     |
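
The reduction from per-instance results to a 2×2 table can also be sketched in a few lines of Python. This is a minimal illustration rather than part of the tutorial's own code: the list names and the contingency_table() helper are hypothetical, and the Yes/No values from the results table are encoded as True/False.

```python
# Per-instance results from the worked example (True = correct prediction).
clf1_correct = [True, False, False, False, True, True, True, False, True, True]
clf2_correct = [False, False, True, False, True, True, True, False, False, True]

def contingency_table(a_correct, b_correct):
    """Count the four Yes/No combinations for two classifiers on one test set."""
    if len(a_correct) != len(b_correct):
        raise ValueError('both classifiers must be scored on the same instances')
    pairs = list(zip(a_correct, b_correct))
    yes_yes = sum(a and b for a, b in pairs)
    yes_no = sum(a and not b for a, b in pairs)
    no_yes = sum((not a) and b for a, b in pairs)
    no_no = sum((not a) and (not b) for a, b in pairs)
    # Rows: Classifier1 correct/incorrect. Columns: Classifier2 correct/incorrect.
    return [[yes_yes, yes_no], [no_yes, no_no]]

table = contingency_table(clf1_correct, clf2_correct)
print(table)
```

The four counts always sum to the number of test instances, which is a quick sanity check when building the table from real predictions.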

McNemar’s Test Statistic

McNemar’s test is a paired nonparametric or distribution-free statistical hypothesis test.

It is also less intuitive than some other statistical hypothesis tests.

The McNemar’s test is checking if the disagreements between two cases match. Technically, this is referred to as the homogeneity of the contingency table (specifically the marginal homogeneity). Therefore, the McNemar’s test is a type of homogeneity test for contingency tables.

The test is widely used in medicine to compare the effect of a treatment against a control.

In terms of comparing two binary classification algorithms, the test is commenting on whether the two models disagree in the same way (or not). It is not commenting on whether one model is more or less accurate or error prone than another. This is clear when we look at how the statistic is calculated.

The McNemar’s test statistic is calculated as:

    statistic = (Yes/No - No/Yes)^2 / (Yes/No + No/Yes)

Where Yes/No is the count of test instances that Classifier1 got correct and Classifier2 got incorrect, and No/Yes is the count of test instances that Classifier1 got incorrect and Classifier2 got correct.

This calculation of the test statistic assumes that each cell in the contingency table used in the calculation has a count of at least 25. The test statistic has a Chi-Squared distribution with 1 degree of freedom.

We can see that only two elements of the contingency table are used, specifically that the Yes/Yes and No/No elements are not used in the calculation of the test statistic. As such, we can see that the statistic is reporting on the different correct or incorrect predictions between the two models, not the accuracy or error rates. This is important to understand when making claims about the finding of the statistic.
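
To make the arithmetic concrete, the statistic can be computed by hand. The sketch below assumes hypothetical disagreement counts of 40 and 25 (chosen only so that both are at least 25) and a made-up helper name, mcnemar_chi2(). It relies on the standard identity that, for a Chi-Squared distribution with 1 degree of freedom, the upper tail probability at x equals erfc(sqrt(x / 2)), so only the standard library is needed.

```python
from math import erfc, sqrt

def mcnemar_chi2(yes_no, no_yes):
    """McNemar statistic (without continuity correction) and its p-value."""
    # Only the two disagreement cells enter the calculation.
    statistic = (yes_no - no_yes) ** 2 / (yes_no + no_yes)
    # Upper tail of a Chi-Squared distribution with 1 degree of freedom.
    p_value = erfc(sqrt(statistic / 2))
    return statistic, p_value

# Hypothetical disagreement counts, both at least 25.
stat, p = mcnemar_chi2(yes_no=40, no_yes=25)
print('statistic=%.3f, p-value=%.3f' % (stat, p))
```

Note that the Yes/Yes and No/No counts never appear as arguments, which mirrors the point that the statistic depends only on the disagreements.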

The default assumption, or null hypothesis, of the test is that the two cases disagree to the same amount. If the null hypothesis is rejected, there is evidence that the cases disagree in different ways, that is, that the disagreements are skewed.

Given the selection of a significance level, the p-value calculated by the test can be interpreted as follows:

- p > alpha: fail to reject H0, no difference in the disagreement (e.g. treatment had no effect).

- p <= alpha: reject H0, significant difference in the disagreement (e.g. treatment had an effect).

Interpret the McNemar’s Test for Classifiers

It is important to take a moment to clearly understand how to interpret the result of the test in the context of two machine learning classifier models.

The two terms used in the calculation of the McNemar’s test capture the instances where one model made an error and the other did not. Specifically, these are the No/Yes and Yes/No cells in the contingency table. The test checks if there is a significant difference between the counts in these two cells. That is all.

If these cells have counts that are similar, it shows us that both models make errors in much the same proportion, just on different instances of the test set. In this case, the result of the test would not be significant and the null hypothesis would not be rejected.

Under the null hypothesis, the two algorithms should have the same error rate …

— Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms, 1998.

If these cells have counts that are not similar, it shows that both models not only make different errors, but in fact have a different relative proportion of errors on the test set. In this case, the result of the test would be significant and we would reject the null hypothesis.

So we may reject the null hypothesis in favor of the hypothesis that the two algorithms have different performance when trained on the particular training

— Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms, 1998.

We can summarize this as follows:

- Fail to Reject Null Hypothesis: Classifiers have a similar proportion of errors on the test set.

- Reject Null Hypothesis: Classifiers have a different proportion of errors on the test set.

After performing the test and finding a significant result, it may be useful to report a statistical effect size measure in order to quantify the finding. For example, a natural choice would be to report the odds ratio, or the contingency table itself, although both of these assume a sophisticated reader.

It may also be useful to report the difference in error between the two classifiers on the test set. In this case, be careful with your claims, as the significance test does not report on the difference in error between the models, only on the relative difference in the proportion of error between the models.
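
As a rough sketch of what such a report might contain, the snippet below derives a few descriptive numbers from the worked-example contingency table. The helper name summarize_effect() is hypothetical, and treating the ratio of the two disagreement counts as the odds ratio is an assumption about which odds ratio is intended; it is one common choice for paired data.

```python
def summarize_effect(table):
    """Descriptive measures to report alongside a McNemar's test result."""
    (yes_yes, yes_no), (no_yes, no_no) = table
    total = yes_yes + yes_no + no_yes + no_no
    return {
        'accuracy1': (yes_yes + yes_no) / total,
        'accuracy2': (yes_yes + no_yes) / total,
        # The accuracy difference depends only on the two disagreement cells.
        'accuracy_difference': (yes_no - no_yes) / total,
        # Ratio of the disagreement counts; left as None when no_yes is 0.
        'discordant_odds_ratio': yes_no / no_yes if no_yes else None,
    }

summary = summarize_effect([[4, 2], [1, 3]])
for name, value in summary.items():
    print(name, value)
```

These values describe the observed test set only; they complement the hypothesis test and do not replace it.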

Finally, in using the McNemar’s test, Dietterich highlights two important limitations that must be considered. They are:

1. No Measure of Training Set or Model Variability.

Generally, model behavior varies based on the specific training data used to fit the model.

This is due to both the interaction of the model with specific training instances and the use of randomness during learning. Fitting the model on multiple different training datasets and evaluating the skill, as is done with resampling methods, provides a way to measure the variance of the model.

The test is appropriate if the sources of variability are small.

Hence, McNemar’s test should only be applied if we believe these sources of variability are small.

— Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms, 1998.

2. Less Direct Comparison of Models

The two classifiers are evaluated on a single test set, and the test set is expected to be smaller than the training set.

This is different from hypothesis tests that make use of resampling methods as more, if not all, of the dataset is made available as a test set during evaluation (which introduces its own problems from a statistical perspective).

This provides less of an opportunity to compare the performance of the models. It requires that the test set is appropriately representative of the domain, which often means that the test dataset must be large.

McNemar’s Test in Python

The McNemar’s test can be implemented in Python using the mcnemar() function from Statsmodels.

The function takes the contingency table as an argument and returns a result object that holds the calculated test statistic and p-value.

There are two ways to use the statistic depending on the amount of data.

If there is a cell in the table that is used in the calculation of the test statistic that has a count of less than 25, then a modified version of the test is used that calculates an exact p-value using a binomial distribution. This is the default usage of the test:

    result = mcnemar(table, exact=True)
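
To see what the exact variant is doing, the binomial calculation can be sketched by hand with the standard library. This is an illustration under stated assumptions, not the Statsmodels source: the helper name mcnemar_exact() is hypothetical, the statistic is taken to be the smaller of the two disagreement counts, and the two-sided p-value is taken as twice the lower tail of a Binomial(n, 0.5) distribution, capped at 1. It needs Python 3.8 or later for math.comb().

```python
from math import comb

def mcnemar_exact(yes_no, no_yes):
    """Exact two-sided McNemar p-value from the two disagreement counts."""
    n = yes_no + no_yes
    k = min(yes_no, no_yes)
    # Probability of k or fewer "successes" in n fair coin flips.
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    # Double the tail for a two-sided test and cap the result at 1.
    return k, min(1.0, 2 * lower_tail)

# Disagreement cells from the worked example: Yes/No = 2, No/Yes = 1.
stat, p = mcnemar_exact(2, 1)
print('statistic=%.3f, p-value=%.3f' % (stat, p))
```

With only three disagreements in total, no split between the two cells can be unusual enough to count as evidence against the null hypothesis.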

Alternately, if all cells used in the calculation of the test statistic in the contingency table have a value of 25 or more, then the standard calculation of the test can be used.

    result = mcnemar(table, exact=False, correction=True)
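
One way to combine the two usages is a small wrapper that picks the variant from the disagreement counts. The sketch below is only a suggestion: run_mcnemar() and its min_count parameter are hypothetical names, the threshold of 25 is the rule of thumb described above, and the second table contains made-up counts used purely to trigger the standard calculation.

```python
from statsmodels.stats.contingency_tables import mcnemar

def run_mcnemar(table, min_count=25):
    """Use the exact test when either disagreement cell is below min_count."""
    yes_no, no_yes = table[0][1], table[1][0]
    use_exact = min(yes_no, no_yes) < min_count
    # correction=True applies the continuity correction when exact=False.
    result = mcnemar(table, exact=use_exact, correction=True)
    return use_exact, result

small_table = [[4, 2], [1, 3]]       # worked example from this tutorial
large_table = [[100, 40], [25, 60]]  # hypothetical counts for illustration

for name, table in (('small', small_table), ('large', large_table)):
    use_exact, result = run_mcnemar(table)
    print('%s: exact=%s, statistic=%.3f, p-value=%.3f'
          % (name, use_exact, result.statistic, result.pvalue))
```

Returning the chosen flag alongside the result makes it easy to state in a report which version of the test was applied.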

We can calculate the McNemar’s test on the example contingency table described above. This contingency table has small counts in both of the disagreement cells, and as such the exact method must be used.

The complete example is listed below.

    # Example of calculating the mcnemar test
    from statsmodels.stats.contingency_tables import mcnemar
    # define contingency table
    table = [[4, 2],
             [1, 3]]
    # calculate mcnemar test
    result = mcnemar(table, exact=True)
    # summarize the finding
    print('statistic=%.3f, p-value=%.3f' % (result.statistic, result.pvalue))
    # interpret the p-value
    alpha = 0.05
    if result.pvalue > alpha:
        print('Same proportions of errors (fail to reject H0)')
    else:
        print('Different proportions of errors (reject H0)')

Running the example calculates the statistic and p-value on the contingency table and prints the results.

We can see that the test finds no evidence of a difference in the disagreements between the two cases. The null hypothesis is not rejected.

As we are using the test to compare classifiers, we state that there is no statistically significant difference in the disagreements between the two models.

    statistic=1.000, p-value=1.000
    Same proportions of errors (fail to reject H0)

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Find a research paper in machine learning that makes use of the McNemar’s statistical hypothesis test.

- Update the code example such that the contingency table shows a significant difference in disagreement between the two cases.

- Implement a function that will use the correct version of the McNemar’s test based on the provided contingency table.

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- Note on the sampling error of the difference between correlated proportions or percentages, 1947.

- Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms, 1998.


Summary

In this tutorial, you discovered how to use the McNemar’s statistical hypothesis test to compare machine learning classifier models on a single test dataset.

Specifically, you learned:

- The recommendation of the McNemar’s test for models that are expensive to train, which suits large deep learning models.

- How to transform prediction results from two classifiers into a contingency table and how the table is used to calculate the statistic in the McNemar’s test.

- How to calculate the McNemar’s test in Python and interpret and report the result.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi Jason,

Thanks for this nice post. Any practical python post about 5×2 CV + paired t-test coming soon?

Best,

Elie

Good question. 5×2 is straightforward with sklearn. I do have a post on how to code the t-test from scratch scheduled. It can be modified with the suggestions from:

https://machinelearningmastery.com/statistical-significance-tests-for-comparing-machine-learning-algorithms/

Thanks Jason. I found a nice Kaggle kernel treating 5×2 CV t-test: https://www.kaggle.com/ogrellier/parameter-tuning-5-x-2-fold-cv-statistical-test

Nice.

What if you have a nested cross validation? Can we sum up all the folds and create a single contingency matrix?

Thank you Jason.

I’ve learnt a lot from you

I’m glad to hear that.

Thank you for the post. I wanted to ask you about K x K contingency tables with K>2; so non-binary classifiers? Can this test be applied to that or is that a restriction, for which a generalised test like Cochran’s Q should be used?

Not this test I believe.

Could you reduce your multi-class labels to “correct”/”incorrect” by saying e.g. correct = the right label is in the top 3 and then use McNemar’s test like you described?

Perhaps, I’m not sure that the findings will be valid/sensible.

First of all, thank you Jason for your article. It’s helped me a lot! In addition, I wanted ask you a question:

Using the statsmodels library, could I change the condition value Alpha to 0.1, for instance, and evaluate if the pvalue is greater than or lesser than this new Alpha Value to reject or not the H0?

Thank you in advance for the answer!

You can use any alpha you wish; it is not coded in the statsmodels library, it is in our code.

Is McNemar’s Test just for comparing 2 machine learning classifier models? Or can it be used to compare more than 2?

Yes, just pair-wise comparisons.

Thanks for the answer!

I am developing a scientific work in the area of machine learning but I am having difficulty finding statistical tests for models of machine learning classifiers that compare more than two models.

In my research I found the Friedman test that meets the requirements, and if it is not uncomfortable you would know some other test that meets this requirement?

Thank you!

Yes, I recommend reading this post:

https://machinelearningmastery.com/statistical-significance-tests-for-comparing-machine-learning-algorithms/

Hello Jason, kindly help. How do I figure out f11, f12, f21, and f22 from my confusion matrix below. I know that in Remote Sensing, many authors have reduced a multi-class confusion matrix into a 2-by-2 matrix, but I don’t know how. See my R-code below.

    classes = c("Maize","Grass","Urban","Bare_soil","Water","Forest")
    Maize=c(130,13,12,0,0,12); Grass=c(40,4490,68,92,112,129); Urban=c(7,60,114,2,100,68)
    Bare_soil=c(0,51,0,11,0,0); Water=c(0,5,3,4,1474,0); Forest=c(50,156,350,0,51,2396)
    CM1 <- matrix(c(Maize, Grass, Urban, Bare_soil, Water, Forest), nrow = 6,
                  ncol = 6, byrow=TRUE, dimnames=list(classes,classes) )

    Maize2=c(226,0,1,0,0,0); Grassland2=c(6,4870,4,1,0,1); Urban2=c(1,0,526,1,0,0)
    Bare_soil2=c(0,2,4,137,0,0); Water2=c(0,0,1,0,1691,0); Forest2=c(0,0,0,0,0,2528)
    CM2 <- matrix(c(Maize2, Grassland2, Urban2, Bare_soil2, Water2, Forest2), nrow = 6,
                  ncol = 6, byrow=TRUE, dimnames=list(classes,classes) )

I would like to figure out the mcnemar's 2-by-2 input matrix from this data, so I can do a statistical significance test between the 2 matrices (model predictions).

Sorry, I cannot debug your code for you, perhaps try posting to stackoverflow?

I don’t see how you could reduce a n-class result to a 2×2 matrix, unless you had multiple pairwise matrices.

Hi Jason, thank you for this wonderful post! Truly helped me a lot.

You’re welcome, I’m happy to hear that.

Hello Jason, you truly know how to explain clearly, and concisely. Out of all the articles/videos I saw explaining McNemar’s test, yours gets the prize. Thank you so much!

Thanks, I’m glad it helped!

Is the data in the contingency table filled from validation results or the test results?

Test results.

What should I do when the “fail to reject H0” occur?

Try another model or config?

Which case is better: “reject H0” or “fail to reject H0”?

Better for what?

I work on a regression problem

I try using the paired t-test using the ttest_rel() on validation loss the result is “fail to reject H0”

however, when I use the ttest_rel() on validation correlation coefficient, the result is “reject H0”

Which one should I use; the validation loss or the validation correlation?

Thus, we can not compare two models, if their statistical test is “fail to reject H0”?

Does “reject H0” means that the any difference in the two models is due to the two models are different?

Yes, but it is a probabilistic answer, not crisp. E.g. still chance of 5% that results are not different.

No. Models are compared and a failure to reject null suggests no statistical difference between the results.

I observed that two models with large difference in their accuracy gives “reject H0”.

however, models with small differences in their accuracy results give “fail to reject H0”.

Does it mean that models must have big differences in their accuracy results in order to compare them?

No, only that the difference must be statistically significant. Smaller differences may require large data samples.

Is there is any reason to set alpha = 0.05?

Yes, to have 95% probability of no statistical fluke.

sorry, but i am confused between “reject H0” and “failure reject”. which one means that the two models are different?

fail to reject H0 suggests that the results are the same distribution, no difference.

Reject H0 suggests they are different.

When the test result is “fail to reject”, should I run one model again with a different seed until the result becomes “reject H0” in order to compare two models?

No. This does not make any sense.

Perhaps email me directly and outline what you are trying to achieve:

https://machinelearningmastery.com/contact/

Hi Jason,

plz check the correctness of the following statement:

“In order to compare two regressors, they must have the same Gaussian distribution.”

Where is that written exactly?

I need is to compare more than one regression model.

Can you please revise with me the steps for performing this comparison:

1- use a statistical test on each two models, if the result is “fail to reject”, then the performance of two models are the same.

otherwise, if the result is “reject H0” then the performance of the two models are different. thus I have to compare them using the MSE

The statistical tests are performed on the MSE scores for each model.

A McNemar’s test would not be appropriate, consider a modified paired student’s test.

This post is fantastic! Well done!!!

Simple question…..Say that two observers classify 100 images into 3 classes: cat, dog, deer. How can we statistically compare the agreement between the two observers?…I guess McNemar could be used to test separately (i.e. for each class) the observers agreement, but what about the overall agreement??

Good question. Perhaps a chi-squared test, or the distance between two discrete distributions, perhaps a cross entropy score?

I found an answer, so to help out the community:

1. For categorical variables (like in the example given), Cohen’s kappa is a suitable test.

2. For ordinal variables (e.g. low, medium. high), Weighted kappa (a Cohen’s kappa variation).

3. To compare >2 observers, Fleiss’ kappa (either for ordinal or categorical variables).

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5654219/

Finally, for >2 observers and ORDINAL variables, some people say that ‘Kendall coefficient of concordance’ is more suitable than Fleiss’ kappa.

https://www.youtube.com/watch?v=X_3IMzRkT0k

Thanks for sharing.

Hello Jason, thanks for the post. Is it applicable to compare and select regressors? do you have a post of hypothesis test for regression tasks? thanks

No, this test is for classification only.

For regression, you can use any of the tests for comparing sample means. E.g. the student t-test.

What would that mean if p value is always 0 for a given contingency table?

See this:

https://en.wikipedia.org/wiki/Fisher%27s_exact_test

Great tutorial!! I just wanted to ask whether the exact test is necessary if one of the cells that are not used (correct/correct, incorrect/incorrect) are 0. The rest are always > 25

Thanks!

I believe so.

Great explanation Dr. Jason!

I had a related question from a business point of view. Imagine a situation which goes like this: While presenting a new classification algo/model to my client, I asked him to run his existing algo on 1000 objects and give me the Precision, Recall as well as the “Yes” and “No” metrics. (Their objective is to have high Precision)

I then took the same 1000 objects and ran my new algo. I found the Precision and Recall to be slightly better than the old one. I also used the McNemar’s test like you have outlined and the p-value came out to be less than 0.05, thus giving me the confidence to tell the client that the new algo is different from the old one.

But can I also say that new algo is “better” than the old one in terms of Precision and Recall? Since only 2 algos are being compared, is it a valid statement? If not, how can I construct a McNemar’s test, or something similar where I can use Precision and Recall as the entries and conclude that one is statistically better than the other?

If the scores are better and the test says it’s probably real, then yes.

Or at least, you can qualify the statement and say: most likely better, or better with a significance level of xxx.

Thank you, that helped me make a decision!

I tried McNemar’s approach and also that of comparing “Wins” using the binomial distribution.

Both are good approaches to know and I finally used the binomial approach to present the findings to the customer, as they found it more intuitive.

I wrote a post to explain how we did it; hope it helps others too: https://medium.com/@himanshuhc/how-to-acquire-clients-for-ai-ml-projects-by-using-probability-e92ca0f3ba68

Well done.

Hello Jason,

thank you for your nice posts! I always learn a lot from these.

I have a question regarding this post and your “randomness in Machine Learning” posts, e.g. this post: https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

I want to compare a Deep Learning Model (stochastic due to random initialization etc.) and SVM model performance on the same data set. How would you practically proceed on this task to get a significant result?

Apart from this, when researching this, I found this paper, where the McNemar test is used to make a claim about which method works best for the classification of agricultural landscapes:

https://doi.org/10.1016/j.rse.2011.11.020

Thanks.

McNemar is for a single run. For multiple runs, you can use a paired student’s t-test. See this:

https://machinelearningmastery.com/statistical-significance-tests-for-comparing-machine-learning-algorithms/

Hi Jason,

Nice post!

I was wondering if you could clarify what the appropriate statistical test would be for comparing more than 2 techniques that produce a continuous score on each sample in a test set. The test set predictions are not repeated, and the scores are not normally distributed. So the conditions are:

– paired samples across all techniques

– data is not normally distributed

– multiple comparisons

Here’s an example truncated data table (let’s pretend the values are Dice score):

Test Set Case | U-Net | FCNN | DenseNet | TiramisuNet

1 | 0.2 | 0.7 | 0.1 | 0.9

2 | 0.45 | 0.2 | 0.02 | 0.8

3 | 0.0 | 1.0 | 0.5 | 0.6

…

Since there are more than 2 techniques to compare, we have to use something like an ANOVA to avoid increasing errors in multiple comparisons. However an ANOVA cannot be used for non-normal data.

Perhaps pair-t test for the mean of the outputs or mean error in the outputs.

Unfortunately I don’t think a paired t-test can be used because the normality assumption is not valid, and a t-test is not suitable for comparing more than 2 groups.

For the sample means? Fair enough, try Wilcoxon Signed-Rank Test:

https://machinelearningmastery.com/nonparametric-statistical-significance-tests-in-python/

Yes, the sample means. The problem is that I cannot find an extension of the Wilcoxon Signed-Rank Test for more than 2 groups (i.e. what ANOVA with repeated measures is to paired t-tests). The Kruskal-Wallis test comes closest, but that is not valid for paired samples, which is what I have in my case.

Use the paired test multiple times, for each pair of results. This is a very common procedure.

If multiple groups are compared, the Type 1 error grows significantly: https://en.wikipedia.org/wiki/Multiple_comparisons_problem

Which is why paired tests are not recommended when comparing more than 2 groups (i.e. you shouldn’t do multiple t-tests or wilcoxon signed-rank tests when comparing more than one comparison).

Yes, thanks.

If you are interested I found a solution in this very helpful paper: https://www.jmlr.org/papers/volume17/benavoli16a/benavoli16a.pdf

Solution: Do the omnibus Friedman’s test and then wilcoxon signed-rank tests for post-hoc analysis between groups (the alpha level has to be adjusted to prevent large family-wise error rates)

Thanks for sharing.

And the Friedman test is not an extension of the Wilcoxon Signed-Rank Test (although it is commonly thought to be), it is actually an extension of the sign test

Hi, I was wondering if the sample size matters when doing the McNemar test. If the incongruences (yes/no, no/yes) were, let’s say 10 and 50, the difference between the models’ performances would seem much more significant if we had a 100 samples dataset than if we had a 1000 samples data set. Am I right? Or does the sample size not matter?

PS: If it does matter, how can I check if the McNemar test statistic I get is significant taking into account the size of my dataset?

Perhaps there is a calculation of statistical power appropriate for the test, I recommend checking a good textbook on the topic.

It does, need at least above a min number of examples in each cell of the matrix. 23 or something if I recall correctly off the cuff.

Hi Jason,

I have run two classifiers, MLP and RF, to classify infected and healthy oil palm trees. How can I choose the more significant between these two classifiers using statistical analysis and also the suitable variables for the classification?

Variable Dataset MLP RF

1 Training 54.25 54.75

Testing 52.30 40.80

2 Training 75.45 52.15

Testing 95.65 92.70

3 Training 61.90 56.40

Testing 78.00 64.20

4 Training 60.25 58.05

Testing 85.40 79.55

5 Training 63.50 75.40

Testing 77.25 66.35

6 Training 64.50 63.60

Testing 79.40 69.30

thanks

Model selection can be challenging.

Sorry, I try to avoid interpreting results for people. Only you can know what a good model will be for your project.

Perhaps find a balance of minimizing model complexity and maximizing model performance.

thanks for the suggestion

Thank you for the nice post. I have a question:

I have used stratified 5-fold cross validation to generate five data folds to compare several (N) deep learning models on a single dataset. I can’t change the cross validation setup now. Is it beneficial to do a pairwise McNemar’s test after summing up all the contingency metrics from different folds, I mean Cochran’s Q test followed by McNemar? Any suggestions will be highly appreciated.

You’re welcome.

I don’t think so, I believe mcnemar’s is only appropriate for a single train/test split, instead consider a modified t-test:

https://machinelearningmastery.com/statistical-significance-tests-for-comparing-machine-learning-algorithms/

Hi Jason,

Thanks a lot for the great article!

May I ask what statistical method would be suitable for comparing 2 multi-class algorithms which have been trained on a very large dataset?

Thank you!!

Calculate a metric for each algorithm, like accuracy then select a statistical test to compare the two scores. The above test might be appropriate.

Hi Jason, thanks for this very neat article.

In my scenario, I’m training two models on slightly different datasets (Dataset A and Dataset B) and evaluating them on the exact same dataset.

You write that “The contingency table relies on the fact that both classifiers were trained on exactly the same training data and evaluated on exactly the same test data instances.”

Why is that the case for the training data? My goal is to show that a model trained with Dataset A performs better/worse than a model trained on Dataset B. Is McNemar’s test not applicable here?

It should be fine as long as each model makes predictions on the same third dataset.

Great read, thanks!

Is there a solution also for non-categorical variables?

For example, my models predict the probability that a user will click an ad, and at the end I know whether the user clicked or not.

So I can say that if a user clicked, the model that predicted a higher probability was right, and the other was wrong.

And if the user didn’t click then the other way around – the model that predicted the lower probability was right, and the other model was wrong.

And then I can run the chi-square test on the proportions.

Would that work?

I disagree with your “right” and “wrong” because it is a probability. But I agree that, if you collected enough samples, using chi-square should give you a good measure of the performance.

Hi, First of all, great post.

I want to compare my model with other models, given that all the models were trained on different training datasets (around 200 proteins) with different algorithms. The problem is that I have a very small test dataset (around 20 proteins). I want to compare the performance of each model on this test dataset only. I used McNemar’s test, but I read in this post that it requires the models to be trained on the same dataset. Is that really so? If yes, could you suggest some statistical tests that I could use to compare the performance of my model with state-of-the-art models on this small test dataset?

Hi Ajay,

The following resource may help you understand some of the “dangers” and considerations that must be made when working with very small test datasets:

https://www.tgroupmethod.com/blog/small-data-big-decisions/

Can McNemar’s test be applied to a multiclass/multilabel problem if we only consider whether each prediction is correct/incorrect?

Did you resolve this? I’ve just read through this article and all the comments and I’m left with the exact same question. A statistician has just recommended that I should do this but I’m unsure.

May I ask, mustn’t the data be paired in order to run the McNemar test?

Also, is a paired permutation test applicable here? Each pair of predictions for each test example is shuffled, such that the prediction of classifier A could equally occur as the prediction of classifier B under the null hypothesis?

I suggested this recently to a statistician and they didn’t really give me any feedback and instead referred me to a McNemar test (in which case I would have to binarise my data according to whether it was correct or not), which is what brought me here.

Hi Michael…The following is a great resource for many of your questions:

https://towardsdatascience.com/have-you-ever-evaluated-your-model-in-this-way-a6a599a2f89c
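On the correct/incorrect reduction raised in this thread: the contingency table only records whether each classifier was right or wrong on each paired test example, so the number of classes does not enter into it. The following is a minimal sketch of that binarisation, assuming single-label multiclass predictions; the function name `contingency_table` and the toy labels are made up for illustration and are not from the article.

```python
def contingency_table(y_true, pred_a, pred_b):
    """Build the 2x2 table of paired correct/incorrect outcomes.

    Layout (rows: classifier A, columns: classifier B):
        [[both correct,          A correct / B incorrect],
         [A incorrect / B correct, both incorrect       ]]
    """
    table = [[0, 0], [0, 0]]
    for truth, a, b in zip(y_true, pred_a, pred_b):
        row = 0 if a == truth else 1  # 0 = A correct, 1 = A incorrect
        col = 0 if b == truth else 1  # 0 = B correct, 1 = B incorrect
        table[row][col] += 1
    return table


# Hypothetical three-class example; each position is the same test instance
# scored by both classifiers, which is what makes the data paired.
y_true = ["cat", "dog", "bird", "cat", "dog", "bird", "cat", "dog"]
pred_a = ["cat", "dog", "cat", "cat", "bird", "bird", "dog", "dog"]
pred_b = ["cat", "cat", "bird", "cat", "bird", "bird", "cat", "cat"]

table = contingency_table(y_true, pred_a, pred_b)
print(table)
```

Only the two off-diagonal cells (where the classifiers disagree) feed into the test statistic. Whether this reduction is appropriate for a multilabel problem depends on how "correct" is defined per example, which is a choice this sketch does not make for you.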

Hi

First of all great article!

I was wondering if the McNemar test can be used for machine learning models that use different features to predict the same binary outcome of the same test set? For example model x using features a is significantly different from model y using features b on the same test dataset.

Thanks in advance!

Hi Arthur…I see no reason this test should not be useful in this case. Proceed with it and let us know your findings.

Hi James

Do you mean that the test should not be used (the test is not useful) or that the test is indeed useful and you see no reason why it should not be useful?

Thank you for the clarification!

To anyone wondering why the formula provided does not match the values calculated in Python:

- exact=True calculates an exact p-value using a binomial distribution

- exact=False, correction=True will use (|b - c| - 1)² / (b + c). See Wikipedia for more info

- exact=False, correction=False will calculate the value using the formula provided: (b - c)² / (b + c)
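To see how the three settings described above can give different numbers for the same table, here is a small standard-library sketch that computes each variant by hand. It is an illustration of the formulas in this comment, not the library's own code; the function name `mcnemar_variants` and the counts b=10, c=25 are assumptions chosen for the example. The chi-squared p-value uses the identity for one degree of freedom, P(X > x) = erfc(sqrt(x / 2)).

```python
from math import comb, erfc, sqrt


def mcnemar_variants(b, c):
    """Compute McNemar's test three ways from the disagreement counts b and c."""
    n = b + c
    if n == 0:
        raise ValueError("b + c must be greater than zero")

    def chi2_sf_1df(x):
        # Survival function of a chi-squared distribution with 1 degree of freedom.
        return erfc(sqrt(x / 2.0))

    # Exact: two-sided binomial test on min(b, c) with n trials and p = 0.5.
    k = min(b, c)
    p_exact = min(1.0, 2.0 * sum(comb(n, i) for i in range(k + 1)) / 2 ** n)

    # Chi-squared approximation with continuity correction.
    stat_corrected = (abs(b - c) - 1) ** 2 / n

    # Chi-squared approximation without correction (the formula in the article).
    stat_plain = (b - c) ** 2 / n

    return {
        "exact": (k, p_exact),
        "corrected": (stat_corrected, chi2_sf_1df(stat_corrected)),
        "uncorrected": (stat_plain, chi2_sf_1df(stat_plain)),
    }


results = mcnemar_variants(b=10, c=25)
for name, (statistic, p_value) in results.items():
    print(f"{name}: statistic={statistic:.3f}, p-value={p_value:.4f}")
```

The continuity correction always shrinks the statistic, so the corrected p-value is never smaller than the uncorrected one. This is one reason a hand calculation with the plain formula will not match a result computed with the correction switched on.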