Having one or two algorithms that perform reasonably well on a problem is a good start, but sometimes you may be incentivised to get the best result you can given the time and resources you have available.

In this post, you will review methods you can use to squeeze out extra performance and improve the results you are getting from machine learning algorithms.

When tuning algorithms, you must have high confidence in the results given by your test harness. This means you should use techniques that reduce the variance of the performance measure you use to assess algorithm runs. I suggest k-fold cross-validation with a reasonably high number of folds (the exact number depends on your dataset).
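To make the test harness idea concrete, here is a minimal sketch of k-fold cross-validation in plain Python, with no libraries assumed. The `fit` and `predict` callables and the majority-class baseline are hypothetical stand-ins for whatever algorithm you are evaluating. Reporting the standard deviation alongside the mean gives a rough sense of how stable the estimate is.

```python
import random
import statistics


def k_fold_indices(n_rows, k, seed=1):
    """Shuffle the row indices and deal them into k roughly equal folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(fit, predict, X, y, k=10, seed=1):
    """Return (mean, stdev) of accuracy over k train/test splits.

    fit(X_train, y_train) -> model and predict(model, x) -> label are
    hypothetical callables standing in for the algorithm under test.
    """
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical baseline: always predict the most common training label.
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)


def predict_majority(model, x):
    return model


X = [[i] for i in range(100)]
y = [0] * 70 + [1] * 30
mean_acc, sd_acc = cross_validate(fit_majority, predict_majority, X, y, k=10)
print(f"accuracy: {mean_acc:.3f} (+/- {sd_acc:.3f})")
```

In practice, a library implementation such as scikit-learn's `KFold` or `cross_val_score` would usually replace the hand-written loop.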

Tuning Fork (photo attributed to eurok, some rights reserved)

The three strategies you will learn about in this post are:

- Algorithm Tuning

- Ensembles

- Extreme Feature Engineering

Algorithm Tuning

The place to start is to get better results from algorithms that you already know perform well on your problem. You can do this by exploring and fine-tuning the configuration of those algorithms.

Machine learning algorithms are parameterized, and modifying those parameters can influence the outcome of the learning process. Think of each algorithm parameter as a dimension on a graph, with each value of that parameter a point along its axis. Three parameters would define a cube of possible configurations for the algorithm, and n parameters an n-dimensional hypercube of possible configurations.

The objective of algorithm tuning is to find the best point or points in that hypercube for your problem. You will be optimizing against your test harness, so, again, do not underestimate the importance of spending the time to build a trusted test harness.

You can approach this search problem by using automated methods that impose a grid on the space of possible configurations and sample where good algorithm configurations might be. You can then use those points as starting points for an optimization algorithm that zooms in on the best performance.
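The grid-then-zoom idea can be sketched in a few lines of Python. This is a minimal coarse-to-fine grid search, assuming every parameter is a real number within known bounds and that a higher score is better. The `score` function is a hypothetical stand-in for a run of your test harness, and repeatedly shrinking the grid around the best point is a simple substitute for a proper optimization algorithm.

```python
import itertools


def grid(ranges, steps):
    """Evenly spaced values along each parameter axis, combined into all configurations."""
    axes = [[low + (high - low) * i / (steps - 1) for i in range(steps)]
            for low, high in ranges]
    return itertools.product(*axes)


def coarse_to_fine_search(score, ranges, steps=5, rounds=3):
    """Search a box of parameter values, shrinking it around the best point each round.

    Requires steps >= 2. Returns (best_point, best_score).
    """
    best_point, best_score = None, float("-inf")
    for _ in range(rounds):
        for point in grid(ranges, steps):
            s = score(point)
            if s > best_score:
                best_point, best_score = point, s
        # Zoom in: keep one grid cell either side of the best point, clipped to the old box.
        ranges = [(max(low, centre - (high - low) / (steps - 1)),
                   min(high, centre + (high - low) / (steps - 1)))
                  for (low, high), centre in zip(ranges, best_point)]
    return best_point, best_score


# Hypothetical harness: a smooth score that peaks at a=0.3, b=7.
def toy_score(params):
    a, b = params
    return -((a - 0.3) ** 2) - (b - 7) ** 2


best, best_s = coarse_to_fine_search(toy_score, ranges=[(0.0, 1.0), (0.0, 10.0)])
print(best, best_s)
```

Keeping `steps` and `rounds` small keeps the search coarse-grained.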

You can repeat this process with a number of well-performing methods and explore the best you can achieve with each. I strongly advise that the process be automated and reasonably coarse-grained, as you can quickly reach the point of diminishing returns (fractional percentage improvements in performance) that may not translate to the production system.

The more finely you tune an algorithm’s parameters, the more biased the algorithm will be toward the training data and test harness. This strategy can be effective, but it can also lead to more fragile models that overfit your test harness and don’t perform as well in practice.

Ensembles

Ensemble methods are concerned with combining the predictions of multiple models in order to get improved results. Ensemble methods work well when you have multiple “good enough” models that specialize in different parts of the problem.

This can be achieved in many ways. Three ensemble strategies you can explore are:

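- Bagging: Known more formally as Bootstrap Aggregation, this is where the same algorithm gets different perspectives on the problem by being trained on different bootstrap samples of the training data (random samples drawn with replacement), and the resulting models’ predictions are combined, for example by voting.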

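- Boosting: Models, usually of the same type, are trained one after another on the same training data, with each new model giving more weight to the training instances that the previous models got wrong.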

- Blending: Known more formally as Stacked Generalization or Stacking, this is where the predictions of a variety of models are taken as input to a new model that learns how to combine them into an overall prediction.
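As an illustration of bagging, here is a minimal sketch in plain Python. It assumes a single numeric feature and binary 0/1 labels, and it uses a one-feature decision stump as the base learner only to keep the example short (an illustrative choice, not something the post prescribes). Each stump is trained on a bootstrap sample drawn with replacement, and the ensemble predicts by majority vote. Boosting and blending are not shown.

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Fit a one-feature threshold classifier (decision stump) by brute force."""
    best = None
    for threshold in sorted(set(X)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= threshold else right) != label
                         for x, label in zip(X, y))
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def fit_bagged(X, y, n_models=25, seed=1):
    """Bagging: train one stump per bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_bagged(models, x):
    """Aggregate the ensemble's predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


X = list(range(20))
y = [0] * 10 + [1] * 10
ensemble = fit_bagged(X, y, n_models=25)
print(predict_bagged(ensemble, 2), predict_bagged(ensemble, 17))
```

Libraries such as scikit-learn provide `BaggingClassifier`, `AdaBoostClassifier` and `StackingClassifier` for the three strategies.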

It is a good idea to get into ensemble methods after you have exhausted more traditional methods. There are two good reasons for this: ensembles are generally more complex than traditional methods, and the traditional methods give you a good baseline from which you can improve and draw on to create your ensembles.

Ensemble Learning (photo attributed to ancasta1901, some rights reserved)

Extreme Feature Engineering

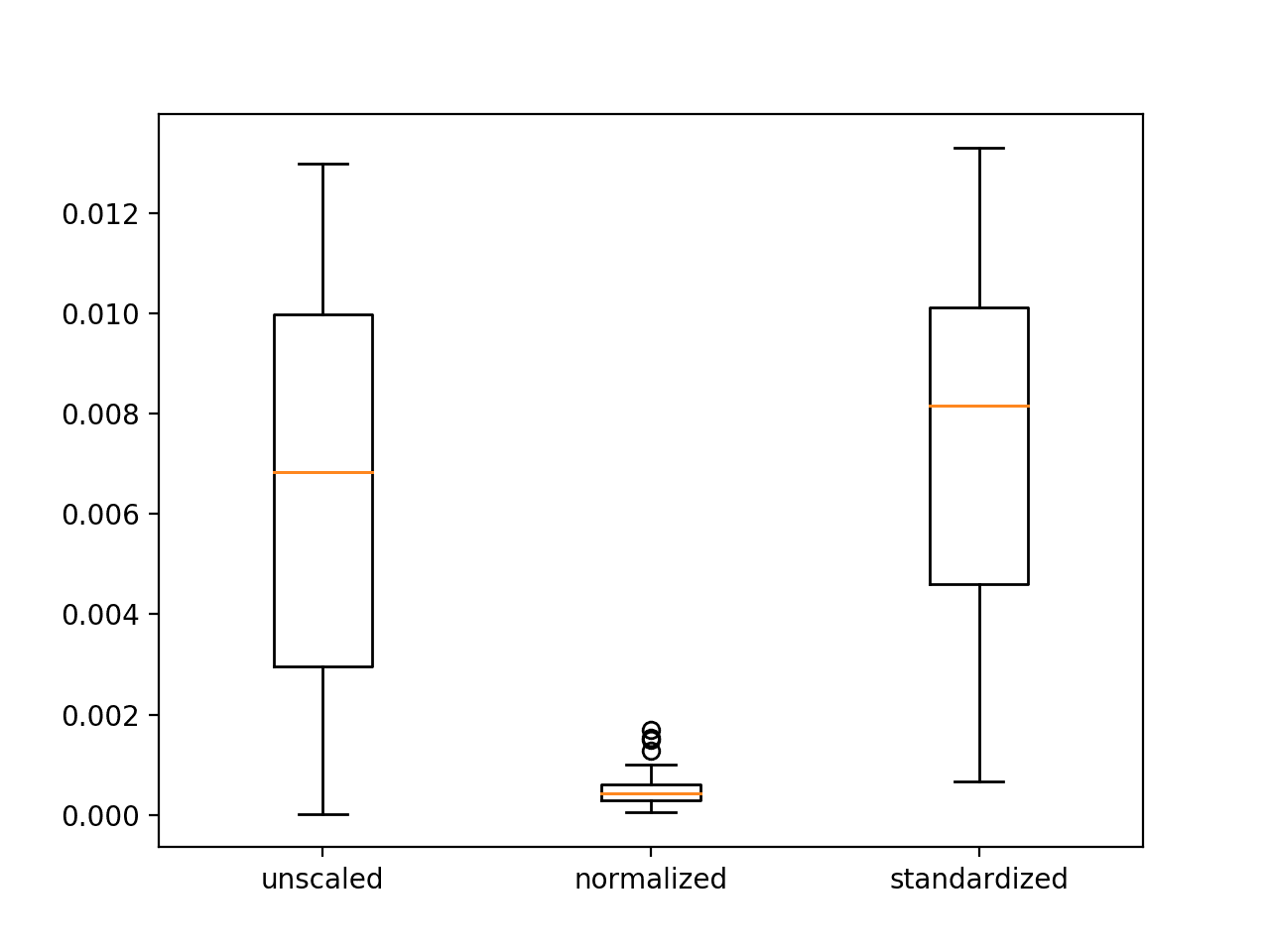

The previous two strategies have looked at getting more from machine learning algorithms. This strategy is about exposing more structure in the problem for the algorithms to learn. In data preparation, you learned about feature decomposition and aggregation as ways to better normalize the data for machine learning algorithms. In this strategy, we push that idea to the limit. I call this strategy extreme feature engineering, although really the term “feature engineering” would suffice.

Think of your data as having complex multi-dimensional structures embedded in it that machine learning algorithms know how to find and exploit to make decisions. You want to best expose those structures to algorithms so that the algorithms can do their best work. A difficulty is that some of those structures may be too dense or too complex for the algorithms to find without help. You may also have some knowledge of such structures from your domain expertise.

Take attributes and decompose them widely into multiple features. Technically, what you are doing with this strategy is reducing dependencies and non-linear relationships into simpler independent linear relationships.

This might be a foreign idea, so here are two examples:

- Categorical: You have a categorical attribute that has the values [red, green, blue]. You could split that into three binary attributes, red, green and blue, and give each instance a 1 or 0 value for each.

- Real: You have a real-valued quantity with values ranging from 0 to 1000. You could create 10 binary attributes, each representing a bin of values (0-99 for bin 1, 100-199 for bin 2, and so on up to 900-1000 for bin 10), and give each instance a 1 or 0 value for each bin.
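Both decompositions in the list above are easy to write by hand. This is a minimal sketch in plain Python; the function names are hypothetical, and the bin edges assume values lie between 0 and 1000, with 1000 itself placed in the last bin. In pandas, `pd.get_dummies` and `pd.cut` cover the same ground.

```python
def one_hot(values, categories):
    """Split a categorical attribute into one binary (1/0) attribute per category."""
    return [[1 if value == category else 0 for category in categories] for value in values]


def bin_indicators(values, low=0.0, high=1000.0, n_bins=10):
    """Replace a real value with n_bins binary attributes, one per equal-width bin.

    Values outside [low, high] are clamped into the first or last bin.
    """
    width = (high - low) / n_bins
    rows = []
    for value in values:
        index = int((value - low) // width)
        index = min(max(index, 0), n_bins - 1)
        rows.append([1 if i == index else 0 for i in range(n_bins)])
    return rows


print(one_hot(["red", "blue"], ["red", "green", "blue"]))  # [[1, 0, 0], [0, 0, 1]]
print(bin_indicators([0, 150, 1000]))
```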

I recommend performing this process one step at a time: create a new train/test dataset for each modification you make, then test algorithms on that dataset. This will start to give you an intuition for which attributes and features in the dataset expose more or less information to the algorithms, and how they affect the performance measure. You can use these results to guide further extreme decompositions or aggregations.

Summary

In this post, you learned about three strategies for getting improved results from machine learning algorithms on your problem:

- Algorithm Tuning, where discovering the best model is treated as a search problem through the model parameter space.

- Ensembles, where the predictions made by multiple models are combined.

- Extreme Feature Engineering, where the attribute decomposition and aggregation seen in data preparation is pushed to the limit.

Resources

If you are looking to dive deeper into this subject, take a look at the resources below.

- Machine Learning for Hackers, Chapter 12: Model Comparison

- Data Mining: Practical Machine Learning Tools and Techniques, Chapter 7: Transformations: Engineering the input and output

- The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Chapter 16: Ensemble Learning

Update

For 20 tips and tricks for getting more from your algorithms, see the post:

The content is very clear, and what really interests me is the second method, ensembles. Through this method, many AI algorithms can be combined to solve one problem, but it seems hard to organize the different algorithms. As far as I am concerned, the most used approach is still selecting and creating more useful features (extreme feature engineering) or choosing the best algorithm after tuning.

I also want to ask some questions about writing a blog. Your website looks really good, and I like it very much. My questions are as follows:

1. How do you make the inserted pictures look like those in your articles: centered, framed, and with more information shown at the bottom?

2. How do you write math formulas? Are there any good LaTeX plugins you recommend?

Thanks a lot for your patience. Look forward to your reply.

Thanks!

I use WordPress with the Canvas plug-in, which does all the formatting for me. I don’t have any LaTeX plug-ins and generally don’t have any maths on my site.

In the real world, Jason, I think we would use one or all three approaches depending on the time available and the complexity of the problem and dataset at hand. The approach is definitely not linear; as is proverbial in software engineering, it all “depends”. This is a good post. I like the way you relate ML to software engineers. Thank you.

Good point Sravan.

Just a random question –

I want to predict the outcome of one data row, and the accuracy of the model is 50%. If I re-predict it three times in a row, what happens to the accuracy?

Will it be 87.5% (50+25+12.5)?

Or will it remain 50%?

Hi Jason,

First of all, thanks for this great post. I have a question regarding extreme feature engineering. In the ‘Real’ example, you said it is sometimes beneficial to change a continuous feature into binary features (10 binary features in your example). I understand this is beneficial in practice, but could you please explain why the engineered binary features are better than the original feature for some ML algorithms? Why do the engineered features make it easier for some ML algorithms to discover the pattern in the data? It would be great if you could provide a simple example. Thank you.

Some algorithms would model the different categorical variables. By making them binary, we pull the complexity out of the model and have it in the input representation, meaning less work for the model to do.

Hello Jason,

As usual, a very simple and plain explanation. I have a question about Boosting, where you mentioned “Different algorithms are trained on the same training data.” As far as I know, the AdaBoost algorithm initially puts equal weights on all training data points and then trains a classifier. Misclassified data points then get higher weights and are included in the training data for the next classifier. See, for example, http://mccormickml.com/2013/12/13/adaboost-tutorial/ (the third equation, which decides which data points are included to train the next classifier).

Is AdaBoost any different from the Boosting that you mentioned?

I have been a little fast and loose with describing boosting. I cover AdaBoost here:

https://machinelearningmastery.com/boosting-and-adaboost-for-machine-learning/

Thanks Jason for the info. I have a question about algorithm tuning.

Does the “type” of data affect the behavior of parameters?

In other words, suppose an algorithm has n parameters and for a given training set, increasing parameter n1 results in better prediction scores.

If we use a different type of data, will increasing n1 still help?

It really depends on the specific data you are working with.

I would suggest trying some changes and evaluating their effect on model skill.

Thanks.

Thanks for the good article, Jason. I personally found the third strategy (Extreme Feature Engineering) the hardest of them all. It might be due to the fact that it is the most time consuming, but it tends to be the most helpful as well. Ensemble methods are a great aid as well.

Of the techniques you described, which is the most helpful to you?

Nice.

Ensembles take me a long way. Mainly ensembles of models built on different views of the problem/dataset.

I think categorical values are also involved in the normalization process, where you want to make sure all numerical and categorical column values fall within the range -1 to 1. Using pd.get_dummies(), or a key-value mapping, helps in normalizing the categorical columns. To sum it all up, I often test the accuracy of each feature individually in order to determine which features should make up my input for improved accuracy. Very productive article, congratulations.

It depends on your data preparation step. If the categorical variables are one-hot encoded, there is no need to scale them.