Spot-checking algorithms is about getting a quick assessment of a bunch of different algorithms on your machine learning problem so that you know what algorithms to focus on and what to discard.

In this post, you will discover the 3 benefits of spot-checking algorithms, 5 tips for spot-checking on your next problem, and the top 10 most popular data mining algorithms that you could use in your suite of algorithms to spot-check.

Spot-Checking Algorithms

Spot-checking algorithms is a part of the process of applied machine learning. On a new problem, you need to quickly determine which type or class of algorithms is good at picking out the structure in your problem and which are not.

The alternative to spot-checking is feeling so overwhelmed by the vast number of algorithms and algorithm types you could try that you end up trying very few, or simply going with what has worked for you in the past. This results in wasted time and sub-par results.
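To make this concrete, here is a minimal sketch of a spot-check experiment in Python, assuming scikit-learn is installed. The breast cancer dataset, the five models (one per algorithm family, mostly with default settings), 10-fold stratified cross-validation and accuracy as the metric are all illustrative choices, not a prescribed suite.

```python
# Minimal spot-check harness (assumes scikit-learn; dataset and models are illustrative).
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# One representative per algorithm family, mostly with default settings
models = {
    "logistic": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "knn": make_pipeline(StandardScaler(), KNeighborsClassifier()),
    "cart": DecisionTreeClassifier(random_state=1),
    "svm": make_pipeline(StandardScaler(), SVC()),
    "naive_bayes": GaussianNB(),
}

# A fixed random_state gives every model exactly the same 10 folds
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)

results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    results[name] = scores
    print(f"{name:12s} mean={scores.mean():.3f} std={scores.std():.3f}")

# Rank by mean accuracy to see which families deserve more attention
ranking = sorted(results, key=lambda n: results[n].mean(), reverse=True)
print("ranking:", ranking)
```

Using the same folds for every model matters: it makes the per-fold scores directly comparable, which any later pairwise comparison relies on.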

Benefits of Spot-Checking Algorithms

There are 3 key benefits of spot-checking algorithms on your machine learning problems:

- Speed: You could spend a lot of time playing around with different algorithms, tuning parameters and thinking about which algorithms will do well on your problem. I have been there and ended up testing the same algorithms over and over because I was not systematic. A single spot-check experiment can save hours, days and even weeks of noodling around.

- Objective: There is a tendency to go with what has worked for you before. We pick our favorite algorithm (or algorithms) and apply them to every problem we see. The power of machine learning is that there are so many different ways to approach a given problem. A spot-check experiment allows you to automatically and objectively discover those algorithms that are the best at picking out the structure in the problem so you can focus your attention.

- Results: Spot-checking algorithms gets you usable results, fast. You may discover a good-enough solution in the first spot-check experiment. Alternatively, you may quickly learn that your dataset does not expose enough structure for any mainstream algorithm to do well. Spot-checking gives you the results you need to decide whether to move forward and optimize a given model or go back and revisit the presentation of the problem.

I think spot-checking mainstream algorithms on your problem is a no-brainer first step.

Tips for Spot-Checking Algorithms

There are some things you can do when you are spot-checking algorithms to ensure you are getting useful and actionable results.

Below are 5 tips to ensure you are getting the most from spot-checking machine learning algorithms on your problem.

- Algorithm Diversity: You want a good mix of algorithm types. I like to include instance-based methods (like LVQ and kNN), functions and kernels (like neural nets, regression and SVM), rule systems (like Decision Table and RIPPER) and decision trees (like CART, ID3 and C4.5).

- Best Foot Forward: Each algorithm needs to be given a chance to put its best foot forward. This does not mean performing a full sensitivity analysis on the parameters of each algorithm, but using experiments and heuristics to give each algorithm a fair chance. For example, if kNN is in the mix, give it 3 chances with k values of 1, 5 and 7.

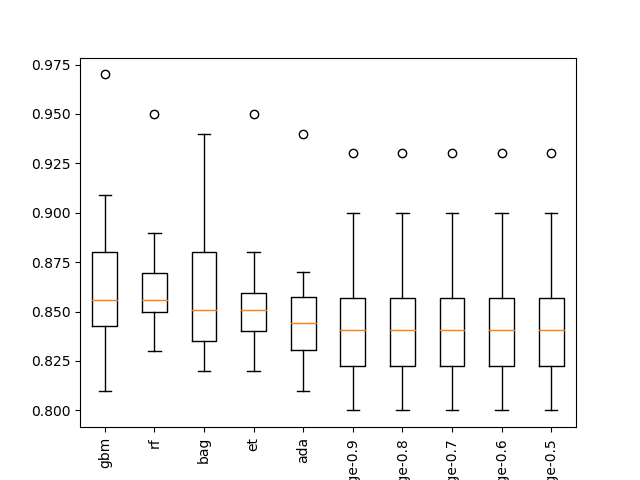

- Formal Experiment: Don’t play. There is a huge temptation to try lots of different things informally, to play around with algorithms on your problem. The idea of spot-checking is to get to the methods that do well on the problem, fast. Design the experiment, run it, then analyze the results. Be methodical. I like to rank algorithms by their statistically significant wins (in pairwise comparisons) and take the top 3-5 as a basis for tuning.

- Jumping-off Point: The best-performing algorithms are a starting point, not the solution to the problem. The algorithms shown to be effective may not be the best algorithms for the job; they are most likely useful pointers to the types of algorithms that perform well on the problem. For example, if kNN does well, consider follow-up experiments on all the instance-based methods and variations of kNN you can think of.

- Build Your Short-list: As you learn and try many different algorithms you can add new algorithms to the suite of algorithms that you use in a spot-check experiment. When I discover a particularly powerful configuration of an algorithm, I like to generalize it and include it in my suite, making my suite more robust for the next problem.

Start building up your suite of algorithms for spot check experiments.
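The Best Foot Forward and Formal Experiment tips can be combined in a small sketch, assuming scikit-learn and SciPy: kNN gets three runs with k = 1, 5 and 7, every model is scored on the same 10 folds, and models are ranked by their number of statistically significant pairwise wins. The wine dataset, alpha = 0.05 and the choice of a paired t-test (`scipy.stats.ttest_rel`) are my assumptions. Because cross-validation folds share training data, this test tends to be optimistic, so treat the p-values as a rough filter rather than a rigorous result.

```python
# Rank models by significant pairwise wins (assumes scikit-learn + SciPy; choices are illustrative).
from itertools import combinations

from scipy.stats import ttest_rel
from sklearn.datasets import load_wine
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_wine(return_X_y=True)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=7)

# "Best foot forward": kNN gets three chances, k = 1, 5 and 7
models = {
    f"knn_k{k}": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    for k in (1, 5, 7)
}
models["cart"] = DecisionTreeClassifier(random_state=7)
models["naive_bayes"] = GaussianNB()

# Per-fold accuracies on identical folds, so the scores can be paired
scores = {name: cross_val_score(m, X, y, cv=cv) for name, m in models.items()}

ALPHA = 0.05  # assumed significance level
wins = {name: 0 for name in models}
for a, b in combinations(models, 2):
    p = ttest_rel(scores[a], scores[b]).pvalue
    if p < ALPHA:  # a NaN p-value (identical scores) never counts as a win
        winner = a if scores[a].mean() > scores[b].mean() else b
        wins[winner] += 1

# Most wins first; mean accuracy breaks ties. Keep the top 3 for tuning.
shortlist = sorted(wins, key=lambda n: (wins[n], scores[n].mean()), reverse=True)[:3]
print("wins:", wins)
print("shortlist:", shortlist)
```

If you want a less optimistic comparison, the Nadeau and Bengio corrected resampled t-test is a common alternative; swapping it in only changes how `p` is computed.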

Top 10 Algorithms

There was a paper published in 2008 titled “Top 10 algorithms in data mining”. Who could go past a title like that? It was also turned into a book, “The Top Ten Algorithms in Data Mining”, and inspired the structure of another book, “Machine Learning in Action”.

This might be a good paper to jump-start your short-list of algorithms to spot-check on your next machine learning problem. The top 10 algorithms for data mining listed in the paper were:

- C4.5. This is a decision tree algorithm; its predecessor is ID3 and its successor is the famous C5.0.

- k-means. The go-to clustering algorithm.

- Support Vector Machines. This is really a huge field of study.

- Apriori. This is the go-to algorithm for association rule mining.

- EM (expectation maximization). Along with k-means, a go-to clustering algorithm.

- PageRank. I rarely touch graph-based problems.

- AdaBoost. This is really the family of boosting ensemble methods.

- kNN (k-nearest neighbors). A simple and effective instance-based method.

- Naive Bayes. A simple and robust use of Bayes' theorem on data.

- CART (classification and regression trees). Another tree-based method.
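If you work in Python, the classification and clustering entries from this list map onto scikit-learn roughly as below. This is a sketch under stated assumptions: scikit-learn has no C4.5, so an entropy-based `DecisionTreeClassifier` (which implements an optimized version of CART) is used only as a rough stand-in; EM is represented by `GaussianMixture`, which is fitted with the EM algorithm; Apriori and PageRank are left out because scikit-learn does not provide them. The iris dataset is only there to show that the suite runs.

```python
# Rough scikit-learn counterparts for entries in the top 10 list (illustrative sketch).
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.mixture import GaussianMixture
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Supervised entries that can go straight into a spot-check suite.
# "c45_like_tree" is NOT C4.5: it is CART with an entropy split criterion.
classifiers = {
    "c45_like_tree": DecisionTreeClassifier(criterion="entropy", random_state=1),
    "svm": SVC(),
    "adaboost": AdaBoostClassifier(random_state=1),
    "knn": KNeighborsClassifier(),
    "naive_bayes": GaussianNB(),
    "cart": DecisionTreeClassifier(random_state=1),
}

# Clustering entries: k-means, and EM via a Gaussian mixture model
clusterers = {
    "k_means": KMeans(n_clusters=3, n_init=10, random_state=1),
    "em_gmm": GaussianMixture(n_components=3, random_state=1),
}

# Apriori (association rules) and PageRank (graphs) need other libraries.

X, y = load_iris(return_X_y=True)
cv_means = {name: cross_val_score(clf, X, y, cv=5).mean() for name, clf in classifiers.items()}
cluster_labels = {name: c.fit_predict(X) for name, c in clusterers.items()}

for name, score in cv_means.items():
    print(f"{name:14s} {score:.3f}")
```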

There is also a great Quora question on this topic that you could mine for ideas of algorithms to try on your problem.

Resources

- Top 10 algorithms in data mining (2008)

- Quora: What are some Machine Learning algorithms that you should always have a strong understanding of, and why?

Which algorithms do you like to spot-check on problems? Do you have a favorite?

Hi Jason. When you say each algorithm needs to put its “best foot forward” by using a range of parameter values, how do you know what values to use? Do you have any resources regarding what parameter values to test for some of the most common algorithms? Thanks, Jeremy

Great question Jeremy.

Generally, you can gather this information from papers, posts and competition outcomes, as well as experience. It is hard earned knowledge and sadly not written down anywhere.

In the meantime, you may do best to grid search the parameters of a given algorithm on a suite of standard datasets to start building up an intuition for “classes of configuration”.
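A minimal sketch of that idea, assuming scikit-learn: grid search one algorithm (kNN here) with the same grid on several small standard datasets, then look at which configurations tend to win. The datasets and grid values are illustrative choices, not recommended defaults.

```python
# Grid search one algorithm across several standard datasets (illustrative sketch).
from sklearn.datasets import load_breast_cancer, load_iris, load_wine
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

datasets = {"iris": load_iris, "wine": load_wine, "breast_cancer": load_breast_cancer}

pipe = Pipeline([("scale", StandardScaler()), ("knn", KNeighborsClassifier())])
# Assumed grid: a handful of k values and both neighbor-weighting schemes
param_grid = {
    "knn__n_neighbors": [1, 3, 5, 7, 9, 15],
    "knn__weights": ["uniform", "distance"],
}

best_params = {}
for name, loader in datasets.items():
    X, y = loader(return_X_y=True)
    search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
    search.fit(X, y)
    best_params[name] = search.best_params_
    print(f"{name:14s} best={search.best_params_} score={search.best_score_:.3f}")
```

Repeating this for each algorithm in your suite, across more datasets, is one way to collect the kind of "which values usually work" knowledge described above.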

I see the benefit of spot checking, but how do you know that a model that underperforms in spot checking wouldn’t be better to use once fully tuned? For example, suppose model A has a 65% classification accuracy with no tuning, and model B is 70% accurate with no tuning. Is it possible for model A to overtake model B once they have been tuned? Or is it common for models to maintain the same performance relative to one another, even after tuning? For the sake of argument, I’m ignoring the effect of any overfitting, but perhaps that is part of the answer.

Really great point.

It’s hard.

You need to give each algorithm its best chance but pull back from full algorithm tuning.

This applies both to algorithm config and to transformed input data.

Generally, I would advise designing a suite of transformed inputs (views of the data) and a suite of algorithm/configs and run all combinations to see what floats to the top, and then double down.

A lot of this can be automated with good tools.
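The "views of the data" by "algorithm configs" design can be sketched with scikit-learn pipelines. The particular views (raw, standardized, min-max normalized, PCA with 10 components), the algorithms and the dataset are assumptions for illustration. `cross_val_score` clones each pipeline before fitting, so the same transformer instance can safely appear in several combinations.

```python
# Every combination of data view and algorithm (illustrative sketch, assumes scikit-learn).
from itertools import product

from sklearn.datasets import load_breast_cancer
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer, MinMaxScaler, StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Suite of transformed inputs ("views" of the data)
views = {
    "raw": FunctionTransformer(),  # identity transform
    "standardized": StandardScaler(),
    "normalized": MinMaxScaler(),
    "pca10": make_pipeline(StandardScaler(), PCA(n_components=10)),
}

# Suite of algorithm configurations
algorithms = {
    "logistic": LogisticRegression(max_iter=5000),
    "knn_k5": KNeighborsClassifier(n_neighbors=5),
    "cart": DecisionTreeClassifier(random_state=1),
}

cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)
results = {}
for (v_name, view), (a_name, algo) in product(views.items(), algorithms.items()):
    pipeline = make_pipeline(view, algo)
    results[(v_name, a_name)] = cross_val_score(pipeline, X, y, cv=cv).mean()

# See which combinations float to the top, then double down on those
top = sorted(results.items(), key=lambda kv: kv[1], reverse=True)[:3]
for (v_name, a_name), score in top:
    print(f"{v_name:13s} + {a_name:9s} {score:.3f}")
```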

Great article Jason. I really like your advice on not trying too hard to make an algorithm work.

Just a question around tools. Could you mention the different tools that you use to automate and run through different algorithms?

I recommend sklearn in Python or caret in R.

I have many tutorials on both on the blog.

Hi Jason,

After reading this post, I realize how important it is to spot-check algorithms first! I am very curious about the question raised by Nick on Dec 22, 2016 (though it was a long time ago), because I have exactly the same question. To follow up on it: when we spot-check, after selecting a bunch of different types of algorithms to test, should we compare them after tuning their configurations? What do you mean by “give each algorithm a fair chance” in the “Best Foot Forward” tip? Should we achieve this by automatically and randomly selecting several values for each parameter, or should we use a stricter grid-tuning strategy so that each algorithm is fully tuned, and then compare them in the spot-check step?

One approach is to spot check a suite of algorithms with “standard” config then tune. The risk is overfitting the dataset.

Alternately, you can tune each algorithm as part of spot check, using so-called nested cross-validation.

Thank you Jason for your reply! Your description of these two approaches makes me even more curious, and I want to ask:

1. In the first approach, you mentioned “spot check a suite of algorithms with “standard” config then tune”. I understand model configs to mean model hyperparameters; is that correct? If so, do you mean that we first evaluate a suite of algorithms with some “standard” hyperparameters, and once we find the best model, tune its hyperparameters systematically with a grid search?

2. The “nested cross-validation” sounds amazing and interesting! Do you mean that in the inner CV loop, we tune the hyperparameters with a grid search for each model we want to compare, while in the outer CV loop, we measure the performance of the model with the hyperparameter combination that wins in the inner loop? If I have misunderstood, have you explained it somewhere, or could you suggest related material I can learn from?

Many thanks!

1. Yes and yes.

2. Yes. I have a tutorial on this written and scheduled. Generally you can loop yourself and perform the search within the loop, or apply the cross val to a grid search object directly. The latter is less code.
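The "less code" version, applying cross-validation directly to a grid search object, looks roughly like this in scikit-learn: `GridSearchCV` does the tuning in the inner loop and `cross_val_score` provides the outer loop. The dataset, fold counts and parameter grids are assumptions chosen to keep the sketch quick.

```python
# Nested cross-validation: grid search inside, cross_val_score outside (illustrative sketch).
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)

inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)  # tuning loop
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=2)  # evaluation loop

# Each candidate is a grid search, so tuning is part of what gets evaluated
candidates = {
    "knn": GridSearchCV(
        make_pipeline(StandardScaler(), KNeighborsClassifier()),
        {"kneighborsclassifier__n_neighbors": [1, 5, 7]},
        cv=inner_cv,
    ),
    "svm": GridSearchCV(
        make_pipeline(StandardScaler(), SVC()),
        {"svc__C": [0.1, 1, 10]},
        cv=inner_cv,
    ),
}

nested_scores = {}
for name, search in candidates.items():
    # Each outer fold refits the whole grid search on that fold's training part,
    # so the outer test fold never influences hyperparameter selection.
    scores = cross_val_score(search, X, y, cv=outer_cv)
    nested_scores[name] = scores
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

The nested scores estimate how well "algorithm plus its tuning procedure" performs, which is the fairer basis for comparing tuned candidates in a spot check.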

Great resource, Jason. Thanks for publishing it! I think it would be very useful for my next machine learning endeavor 🙂

Does this process work with deep learning? Given that deep learning tasks tend to take a lot more time than their machine learning counterpart, is it feasible to spot-check different architectures?

Thanks in advance for your time and attention!

Absolutely.

Is it possible to do this in WEKA? I tried to download packages for all the algorithms you mentioned in the introduction, but can’t seem to find them inside the Experimenter.

Yes, you can spot check in Weka.

Thanks Jason, great post.

How would you split your dataset for this spot checking experiment?

Let’s assume you have 20K samples in your data.

Would you use all of it? Part of it?

How many train/test splits would you consider? Would you get results over k-fold CV?

Another problem is with the hyperparameters. If you have multiple parameter options for each model, would you get the results on nested cross-validation splits or on regular CV?

My idea is to split the 20K into 80% train and 20% test, and for each model perform k-fold cross-validation *only on the train data* (not nested CV; choose k to be 5 or 10).

Then use these results to perform pair-wise significance tests as you proposed.

Pick the top 3-4 models (regardless of their hyper-parameters).

That way, none of the assessments come from the test set, which is good.

Does that sound reasonable? Is there a safer/more reliable way to do it?

Thanks

Perhaps start with 10-fold cross validation.

Perhaps this framework will help:

https://machinelearningmastery.com/spot-check-machine-learning-algorithms-in-python/
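The split described in the question (hold back 20% as a test set, run 10-fold cross-validation on the remaining 80% only, then short-list the top models) can be sketched as follows, assuming scikit-learn. The breast cancer dataset stands in for the 20K-sample dataset, and the models and shortlist size are assumptions; the test set is used once, at the very end.

```python
# Holdout test set + 10-fold CV on the training portion only (illustrative sketch).
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Hold back 20% as a test set that the spot check never sees
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=1
)

models = {
    "logistic": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "knn": make_pipeline(StandardScaler(), KNeighborsClassifier()),
    "cart": DecisionTreeClassifier(random_state=1),
    "naive_bayes": GaussianNB(),
}

# 10-fold cross-validation on the training portion only
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)
cv_means = {
    name: cross_val_score(m, X_train, y_train, cv=cv).mean() for name, m in models.items()
}
shortlist = sorted(cv_means, key=cv_means.get, reverse=True)[:2]
print("shortlist:", shortlist)

# After short-listing (and any tuning), touch the test set exactly once
final_model = models[shortlist[0]].fit(X_train, y_train)
test_accuracy = final_model.score(X_test, y_test)
print(f"held-out accuracy of {shortlist[0]}: {test_accuracy:.3f}")
```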

Thank you Jason. Your posts are great. I really learn a lot from every posts.

Thanks.

Hi Jason,

You mentioned “go-to algorithm” in the Top 10 algorithms in this post, and I wonder what it means. Sorry, English is not my mother tongue, and I don’t want to misunderstand your meaning :-) Thanks!

Sorry, “go-to” means most common or most widely used / recommended.

Thank you for clarifying this Jason!

You’re welcome.