After spot checking algorithms on your problem and tuning the better few, you ultimately need to select one or two best models with which to proceed.

This problem is called model selection, and it can be vexing because you must make a choice with incomplete information. This is where the test harness you create and the test options you choose become critical.

In this post you will discover tips for model selection inspired by competitive machine learning, and ideas for taking those tips to the next level by studying your test harness like any other complex system.

Model Selection

Photo by tami.vroma, some rights reserved

Model Selection in Competitions

In a machine learning competition, you are provided with a sample of data from which you need to construct your models.

You submit predictions for an unseen test dataset. A small proportion of those predictions is evaluated, and the accuracy is reported on a public leaderboard. At the end of the competition, your rank is determined by your predictions on the complete test dataset.

In some competitions you must select the one or two sets of predictions (and the models that created them) that you think represent your best efforts. These compete with the submissions of all other participants for your final rank. To make the selection, you have only two sources of information about each model's accuracy: the estimate from your own test harness and the accuracy reported on the public leaderboard.

This is a problem of model selection and it is challenging because you have incomplete information. Worse still, the accuracy reported by the public leaderboard and your own private test harness very likely disagree. Which model should you choose and how can you make a good decision under this uncertainty?

Avoid Overfitting the Training Dataset

Log0 explores this problem in his post “How to Select Your Final Models in a Kaggle Competition”.

He comments that he has personally suffered from overfitting the training dataset in prior competitions and now works hard to avoid this pitfall. The symptom in a competition is that you rank well on the public leaderboard, but when the final scores are released your rank on the private leaderboard falls, often a long way.

He provides a number of tips that he suggests can aid in overcoming this problem.

1. Always use Cross-Validation

He comments that a single validation dataset will give an unreliable estimate of the accuracy of a model on unseen data. The public leaderboard is exactly that: a single, small validation dataset.

He suggests that you always use cross-validation (CV) and defer to your own CV estimate of accuracy when selecting a model, even though that estimate can be optimistic.
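A minimal sketch of k-fold cross-validation, using only the Python standard library. The dataset (one feature, two classes centred at -1 and +1) and the model (a threshold halfway between the two class means) are hypothetical stand-ins chosen to keep the example short; in practice you would plug in your own data and learning algorithm. The helper names `make_data`, `fit_threshold`, `accuracy`, and `cross_val_scores` are illustrative only.

```python
import random
import statistics


def make_data(n=200, seed=1):
    """Hypothetical toy data: one feature, class 0 centred at -1, class 1 at +1."""
    rng = random.Random(seed)
    y = [i % 2 for i in range(n)]
    X = [rng.gauss(1.0 if label else -1.0, 1.0) for label in y]
    return X, y


def fit_threshold(X, y):
    """Toy model: a threshold halfway between the two class means."""
    m0 = statistics.mean(x for x, t in zip(X, y) if t == 0)
    m1 = statistics.mean(x for x, t in zip(X, y) if t == 1)
    return (m0 + m1) / 2


def accuracy(threshold, X, y):
    """Fraction of points that fall on the side of the threshold matching their label."""
    return sum((x > threshold) == (t == 1) for x, t in zip(X, y)) / len(X)


def cross_val_scores(X, y, k, seed=0):
    """k-fold CV: shuffle once, hold out each fold in turn, train on the other k-1 folds."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for i in range(k):
        fold = idx[i::k]
        held_out = set(fold)
        train = [j for j in idx if j not in held_out]
        model = fit_threshold([X[j] for j in train], [y[j] for j in train])
        scores.append(accuracy(model, [X[j] for j in fold], [y[j] for j in fold]))
    return scores


X, y = make_data()
scores = cross_val_scores(X, y, k=10)
print(f"10-fold CV accuracy: {statistics.mean(scores):.3f} "
      f"(stdev across folds {statistics.stdev(scores):.3f})")
```

Every point is used for evaluation exactly once, so the mean over folds uses all of your data rather than one small slice of it.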

2. Do Not Trust the Public Leaderboard

The public leaderboard is very distracting. It shows all participants how good you are compared to everyone else, except that it is full of lies. It is not a meaningful estimate of the accuracy of your model; in fact, it is often a terrible one.

Log0 comments that the public leaderboard score is unstable, especially when compared to your own offline CV test harness. He adds that if your CV estimate looks unstable you can increase the number of folds, and if it is slow to run you can operate on a sample of the data.

These are good counters to common objections for not using cross validation.
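A rough illustration of why a single small validation set is unstable, on hypothetical toy data with a deliberately simple threshold classifier (both are assumptions, not part of Log0's post). It scores 30 different random 10% hold-out splits and 30 differently shuffled 10-fold CV runs on the same data, then compares how much each set of estimates varies. It is not a model of how any particular leaderboard is computed, and the helper names are illustrative.

```python
import random
import statistics


def make_data(n=200, seed=1):
    """Hypothetical toy data: one feature, class 0 centred at -1, class 1 at +1."""
    rng = random.Random(seed)
    y = [i % 2 for i in range(n)]
    X = [rng.gauss(1.0 if label else -1.0, 1.0) for label in y]
    return X, y


def fit_threshold(X, y):
    """Toy model: a threshold halfway between the two class means."""
    m0 = statistics.mean(x for x, t in zip(X, y) if t == 0)
    m1 = statistics.mean(x for x, t in zip(X, y) if t == 1)
    return (m0 + m1) / 2


def accuracy(threshold, X, y):
    """Fraction of points that fall on the side of the threshold matching their label."""
    return sum((x > threshold) == (t == 1) for x, t in zip(X, y)) / len(X)


def holdout_score(X, y, test_fraction, seed):
    """One train/validation split: a single accuracy from one small held-out set."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(X) * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    model = fit_threshold([X[i] for i in train], [y[i] for i in train])
    return accuracy(model, [X[i] for i in test], [y[i] for i in test])


def cv_score(X, y, k, seed):
    """Mean accuracy over k folds after one shuffle with the given seed."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for i in range(k):
        fold = idx[i::k]
        held_out = set(fold)
        train = [j for j in idx if j not in held_out]
        model = fit_threshold([X[j] for j in train], [y[j] for j in train])
        scores.append(accuracy(model, [X[j] for j in fold], [y[j] for j in fold]))
    return statistics.mean(scores)


X, y = make_data()
seeds = range(30)
holdout = [holdout_score(X, y, 0.1, s) for s in seeds]
cv = [cv_score(X, y, 10, s) for s in seeds]
print(f"single 10% hold-out: stdev {statistics.stdev(holdout):.3f}")
print(f"10-fold CV:          stdev {statistics.stdev(cv):.3f}")
```

On data like this, the single hold-out scores typically vary far more from split to split than the CV estimates do, which is the instability Log0 warns about.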

3. Pick Diverse Models

Diversify. Log0 suggests that if you are able to select two or more models, you should use the opportunity to select a diverse subset of models.

He suggests that you group models by type or methodology and that you select the best from each group. From this short list you can select the final models.

He suggests that if you select similar models and their shared strategy is poor, the models will fail together, leaving you with little. A counter-argument is that diversity is a strategy to use when you are unable to make a clear decision, and that doubling down can lead to the biggest payoff. You need to consider carefully how much trust you have in your models.

Log0 also cautions you to select robust models that are themselves less likely to overfit, unlike large (many-parameter) models.
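A minimal sketch of the grouping idea from this tip, assuming you already have a CV score for every candidate model. The model names, groups, and scores below are made up purely for illustration, and `pick_diverse` is a hypothetical helper: it keeps the best-scoring model in each group, then returns the top `n_final` group winners.

```python
def pick_diverse(results, n_final=2):
    """results: list of (model_name, group, cv_score) tuples.

    Keep the best model within each group, then return the n_final
    best of those group winners, highest CV score first.
    """
    best = {}
    for name, group, score in results:
        if group not in best or score > best[group][2]:
            best[group] = (name, group, score)
    winners = sorted(best.values(), key=lambda r: r[2], reverse=True)
    return winners[:n_final]


# Hypothetical CV scores, for illustration only.
results = [
    ("gbm_depth6", "boosted trees", 0.912),
    ("gbm_depth8", "boosted trees", 0.915),
    ("rf_500", "random forest", 0.905),
    ("logreg_l2", "linear", 0.880),
    ("knn_15", "instance-based", 0.870),
]

final = pick_diverse(results)
for name, group, score in final:
    print(f"{group:15s} {name:12s} CV={score:.3f}")
```

Ranking on CV score alone would have picked both boosted-tree variants; grouping first forces the second pick to come from a different family of models.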

Extension for a Robust Test Harness

It’s a great post with some great tips, but I think you can and should go further.

You can study the stability of your test harness as you would any system.

Study the Stability of Your Cross-Validation Harness

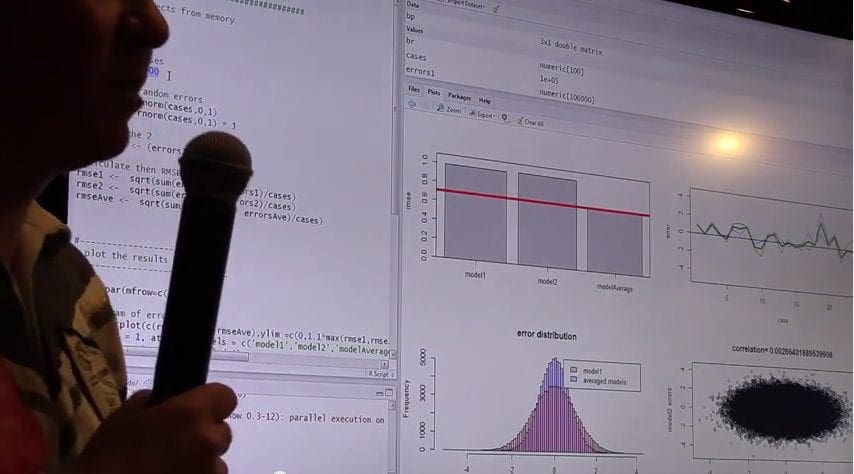

You can take a standard, robust algorithm and evaluate how stable its accuracy estimate is as you change the number of folds in your cross-validation configuration. Perform 30 repeats of CV for each number of folds, and do this for fold counts from 1 to 10. Plot the results and consider both the spread for a given number of folds and the change in accuracy as the number of folds is increased from 1 (your baseline).

You could use the upper and lower bounds for a given number of folds as a rough uncertainty on your accuracy. You could also use the difference in accuracy between CV fold=1 and your chosen number of folds to correct the optimistic bias.
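A minimal sketch of this study, assuming hypothetical toy data and a deliberately simple model (a threshold halfway between the two class means) in place of your standard robust algorithm. k-fold cross-validation needs at least two folds, so the sketch starts at k=2. To stay within the standard library it prints the minimum, mean, and maximum CV estimate over 30 repeats for each k instead of plotting them; the helper names (`make_data`, `cv_mean`, `study_folds`) are illustrative only.

```python
import random
import statistics


def make_data(n=200, seed=1):
    """Hypothetical toy data: one feature, class 0 centred at -1, class 1 at +1."""
    rng = random.Random(seed)
    y = [i % 2 for i in range(n)]
    X = [rng.gauss(1.0 if label else -1.0, 1.0) for label in y]
    return X, y


def fit_threshold(X, y):
    """Toy model: a threshold halfway between the two class means."""
    m0 = statistics.mean(x for x, t in zip(X, y) if t == 0)
    m1 = statistics.mean(x for x, t in zip(X, y) if t == 1)
    return (m0 + m1) / 2


def accuracy(threshold, X, y):
    """Fraction of points that fall on the side of the threshold matching their label."""
    return sum((x > threshold) == (t == 1) for x, t in zip(X, y)) / len(X)


def cv_mean(X, y, k, seed):
    """Mean accuracy of one k-fold CV run after shuffling with `seed`."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for i in range(k):
        fold = idx[i::k]
        held_out = set(fold)
        train = [j for j in idx if j not in held_out]
        model = fit_threshold([X[j] for j in train], [y[j] for j in train])
        scores.append(accuracy(model, [X[j] for j in fold], [y[j] for j in fold]))
    return statistics.mean(scores)


def study_folds(X, y, fold_counts, repeats=30):
    """For each k, repeat CV `repeats` times and record (min, mean, max) of the estimates."""
    summary = {}
    for k in fold_counts:
        estimates = [cv_mean(X, y, k, seed) for seed in range(repeats)]
        summary[k] = (min(estimates), statistics.mean(estimates), max(estimates))
    return summary


X, y = make_data()
summary = study_folds(X, y, range(2, 11))
for k, (low, mean, high) in summary.items():
    print(f"k={k:2d}  mean={mean:.3f}  range=[{low:.3f}, {high:.3f}]")
```

The `[low, high]` range for each k is the rough uncertainty described above; swapping the printout for a box plot per k gives the picture the text suggests.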

Study the Stability of Your Data Sample

Similar tricks can be used when sampling. Sampling theory is a large and complex topic, but we can follow a process similar to the one above: take n samples of a given size, estimate accuracy on each, and then repeat with samples of different sizes.

Plotting the results as box-plots or similar can give an idea of the stability of your sampling size (and sampling method, if you are stratifying or rebalancing classes – which you probably should be trying).
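A minimal sketch of a sample-size study, again on hypothetical toy data with a simple threshold classifier standing in for your real algorithm. It draws 30 stratified samples (equal counts per class, one of the options the text mentions) at each of a few sample sizes, estimates accuracy on each sample with 5-fold CV, and reports the mean and standard deviation of those estimates. The sizes, the helper names, and the balanced binary labels are all assumptions made for illustration.

```python
import random
import statistics


def make_data(n=1000, seed=1):
    """Hypothetical toy data: one feature, class 0 centred at -1, class 1 at +1."""
    rng = random.Random(seed)
    y = [i % 2 for i in range(n)]
    X = [rng.gauss(1.0 if label else -1.0, 1.0) for label in y]
    return X, y


def fit_threshold(X, y):
    """Toy model: a threshold halfway between the two class means."""
    m0 = statistics.mean(x for x, t in zip(X, y) if t == 0)
    m1 = statistics.mean(x for x, t in zip(X, y) if t == 1)
    return (m0 + m1) / 2


def accuracy(threshold, X, y):
    """Fraction of points that fall on the side of the threshold matching their label."""
    return sum((x > threshold) == (t == 1) for x, t in zip(X, y)) / len(X)


def cv_mean(X, y, k, seed):
    """Mean accuracy of one k-fold CV run after shuffling with `seed`."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for i in range(k):
        fold = idx[i::k]
        held_out = set(fold)
        train = [j for j in idx if j not in held_out]
        model = fit_threshold([X[j] for j in train], [y[j] for j in train])
        scores.append(accuracy(model, [X[j] for j in fold], [y[j] for j in fold]))
    return statistics.mean(scores)


def stratified_sample(y, size, rng):
    """Draw `size` indices with (near) equal counts from each class, for labels 0/1."""
    by_class = {0: [], 1: []}
    for i, label in enumerate(y):
        by_class[label].append(i)
    half = size // 2
    return rng.sample(by_class[0], half) + rng.sample(by_class[1], size - half)


def study_sample_sizes(X, y, sizes, repeats=30, k=5, seed=0):
    """For each sample size, draw `repeats` stratified samples and CV each one."""
    rng = random.Random(seed)
    summary = {}
    for size in sizes:
        estimates = []
        for r in range(repeats):
            idx = stratified_sample(y, size, rng)
            estimates.append(cv_mean([X[i] for i in idx], [y[i] for i in idx], k, seed=r))
        summary[size] = (statistics.mean(estimates), statistics.stdev(estimates))
    return summary


X, y = make_data()
summary = study_sample_sizes(X, y, sizes=(20, 50, 200))
for size, (mean, spread) in summary.items():
    print(f"n={size:4d}  mean={mean:.3f}  stdev={spread:.3f}")
```

A shrinking standard deviation as the sample grows suggests the size at which your estimates become stable enough to trust; replacing `stratified_sample` with plain random sampling lets you compare sampling methods the same way.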

Be Careful

Overfitting is lurking everywhere.

A study of your CV parameters or sampling method uses all of your available data. You are learning about the stability of a given standard algorithm on the dataset, but you are also choosing a configuration using more data than is available to any single fold or sample used to evaluate a model. This can lead to overfitting.

Nevertheless, this can be valuable, and you need to balance the very real problem of overfitting against improving your understanding of your problem.

Summary

In this post you discovered three tips that you can use when selecting final models on a machine learning problem. These tips are useful in competitive machine learning and can also be applied in data analysis and production systems, where the predictions from a few selected models are combined in an ensemble.

You also learned how these tips can be extended and how you can study the configuration of your test harness for a given machine learning problem just like you would the parameters of any machine learning algorithm.

Understanding the stability of the problem, as described by your sample data, can give you great insight into the expected accuracy of your model on unseen data.
