We all know the satisfaction of running an analysis and seeing the results come back the way we want them to: 80% accuracy; 85%; 90%?

The temptation is strong just to turn to the Results section of the report we’re writing, and put the numbers in. But wait: as always, it’s not that straightforward.

Succumbing to this particular temptation could undermine the impact of an otherwise completely valid analysis.

With most machine learning algorithms it’s really important to think about how those results were generated: not just the algorithm, but the dataset and how it’s used can have significant effects on the results obtained. Complex algorithms applied to too-small datasets can overfit, producing misleadingly good results.

Light at the end of the tunnel

Photo by darkday, some rights reserved

What is Overfitting?

Overfitting occurs when a machine learning algorithm, such as a classifier, identifies not only the signal in a dataset, but the noise as well. All datasets are noisy. The values recorded in an experiment can be affected by a host of issues:

- Mechanical issues, such as heat or humidity changing the characteristics of the recording device;

- Physical issues: some mice are larger than others;

- Or simply inherent noise in the system under investigation. The production of proteins from DNA, for example, is inherently noisy, occurring not in a steady stream as is usually visualized, but in a series of steps, each of which is stochastic, dependent upon the presence of appropriate molecules at appropriate times.

- Data gathered from human subjects is likewise affected by factors such as time of day, the subjects’ state of health, and even their mood.

The situation worsens as the number of parameters in the dataset grows. A dataset of, for example, 100 records, each with 500 observations, is very prone to overfitting, while 1000 records with 5 observations each will be far less of a problem.
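As a rough illustration of the two dataset shapes mentioned above, the sketch below fits a simple perceptron to pure noise: random features with random labels, so there is no signal to find. The perceptron, the function name `perceptron_training_accuracy`, the seed and the epoch limit are all assumptions made for this example, not something taken from the original analysis. Any accuracy above chance on the training data is therefore overfitting.

```python
import random


def perceptron_training_accuracy(n_records, n_features, seed=0, max_epochs=200):
    """Fit a perceptron to pure noise; return its accuracy on that same data."""
    rng = random.Random(seed)
    X = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(n_records)]
    y = [rng.choice((-1, 1)) for _ in range(n_records)]  # random labels: no signal
    w = [0.0] * n_features
    b = 0.0

    for _ in range(max_epochs):
        mistakes = 0
        for row, label in zip(X, y):
            score = sum(wi * xi for wi, xi in zip(w, row)) + b
            if label * score <= 0:  # misclassified: nudge the weights
                for j, xj in enumerate(row):
                    w[j] += label * xj
                b += label
                mistakes += 1
        if mistakes == 0:  # every training record is now classified correctly
            break

    correct = sum(
        1 for row, label in zip(X, y)
        if label * (sum(wi * xi for wi, xi in zip(w, row)) + b) > 0
    )
    return correct / n_records


wide_accuracy = perceptron_training_accuracy(n_records=100, n_features=500)
tall_accuracy = perceptron_training_accuracy(n_records=1000, n_features=5)
print(f"100 records x 500 observations: training accuracy {wide_accuracy:.2f}")
print(f"1000 records x 5 observations:  training accuracy {tall_accuracy:.2f}")
```

With far more observations per record than records, the perceptron should be able to memorise the random labels, while the tall dataset should leave it close to chance.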

When your model has too many parameters relative to the number of data points, you’re prone to overestimate the utility of your model.

— Jessica Su in “What is an intuitive explanation of overfitting?”

Why is Overfitting a Problem?

The aim of most machine learning algorithms is to find a mapping from the signal in the data, the important values, to an output. Noise interferes with the establishment of this mapping.

The practical outcome of overfitting is that a classifier which appears to perform well on its training data may perform poorly, possibly very badly, on new data from the same problem. The signal in the data tends to be pretty much the same from dataset to dataset, but the noise can be very different.

If a classifier fits the noise as well as the signal, it will not be able to separate signal from noise on new data. And the aim of developing most classifiers is to have them generalise to new data in a predictable way.
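The gap between training performance and performance on new data can be sketched with a deliberately extreme classifier. The example below is an assumption-laden toy: the data is synthetic (the signal is "x > 0.5", and 20% of labels are flipped as noise), and a one-nearest-neighbour rule stands in for a classifier that memorises its training set. A plain threshold rule, which captures only the signal, is included for comparison.

```python
import random

rng = random.Random(42)


def make_noisy_data(n, flip_probability=0.2):
    """The signal is 'x > 0.5'; noise flips a fraction of the labels."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = x > 0.5
        if rng.random() < flip_probability:
            label = not label
        data.append((x, label))
    return data


train = make_noisy_data(500)
new_data = make_noisy_data(1000)  # same process, fresh noise


def memoriser(x):
    """1-nearest neighbour: copy the label of the closest training point."""
    return min(train, key=lambda item: abs(item[0] - x))[1]


def threshold_rule(x):
    """Captures only the signal (the threshold is assumed known here)."""
    return x > 0.5


def accuracy(predict, data):
    return sum(predict(x) == label for x, label in data) / len(data)


nn_train = accuracy(memoriser, train)
nn_new = accuracy(memoriser, new_data)
threshold_new = accuracy(threshold_rule, new_data)
print(f"1-NN on its training data: {nn_train:.2f}")
print(f"1-NN on new data:          {nn_new:.2f}")
print(f"Threshold on new data:     {threshold_new:.2f}")
```

The memoriser reproduces every flipped label in its training set, so it looks perfect there, but the flipped labels in the new data fall in different places.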

A model that has been overfit will generally have poor predictive performance, as it can exaggerate minor fluctuations in the data.

— Overfitting, Wikipedia.

Overcoming Overfitting

There are two main approaches to overcoming overfitting: three-set validation and cross-validation.

Three-Set Validation

How can an ethical analyst overcome the problem of overfitting? The simplest, and hardest to achieve, solution is simply to have lots and lots of data. With enough data, an analyst can develop an algorithm on one set of data (the training set), and then test its performance on a completely new, unseen, dataset generated by the same means (the test set).

The problem with using only two datasets is that as soon as you use the test set, it becomes contaminated. The process of training on set 1, testing on set 2, and then using the results of these tests to modify the algorithm means that set 2 is really part of the training data.

To be completely objective, a third dataset (the validation set) is required. The validation set should be kept in glorious isolation until all of the training is complete. The results of the trained classifier on the validation set are the ones which should be reported.

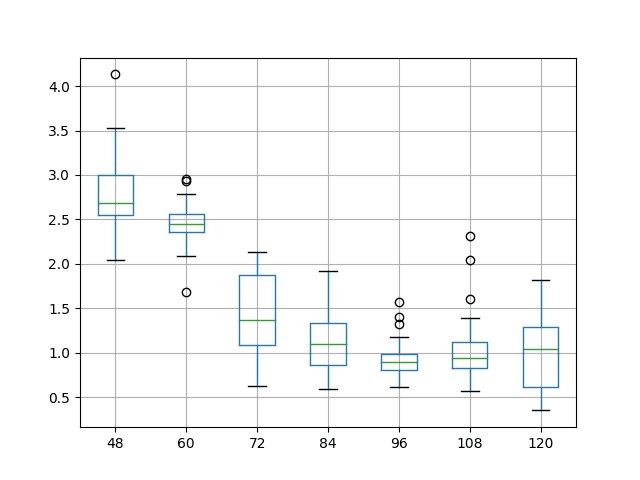

One popular approach is to train the classifier on the training set and, every few iterations, test its performance on the test set. Initially, as the signal in the dataset is fitted, the error on both the training and test sets will go down.

Eventually, however, the classifier will start to fit the noise, and although the error rate on the training set still decreases, the error rate on the test set will start to increase. At this point training should be stopped, and the trained classifier applied to the validation set to estimate the real performance.
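The stopping rule described above might look something like the following sketch. Everything specific here is an assumption for illustration: the synthetic data (one informative feature among twenty), the logistic-regression model trained by gradient descent, and the `CHECK_EVERY`, `PATIENCE` and `MAX_ITERATIONS` settings. The set names follow this article's usage, where the test set guides training and the validation set is held back for the final figure.

```python
import math
import random

rng = random.Random(1)
N_FEATURES = 20  # only the first feature carries signal; the rest are noise


def make_data(n):
    rows = []
    for _ in range(n):
        x = [rng.gauss(0, 1) for _ in range(N_FEATURES)]
        label = 1 if x[0] + rng.gauss(0, 1) > 0 else 0
        rows.append((x, label))
    return rows


def predict_probability(weights, x):
    z = sum(w * xi for w, xi in zip(weights, x))
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
    return 1.0 / (1.0 + math.exp(-z))


def error_rate(weights, data):
    wrong = 0
    for x, label in data:
        predicted = 1 if predict_probability(weights, x) >= 0.5 else 0
        wrong += predicted != label
    return wrong / len(data)


def gradient_step(weights, data, learning_rate=0.5):
    """One batch gradient-descent step on the logistic loss."""
    gradient = [0.0] * len(weights)
    for x, label in data:
        residual = predict_probability(weights, x) - label
        for j, xj in enumerate(x):
            gradient[j] += residual * xj
    return [w - learning_rate * g / len(data) for w, g in zip(weights, gradient)]


training_set, test_set, validation_set = make_data(30), make_data(200), make_data(200)
CHECK_EVERY, PATIENCE, MAX_ITERATIONS = 5, 10, 2000

weights = [0.0] * N_FEATURES
initial_error = error_rate(weights, test_set)
best_weights, best_error, best_iteration = list(weights), initial_error, 0
checks_without_improvement = 0

for iteration in range(1, MAX_ITERATIONS + 1):
    weights = gradient_step(weights, training_set)
    if iteration % CHECK_EVERY == 0:  # "every few iterations"
        test_error = error_rate(weights, test_set)
        if test_error < best_error:
            best_weights, best_error, best_iteration = list(weights), test_error, iteration
            checks_without_improvement = 0
        else:
            checks_without_improvement += 1
        if checks_without_improvement >= PATIENCE:
            break  # test error has stopped improving: stop training

# Only now is the untouched validation set used, once, for the reported figure.
validation_error = error_rate(best_weights, validation_set)
print(f"stopped at iteration {iteration}; best test error {best_error:.3f} "
      f"at iteration {best_iteration}; validation error {validation_error:.3f}")
```

Keeping a copy of the best weights, rather than the last ones, is one common way to "stop" at the point where the test error was lowest; other stopping criteria are possible.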

The process thus becomes:

1. Develop an algorithm;

2. Train on set 1 (training set);

3. Test on set 2 (testing set);

4. Modify the algorithm, or stop training, using the results of step 3;

5. Iterate steps 1–4 until satisfied with the results of the algorithm;

6. Run the algorithm on set 3 (validation set);

7. Report the results of step 6.
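The process above can be sketched end to end as follows. This is a minimal illustration under stated assumptions: the data is synthetic, the classifier is a hand-written k-nearest-neighbour rule, "modifying the algorithm" is reduced to picking k from a few candidate values, and the 300/150/150 split is arbitrary. The set names again follow this article's usage.

```python
import random

rng = random.Random(7)


def make_data(n):
    """Two noisy, overlapping classes in two dimensions (synthetic)."""
    rows = []
    for _ in range(n):
        label = rng.choice((0, 1))
        point = (rng.gauss(label * 1.5, 1.0), rng.gauss(label * 1.5, 1.0))
        rows.append((point, label))
    return rows


def squared_distance(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def knn_predict(train, point, k):
    """Majority vote among the k closest training points (k is odd, so no ties)."""
    nearest = sorted(train, key=lambda item: squared_distance(item[0], point))[:k]
    votes_for_one = sum(label for _, label in nearest)
    return 1 if 2 * votes_for_one > k else 0


def accuracy(train, data, k):
    return sum(knn_predict(train, p, k) == label for p, label in data) / len(data)


data = make_data(600)
rng.shuffle(data)
training_set, test_set, validation_set = data[:300], data[300:450], data[450:]

# Develop, train on set 1, test on set 2, modify, and repeat:
# here the only thing being modified is k.
test_scores = {k: accuracy(training_set, test_set, k) for k in (1, 5, 15, 45)}
best_k = max(test_scores, key=test_scores.get)

# Run on set 3 exactly once, and report that figure.
reported_accuracy = accuracy(training_set, validation_set, best_k)
print(f"test-set accuracy by k: {test_scores}")
print(f"chosen k = {best_k}; reported (validation) accuracy = {reported_accuracy:.3f}")
```

The test-set score of the chosen k is optimistic by construction, because k was selected to maximise it; the validation figure is not.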

Sadly, few projects generate enough data to enable the analyst to indulge in the luxury of the three-data-set approach. An alternative must be found where each result is generated by a classifier which has not used that data item in its training.

Cross-Validation

With cross-validation the entire available dataset is divided into more-or-less equal-sized subsets. Say we have a dataset of 100 observations. We could divide it into three subsets of 33, 33, and 34 observations each. Let’s call these three subsets set1, set2 and set3.

To develop our classifier, we use two-thirds of the data, say set1 and set2, to train the algorithm. We then run the classifier on set3, so far unseen, and record these results.

The process is then repeated, using another two-thirds, say set1 and set3, and the results on set2 are recorded. Similarly, a classifier trained on set2 and set3 produces the results for set1.

The three sets of results are then combined, and become the results for the entire dataset.
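The 100-observation example can be written out directly. In this sketch the data is synthetic and the classifier is a simple nearest-centroid rule; both are assumptions chosen to keep the example short, as are the helper names. The point is the bookkeeping: each observation receives exactly one prediction, made by a classifier that never saw it.

```python
import random

rng = random.Random(3)

# 100 synthetic observations: one feature, two classes (illustrative data only).
observations = []
for _ in range(100):
    label = rng.choice((0, 1))
    observations.append((rng.gauss(3.0 * label, 1.0), label))

indices = list(range(100))
rng.shuffle(indices)
subsets = [indices[0:33], indices[33:66], indices[66:100]]  # set1, set2, set3


def train_nearest_centroid(rows):
    """Return the mean feature value of each class."""
    centroids = {}
    for cls in (0, 1):
        values = [x for x, label in rows if label == cls]
        centroids[cls] = sum(values) / len(values)
    return centroids


def predict(centroids, x):
    return min(centroids, key=lambda cls: abs(x - centroids[cls]))


predictions = {}
for held_out in subsets:
    held_out_ids = set(held_out)
    # Train on the other two subsets only.
    training_rows = [observations[i] for i in indices if i not in held_out_ids]
    centroids = train_nearest_centroid(training_rows)
    # Record results for the subset this classifier has not seen.
    for i in held_out:
        predictions[i] = predict(centroids, observations[i][0])

# Combine the three sets of results into one figure for the whole dataset.
accuracy = sum(predictions[i] == observations[i][1] for i in range(100)) / 100
print(f"three-fold cross-validated accuracy: {accuracy:.2f}")
```

Keeping the per-observation predictions, rather than only a score per fold, also makes it possible to plot or inspect the combined results afterwards.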

The process described above is called three-fold cross-validation, because three subsets were used. Any number of subsets may be used; ten-fold cross-validation is widely used. The ultimate cross-validation scheme is, of course, to train each classifier on all of the data except one case, and then run it on the left-out case. This practice is known as leave-one-out cross-validation.
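The different schemes vary only in how many subsets the data is cut into. The helper below, `k_fold_indices`, is a hypothetical name for a small sketch of that splitting step; in practice the indices would usually be shuffled first. Leave-one-out is simply the case where the number of folds equals the number of observations.

```python
def k_fold_indices(n_observations, n_folds):
    """Split range(n_observations) into n_folds near-equal held-out subsets."""
    base, extra = divmod(n_observations, n_folds)
    folds, start = [], 0
    for fold in range(n_folds):
        size = base + (1 if fold < extra else 0)  # spread any remainder
        folds.append(list(range(start, start + size)))
        start += size
    return folds


three_fold = k_fold_indices(100, 3)
ten_fold = k_fold_indices(100, 10)
leave_one_out = k_fold_indices(100, 100)  # one observation held out per fold

for name, folds in [("three-fold", three_fold),
                    ("ten-fold", ten_fold),
                    ("leave-one-out", leave_one_out)]:
    sizes = sorted({len(f) for f in folds})
    print(f"{name}: {len(folds)} folds, held-out sizes {sizes}")
```

More folds mean each classifier trains on more of the data, at the cost of training more classifiers.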

Cross-validation is important in guarding against testing hypotheses suggested by the data (called “Type III errors”), especially where further samples are hazardous, costly or impossible to collect.

— Overfitting, Wikipedia.

Advantages of Cross-Validation for Avoiding Overfitting

The major advantage of any form of cross-validation is that each result is generated using a classifier which was not trained on that data item.

Also, because the training set for each classifier is made up of the majority of the data, the classifiers, although probably slightly different, should be more-or-less the same. This is particularly true in the case of leave-one-out, where each classifier is trained on an almost identical dataset.

Disadvantages of Cross-Validation

There are two main disadvantages to the use of cross-validation:

- The classifier used to generate the results is not a single classifier, but a suite of closely-related classifiers. As already mentioned, these classifiers should be pretty similar, and this drawback is generally not considered to be a major one.

- The test results can no longer be used to modify the classification algorithm. Every data item is used for both training and testing across the folds, so if the algorithm were tuned on the cross-validation results, those results could no longer be considered “unseen”. Whatever the results are, they are the ones which should be reported. In theory, this is a significant drawback, but in practice it is rarely a problem.

In summary, if data is plentiful the three-set validation approach should be used. When datasets are limited, however, cross-validation makes the best use of the data in a principled manner.

Statistical Approaches

Because overfitting is a widespread problem, there has been a lot of research into avoiding the issue using statistical approaches. Some standard textbooks with good coverage of these approaches include:

- Duda, R. O., Hart, P. E., & Stork, D. G. (2012). Pattern Classification: John Wiley & Sons.

- Bishop, C. M. (2006). Pattern Recognition and Machine Learning (Vol. 1): Springer New York.

Tutorials on Avoiding Overfitting

For an example, with code, using the R statistical language, see “Evaluating model performance – A practical example of the effects of overfitting and data size on prediction”.

For a detailed tutorial using SPSS see the slides “Logistic Regression – Complete Problems” (PPT).

For coverage in the SAS User’s Guide, see “The GLMSELECT Procedure”.

Further Reading

An interesting overview of the practical effects of overfitting can be found in the MIT Technology Review article “The Emerging Pitfalls Of Nowcasting With Big Data”.

An excellent introductory lecture from Caltech, titled “Overfitting”, is available on YouTube.

A much more detailed article from Vrije Universiteit Amsterdam is “What You See May Not Be What You Get: A Brief, Nontechnical Introduction to Overfitting in Regression-Type Models” (PDF).

Hi,

It is the validation set that is used for validation and to avoid overfitting, not the test set.

Perhaps, depends on the specifics of the test harness.

Do you mean if e.g. CV is used?

I think there is a mix up of the words validation and test in many books and web sites. For me the test set is something that is being used at the last stage, before the final model.

That would be the validation set. To validate the final model. The test set is used for model selection.

Hello sir,

You are doing a good job of spreading the gospel of Machine Learning. I am new to ML. I see most of your graphs only contain the training and test curves. I have seen some graphs containing training, test and validation curves. My question is: if we use cross-validation, can we graph it alongside the training and test curves?

Yes, you will need to keep all of the predictions from each CV fold.