Numerical linear algebra is concerned with the practical implications of implementing and executing matrix operations in computers with real data.

It is an area that requires some prior experience with linear algebra, and it focuses on both the performance and the precision of the operations. The company fast.ai released a free course titled “Computational Linear Algebra” on this topic. The course includes Python notebooks and video lectures recorded at the University of San Francisco.

In this post, you will discover the fast.ai free course on computational linear algebra.

After reading this post, you will know:

- The motivation and prerequisites for the course.

- An overview of the topics covered in the course.

- Who exactly this course is a good fit for, and who it is not.

Let’s get started.

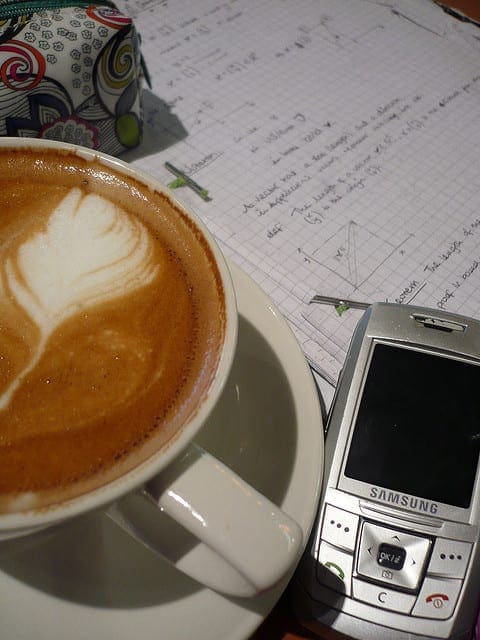

Computational Linear Algebra for Coders Review

Photo by Ruocaled, some rights reserved.

Course Overview

The course “Computational Linear Algebra for Coders” is a free online course provided by fast.ai, a company dedicated to providing free educational resources related to deep learning.

The course was originally taught in 2017 by Rachel Thomas at the University of San Francisco as part of a master's degree program. Rachel Thomas, a professor at the University of San Francisco and co-founder of fast.ai, holds a Ph.D. in mathematics.

The focus of the course is numerical methods for linear algebra: the application of matrix algebra on computers, including the practical concerns of implementing and using these methods, such as performance and precision.

This course is focused on the question: How do we do matrix computations with acceptable speed and acceptable accuracy?

The course uses Python, with examples using NumPy, scikit-learn, Numba, PyTorch, and more.

The material is taught using a top-down approach, much like MachineLearningMastery, intended to give you a feel for how to use the methods before explaining how they work.

Knowing how these algorithms are implemented will allow you to better combine and utilize them, and will make it possible for you to customize them if needed.

Course Prerequisites and References

The course does assume familiarity with linear algebra.

This includes topics such as vectors, matrices, operations such as matrix multiplication and transforms.

The course is not for novices to the field of linear algebra.

Three references are suggested for you to review prior to taking the course if you are new or rusty with linear algebra. They are:

- 3Blue1Brown Essence of Linear Algebra, Video Course

- Immersive Linear Algebra, Interactive Textbook

- Chapter 2 of Deep Learning, 2016.

Further, while working through the course, references are provided as needed.

Two general reference texts are suggested up front. They are the following textbooks:

- Numerical Linear Algebra, 1997.

- Numerical Methods, 2012.

Course Contents

This section provides a summary of the 9 parts of the course. They are:

- 0. Course Logistics

- 1. Why are we here?

- 2. Topic Modeling with NMF and SVD

- 3. Background Removal with Robust PCA

- 4. Compressed Sensing with Robust Regression

- 5. Predicting Health Outcomes with Linear Regressions

- 6. How to Implement Linear Regression

- 7. PageRank with Eigen Decompositions

- 8. Implementing QR Factorization

Really, there are only 8 parts to the course, as the first (Part 0) covers only administrative details for the students who took the course at the University of San Francisco.

Lecture Breakdown

In this section, we will step through the 9 parts of the course and summarize their contents and topics covered to give you a feel for what to expect and to see whether it is a good fit for you.

Part 0. Course Logistics

This first lecture is not really part of the course.

It provides an introduction to the lecturer, the material, the way it will be taught, and the expectations of students in the master's program.

I’ll be using a top-down teaching method, which is different from how most math courses operate. Typically, in a bottom-up approach, you first learn all the separate components you will be using, and then you gradually build them up into more complex structures. The problems with this are that students often lose motivation, don’t have a sense of the “big picture”, and don’t know what they’ll need.

The topics covered in this lecture are:

- Lecturer background

- Teaching Approach

- Importance of Technical Writing

- List of Excellent Technical Blogs

- Linear Algebra Review Resources

Videos and Notebook:

Part 1. Why are we here?

This part introduces the motivation for the course and the importance of matrix factorization, covering the performance and accuracy of these calculations along with some example applications.

Matrices are everywhere, anything that can be put in an Excel spreadsheet is a matrix, and language and pictures can be represented as matrices as well.

A great point made in this lecture is that both the whole class of matrix decomposition methods and one specific method, the QR algorithm for computing eigenvalues, were reported as being among the top 10 most important algorithms of the 20th century.

A list of the Top 10 Algorithms of science and engineering during the 20th century includes: the matrix decompositions approach to linear algebra. It also includes the QR algorithm.

The topics covered in this lecture are:

- Matrix and Tensor Products

- Matrix Decompositions

- Accuracy

- Memory use

- Speed

- Parallelization & Vectorization
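The accuracy topic, and the floating-point issues the course opens with, can be illustrated with a small experiment. This is a minimal sketch under our own assumptions, not the course's code. The doubling map f is a hypothetical illustration. It was chosen because every floating-point step it performs is exact, so any drift comes purely from storing 0.1 in binary.

```python
from fractions import Fraction

def f(x):
    # Doubling map on [0, 1] (illustrative choice): in exact arithmetic,
    # starting from 1/10 it cycles through 1/5, 2/5, 4/5, 3/5 forever
    if x <= 0.5:
        return 2 * x
    return 2 * x - 1

def iterate(x, n):
    # Apply f to x n times
    for _ in range(n):
        x = f(x)
    return x

exact = iterate(Fraction(1, 10), 80)  # exact rational arithmetic
approx = iterate(0.1, 80)             # IEEE double: 0.1 is stored with a tiny error
print(exact, approx)                  # 3/5 1.0
```

In exact arithmetic the sequence cycles forever. In double precision, the small representation error in 0.1 doubles at every step. After roughly 55 iterations it has swamped the true value, and the sequence gets stuck at 1.0.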

Videos and Notebook:

Part 2. Topic Modeling with NMF and SVD

This part focuses on the use of matrix factorizations for topic modeling of text, specifically the Singular Value Decomposition (SVD) and Non-negative Matrix Factorization (NMF).

Especially useful in this part are the comparisons between computing the factorizations from scratch or with NumPy and computing them with the scikit-learn library.
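To make the SVD approach concrete, here is a minimal NumPy sketch that assumes a hypothetical six-word vocabulary and four toy documents. The TF-IDF weighting shown here (log inverse document frequency plus one) is one common variant among several. It is not necessarily the one used in the course or by scikit-learn.

```python
import numpy as np

# Hypothetical toy corpus: rows are documents, columns are vocabulary terms
vocab = ["ball", "goal", "team", "vote", "party", "election"]
counts = np.array([
    [3, 2, 2, 0, 0, 0],
    [2, 3, 1, 0, 0, 0],
    [0, 0, 0, 3, 2, 2],
    [0, 0, 1, 2, 3, 2],
], dtype=float)

# TF-IDF (one common variant): term frequency times (log inverse document frequency + 1)
tf = counts / counts.sum(axis=1, keepdims=True)
df = (counts > 0).sum(axis=0)
idf = np.log(counts.shape[0] / df) + 1.0
X = tf * idf

# Thin SVD: X = U @ diag(s) @ Vt, with singular values s in descending order
U, s, Vt = np.linalg.svd(X, full_matrices=False)

# Each row of Vt is a direction over the vocabulary; print its heaviest terms
k = 2
for i in range(k):
    top = np.argsort(-np.abs(Vt[i]))[:3]
    print(f"component {i}:", [vocab[j] for j in top])

# Truncated SVD: keep only the top-k singular triplets
X_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k]
```

Keeping only the top k singular triplets gives the truncated SVD. By the Eckart-Young theorem, X_k is the best rank-k approximation of X in the Frobenius norm.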

Topic modeling is a great way to get started with matrix factorizations.

The topics covered in this lecture are:

- Term Frequency-Inverse Document Frequency (TF-IDF)

- Singular Value Decomposition (SVD)

- Non-negative Matrix Factorization (NMF)

- Stochastic Gradient Descent (SGD)

- Intro to PyTorch

- Truncated SVD
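NMF can also be sketched in a few lines. The version below uses the classic Lee-Seung multiplicative updates, which are one of several ways to fit NMF. The course also lists an SGD approach, and scikit-learn's NMF offers its own solvers. The function name and the toy count matrix V are hypothetical.

```python
import numpy as np

def nmf(V, k, n_iter=1000, eps=1e-10, seed=0):
    """Approximate a non-negative matrix V (m x n) as W @ H with W, H >= 0.

    Uses the Lee-Seung multiplicative updates for the squared Frobenius loss.
    """
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, k))
    H = rng.random((k, n))
    for _ in range(n_iter):
        # Multiplying by non-negative ratios keeps every entry non-negative;
        # eps guards against division by zero
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Hypothetical document-term counts (rows: documents, columns: terms)
V = np.array([
    [3, 2, 2, 0, 0, 0],
    [2, 3, 1, 0, 0, 0],
    [0, 0, 0, 3, 2, 2],
    [0, 0, 1, 2, 3, 2],
], dtype=float)

W, H = nmf(V, k=2)
print(np.round(H, 2))  # each row of H is a non-negative "topic" over the terms
```

Because the updates only ever multiply by non-negative ratios, W and H stay non-negative. This is what lets you read the rows of H as additive topics.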

Videos and Notebook:

- Computational Linear Algebra 2: Topic Modelling with SVD & NMF

- Computational Linear Algebra 3: Review, New Perspective on NMF, & Randomized SVD

- Notebook

Part 3. Background Removal with Robust PCA

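This part focuses on Principal Component Analysis (PCA), which can be computed from the eigendecomposition of the covariance matrix or from the SVD. It also covers Robust PCA, which splits a matrix into a low-rank part and a sparse part.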

The application is video data, where the background is separated from the foreground to isolate changes between frames. This part also implements the LU decomposition from scratch.

When dealing with high-dimensional data sets, we often leverage on the fact that the data has low intrinsic dimensionality in order to alleviate the curse of dimensionality and scale (perhaps it lies in a low-dimensional subspace or lies on a low-dimensional manifold).
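PCA exploits exactly this low intrinsic dimensionality. This minimal NumPy sketch assumes hypothetical 3-D data lying near a line. It shows that the two routes to PCA give the same variances and directions: the eigendecomposition of the covariance matrix, and the SVD of the centered data. Robust PCA itself is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 200 samples in 3-D lying close to a 1-D subspace
t = rng.normal(size=(200, 1))
X = t @ np.array([[2.0, 1.0, 0.5]]) + 0.05 * rng.normal(size=(200, 3))

Xc = X - X.mean(axis=0)  # PCA works on centered data

# Route 1: eigendecomposition of the sample covariance matrix
C = Xc.T @ Xc / (len(Xc) - 1)
eigvals, eigvecs = np.linalg.eigh(C)      # eigh returns ascending eigenvalues
eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]

# Route 2: SVD of the centered data; rows of Vt are the principal directions
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
explained_var = s ** 2 / (len(Xc) - 1)

print(explained_var / explained_var.sum())  # nearly all variance in component 0
```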

The topics covered in this lecture are:

- Load and View Video Data

- SVD

- Principal Component Analysis (PCA)

- L1 Norm Induces Sparsity

- Robust PCA

- LU factorization

- Stability of LU

- LU factorization with Pivoting

- History of Gaussian Elimination

- Block Matrix Multiplication
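The stability topics (LU factorization and LU with pivoting) can be illustrated with a short NumPy sketch of Gaussian elimination with partial pivoting. The function name lu_partial_pivot is hypothetical, and the code assumes a square non-singular matrix. The 2 x 2 test matrix is a standard textbook example rather than one taken from the course.

```python
import numpy as np

def lu_partial_pivot(A):
    """Gaussian elimination with partial pivoting: returns P, L, U with P @ A = L @ U.

    Assumes A is square and non-singular.
    """
    U = np.array(A, dtype=float)
    n = U.shape[0]
    L = np.eye(n)
    P = np.eye(n)
    for k in range(n - 1):
        # Pivot: bring the row with the largest |entry| in column k to row k
        p = k + int(np.argmax(np.abs(U[k:, k])))
        if p != k:
            U[[k, p]] = U[[p, k]]
            P[[k, p]] = P[[p, k]]
            L[[k, p], :k] = L[[p, k], :k]  # swap the multipliers computed so far
        for i in range(k + 1, n):
            L[i, k] = U[i, k] / U[k, k]
            U[i, k:] -= L[i, k] * U[k, k:]
            U[i, k] = 0.0                  # store an exact zero below the pivot
    return P, L, U

A = np.array([[1e-20, 1.0], [1.0, 1.0]])

# Without pivoting: the multiplier is 1e20, and 1 - 1e20 rounds to -1e20, losing A[1, 1]
l21 = A[1, 0] / A[0, 0]
L0 = np.array([[1.0, 0.0], [l21, 1.0]])
U0 = np.array([[A[0, 0], A[0, 1]], [0.0, A[1, 1] - l21 * A[0, 1]]])
print(L0 @ U0)  # bottom-right entry is 0.0, not 1.0

P, L, U = lu_partial_pivot(A)
print(np.allclose(P @ A, L @ U))  # True
```

Without pivoting, the tiny pivot 1e-20 produces a huge multiplier, and the information in A[1, 1] is rounded away. Swapping rows first keeps every multiplier at most 1 in magnitude. For real work, scipy.linalg.lu provides a tested implementation.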

Videos and Notebook:

- Computational Linear Algebra 3: Review, New Perspective on NMF, & Randomized SVD

- Computational Linear Algebra 4: Randomized SVD & Robust PCA

- Computational Linear Algebra 5: Robust PCA & LU Factorization

- Notebook

Part 4. Compressed Sensing with Robust Regression

This part introduces two important concepts: broadcasting, used with NumPy arrays (and elsewhere), and sparse matrices, which crop up a lot in machine learning.

The application focus of this part is compressed sensing: reconstructing CT scan images using L1 and L2 regression.

The term broadcasting describes how arrays with different shapes are treated during arithmetic operations. The term broadcasting was first used by Numpy, although is now used in other libraries such as Tensorflow and Matlab; the rules can vary by library.
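NumPy's rule compares shapes starting from the trailing dimension. Two dimensions are compatible when they are equal or when one of them is 1. A few hypothetical arrays show the rule in action.

```python
import numpy as np

X = np.arange(12, dtype=float).reshape(4, 3)  # 4 samples, 3 features
col_means = X.mean(axis=0)                    # shape (3,)

# (4, 3) - (3,): the (3,) array acts like (1, 3) and is stretched across the 4 rows
X_centered = X - col_means

# (4, 1) * (1, 3) -> (4, 3): an outer product computed by broadcasting
outer = np.arange(4).reshape(4, 1) * np.array([[1, 10, 100]])
print(outer)

# (4, 3) + (4,): trailing dimensions 3 and 4 differ and neither is 1, so this fails
try:
    X + np.ones(4)
except ValueError as err:
    print("broadcast error:", err)
```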

The topics covered in this lecture are:

- Broadcasting

- Sparse matrices

- CT Scans and Compressed Sensing

- L1 and L2 regression
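Sparse storage formats keep only the non-zero entries. As a minimal sketch, the compressed sparse row (CSR) layout below uses the same three arrays (data, indices, indptr) as scipy.sparse.csr_matrix. The helper functions to_csr and csr_matvec are hypothetical. They are written in plain NumPy for clarity, not speed.

```python
import numpy as np

def to_csr(A):
    """Convert a dense 2-D array to CSR arrays (data, indices, indptr)."""
    data, indices, indptr = [], [], [0]
    for row in A:
        nz = np.flatnonzero(row)  # column positions of the non-zeros in this row
        data.extend(row[nz])
        indices.extend(nz)
        indptr.append(len(data))  # row i occupies data[indptr[i]:indptr[i + 1]]
    return np.array(data, dtype=float), np.array(indices, dtype=int), np.array(indptr, dtype=int)

def csr_matvec(data, indices, indptr, x):
    """Compute y = A @ x, touching only the stored non-zeros."""
    y = np.zeros(len(indptr) - 1)
    for i in range(len(y)):
        start, end = indptr[i], indptr[i + 1]
        y[i] = data[start:end] @ x[indices[start:end]]
    return y

A = np.array([
    [0.0, 0.0, 3.0],
    [4.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
    [0.0, 5.0, 6.0],
])
data, indices, indptr = to_csr(A)
x = np.array([1.0, 2.0, 3.0])
print(csr_matvec(data, indices, indptr, x))  # same result as A @ x
```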

Videos and Notebook:

- Computational Linear Algebra 6: Block Matrix Mult, Broadcasting, & Sparse Storage

- Computational Linear Algebra 7: Compressed Sensing for CT Scans

- Notebook

Part 5. Predicting Health Outcomes with Linear Regressions

This part focuses on the development of linear regression models demonstrated with scikit-learn.

The Numba library, a just-in-time compiler that translates Python functions into optimized machine code, is also used to demonstrate how to speed up the matrix operations involved.

We would like to speed this up. We will use Numba, a Python library that compiles code directly to C.

The topics covered in this lecture are:

- Linear regression in sklearn

- Polynomial Features

- Speeding up with Numba

- Regularization and Noise
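Polynomial features let a linear model fit non-linear relationships by adding products of the inputs as new columns. The sketch below is a hypothetical pure-NumPy version, similar in spirit to scikit-learn's PolynomialFeatures(degree=2). Numba is left out so the example runs with NumPy alone, although Python loops like these are a natural target for it.

```python
import numpy as np

def poly2_features(X):
    """Degree-2 polynomial features: [1, x_i ..., x_i * x_j for i <= j]."""
    n, d = X.shape
    cols = [np.ones(n)] + [X[:, j] for j in range(d)]
    for i in range(d):
        for j in range(i, d):
            cols.append(X[:, i] * X[:, j])
    return np.column_stack(cols)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
# Hypothetical noise-free target with an interaction term
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + 0.5 * X[:, 0] * X[:, 1]

F = poly2_features(X)  # columns: 1, x0, x1, x0^2, x0*x1, x1^2
coef, *_ = np.linalg.lstsq(F, y, rcond=None)
print(np.round(coef, 6))
```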

Videos and Notebook:

- Computational Linear Algebra 8: Numba, Polynomial Features, How to Implement Linear Regression

- Notebook

Part 6. How to Implement Linear Regression

This part looks at how to solve linear least squares for linear regression using a suite of different matrix factorization methods. Results are compared to the implementation in scikit-learn.

Linear regression via QR has been recommended by numerical analysts as the standard method for years. It is natural, elegant, and good for “daily use”.

The topics covered in this lecture are:

- How did Scikit Learn do it?

- Naive solution

- Normal equations and Cholesky factorization

- QR factorization

- SVD

- Timing Comparison

- Conditioning & Stability

- Full vs Reduced Factorizations

- Matrix Inversion is Unstable
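The different least-squares routes listed above can be compared in a few lines. The sketch below assumes a hypothetical, well-conditioned random problem, and the helper names are ours. For brevity, np.linalg.solve is used on the triangular systems, even though a dedicated triangular solver (such as scipy.linalg.solve_triangular) would be cheaper.

```python
import numpy as np

rng = np.random.default_rng(42)
A = rng.normal(size=(100, 5))
x_true = np.array([1.0, -2.0, 0.5, 3.0, 0.0])
b = A @ x_true + 0.01 * rng.normal(size=100)

def ls_cholesky(A, b):
    # Normal equations A^T A x = A^T b, with A^T A = L L^T (L lower triangular)
    L = np.linalg.cholesky(A.T @ A)
    z = np.linalg.solve(L, A.T @ b)  # forward substitution
    return np.linalg.solve(L.T, z)   # back substitution

def ls_qr(A, b):
    # Reduced QR (NumPy's default mode): A = Q R, then solve R x = Q^T b
    Q, R = np.linalg.qr(A)
    return np.linalg.solve(R, Q.T @ b)

def ls_svd(A, b):
    # Reduced SVD: A = U diag(s) V^T, so x = V diag(1/s) U^T b
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return Vt.T @ ((U.T @ b) / s)

for solver in (ls_cholesky, ls_qr, ls_svd):
    print(solver.__name__, np.round(solver(A, b), 3))
```

All three agree on this easy problem. The difference shows up when A is ill-conditioned. Forming A.T @ A squares the condition number, which is why the QR and SVD routes are preferred for accuracy.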

Videos and Notebook:

- Computational Linear Algebra 8: Numba, Polynomial Features, How to Implement Linear Regression

- Notebook

Part 7. PageRank with Eigen Decompositions

This part introduces the eigendecomposition and the implementation and application of the PageRank algorithm to a Wikipedia links dataset.
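The power method behind PageRank fits in a few lines. This minimal sketch assumes a small hypothetical link graph stored as a dense adjacency matrix, along with the commonly used damping factor of 0.85. A real dataset such as the DBpedia links would need sparse storage.

```python
import numpy as np

def pagerank(adj, damping=0.85, tol=1e-10, max_iter=1000):
    """Power-method PageRank; adj[i, j] = 1 if page i links to page j."""
    n = adj.shape[0]
    out_deg = adj.sum(axis=1)
    # Row i holds the probabilities of moving from page i; pages with no
    # out-links (dangling) jump uniformly. Transpose to make it column-stochastic.
    M = np.where(out_deg[:, None] > 0, adj / np.maximum(out_deg, 1)[:, None], 1.0 / n).T
    r = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        r_new = damping * (M @ r) + (1 - damping) / n
        if np.abs(r_new - r).sum() < tol:
            return r_new
        r = r_new
    return r

# Hypothetical 4-page web: 0 -> 1, 0 -> 2, 1 -> 2, 2 -> 0, 3 -> 2
adj = np.array([
    [0, 1, 1, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 0],
], dtype=float)
r = pagerank(adj)
print(np.round(r, 4))  # page 2, with the most incoming links, ranks highest
```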

The QR algorithm uses something called the QR decomposition. Both are important, so don’t get them confused.

The topics covered in this lecture are:

- SVD

- DBpedia Dataset

- Power Method

- QR Algorithm

- Two-phase approach to finding eigenvalues

- Arnoldi Iteration
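The QR algorithm repeatedly factors the matrix and then multiplies the factors in reverse order. Below is the simplest, unshifted version, which assumes a hypothetical symmetric matrix with well-separated eigenvalues. Practical implementations first reduce the matrix to Hessenberg form and use shifts (the two-phase approach listed above). This sketch omits both.

```python
import numpy as np

def qr_algorithm(A, n_iter=500):
    """Unshifted QR algorithm: factor A_k = Q_k R_k, then set A_{k+1} = R_k Q_k."""
    Ak = np.array(A, dtype=float)
    for _ in range(n_iter):
        Q, R = np.linalg.qr(Ak)  # this is the QR decomposition, used at every step
        Ak = R @ Q               # R Q = Q^T A_k Q, so A_k keeps the eigenvalues of A
    return Ak

rng = np.random.default_rng(0)
# Hypothetical symmetric test matrix with known, well-separated eigenvalues
Q0, _ = np.linalg.qr(rng.normal(size=(4, 4)))
A = Q0 @ np.diag([5.0, 3.0, 1.5, 0.5]) @ Q0.T

Ak = qr_algorithm(A)
print(np.round(np.diag(Ak), 6))  # the diagonal approaches the eigenvalues of A
```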

Videos and Notebook:

- Computational Linear Algebra 9: PageRank with Eigen Decompositions

- Computational Linear Algebra 10: QR Algorithm to find Eigenvalues, Implementing QR Decomposition

- Notebook

Part 8. Implementing QR Factorization

This final part introduces three ways to implement the QR decomposition from scratch and compares the precision and performance of each method.

We used QR factorization in computing eigenvalues and to compute least squares regression. It is an important building block in numerical linear algebra.
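Gram-Schmidt builds Q one column at a time. The sketch below implements both the classical and the modified variants in one hypothetical function. It compares them on a nearly rank-deficient 4 x 3 matrix: a Läuchli-style matrix, a standard textbook stability example that is not necessarily the one used in the course.

```python
import numpy as np

def gram_schmidt(A, modified=True):
    """Reduced QR of an m x n matrix A (full column rank) by Gram-Schmidt."""
    A = np.array(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    R = np.zeros((n, n))
    for j in range(n):
        v = A[:, j].copy()
        for i in range(j):
            # Classical GS projects the original column A[:, j];
            # modified GS projects the partially orthogonalized v
            R[i, j] = Q[:, i] @ (v if modified else A[:, j])
            v -= R[i, j] * Q[:, i]
        R[j, j] = np.linalg.norm(v)
        Q[:, j] = v / R[j, j]
    return Q, R

delta = 1e-8  # small enough that 1 + delta**2 rounds to 1
A = np.array([
    [1.0, 1.0, 1.0],
    [delta, 0.0, 0.0],
    [0.0, delta, 0.0],
    [0.0, 0.0, delta],
])

Qc, Rc = gram_schmidt(A, modified=False)
Qm, Rm = gram_schmidt(A, modified=True)
print("classical loss of orthogonality:", np.abs(Qc.T @ Qc - np.eye(3)).max())
print("modified loss of orthogonality: ", np.abs(Qm.T @ Qm - np.eye(3)).max())
```

Both variants reproduce A = QR. Classical Gram-Schmidt, however, loses orthogonality completely (an off-diagonal entry of Q^T Q near 0.5), while the modified variant stays orthogonal to roughly the size of delta.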

The topics covered in this lecture are:

- Gram-Schmidt

- Householder

- Stability Examples
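Householder reflections take a different route. Instead of orthogonalizing columns, they zero out each column below the diagonal with an orthogonal reflection. Here is a minimal sketch, with a hypothetical function name, that computes the full QR of an m x n matrix with m >= n.

```python
import numpy as np

def householder_qr(A):
    """Full QR of an m x n matrix A (m >= n) via Householder reflections."""
    R = np.array(A, dtype=float)
    m, n = R.shape
    Q = np.eye(m)
    for k in range(n):
        x = R[k:, k]
        # Reflect x onto a multiple of e1; choosing the sign of x[0] avoids cancellation
        v = x.copy()
        v[0] += np.copysign(np.linalg.norm(x), x[0])
        norm_v = np.linalg.norm(v)
        if norm_v == 0.0:
            continue  # column is already zero on and below the diagonal
        v /= norm_v
        # Apply H = I - 2 v v^T to the trailing block of R, and accumulate Q = H_1 H_2 ...
        R[k:, k:] -= 2.0 * np.outer(v, v @ R[k:, k:])
        Q[:, k:] -= 2.0 * np.outer(Q[:, k:] @ v, v)
    return Q, R

A = np.random.default_rng(0).normal(size=(6, 4))
Q, R = householder_qr(A)
print(np.allclose(Q @ R, A), np.allclose(Q.T @ Q, np.eye(6)))

# The nearly rank-deficient matrix that defeats classical Gram-Schmidt
delta = 1e-8
lauchli = np.array([[1.0, 1.0, 1.0], [delta, 0, 0], [0, delta, 0], [0, 0, delta]])
Q2, R2 = householder_qr(lauchli)
print(np.abs(Q2.T @ Q2 - np.eye(4)).max())  # tiny: near machine precision
```

Because Q is a product of reflections, its loss of orthogonality stays near machine precision even on badly conditioned input. For production use, np.linalg.qr calls LAPACK's Householder-based routines.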

Videos and Notebook:

- Computational Linear Algebra 10: QR Algorithm to find Eigenvalues, Implementing QR Decomposition

- Notebook

Comments on the Course

I think the course is excellent.

A fun walk through numerical linear algebra with a focus on applications and executable code.

The course delivers on the promise of focusing on the practical concerns of matrix operations such as memory, speed, and precision or numerical stability. The course begins with a careful look at issues of floating point precision and overflow.

Throughout the course, methods are frequently compared in terms of execution speed.

Don’t Take This Course, If…

This course is not an introduction to linear algebra for developers, and if that is the expectation going in, you may be left behind.

The course assumes reasonable fluency with the basics of linear algebra, its notation, and its operations, and it states this assumption up front.

I don’t think this course is required if you are interested in deep learning or learning more about the linear algebra operations used in deep learning methods.

Take This Course, If…

If you are implementing matrix algebra methods in your own work and you’re looking to get more out of them, I would highly recommend this course.

I would also recommend this course if you are generally interested in the practical implications of matrix algebra.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Course

- New fast.ai course: Computational Linear Algebra

- Computational Linear Algebra on GitHub

- Computational Linear Algebra Video Lectures

- Community Forums

References

- 3Blue1Brown Essence of Linear Algebra, Video Course

- Immersive Linear Algebra, Interactive Textbook

- Chapter 2 of Deep Learning

- Numerical Linear Algebra, 1997.

- Numerical Methods, 2012.

Summary

In this post, you discovered the fast.ai free course on computational linear algebra.

Specifically, you learned:

- The motivation and prerequisites for the course.

- An overview of the topics covered in the course.

- Who exactly this course is a good fit for, and who it is not.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.
