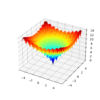

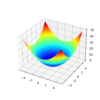

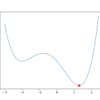

Stochastic optimization refers to the use of randomness in the objective function or in the optimization algorithm. Challenging optimization problems, such as those with high-dimensional nonlinear objective functions, may contain multiple local optima in which deterministic optimization algorithms can get stuck. Stochastic optimization algorithms provide an alternative approach that permits less optimal local decisions to be made […]
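To make the idea of "less optimal local decisions" concrete, here is a minimal sketch that uses simulated annealing as one illustrative stochastic algorithm. The text above does not name a specific method, so this choice is an assumption, as are the test function `objective`, the step size, and the cooling schedule.

```python
import math
import random


def objective(x):
    # Hypothetical 1-D test function with several local minima (an assumption).
    return x * x + 10.0 * math.sin(3.0 * x)


def simulated_annealing(f, x0, n_iter=2000, step=0.5, t0=10.0, seed=0):
    """Minimize f starting from x0, sometimes accepting worse candidates."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    current, current_val = x0, f(x0)
    best, best_val = current, current_val
    for i in range(n_iter):
        # Assumed cooling schedule: temperature shrinks as iterations pass.
        temperature = t0 / (i + 1)
        # Propose a random neighbor of the current point.
        candidate = current + rng.gauss(0.0, step)
        candidate_val = f(candidate)
        delta = candidate_val - current_val
        # Always accept improvements; accept a worse candidate with a
        # probability that falls as delta grows or the temperature drops.
        if delta < 0 or rng.random() < math.exp(-delta / temperature):
            current, current_val = candidate, candidate_val
            if current_val < best_val:
                best, best_val = current, current_val
    return best, best_val


if __name__ == "__main__":
    x_best, f_best = simulated_annealing(objective, x0=4.0)
    print(f"best x = {x_best:.4f}, f(x) = {f_best:.4f}")
```

The acceptance test is where the randomness matters: a purely greedy search would reject every worse candidate and could stay in the first local minimum it reaches, whereas here an occasional uphill move gives the search a chance to leave it. The sketch does not guarantee that the global optimum is found.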