Getting started in machine learning can be frustrating. There’s so much to learn that it feels overwhelming.

So much so that many developers interested in machine learning never get started. The idea of creating models on ad hoc datasets and entering a Kaggle competition sounds exciting but feels like a far-off goal.

So how did a Philosophy graduate get started in machine learning?

In this post I interview Brian Thomas.

Brian got started in machine learning using a top-down approach of actually practicing applied machine learning, after becoming frustrated with theory-heavy online courses.

Discover Brian’s story and the tools and resources he used.

If Brian can find a way to get started in machine learning, so can you.

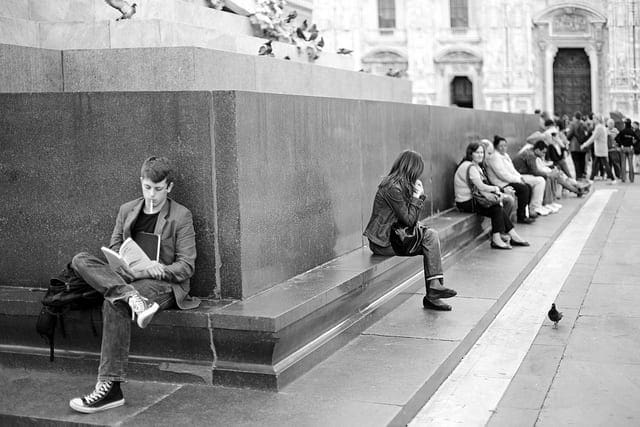

Philosophy Graduate to Machine Learning Practitioner

Photo by Andrew E. Larsen, some rights reserved.

Q: What resources have you already tried to understand machine learning?

What works:

Your Jump-Start Scikit-Learn and Jump-Start Machine Learning in R were very valuable early on as maps of the ML territory, using those two tools to get in and start playing with the different machine learning models. I liked having all the different algorithms broken down and laid out like a map that organized my efforts in paying them a visit, as it were.

From there I went on to Brett Lantz’s Machine Learning with R, which I found especially good.

Currently I am working through Stephen Marsland’s Machine Learning: An Algorithmic Perspective. It’s quite good, and I am finding it much easier to get through than I did when I first picked it up about a year ago.

Overall, what seems to work best is to just get in there and start playing with different data sets and different models. I would have to say that scikit-learn in particular really helped me come to grips with the topic. I also have to tip my hat to the IPython, er, should I say Jupyter notebook. For me it was extremely beneficial to be able to load up some data, try different models out from scikit-learn, and then add some markdown cells that would explain the model and the results in my own words.
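The workflow Brian describes (load some data, try a few scikit-learn models, then explain the results) might look something like the notebook cell below. This is a minimal sketch, not Brian’s own code: the Iris dataset, the 70/30 split, and the choice of logistic regression and a decision tree are assumptions made for illustration.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load Iris as a pandas DataFrame: four feature columns plus a "target" column
iris = load_iris(as_frame=True)
df: pd.DataFrame = iris.frame
X = df.drop(columns="target")
y = df["target"]

# Hold out 30% of the rows, keeping the class proportions equal in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1, stratify=y
)

# Try a couple of different models and record their held-out accuracy
accuracies = {}
for model in (LogisticRegression(max_iter=1000), DecisionTreeClassifier(random_state=1)):
    model.fit(X_train, y_train)
    accuracies[type(model).__name__] = model.score(X_test, y_test)

print(accuracies)
```

In a notebook, a markdown cell right after this one is a natural place to write up, in your own words, why the models scored as they did.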

Recently I have also been going through some online machine learning tutorials, particularly those by Jake VanderPlas and Olivier Grisel on scikit-learn. Being able to clone their git repos and play with the code along with their presentation is also most illuminating.

- Jake VanderPlas’s IPython Notebooks on Scikit-Learn

- Olivier Grisel’s IPython Notebooks on Scikit-Learn

What didn’t work:

Pretty much the 2 or 3 online courses I tried to get through, including Andrew Ng’s CS229 ML course, and Nando de Freitas’ online ML courses from UBC.

Not that they were bad or anything, I just didn’t find trying to sit and watch a lecture for 50 minutes about stochastic gradient descent very helpful, especially without the math background. I came to have a better understanding of SGD from pasting the code provided by Marsland in his ML book into a Jupyter notebook and playing around with it.
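To give a flavor of what experimenting with SGD in a notebook can look like, here is a minimal NumPy sketch of stochastic gradient descent fitting a straight line. It is not Marsland’s code; the synthetic data, the learning rate `lr`, and the epoch count are assumptions chosen for illustration. Each update uses the gradient of the squared error on a single example, visited in random order, which is what distinguishes SGD from batch gradient descent.

```python
import numpy as np


def sgd_linear_regression(X, y, lr=0.01, epochs=50, seed=0):
    """Fit y ~ X @ w + b by stochastic gradient descent on squared error."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        # Visit the training examples in a fresh random order each epoch
        for i in rng.permutation(n_samples):
            error = X[i] @ w + b - y[i]
            # Gradient of 0.5 * error**2 with respect to w is error * X[i]; w.r.t. b it is error
            w -= lr * error * X[i]
            b -= lr * error
    return w, b


# Synthetic data from the line y = 3x + 0.5 with a little Gaussian noise
rng = np.random.default_rng(42)
X = rng.uniform(-1, 1, size=(200, 1))
y = 3.0 * X[:, 0] + 0.5 + rng.normal(scale=0.1, size=200)

w, b = sgd_linear_regression(X, y)
print(f"w = {w[0]:.3f}, b = {b:.3f}")
```

Changing `lr` or `epochs` and re-running the cell shows directly how the step size and the number of passes affect how close the fit gets to the true slope and intercept.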

Of course I didn’t officially enroll in these courses or anything; I just downloaded all the lectures, notes, and assignments, and tried to work my way through them. In the end, they just seemed to get bogged down in theory. I think that bespeaks the problem with a lot of this: people come into this without much math background (such as myself), see all this mathematical theory, and run away screaming.

Code first, then let the theoretical understanding evolve. That seems to be the proper approach and I know you certainly sympathize with that.

Q: Are you able to share a little bit about your background and work?

I graduated from university with a bachelor’s degree in philosophy in 1995.

Surprisingly, that inadvertently opened the door for me to enter the IT job market, as I landed an administrative gig at a place where I was working a contract job. From that job I ended up learning a lot on my own about databases and programming.

Coming from a background in philosophy, I have always been able to break things down into their component parts and see how they all play with each other (which probably accounts for my pretty decent problem-solving skills). However, coming from that background, my math skills were non-existent! I stopped at second-year algebra way back in high school and never went beyond that.

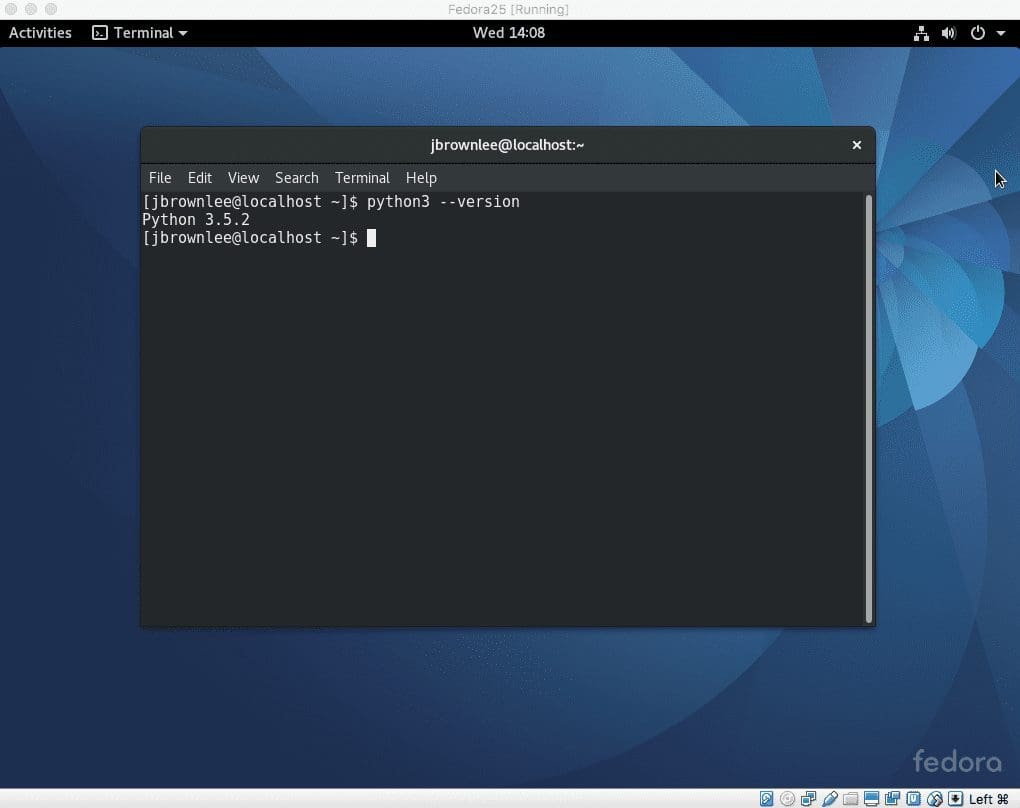

For the past 7 years I have been wearing many different hats at a major insurance company. My daily duties include server and software testing administration, which involves developing numerous PowerShell (and now Python) applications to support that work.

Q: What are some specific examples of algorithms and datasets you’ve played around with?

The first ML book I really cut my teeth on was Brett Lantz’s Machine Learning with R and I went through all of the datasets and algorithms in there as well as the “classic hits” such as the Iris dataset. It’s a great book for a beginner IMHO.

At the same time I was going through Lantz’s book, I was also teaching myself Python through books such as Charles Dierbach’s Introduction to Computer Science Using Python: A Computational Problem-Solving Focus, which focused on programming in Python per se, not ML.

Soon after the Lantz book, however, I found myself gravitating more and more towards Python in my day-to-day work. The only machine learning book for Python that I have worked with is Stephen Marsland’s Machine Learning: An Algorithmic Perspective.

Recently I also played around with the Titanic dataset and practiced cleaning up the data, selecting the proper features, and then trying a wide range of algorithms from scikit-learn, such as Naïve Bayes, k-nearest neighbors, AdaBoost, and random forest classifiers.
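A side-by-side comparison of the four classifiers Brian names might be sketched as follows. The Titanic data is not bundled with scikit-learn, so this sketch assumes scikit-learn’s built-in breast cancer dataset as a stand-in binary classification problem; the 5-fold cross-validation and the hyperparameters are illustrative choices, not Brian’s settings. Feature standardization is applied only to k-nearest neighbors, since its distance calculations are sensitive to feature scale.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Stand-in binary dataset (assumption: used in place of the Titanic data)
X, y = load_breast_cancer(return_X_y=True)

models = {
    "Naive Bayes": GaussianNB(),
    # kNN is distance-based, so standardize the features inside the pipeline
    "k-nearest neighbors": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "AdaBoost": AdaBoostClassifier(random_state=0),
    "Random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Score every model with the same 5-fold cross-validation
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Wrapping the scaler in a pipeline means it is refit on each training fold, so no information from the held-out fold leaks into the preprocessing step.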

I am also starting to explore Python packages that utilize the GPU (I recently purchased an ASUS laptop with an NVIDIA GeForce 950M GPU and have a nice CUDA environment up and running on it), notably Theano.

Q: I notice you’ve tried Python and R for machine learning, what are your impressions on both?

I delved into actually DOING machine learning at first with R rather than Python, so this might have skewed my views on the two.

However, it DID seem that learning machine learning with R was more straightforward.

Was it the Lantz book?

Was it because R is a statistical programming language and therefore you have to get right to the various mathematical concepts when coding?

I definitely think the latter might have something to do with this impression.

Now, however, I am pretty much firmly in Python territory, mainly due to packages such as pandas and Theano (my two favorites at this point). I am particularly interested in, and have been having fun playing around with, Theano.

I like the way you can declare variables, their types, then build up expressions, and then functions out of these that can be automatically compiled for utilization by the GPU.

That’s pretty cool!

Q: What are your goals or ambitions in diving into machine learning?

Hearkening back to my own philosophical background, a philosophical curiosity is what got me into it.

Machines….learn?! How?!?

You have to admit, the whole history and practice of the field is nothing short of fascinating, touching upon an assortment of issues that are ultimately philosophical in nature. Plus the whole field is just getting more interesting with recent developments such as deep learning.

Also related to deep learning is the paradigm shift of sorts that has occurred with the advent of massively parallel GPU programming.

It seems that the recent advances in deep learning could not have happened without this, and it is cool to tell Theano to use my GPU and then churn through the deep learning tutorial algorithms and MNIST data on the LISA Lab’s deep learning website.

Final Words

Thanks to Brian for sharing his story and experiences.

Brian has made his start, built skills by working through problems in R and Python, and is now taking on the more complex topic of deep learning.

Even though he is still near the beginning, his machine learning journey is off to a great start: he can actually practice applied machine learning.

I think that Brian’s story is inspiring if you are looking to make your start in machine learning.

What are you waiting for?
