XGBoost With Python

Discover The Algorithm That Is Winning Machine Learning Competitions

$37 USD

XGBoost is the dominant technique for predictive modeling on regular data.

The gradient boosting algorithm is the top technique on a wide range of predictive modeling problems, and XGBoost is the fastest implementation. When asked, the best machine learning competitors in the world recommend using XGBoost.

In this new Ebook written in the friendly Machine Learning Mastery style that you’re used to, learn exactly how to get started and bring XGBoost to your own machine learning projects. After purchasing you will get:

- Read on all devices: English PDF format EBook, no DRM.

- Tons of tutorials: 30 step-by-step lessons, 115 pages.

- Working code: 31 Python (.py) code files included.

Apply XGBoost To Your Projects Today!

Convinced?

Why Is XGBoost So Powerful?

… the secret is its “speed” and “model performance”

The Gradient Boosting algorithm has been around since 1999. So why is it so popular right now?

The reason is that we now have machines fast enough and enough data to really make this algorithm shine.

Academics and researchers knew it was a dominant algorithm, more powerful than random forest, but few people in industry knew about it.

This was due to two main reasons:

- The implementations of gradient boosting in R and Python were not really developed for performance and hence took a long time to train even modestly sized models.

- Because of the lack of attention on the algorithm, there were few good heuristics on which parameters to tune and how to tune them.

Naive implementations are slow, because the algorithm requires one tree to be created at a time to attempt to correct the errors of all previous trees in the model.

This sequential procedure results in models with really great predictive capability, but can be very slow to train when hundreds or thousands of trees need to be created from large datasets.
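To make the sequential procedure concrete, here is a minimal sketch of naive gradient boosting for regression. It is not how XGBoost is implemented: it assumes squared error loss, a single numeric input feature, and one-split "stumps" as the trees, and every function name in it is made up for illustration.

```python
# Naive gradient boosting for regression (illustrative sketch only).
# Assumptions: squared error loss, one numeric feature, one-split stumps.

def fit_stump(xs, residuals):
    """Find the threshold on xs that best splits the residuals by squared error."""
    best = None
    # Skip the largest value: splitting there would leave the right side empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_trees=50, learning_rate=0.1):
    """Add one stump at a time, each fit to the errors of the model so far."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # With squared error, the errors to correct are simply the residuals.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, stumps, learning_rate


def predict_boosted(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - predict_boosted(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data: y = x^2 on 20 points.
xs = [float(i) for i in range(20)]
ys = [x * x for x in xs]
few = fit_boosted(xs, ys, n_trees=5)
many = fit_boosted(xs, ys, n_trees=200)
print(mse(few, xs, ys), mse(many, xs, ys))
```

Each new stump cannot be fit until the previous one has updated the predictions, which is why the outer loop is hard to speed up and why a naive implementation slows down as the number of trees and rows grows.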

XGBoost Changed Everything

XGBoost was developed by Tianqi Chen and collaborators for speed and performance.

Tianqi is a top machine learning researcher, so he knows deeply how the algorithm works. He is also a very good engineer, so he knows how to build high-quality software.

This combination of talents allowed him to re-frame the internals of the gradient boosting algorithm in such a way that it can exploit the full potential of the memory and CPU cores of your hardware.

In XGBoost, individual trees are created using multiple cores and data is organized to minimize the lookup times, all good computer science tips and tricks.

The result is an implementation of gradient boosting in the XGBoost library that can be configured to squeeze the best performance from your machine, whilst offering all of the knobs and dials to tune the behavior of the algorithm to your specific problem.

This Power Did Not Go Unnoticed

Soon after the release of XGBoost, top machine learning competitors started using it.

More than that, they started winning competitions on sites like Kaggle. And they were not shy about sharing the news about XGBoost.

For example, here are some quotes from top Kaggle competitors:

As the winner of an increasing amount of Kaggle competitions, XGBoost showed us again to be a great all-round algorithm worth having in your toolbox.

— Dato Winners’ Interview, Mad Professors

I only used XGBoost.

— Liberty Mutual Property Inspection Winner’s Interview, Qingchen Wang

In fact, the formerly ranked #1 Kaggle competitor in the world, Owen Zhang, strongly encourages the use of XGBoost:

When in doubt, use xgboost.

— Avito Winner’s Interview, Owen Zhang

XGBoost is a powerhouse when it comes to developing predictive models.

So how do you get started using it?

How Do You Get Started Using XGBoost

…be systematic and develop a new core skill

The Slow Way

The way that most people get started with XGBoost is the slow way.

- They try and find and read all of the official documentation for the library.

- Next, they try to adapt demos and examples to their problem.

The problem is they don’t even know anything about the underlying algorithm that XGBoost implements. Therefore, they don’t know what parameters to tune to best adapt the algorithm to their problem.

They most definitely don’t know about the full capabilities of the library.

This is the slow and frustrating way to get started with XGBoost, and sadly it is the most common.

The Fast Way

Knowing that things can be different, you can see the faster path:

- Learn something about the underlying algorithm so you know how to configure it.

- Learn about the suite of key features supported by the library.

- Practice using features of the library on small well understood problems.

- Get started applying XGBoost to your own problem.

This will cut the time taken to go from beginner to proficient practitioner by a factor of two to four, if not more.

You also get the benefits of really knowing how to wield XGBoost in a range of different situations.

But you still have to find and gather all of the materials together yourself, and then study them.

The Best Way

There is an even faster way:

- Find an expert who has actually done all of the research and who has actually used XGBoost on real problems.

- Have them prepare the materials for you to study.

In addition to saving you a lot of wasteful time researching algorithm and library details, this approach can speed up the learning process by giving you access to:

- Tips and tricks to get past roadblocks and get the most from the algorithm.

- Code examples that work, can be run immediately and can provide templates for your own problems.

- An expert who can answer questions and point you to the best resources to learn more.

If you want to get started with XGBoost, then you are in the right place.

Introducing “XGBoost With Python”

…your ticket to developing and tuning XGBoost models

This book was designed for you as a developer to rapidly get up to speed with applying Gradient Boosting in Python using the best-of-breed library XGBoost.

The Ebook uses a step-by-step tutorial approach throughout to help you focus on getting results in your projects and delivering value.

The goal is to get you up to speed on gradient boosting and XGBoost so that you can create your first gradient boosting model as fast as possible, then guide you through the finer points of the library and of tuning your models.

This Ebook is your guide to developing and tuning XGBoost models on your own machine learning projects.

Let’s take a closer look at the breakdown of what you will discover inside this Ebook.

Everything You Need To Know to Develop XGBoost Models in Python

This Ebook was designed to get you up and running with XGBoost as fast as possible.

As such, a series of step-by-step tutorial-based lessons was designed to lead you from XGBoost beginner to being an effective XGBoost practitioner.

Below is an overview of the step-by-step lessons on XGBoost you will complete divided into three parts:

Part 1: XGBoost Basics

- Lesson 01: A Gentle Introduction to Gradient Boosting.

- Lesson 02: A Gentle Introduction to XGBoost.

- Lesson 03: How to Develop your First XGBoost Model in Python.

- Lesson 04: How to Best Prepare Data For Use With XGBoost.

- Lesson 05: How to Evaluate the Performance of Models.

- Lesson 06: How to Visualize Individual Decision Trees in XGBoost.

Part 2: XGBoost Advanced

- Lesson 07: How to Save And Load XGBoost Models.

- Lesson 08: How to Review and Use Feature Importance.

- Lesson 09: How to Monitor Performance and Use Early Stopping.

- Lesson 10: How to Configure XGBoost for Multithreading.

- Lesson 11: How to Develop Large XGBoost models in the Cloud.

Part 3: XGBoost Tuning

- Lesson 12: Best Practices When Configuring XGBoost.

- Lesson 13: How to Tune the Number and Size of Decision Trees.

- Lesson 14: How to Tune Learning Rate and Number of Trees.

- Lesson 15: How to Tune Sampling in Stochastic Gradient Boosting.

Each lesson was designed to be completed in about 30 minutes by the average developer.

XGBoost With Python Table of Contents

Here’s Everything You’ll Get…

in XGBoost With Python

Hands-On Tutorials

A digital download that contains everything you need, including:

- Clear algorithm descriptions that help you to understand the principles that underlie the technique.

- Step-by-step XGBoost tutorials to show you exactly how to apply each method.

- Python source code recipes for every example in the book so that you can run the tutorial and project code in seconds.

- Digital Ebook in PDF format so that you can have the book open side-by-side with the code and see exactly how each example works.

The XGBoost basics to get you started and build a foundation, including:

- The gradient boosting algorithm description and the 4 extensions that improve performance.

- The XGBoost implementation of gradient boosting and the key differences that make it so fast.

- The application of XGBoost to a simple predictive modeling problem, step-by-step.

- The 2 important steps in data preparation you must know when using XGBoost with scikit-learn.

- The surprising automatic handling of missing values and how it compares to imputing values manually.

- The 2 ways to estimate model performance of XGBoost models with scikit-learn.

- The visualization of individual trees within a trained XGBoost model.

Advanced Usage and Tuning

The advanced XGBoost usage to speed-up your own projects, including:

- The 2 techniques to save a trained XGBoost model and later load it to make predictions on new data.

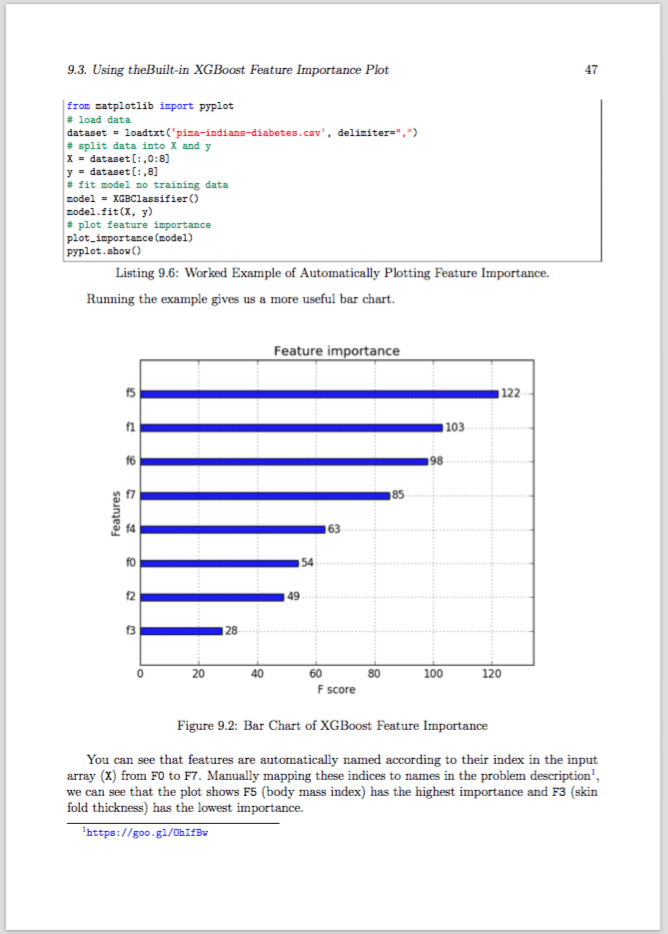

- The calculation of feature importance scores and the 2 ways to plot the results.

- The diagnostics of plotting learning curves from XGBoost models and how to stop training early.

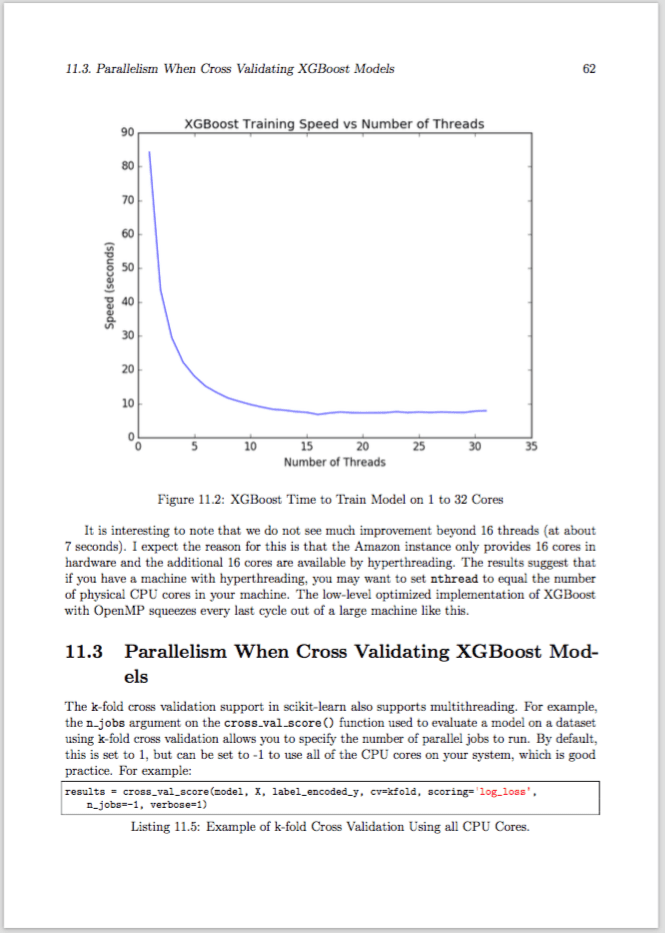

- The multithreading support of XGBoost and how to best harness this feature when parallelizing models.

- The use of Amazon cloud computing to speed up the training of very large XGBoost models using lots of CPU cores.
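As a rough illustration of the early stopping idea mentioned above, the sketch below applies the usual "patience" rule to a list of per-round validation losses: stop once the loss has failed to improve for a fixed number of rounds and keep the best round seen. The function name and the loss values are hypothetical, and a non-empty list of losses is assumed. XGBoost offers a built-in equivalent through its `early_stopping_rounds` option, so in practice you would not write this yourself.

```python
def best_round_with_early_stopping(validation_losses, patience=10):
    """Return (best_round, best_loss, stopped_round) for per-round validation losses.

    Training is considered stopped at the first round that is `patience`
    rounds past the best round without any improvement.
    """
    best_round, best_loss = 0, float("inf")
    for round_index, loss in enumerate(validation_losses):
        if loss < best_loss:
            # New best score: remember where it happened.
            best_round, best_loss = round_index, loss
        elif round_index - best_round >= patience:
            # No improvement for `patience` rounds: stop here.
            return best_round, best_loss, round_index
    # Ran out of rounds before the patience limit was reached.
    return best_round, best_loss, len(validation_losses) - 1


# Made-up validation losses: improving at first, then slowly getting worse.
losses = [0.9, 0.7, 0.6, 0.55, 0.56, 0.57, 0.58, 0.6, 0.62]
result = best_round_with_early_stopping(losses, patience=3)
print(result)
```

Plotting the same list of losses against the round number gives the kind of learning curve used to diagnose when a model starts to overfit.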

The important XGBoost model tuning steps needed to get the best results, including:

- The expert best practices that you need to know when tuning gradient boosting models.

- The balance between the size and number of decision trees when tuning XGBoost models.

- The slowing down of learning during training with learning rate and the impact on the number of trees.

- The careful use of random sampling of rows and columns in tree construction and how this affects the mean and variance of performance.
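To give a feel for how these tuning topics interact, here is a small sketch that expands a parameter grid into every candidate configuration. The parameter names are the ones used by XGBoost's scikit-learn-style wrapper; the values are placeholders chosen for illustration, not recommendations, and `expand_grid` is a hypothetical helper.

```python
from itertools import product

# Tuning topics mapped to scikit-learn-style XGBoost parameter names.
# The candidate values are illustrative assumptions only.
param_grid = {
    "n_estimators": [100, 200, 500],      # number of trees
    "max_depth": [2, 4, 6],               # size of each tree
    "learning_rate": [0.01, 0.1, 0.3],    # shrinkage applied to each new tree
    "subsample": [0.5, 0.8, 1.0],         # fraction of rows sampled per tree
    "colsample_bytree": [0.5, 0.8, 1.0],  # fraction of columns sampled per tree
}


def expand_grid(grid):
    """Return one dict per combination of values in the grid."""
    names = list(grid)
    return [dict(zip(names, values))
            for values in product(*(grid[name] for name in names))]


candidates = expand_grid(param_grid)
print(len(candidates))
```

Five parameters with three values each already produce hundreds of models to train, which is one reason to tune a couple of related parameters at a time rather than everything at once.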

Resources you need to go deeper, when you need to, including:

- Top machine learning textbooks and the specific chapters that discuss gradient boosting to deepen your understanding, if you crave more.

- Seminal gradient boosting papers by the experts and links to download the PDF versions.

- The best places online where you can find more details about the XGBoost library.

What More Do You Need?

Take a Sneak Peek Inside The Ebook

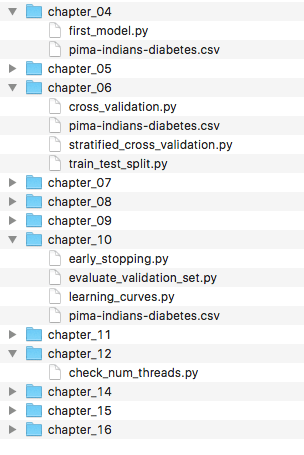

BONUS: XGBoost Python Code Recipes

…you also get 30 fully working XGBoost scripts

Each recipe presented in the book is standalone, meaning that you can copy and paste it into your project and use it immediately.

- You get one Python script (.py) for each example provided in the book.

- You get the datasets used throughout the book.

Your XGBoost Code Recipe Library covers the following topics:

- Binary Classification

- Multiclass Classification

- One Hot Encoding

- k-fold Cross Validation

- Train-Test Splits

- Tree Visualization

- Model Serialization

- Feature Importance Scoring

- Feature Selection

- Early Stopping

- Multicore and Multithreaded Configuration

- Grid Search Hyperparameter Tuning

This means that you can follow along and compare your answers to a known working implementation of each algorithm in the provided Python files.

This helps a lot to speed up your progress when working through the details of a specific task.

Code Provided with XGBoost with Python

About The Author

Hi, I'm Jason Brownlee. I run this site and I wrote and published this book.

I live in Australia with my wife and sons. I love to read books, write tutorials, and develop systems.

I have a computer science and software engineering background as well as Masters and PhD degrees in Artificial Intelligence with a focus on stochastic optimization.

I've written books on algorithms, won and ranked well in competitions, consulted for startups, and spent years in industry. (Yes, I have spent a long time building and maintaining REAL operational systems!)

I get a lot of satisfaction helping developers get started and get really good at applied machine learning.

I teach an unconventional top-down and results-first approach to machine learning where we start by working through tutorials and problems, then later wade into theory as we need it.

I'm here to help if you ever have any questions. I want you to be awesome at machine learning.

Get Your Sample Chapter

Want to take a look before you buy? Download a free sample chapter PDF.

Enter your email address and your sample chapter will be sent to your inbox.

Click Here to Get Your Sample Chapter

Check Out What Customers Are Saying:

You're Not Alone in Choosing Machine Learning Mastery

Trusted by Over 53,938 Practitioners

...including employees from companies like:

...students and faculty from universities like:

and many thousands more...

Absolutely No Risk with...

100% Money Back Guarantee

Plus, as you should expect of any great product on the market, every Machine Learning Mastery Ebook

comes with the surest sign of confidence: my gold-standard 100% money-back guarantee.

100% Money-Back Guarantee

If you're not happy with your purchase of any of the Machine Learning Mastery Ebooks,

just email me within 90 days of buying, and I'll give you your money back ASAP.

No waiting. No questions asked. No risk.

Get Results With The Algorithm That Is

Winning Machine Learning Competitions

Choose Your Package:

Basic Package

You will get:

- XGBoost With Python

(including bonus source code)

(great value!)

All prices are in US Dollars (USD).

(1) Click the button. (2) Enter your details. (3) Download immediately.

Secure Payment Processing With SSL Encryption

Do you have any Questions?

What Are Skills in Machine Learning Worth?

Your boss asks you:

Hey, can you build a predictive model for this?

Imagine you had the skills and confidence to say:

"YES!"

...and follow through.

I have been there. It feels great!

How much is that worth to you?

The industry is demanding skills in machine learning.

The market wants people that can deliver results, not write academic papers.

Businesses know what these skills are worth and are paying sky-high starting salaries.

A Data Scientist's Salary Begins at:

$100,000 to $150,000.

A Machine Learning Engineer's Salary is Even Higher.

What Are Your Alternatives?

You made it this far.

You're ready to take action.

But, what are your alternatives? What options are there?

(1) A Theoretical Textbook for $100+

...it's boring, math-heavy and you'll probably never finish it.

(2) An On-site Boot Camp for $10,000+

...it's full of young kids, you must travel and it can take months.

(3) A Higher Degree for $100,000+

...it's expensive, takes years, and you'll be an academic.

OR...

For the Hands-On Skills You Get...

And the Speed of Results You See...

And the Low Price You Pay...

Machine Learning Mastery Ebooks are

Amazing Value!

And they work. That's why I offer the money-back guarantee.

You're A Professional

The field moves quickly,

...how long can you wait?

You think you have all the time in the world, but...

- New methods are devised and algorithms change.

- New books get released and prices increase.

- New graduates come along and jobs get filled.

Right Now is the Best Time to make your start.

Bottom-up is Slow and Frustrating,

...don't you want a faster way?

Can you really go on another day, week or month...

- Scraping ideas and code from incomplete posts.

- Skimming theory and insight from short videos.

- Parsing Greek letters from academic textbooks.

Targeted Training is your Shortest Path to a result.

Professionals Stay On Top Of Their Field

Get The Training You Need!

You don't want to fall behind or miss the opportunity.

Frequently Asked Questions

Thanks for your interest.

Sorry, I do not support third-party resellers for my books (e.g. reselling in other bookstores).

My books are self-published and I think of my website as a small boutique, specialized for developers that are deeply interested in applied machine learning.

As such I prefer to keep control over the sales and marketing for my books.

I’m sorry, I don’t support exchanging books within a bundle.

The collections of books in the offered bundles are fixed.

My e-commerce system is not sophisticated and it does not support ad-hoc bundles. I’m sure you can understand. You can see the full catalog of books and bundles here:

If you have already purchased a bundle and would like to exchange one of the books in the bundle, then I’m very sorry, I don’t support book exchanges or partial refunds.

If you are unhappy, please contact me directly and I can organize a refund.

Thanks for your interest.

I’m sorry, I cannot create a customized bundle of books for you. It would create a maintenance nightmare for me. I’m sure you can understand.

My e-commerce system is not very sophisticated. It cannot support ad-hoc bundles of books or the a la carte ordering of books.

I do have existing bundles of books that I think go well together.

You can see the full catalog of my books and bundles available here:

Sorry, I don’t sell hard copies of my books.

All of the books and bundles are Ebooks in PDF file format.

This is intentional and I put a lot of thought into the decision:

- The books are full of tutorials that must be completed on the computer.

- The books assume that you are working through the tutorials, not reading passively.

- The books are intended to be read on the computer screen, next to a code editor.

- The books are playbooks; they are not intended to be used as reference texts and sit on the shelf.

- The books are updated frequently, to keep pace with changes to the field and APIs.

I hope that explains my rationale.

If you really do want a hard copy, you can purchase the book or bundle and create a printed version for your own personal use. There is no digital rights management (DRM) on the PDF files to prevent you from printing them.

Sorry, I cannot create a purchase order for you or fill out your procurement documentation.

You can complete your purchase using the self-service shopping cart with Credit Card or PayPal for payment.

After you complete the purchase, I can prepare a PDF invoice for you for tax or other purposes.

Sorry, no.

I cannot issue a partial refund. It is not supported by my e-commerce system.

If you are truly unhappy with your purchase, please contact me about getting a full refund.

I stand behind my books, I know the tutorials work and have helped tens of thousands of readers.

I am sorry to hear that you want a refund.

Please contact me directly with your purchase details:

- Book Name: The name of the book or bundle that you purchased.

- Your Email: The email address that you used to make the purchase (note, this may be different to the email address you used to pay with via PayPal).

- Order Number: The order number in your purchase receipt email.

I will then organize a refund for you.

I would love to hear why the book is a bad fit for you.

Anything that you can tell me to help improve my materials will be greatly appreciated.

I have a thick skin, so please be honest.

Sample chapters are provided for each book.

Each book has its own webpage, you can access them from the catalog.

On each book’s page, you can access the sample chapter.

- Find the section on the book’s page titled “Download Your Sample Chapter”.

- Click the link, provide your email address and submit the form.

- Check your email, you will be sent a link to download the sample.

If you have trouble with this process or cannot find the email, contact me and I will send the PDF to you directly.

Yes.

I can provide an invoice that you can use for reimbursement from your company or for tax purposes.

Please contact me directly with your purchase details:

- The name of the book or bundle that you purchased.

- The email address that you used to make the purchase.

- Ideally, the order number in your purchase receipt email.

- Your full name/company name/company address that you would like to appear on the invoice.

I will create a PDF invoice for you and email it back.

Sorry, I no longer distribute evaluation copies of my books due to some past abuse of the privilege.

If you are a teacher or lecturer, I’m happy to offer you a student discount.

Contact me directly and I can organize a discount for you.

Sorry, I do not offer Kindle (mobi) or ePub versions of the books.

The books are only available in PDF file format.

This is by design and I put a lot of thought into it. My rationale is as follows:

- I use LaTeX to lay out the text and code to give a professional look and I am afraid that EBook readers would mess this up.

- The increase in supported formats would create a maintenance headache that would take a large amount of time away from updating the books and working on new books.

- Most critically, reading on an e-reader or iPad is antithetical to the book-open-next-to-code-editor approach the PDF format was chosen to support.

My materials are playbooks intended to be open on the computer, next to a text editor and a command line.

They are not textbooks to be read away from the computer.

Thanks for your interest in my books.

I’m sorry that you cannot afford my books or purchase them in your country.

I don’t give away free copies of my books.

I do give away a lot of free material on applied machine learning already.

You can access the best free material here:

Maybe.

I offer a discount on my books to:

- Students

- Teachers

- Retirees

If you fall into one of these groups and would like a discount, please contact me and ask.

Maybe.

I support payment via PayPal and Credit Card.

You may be able to set up a PayPal account that accesses your debit card. I recommend contacting PayPal or reading their documentation.

Sorry, no.

I do not support WeChat Pay or Alipay at this stage.

I only support payment via PayPal and Credit Card.

Yes, you can print the purchased PDF books for your own personal interest.

There is no digital rights management (DRM) on the PDFs to prevent you from printing them.

Please do not distribute printed copies of your purchased books.

You can review the table of contents for any book.

I provide two copies of the table of contents for each book on the book’s page.

Specifically:

- A written summary that lists the tutorials/lessons in the book and their order.

- A screenshot of the table of contents taken from the PDF.

If you are having trouble finding the table of contents, search the page for the section titled “Table of Contents”.

Yes.

If you purchase a book or bundle and later decide that you want to upgrade to the super bundle, I can arrange it for you.

Contact me and let me know that you would like to upgrade and what books or bundles you have already purchased and which email address you used to make the purchases.

I will create a special offer code that you can use to get the price of books and bundles purchased so far deducted from the price of the super bundle.

I am happy for you to use parts of my material in the development of your own course material, such as lecture slides for an in person class or homework exercises.

I am not happy if you share my material for free or use it verbatim. This would be copyright infringement.

All code on my site and in my books was developed and provided for educational purposes only. I take no responsibility for the code, what it might do, or how you might use it.

If you use my material to teach, please reference the source, including:

- The Name of the author, e.g. “Jason Brownlee”.

- The Title of the tutorial or book.

- The Name of the website, e.g. “Machine Learning Mastery”.

- The URL of the tutorial or book.

- The Date you accessed or copied the code.

For example:

- Jason Brownlee, Machine Learning Algorithms in Python, Machine Learning Mastery, Available from https://machinelearningmastery.com/machine-learning-with-python/, accessed April 15th, 2018.

Also, if your work is public, contact me, I’d love to see it out of general interest.

Generally no.

I don’t have exercises or assignments in my books.

I do have end-to-end projects in some of the books, but they are in a tutorial format where I lead you through each step.

The book chapters are written as self-contained tutorials with a specific learning outcome. You will learn how to do something at the end of the tutorial.

Some books have a section titled “Extensions” with ideas for how to modify the code in the tutorial in some advanced ways. They are like self-study exercises.

Sorry, new books are not included in your super bundle.

I release new books every few months and develop a new super bundle at those times.

All existing customers will get early access to new books at a discount price.

Note that you do get free updates to all of the books in your super bundle. This includes bug fixes, changes to APIs and even new chapters sometimes. I send out an email to customers for major book updates, or you can contact me any time and ask for the latest version of a book.

No.

I have books that do not require any skill in programming, for example:

Other books do have code examples in a given programming language.

You must know the basics of the programming language, such as how to install the environment and how to write simple programs. I do not teach programming, I teach machine learning for developers.

You do not need to be a good programmer.

That being said, I do offer tutorials on how to set up your environment efficiently and even crash courses on programming languages for developers that may not be familiar with the given language.

No.

My books do not cover the theory or derivations of machine learning methods.

This is by design.

My books are focused on the practical concern of applied machine learning. Specifically, how algorithms work and how to use them effectively with modern open source tools.

If you are interested in the theory and derivations of equations, I recommend a machine learning textbook. Some good examples of machine learning textbooks that cover theory include:

I generally don’t run sales.

If I do have a special, such as around the launch of a new book, I only offer it to past customers and subscribers on my email list.

I do offer book bundles that offer a discount for a collection of related books.

I do offer a discount to students, teachers, and retirees. Contact me to find out about discounts.

Sorry, I don’t have videos.

I only have tutorial lessons and projects in text format.

This is by design. I used to have video content and I found the completion rate much lower.

I want you to put the material into practice. I have found that text-based tutorials are the best way of achieving this. With text-based tutorials you must read, implement and run the code.

With videos, you are passively watching and not required to take any action. Videos are entertainment or infotainment instead of productive learning and work.

After reading and working through the tutorials you are far more likely to use what you have learned.

Yes, I offer a 90-day no questions asked money-back guarantee.

I stand behind my books. They contain my best knowledge on a specific machine learning topic, and each book has been read, tested and used by tens of thousands of readers.

Nevertheless, if you find that one of my Ebooks is a bad fit for you, I will issue a full refund.

There are no physical books, therefore no shipping is required.

All books are EBooks that you can download immediately after you complete your purchase.

I support purchases from any country via PayPal or Credit Card.

Yes.

I recommend using standalone Keras version 2.4 (or higher) running on top of TensorFlow version 2.2 (or higher).

All tutorials on the blog have been updated to use standalone Keras running on top of TensorFlow 2.

All books have been updated to use this same combination.

I do not recommend using Keras as part of TensorFlow 2 yet (e.g. tf.keras). It is too new, new things have issues, and I am waiting for the dust to settle. Standalone Keras has been working for years and continues to work extremely well.

There is one case of tutorials that do not support TensorFlow 2 because the tutorials make use of third-party libraries that have not yet been updated to support TensorFlow 2. Specifically tutorials that use Mask-RCNN for object recognition. Once the third party library has been updated, these tutorials too will be updated.

The book “Long Short-Term Memory Networks with Python” is not focused on time series forecasting, instead, it is focused on the LSTM method for a suite of sequence prediction problems.

The book “Deep Learning for Time Series Forecasting” shows you how to develop MLP, CNN and LSTM models for univariate, multivariate and multi-step time series forecasting problems.

Mini-courses are free courses offered on a range of machine learning topics and made available via email, PDF and blog posts.

Mini-courses are:

- Short, typically 7 days or 14 days in length.

- Terse, typically giving one tip or code snippet per lesson.

- Limited, typically narrow in scope to a few related areas.

Ebooks are provided on many of the same topics providing full training courses on the topics.

Ebooks are:

- Longer, typically 25+ complete tutorial lessons, each taking up to an hour to complete.

- Complete, providing a gentle introduction to each lesson and including full working code and further reading.

- Broad, covering everything required on the topic to get productive quickly and bring the techniques to your own projects.

The mini-courses are designed for you to get a quick result. If you would like more information or fuller code examples on the topic then you can purchase the related Ebook.

The book “Master Machine Learning Algorithms” is for programmers and non-programmers alike. It teaches you how 10 top machine learning algorithms work, with worked examples in arithmetic and spreadsheets, not code. The focus is on an understanding of how each model learns and makes predictions.

The book “Machine Learning Algorithms From Scratch” is for programmers that learn by writing code to understand. It provides step-by-step tutorials on how to implement top algorithms as well as how to load data, evaluate models and more. It has less on how the algorithms work, instead focusing exclusively on how to implement each in code.

The two books can support each other.

The books are a concentrated and more convenient version of what I put on the blog.

I design my books to be a combination of lessons and projects to teach you how to use a specific machine learning tool or library and then apply it to real predictive modeling problems.

The books get updated with bug fixes, updates for API changes and the addition of new chapters, and these updates are totally free.

I do put some of the book chapters on the blog as examples, but they are not tied to the surrounding chapters or the narrative that a book offers and do not offer the standalone code files.

With each book, you also get all of the source code files used in the book that you can use as recipes to jump-start your own predictive modeling problems.

My books are playbooks. Not textbooks.

They have no deep explanations of theory, just working examples that are laser-focused on the information that you need to know to bring machine learning to your project.

There is little math, no theory or derivations.

My readers really appreciate the top-down, rather than bottom-up approach used in my material. It is the one aspect I get the most feedback about.

My books are not for everyone; they are carefully designed for practitioners who need to get results, fast.

A code file is provided for each example presented in the book.

Dataset files used in each chapter are also provided with the book.

The code and dataset files are provided as part of your .zip download in a code/ subdirectory. Code and datasets are organized into subdirectories, one for each chapter that has a code example.
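If you want to see what is in your download before unpacking it, the layout described above can be inspected with Python's standard `zipfile` module. This is a minimal sketch, not something shipped with the book: it assumes the `code/` directory sits at the top level of the archive, and the function names and any chapter directory names are hypothetical.

```python
import zipfile
from collections import defaultdict


def files_by_chapter(names, root="code/"):
    """Group archive member names by their chapter subdirectory under `root`.

    `names` is a list of paths such as those returned by ZipFile.namelist().
    Assumption: `root` is at the top level of the archive.
    """
    grouped = defaultdict(list)
    for name in names:
        # Skip anything outside code/ and skip bare directory entries.
        if not name.startswith(root) or name.endswith("/"):
            continue
        parts = name[len(root):].split("/")
        # Only keep files that live inside a chapter subdirectory.
        if len(parts) >= 2:
            grouped[parts[0]].append("/".join(parts[1:]))
    return dict(grouped)


def list_download(zip_path):
    """Open the .zip download and return its files grouped by chapter."""
    with zipfile.ZipFile(zip_path) as archive:
        return files_by_chapter(archive.namelist())
```

Calling `list_download()` with the path to your own .zip file returns a dictionary that maps each chapter subdirectory to the code and dataset files it contains.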

If you have misplaced your .zip download, you can contact me and I can send an updated purchase receipt email with a link to download your package.

Ebooks can be purchased from my website directly.

- First, find the book or bundle that you wish to purchase. You can see the full catalog here:

- Click on the book or bundle that you would like to purchase to go to the book’s details page.

- Click the “Buy Now” button for the book or bundle to go to the shopping cart page.

- Fill in the shopping cart with your details and payment details, and click the “Place Order” button.

- After completing the purchase you will be emailed a link to download your book or bundle.

All prices are in US dollars (USD).

Books can be purchased with PayPal or Credit Card.

All prices on Machine Learning Mastery are in US dollars.

Payments can be made by using either PayPal or a Credit Card that supports international payments (e.g. most credit cards).

You do not have to explicitly convert money from your currency to US dollars.

Currency conversion is performed automatically when you make a payment using PayPal or Credit Card.

After filling out and submitting your order form, you will be able to download your purchase immediately.

Your web browser will be redirected to a webpage where you can download your purchase.

You will also receive an email with a link to download your purchase.

If you lose the email or the link in the email expires, contact me and I will resend the purchase receipt email with an updated download link.

After you complete your purchase you will receive an email with a link to download your bundle.

The download will include the book or books and any bonus material.

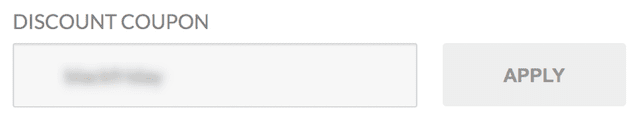

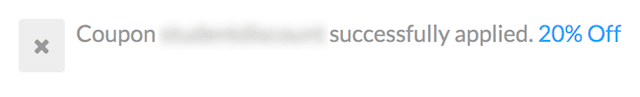

To use a discount code (also called an offer code or discount coupon) when making a purchase, follow these steps:

1. Enter the discount code text into the field named “Discount Coupon” on the checkout page.

Note, if you don’t see a field called “Discount Coupon” on the checkout page, it means that the product does not support discounts.

2. Click the “Apply” button.

3. You will then see a message that the discount was applied successfully to your order.

Note, if the discount code that you used is no longer valid, you will see a message that the discount was not successfully applied to your order.

There are no physical books, therefore no shipping is required.

All books are EBooks that you can download immediately after you complete your purchase.

I recommend reading one chapter per day.

Momentum is important.

Some readers finish a book in a weekend.

Most readers finish a book in a few weeks by working through it during nights and weekends.

You will get your book immediately.

After you complete and submit the payment form, you will be immediately redirected to a webpage with a link to download your purchase.

You will also immediately be sent an email with a link to download your purchase.

What order should you read the books?

That is a great question. My best suggestions are as follows:

- Consider starting with a book on a topic that you are most excited about.

- Consider starting with a book on a topic that you can apply on a project immediately.

Also, consider that you don’t need to read all of the books, perhaps a subset of the books will get you the skills you need or want.

Nevertheless, one suggested order for reading the books is as follows:

- Probability for Machine Learning

- Statistical Methods for Machine Learning

- Linear Algebra for Machine Learning

- Optimization for Machine Learning

- Calculus for Machine Learning

- The Beginner’s Guide to Data Science

- Master Machine Learning Algorithms

- Machine Learning Algorithms From Scratch

- Python for Machine Learning

- Machine Learning Mastery With Weka

- Machine Learning Mastery With Python

- Machine Learning Mastery With R

- Data Preparation for Machine Learning

- Imbalanced Classification With Python

- Time Series Forecasting With Python

- Ensemble Learning Algorithms With Python

- XGBoost With Python

- Deep Learning With Python

- Deep Learning with PyTorch

- Long Short-Term Memory Networks with Python

- Deep Learning for Natural Language Processing

- Deep Learning for Computer Vision

- Machine Learning in OpenCV

- Deep Learning for Time Series Forecasting

- Better Deep Learning

- Generative Adversarial Networks with Python

- Building Transformer Models with Attention

- Productivity with ChatGPT (this book can be read in any order)

I hope that helps.

Sorry, I do not have a license to purchase my books or bundles for libraries.

The books are for individual use only.

Generally, no.

Multi-seat licenses create a bit of a maintenance nightmare for me, sorry. It takes time away from reading, writing and helping my readers.

If you have a big order, such as for a class of students or a large team, please contact me and we will work something out.

I update the books frequently and you can access the latest version of a book at any time.

In order to get the latest version of a book, contact me directly with your order number or purchase email address and I can resend your purchase receipt email with an updated download link.

I do not maintain a public change log or errata for the changes in the book, sorry.

There are no physical books, therefore no delivery is required.

All books are Ebooks in PDF format that you can download immediately after you complete your purchase.

You will receive an email with a link to download your purchase. You can also contact me any time to get a new download link.

I support purchases from any country via PayPal or Credit Card.

My best advice is to start with a book on a topic that you can use immediately.

Barring that, pick the topic that interests you the most.

If you are unsure, perhaps try working through some of the free tutorials to see which area you gravitate towards.

Generally, I recommend focusing on the process of working through a predictive modeling problem end-to-end:

I have three books that show you how to do this, with three top open source platforms:

- Machine Learning Mastery With Weka (no programming)

- Machine Learning Mastery With R (caret)

- Machine Learning Mastery With Python (pandas and scikit-learn)

These are great places to start.

You can always circle back and pick up a book on algorithms later to learn more about how specific methods work in greater detail.

Thanks for your interest.

You can see the full catalog of my books and bundles here:

Thanks for asking.

I try not to plan my books too far into the future. I try to write about the topics that I am asked about the most or topics where I see the most misunderstanding.

If you would like me to write more about a topic, I would love to know.

Contact me directly and let me know the topic and even the types of tutorials you would love for me to write.

Contact me and let me know the email address (or email addresses) that you think you used to make purchases.

I can look up what purchases you have made and resend purchase receipts to you so that you can redownload your books and bundles.

All prices are in US Dollars (USD).

All currency conversion is handled by PayPal for PayPal purchases, or by Stripe and your bank for credit card purchases.

It is possible that your link to download your purchase will expire after a few days.

This is a security precaution.

Please contact me and I will resend your purchase receipt with an updated download link.

The book “Deep Learning With Python” could be a prerequisite to “Long Short-Term Memory Networks with Python”. It teaches you how to get started with Keras and how to develop your first MLP, CNN and LSTM.

The book “Long Short-Term Memory Networks with Python” goes deep on LSTMs and teaches you how to prepare data, how to develop a suite of different LSTM architectures, parameter tuning, updating models and more.

Both books focus on deep learning in Python using the Keras library.

The book “Long Short-Term Memory Networks With Python” focuses on how to develop a suite of different LSTM networks for sequence prediction, in general.

The book “Deep Learning for Time Series Forecasting” focuses on how to use a suite of different deep learning models (MLPs, CNNs, LSTMs, and hybrids) to address a suite of different time series forecasting problems (univariate, multivariate, multistep and combinations).

The LSTM book teaches LSTMs only and does not focus on time series. The Deep Learning for Time Series book focuses on time series and teaches how to use many different models including LSTMs.

The book “Long Short-Term Memory Networks With Python” focuses on how to implement different types of LSTM models.

The book “Deep Learning for Natural Language Processing” focuses on how to use a variety of different networks (including LSTMs) for text prediction problems.

The LSTM book can support the NLP book, but it is not a prerequisite.

You may need a business or corporate tax number for “Machine Learning Mastery”, the company, for your own tax purposes. This is common for EU companies, for example.

The Machine Learning Mastery company is operated out of Puerto Rico.

As such, the company does not have a VAT identification number for the EU or similar for your country or regional area.

The company does have a Company Number. The details are as follows:

- Company Name: Zeus LLC

- Company Number: 421867-1511

There are no code examples in “Master Machine Learning Algorithms”, therefore no programming language is used.

Algorithms are described and their workings are summarized using basic arithmetic. The algorithm behavior is also demonstrated in Excel spreadsheets that are available with the book.

It is a great book for learning how algorithms work, without getting side-tracked with theory or programming syntax.

If you are interested in learning about machine learning algorithms by coding them from scratch (using the Python programming language), I would recommend a different book:

I write the content for the books (words and code) using a text editor, specifically Sublime Text.

I typeset the books and create a PDF using LaTeX.

All of the books have been tested and work with Python 3 (e.g. 3.5 or 3.6).

Most of the books have also been tested and work with Python 2.7.

Where possible, I recommend using the latest version of Python 3.
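To confirm which Python version you are running before working through the code files, a quick check like the following can help. It is a minimal sketch: the minimum of 3.5 is an assumption taken from the example versions mentioned above, and `python_version_ok` is a hypothetical helper name, not part of the book's code.

```python
import sys


def python_version_ok(version_info=sys.version_info, minimum=(3, 5)):
    """Return True if the (major, minor) version is at least `minimum`.

    The default minimum of (3, 5) is an assumption for illustration.
    """
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    # Report the running interpreter version and whether it meets the minimum.
    print("Python", sys.version.split()[0])
    print("Meets the assumed minimum of 3.5:", python_version_ok())
```

If the check reports an older interpreter, installing the latest Python 3 release is the simplest fix.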

After you fill in the order form and submit it, two things will happen:

- You will be redirected to a webpage where you can download your purchase.

- You will be sent an email (to the email address used in the order form) with a link to download your purchase.

The redirect in the browser and the email will happen immediately after you complete the purchase.

You can download your purchase from either the webpage or the email.

If you cannot find the email, check your other email folders, such as the “spam” folder.

If you have any concerns, contact me and I can resend your purchase receipt email with the download link.

I do test my tutorials and projects on the blog first. It’s like the early access to ideas, and many of them do not make it to my training.

Much of the material in the books appeared in some form on my blog first and is later refined, improved and repackaged into a chapter format. I find this helps greatly with quality and bug fixing.

The books provide a more convenient packaging of the material, including source code, datasets and PDF format. They also include updates for new APIs, new chapters, bug and typo fixing, and direct access to me for all the support and help I can provide.

I believe my books offer thousands of dollars of education for tens of dollars each.

They are months if not years of experience distilled into a few hundred pages of carefully crafted and well-tested tutorials.

I think they are a bargain for professional developers looking to rapidly build skills in applied machine learning or use machine learning on a project.

Also, what are skills in machine learning worth to you? To your next project? And to your current or next employer?

Nevertheless, the price of my books may appear expensive if you are a student or if you are not used to the high salaries for developers in North America, Australia, UK and similar parts of the world. For that, I am sorry.

Discounts

I do offer discounts to students, teachers and retirees.

Please contact me to find out more.

Free Material

I offer a ton of free content on my blog. You can get started with my best free material here:

About my Books

My books are playbooks.

They are intended for developers who want to know how to use a specific library to actually solve problems and deliver value at work.

- My books guide you only through the elements you need to know in order to get results.

- My books are in PDF format and come with code and datasets, specifically designed for you to read and work-through on your computer.

- My books give you direct access to me via email (what other books offer that?)

- My books are a tiny business expense for a professional developer that can be charged to the company and is tax deductible in most regions.

Very few training materials on machine learning are focused on how to get results.

The vast majority are about repeating the same math and theory and ignore the one thing you really care about: how to use the methods on a project.

Comparison to Other Options

Let me provide some context for you on the pricing of the books:

There are free videos on YouTube and tutorials on blogs.

- Great, I encourage you to use them, including my own free tutorials.

There are very cheap video courses that teach you one or two tricks with an API.

- My books teach you how to use a library to work through a project end-to-end and deliver value, not just a few tricks.

A textbook on machine learning can cost $50 to $100.

- All of my books are cheaper than the average machine learning textbook, and I expect you may be more productive, sooner.

A bootcamp or other in-person training can cost $1000+ and last for days to weeks.

- A bundle of all of my books is far cheaper than this, they allow you to work at your own pace, and the bundle covers more content than the average bootcamp.

Sorry, my books are not available on websites like Amazon.com.

I carefully decided to not put my books on Amazon for a number of reasons:

- Amazon takes 65% of the sale price of self-published books, which would put me out of business.

- Amazon offers very little control over the sales page and shopping cart experience.

- Amazon does not allow me to contact my customers via email and offer direct support and updates.

- Amazon does not allow me to deliver my book to customers as a PDF, the preferred format for my customers to read on the screen.

I hope that helps you understand my rationale.

I am sorry to hear that you’re having difficulty purchasing a book or bundle.

I use Stripe for Credit Card and PayPal services to support secure and encrypted payment processing on my website.

Some suggestions for when a purchase does not go through:

- Perhaps you can double check that your details are correct, just in case of a typo?

- Perhaps you could try a different payment method, such as PayPal or Credit Card?

- Perhaps you’re able to talk to your bank, just in case they blocked the transaction?

I often see customers trying to purchase with a domestic credit card or debit card that does not allow international purchases. This is easy to overcome by talking to your bank.

If you’re still having difficulty, please contact me and I can help investigate further.

When you purchase a book from my website and later review your bank statement, it is possible that you may see an additional small charge of one or two dollars.

The charge does not come from my website or payment processor.

Instead, the charge was added by your bank, credit card company, or financial institution. It may be because your bank adds an additional charge for online or international transactions.

This is rare but I have seen this happen once or twice before, often with credit cards used by enterprise or large corporate institutions.

My advice is to contact your bank or financial institution directly and ask them to explain the cause of the additional charge.

If you would like a copy of the payment transaction from my side (e.g. a screenshot from the payment processor), or a PDF tax invoice, please contact me directly.

I give away a lot of content for free. Most of it in fact.

It is important to me to help students and practitioners that are not well off, hence the enormous amount of free content that I provide.

You can access the free content:

I have thought very hard about this and I sell machine learning Ebooks for a few important reasons:

- I use the revenue to support the site and all the non-paying customers.

- I use the revenue to support my family so that I can continue to create content.

- Practitioners that pay for tutorials are far more likely to work through them and learn something.

- I target my books towards working professionals that are more likely to afford the materials.

Yes.

All updates to the book or books in your purchase are free.

Books are usually updated once every few months to fix bugs, typos and keep abreast of API changes.

Contact me anytime and check if there have been updates. Let me know what version of the book you have (version is listed on the copyright page).

Yes.

Please contact me anytime with questions about machine learning or the books.

One question at a time please.

Also, each book has a final chapter on getting more help and further reading and points to resources that you can use to get more help.

Yes, the books can help you get a job, but indirectly.

Getting a job is up to you.

It is a matching problem between an organization looking for someone to fill a role and you with your skills and background.

That being said, there are companies that are more interested in the value that you can provide to the business than the degrees that you have. Often, these are smaller companies and start-ups.

You can focus on providing value with machine learning by learning and getting very good at working through predictive modeling problems end-to-end. You can show this skill by developing a machine learning portfolio of completed projects.

My books are specifically designed to help you toward these ends. They teach you exactly how to use open source tools and libraries to get results in a predictive modeling project.