Matrix operations are used in the description of many machine learning algorithms.

Some operations can be used directly to solve key equations, whereas others provide a useful shorthand or a foundation for describing and using more complex matrix operations.

In this tutorial, you will discover important linear algebra matrix operations used in the description of machine learning methods.

After completing this tutorial, you will know:

- The Transpose operation for flipping the dimensions of a matrix.

- The Inverse operation used in solving systems of linear equations.

- The Trace and Determinant operations used as shorthand notation in other matrix operations.


Let’s get started.

A Gentle Introduction to Matrix Operations for Machine Learning

Photo by Andrej, some rights reserved.

Tutorial Overview

This tutorial is divided into 5 parts; they are:

- Transpose

- Inversion

- Trace

- Determinant

- Rank


Transpose

A matrix can be transposed, which creates a new matrix with its rows and columns swapped: an m×n matrix becomes an n×m matrix.

This is denoted by the superscript “T” next to the matrix.

$$C = A^T$$

An invisible diagonal line (the main diagonal) can be drawn through the matrix from top left to bottom right; flipping the matrix across this line gives the transpose.

|

1 2 3 4 5 6 |

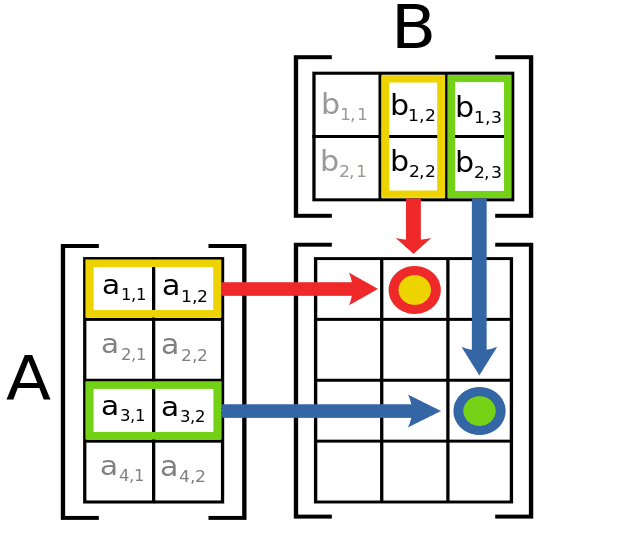

a11, a12 A = (a21, a22) a31, a32 a11, a21, a31 A^T = (a12, a22, a32) |
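Flipping across the main diagonal means that the element in row i, column j of A ends up in row j, column i of A^T. As a minimal sketch, assuming the matrix is stored as a plain Python list of equal-length rows (not a NumPy array), the hypothetical helper `transpose_lists()` below builds the transpose directly from that rule:

```python
def transpose_lists(matrix):
    """Hypothetical helper: transpose a matrix stored as a list of equal-length rows."""
    rows, cols = len(matrix), len(matrix[0])
    # Element (i, j) of the input becomes element (j, i) of the output.
    return [[matrix[i][j] for i in range(rows)] for j in range(cols)]


A = [[1, 2], [3, 4], [5, 6]]  # a 3x2 matrix
print(transpose_lists(A))     # [[1, 3, 5], [2, 4, 6]]
```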

The operation has no effect if the matrix is symmetric, i.e. it has the same number of rows and columns and the same values at mirrored locations on both sides of the invisible diagonal line.

The columns of A^T are the rows of A.

— Page 109, Introduction to Linear Algebra, Fifth Edition, 2016.

We can transpose a matrix in NumPy by accessing its T attribute.

```python
# transpose matrix
from numpy import array
A = array([[1, 2], [3, 4], [5, 6]])
print(A)
C = A.T
print(C)
```

Running the example first prints the matrix as it is defined, then the transposed version.

```
[[1 2]
 [3 4]
 [5 6]]
[[1 3 5]
 [2 4 6]]
```
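To check the earlier claim about symmetric matrices, we can compare a matrix with its transpose. This is a small sketch using `numpy.array_equal()`; the matrices `S` and `N` are made-up examples for illustration:

```python
import numpy as np

# A symmetric matrix: square, with S[i, j] == S[j, i] for every i and j.
S = np.array([[1, 7, 3],
              [7, 4, 5],
              [3, 5, 6]])
print(np.array_equal(S, S.T))  # True: transposing leaves S unchanged

# A square matrix that is not symmetric is changed by the transpose.
N = np.array([[1, 2],
              [3, 4]])
print(np.array_equal(N, N.T))  # False
```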

The transpose operation provides a compact notation and is used as an element in many other matrix operations.
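As an illustration of the transpose appearing inside other operations, the sketch below checks two standard identities on small example matrices of our own choosing: the transpose of a product reverses the order of the factors, (AB)^T = B^T A^T, and the product X^T X, which shows up in least squares, is square and symmetric. It uses NumPy's `@` matrix-multiplication operator.

```python
import numpy as np

A = np.array([[1, 2], [3, 4], [5, 6]])  # 3x2
B = np.array([[1, 0, 2], [0, 1, 3]])    # 2x3

# The transpose of a product reverses the order of the factors.
print(np.array_equal((A @ B).T, B.T @ A.T))  # True

# X^T X is square (columns x columns) and symmetric.
X = np.array([[1.0, 2.0],
              [1.0, 3.0],
              [1.0, 5.0]])
G = X.T @ X
print(G.shape)                 # (2, 2)
print(np.array_equal(G, G.T))  # True
```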

Inversion

Matrix inversion is a process that finds another matrix that, when multiplied with the matrix, results in an identity matrix.

Given a square n×n matrix A, find a matrix B such that AB = BA = I_n, where I_n is the n×n identity matrix.

$$AB = BA = I_n$$

The operation of inverting a matrix is indicated by a -1 superscript next to the matrix; for example, A^-1. The result of the operation is referred to as the inverse of the original matrix; for example, B is the inverse of A.

$$B = A^{-1}$$

A square matrix is invertible if there exists another matrix that, when multiplied with it, results in the identity matrix. Not all matrices are invertible; a square matrix that is not invertible is referred to as singular.

Whatever A does, A^-1 undoes.

— Page 83, Introduction to Linear Algebra, Fifth Edition, 2016.
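Asking NumPy to invert a singular matrix fails. As a sketch, the hypothetical helper `try_invert()` below catches the `numpy.linalg.LinAlgError` that `inv()` raises when it detects a singular matrix and returns `None` instead; the example matrix is singular because its second row is twice its first. With floating-point data, a matrix that is only nearly singular may not raise at all and can instead produce an inverse with huge, unreliable entries.

```python
import numpy as np
from numpy.linalg import inv, LinAlgError


def try_invert(M):
    """Hypothetical helper: return the inverse of M, or None if NumPy reports M as singular."""
    try:
        return inv(M)
    except LinAlgError:
        return None


# The second row is twice the first, so this square matrix is singular.
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
print(try_invert(S))  # None
```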

In practice, the inverse is not computed directly from a formula; instead, it is found numerically, using one of a suite of efficient methods that often involve some form of matrix decomposition.

However, A^−1 is primarily useful as a theoretical tool, and should not actually be used in practice for most software applications.

— Page 37, Deep Learning, 2016.
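In keeping with that advice, when the goal is to solve a system Ax = b, a common alternative is `numpy.linalg.solve()`, which solves the system through a factorization of A without forming A^-1. The sketch below compares the two approaches on a small 2×2 system with a made-up right-hand side b:

```python
import numpy as np
from numpy.linalg import inv, solve

A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
b = np.array([5.0, 6.0])

# Textbook form: x = A^-1 b (forms the inverse explicitly).
x_inv = inv(A) @ b
# Usually preferred in practice: solve Ax = b directly.
x_solve = solve(A, b)

print(x_solve)
print(np.allclose(x_inv, x_solve))  # True
```

Both give x = (-4, 4.5) up to round-off; the difference is that `solve()` never builds the inverse, which is generally cheaper and more numerically stable.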

A matrix can be inverted in NumPy using the inv() function.

```python
# invert matrix
from numpy import array
from numpy.linalg import inv
# define matrix
A = array([[1.0, 2.0], [3.0, 4.0]])
print(A)
# invert matrix
B = inv(A)
print(B)
# multiply A and B
I = A.dot(B)
print(I)
```

First, we define a small 2×2 matrix, then calculate the inverse of the matrix, and then confirm the inverse by multiplying it with the original matrix to give the identity matrix.

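Running the example prints the original, inverse, and identity matrices. The product is the identity matrix up to floating-point round-off, which is why one entry shows a tiny value such as 8.88e-16 instead of exactly 0.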

```
[[ 1.  2.]
 [ 3.  4.]]
[[-2.   1. ]
 [ 1.5 -0.5]]
[[  1.00000000e+00   0.00000000e+00]
 [  8.88178420e-16   1.00000000e+00]]
```

Matrix inversion is used as an operation in solving systems of equations framed as matrix equations where we are interested in finding vectors of unknowns. A good example is in finding the vector of coefficient values in linear regression.
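As a minimal sketch of that idea, assuming a tiny made-up dataset generated exactly from y = 1 + 2x, the coefficients can be found from the normal equations, b = (X^T X)^-1 X^T y. The code solves X^T X b = X^T y with `solve()` rather than forming the inverse, and cross-checks the result with `numpy.linalg.lstsq()`, which solves the least-squares problem from X directly.

```python
import numpy as np

# Made-up example data generated exactly from y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

# Design matrix: a column of ones for the intercept, then the inputs.
X = np.column_stack([np.ones_like(x), x])

# Normal equations (X^T X) b = X^T y, solved without an explicit inverse.
b = np.linalg.solve(X.T @ X, X.T @ y)
print(b)  # expected: close to [1.0, 2.0]

# Cross-check with a direct least-squares solver.
b_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.allclose(b, b_lstsq))  # True
```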

Trace

The trace of a square matrix is the sum of the values on its main diagonal (top-left to bottom-right).

The trace operator gives the sum of all of the diagonal entries of a matrix

— Page 46, Deep Learning, 2016.

The operation of calculating a trace on a square matrix is described using the notation “tr(A)” where A is the square matrix on which the operation is being performed.

$$\operatorname{tr}(A)$$

The trace is calculated as the sum of the diagonal values; for example, in the case of a 3×3 matrix:

$$\operatorname{tr}(A) = a_{11} + a_{22} + a_{33}$$

Or, using array notation:

```
tr(A) = A[0,0] + A[1,1] + A[2,2]
```
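The array notation translates directly into a loop over the diagonal. As a sketch, assuming a square matrix stored as a list of lists, the hypothetical helper `manual_trace()` sums the entries A[i][i]:

```python
def manual_trace(matrix):
    """Hypothetical helper: sum the main-diagonal entries of a square list-of-lists matrix."""
    n = len(matrix)
    # Assumes every row has length n, i.e. the matrix is square.
    return sum(matrix[i][i] for i in range(n))


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(manual_trace(A))  # 15
```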

We can calculate the trace of a matrix in NumPy using the trace() function.

```python
# trace
from numpy import array
from numpy import trace
A = array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(A)
B = trace(A)
print(B)
```

First, a 3×3 matrix is created and then the trace is calculated.

Running the example first prints the array and then the trace.

```
[[1 2 3]
 [4 5 6]
 [7 8 9]]
15
```

On its own, the trace operation is not especially interesting, but it offers a simpler notation and is used as an element in other key matrix operations.
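Two places where the trace appears are the Frobenius norm, which can be written as ||A||_F = sqrt(tr(A^T A)), and the sum of a matrix's eigenvalues, which equals its trace. The sketch below checks both numerically on the same 3×3 matrix as the example; `numpy.linalg.norm(A, 'fro')` and `numpy.linalg.eigvals()` serve only as reference values.

```python
import numpy as np

A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0],
              [7.0, 8.0, 9.0]])

# Frobenius norm written with the trace: ||A||_F = sqrt(tr(A^T A)).
via_trace = np.sqrt(np.trace(A.T @ A))
via_norm = np.linalg.norm(A, 'fro')
print(np.isclose(via_trace, via_norm))  # True

# The trace also equals the sum of the eigenvalues (up to round-off).
eig_sum = np.linalg.eigvals(A).sum().real
print(np.isclose(np.trace(A), eig_sum))  # True
```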

Determinant

The determinant of a square matrix is a scalar that can be read as the (signed) volume of the box whose sides are given by the rows of the matrix.

The determinant describes the relative geometry of the vectors that make up the rows of the matrix. More specifically, the determinant of a matrix A tells you the volume of a box with sides given by rows of A.

— Page 119, No Bullshit Guide To Linear Algebra, 2017
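To make the volume intuition concrete in two dimensions: for a 2×2 matrix with rows (a, b) and (c, d), the determinant is ad − bc, and its absolute value is the area of the parallelogram spanned by the two rows. In the sketch below, the rows (3, 0) and (1, 2) span a parallelogram with base 3 and height 2, so the area is 6; the matrix is an example of our own choosing.

```python
import numpy as np

# Rows of A are the vectors (3, 0) and (1, 2); they span a parallelogram.
A = np.array([[3.0, 0.0],
              [1.0, 2.0]])

# For a 2x2 matrix [[a, b], [c, d]], det(A) = a*d - b*c.
a, b = A[0]
c, d = A[1]
print(a * d - b * c)     # 6.0
print(np.linalg.det(A))  # approximately 6.0 (computed in floating point)
```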

It is denoted by the “det(A)” notation or |A|, where A is the matrix on which we are calculating the determinant.

$$\det(A)$$

The determinant of a square matrix is calculated from the elements of the matrix. More technically, the determinant is the product of all the eigenvalues of the matrix.
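The eigenvalue relationship can be checked numerically. This sketch uses a made-up symmetric 2×2 matrix with determinant 2 × 3 − 1 × 1 = 5 and compares `det()` with the product of the eigenvalues returned by `numpy.linalg.eigvals()`:

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])

eigenvalues = np.linalg.eigvals(A)
# The determinant equals the product of the eigenvalues (up to round-off).
print(np.isclose(np.linalg.det(A), np.prod(eigenvalues)))  # True
```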

The intuition for the determinant is that it describes how a matrix scales volume when it is used to transform space, for example when it is multiplied with another matrix. A determinant of 1 (or −1) preserves volume. A determinant of 0 means the matrix collapses space onto fewer dimensions, which indicates that the matrix cannot be inverted.

The determinant of a square matrix is a single number. […] It tells immediately whether the matrix is invertible. The determinant is a zero when the matrix has no inverse.

— Page 247, Introduction to Linear Algebra, Fifth Edition, 2016.
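The scaling intuition can be seen with example matrices of our own choosing: a diagonal matrix that stretches one axis by 2 and the other by 3 (determinant 6), and a shear (determinant 1) that preserves area. Determinants multiply under matrix multiplication, det(AB) = det(A)det(B), and a matrix whose rows are multiples of each other has a determinant of 0. The comparisons use `numpy.isclose()` because `det()` works in floating point.

```python
import numpy as np
from numpy.linalg import det

A = np.array([[2.0, 0.0],
              [0.0, 3.0]])  # stretches x by 2 and y by 3: det(A) = 6
B = np.array([[1.0, 4.0],
              [0.0, 1.0]])  # a shear: det(B) = 1, so area is preserved

# Determinants multiply: det(AB) = det(A) * det(B).
print(np.isclose(det(A @ B), det(A) * det(B)))  # True

# Rows that are multiples of each other squash the plane onto a line.
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
print(np.isclose(det(S), 0.0))  # True: S has no inverse
```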

In NumPy, the determinant of a matrix can be calculated using the det() function.

```python
# determinant
from numpy import array
from numpy.linalg import det
A = array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(A)
B = det(A)
print(B)
```

First, a 3×3 matrix is defined, then the determinant of the matrix is calculated.

Running the example first prints the defined matrix and then the determinant of the matrix.

```
[[1 2 3]
 [4 5 6]
 [7 8 9]]
-9.51619735393e-16
```

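This matrix is singular (its middle row is the average of the other two rows), so its true determinant is 0; the tiny non-zero value printed is floating-point round-off, and the exact digits may differ across NumPy versions and platforms.

Like the trace, the determinant operation is not especially interesting on its own, but it offers a simpler notation and is used as an element in other key matrix operations.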

Matrix Rank

The rank of a matrix is the number of linearly independent rows (or, equivalently, columns) in the matrix.

The rank of a matrix A is often denoted using the function rank().

$$\operatorname{rank}(A)$$

An intuition for rank is to consider it the number of dimensions spanned by all of the vectors within a matrix. For example, a rank of 0 suggests all vectors span a point, a rank of 1 suggests all vectors span a line, and a rank of 2 suggests all vectors span a two-dimensional plane.
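To connect rank with the dimensions spanned, the sketch below builds two made-up 3×3 matrices: one whose rows all lie on a single line through the origin, and one whose rows lie in a plane because the third row is the sum of the first two. `matrix_rank()` reports 1 and 2 respectively.

```python
import numpy as np
from numpy.linalg import matrix_rank

# Every row is a multiple of (1, 2, 3): the rows span a line, so rank 1.
line = np.array([[1, 2, 3],
                 [2, 4, 6],
                 [-1, -2, -3]])

# The third row is the sum of the first two: the rows span a plane, so rank 2.
plane = np.array([[1, 0, 0],
                  [0, 1, 0],
                  [1, 1, 0]])

print(matrix_rank(line), matrix_rank(plane))  # 1 2
```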

The rank is estimated numerically, often using a matrix decomposition method. A common approach is to use the Singular-Value Decomposition or SVD for short.

NumPy provides the matrix_rank() function for calculating the rank of an array. It uses the SVD method to estimate the rank.
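The SVD-based idea can be sketched in a few lines: compute the singular values and count how many are larger than a small tolerance. The hypothetical helper `rank_via_svd()` below assumes the default tolerance described in NumPy's `matrix_rank()` documentation, S.max() × max(M, N) × eps; treat it as an illustration of the approach rather than a replacement for `matrix_rank()`. Unlike `matrix_rank()`, it only accepts 2-D input.

```python
import numpy as np


def rank_via_svd(M, tol=None):
    """Hypothetical helper: estimate rank by counting singular values above a tolerance."""
    M = np.asarray(M, dtype=float)
    # Singular values only; the U and V factors are not needed here.
    s = np.linalg.svd(M, compute_uv=False)
    if tol is None:
        # Assumed default, mirroring the threshold documented for matrix_rank().
        tol = s.max() * max(M.shape) * np.finfo(M.dtype).eps
    return int(np.sum(s > tol))


print(rank_via_svd([[1, 2], [3, 4]]))  # 2
print(rank_via_svd([[1, 2], [1, 2]]))  # 1
print(rank_via_svd([[0, 0], [0, 0]]))  # 0
```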

The example below demonstrates calculating the rank of a vector with non-zero values and of another vector with all zero values.

```python
# vector rank
from numpy import array
from numpy.linalg import matrix_rank
# rank
v1 = array([1,2,3])
print(v1)
vr1 = matrix_rank(v1)
print(vr1)
# zero rank
v2 = array([0,0,0,0,0])
print(v2)
vr2 = matrix_rank(v2)
print(vr2)
```

Running the example prints the first vector and its rank of 1, followed by the second zero vector and its rank of 0.

```
[1 2 3]
1
[0 0 0 0 0]
0
```

The next example makes it clear that the rank is not the number of dimensions of the matrix, but the number of linearly independent directions.

Three examples of a 2×2 matrix are provided demonstrating matrices with rank 0, 1 and 2.

```python
# matrix rank
from numpy import array
from numpy.linalg import matrix_rank
# rank 0
M0 = array([[0,0],[0,0]])
print(M0)
mr0 = matrix_rank(M0)
print(mr0)
# rank 1
M1 = array([[1,2],[1,2]])
print(M1)
mr1 = matrix_rank(M1)
print(mr1)
# rank 2
M2 = array([[1,2],[3,4]])
print(M2)
mr2 = matrix_rank(M2)
print(mr2)
```

Running the example first prints a 2×2 zero matrix and its rank, then a 2×2 matrix with rank 1, and finally a 2×2 matrix with rank 2.

```
[[0 0]
 [0 0]]
0
[[1 2]
 [1 2]]
1
[[1 2]
 [3 4]]
2
```

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Create 5 examples using each operation with your own data.

- Implement each matrix operation manually for matrices defined as lists of lists.

- Search machine learning papers and find 1 example of each operation being used.

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Section 3.4 Determinants, No Bullshit Guide To Linear Algebra, 2017.

- Section 3.5 Matrix inverse, No Bullshit Guide To Linear Algebra, 2017.

- Section 5.1 The Properties of Determinants, Introduction to Linear Algebra, Fifth Edition, 2016.

- Section 2.3 Identity and Inverse Matrices, Deep Learning, 2016.

- Section 2.11 The Determinant, Deep Learning, 2016.

- Section 3.D Invertibility and Isomorphic Vector Spaces, Linear Algebra Done Right, Third Edition, 2015.

- Section 10.A Trace, Linear Algebra Done Right, Third Edition, 2015.

- Section 10.B Determinant, Linear Algebra Done Right, Third Edition, 2015.

API

- numpy.ndarray.T API

- numpy.linalg.inv() API

- numpy.trace() API

- numpy.linalg.det() API

- numpy.linalg.matrix_rank() API

Articles

- Transpose on Wikipedia

- Invertible matrix on Wikipedia

- Trace (linear algebra) on Wikipedia

- Determinant on Wikipedia

- Rank (linear algebra) on Wikipedia

Summary

In this tutorial, you discovered important linear algebra matrix operations used in the description of machine learning methods.

Specifically, you learned:

- The Transpose operation for flipping the dimensions of a matrix.

- The Inverse operation used in solving systems of linear equations.

- The Trace and Determinant operations used as shorthand notation in other matrix operations.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.
