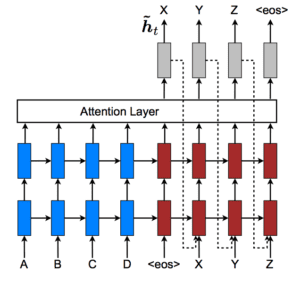

How Does Attention Work in Encoder-Decoder Recurrent Neural Networks

Attention is a mechanism that was developed to improve the performance of the Encoder-Decoder RNN on machine translation. In this tutorial, you will discover the attention mechanism for the Encoder-Decoder model. After completing this tutorial, you will know:

- About the Encoder-Decoder model and attention mechanism for machine translation.
- How to implement the attention mechanism step-by-step.

…
