Density estimation is the problem of estimating the probability distribution for a sample of observations from a problem domain.

There are many techniques for solving density estimation, although a common framework used throughout the field of machine learning is maximum likelihood estimation. Maximum likelihood estimation involves defining a likelihood function for calculating the conditional probability of observing the data sample given a probability distribution and distribution parameters. This approach can be used to search a space of possible distributions and parameters.

This flexible probabilistic framework also provides the foundation for many machine learning algorithms, including important methods such as linear regression and logistic regression for predicting numeric values and class labels respectively, but also more generally for deep learning artificial neural networks.

In this post, you will discover a gentle introduction to maximum likelihood estimation.

After reading this post, you will know:

- Maximum Likelihood Estimation is a probabilistic framework for solving the problem of density estimation.

- It involves maximizing a likelihood function in order to find the probability distribution and parameters that best explain the observed data.

- It provides a framework for predictive modeling in machine learning where finding model parameters can be framed as an optimization problem.


Let’s get started.

A Gentle Introduction to Maximum Likelihood Estimation for Machine Learning

Photo by Guilhem Vellut, some rights reserved.

Overview

This tutorial is divided into three parts; they are:

- Problem of Probability Density Estimation

- Maximum Likelihood Estimation

- Relationship to Machine Learning

Problem of Probability Density Estimation

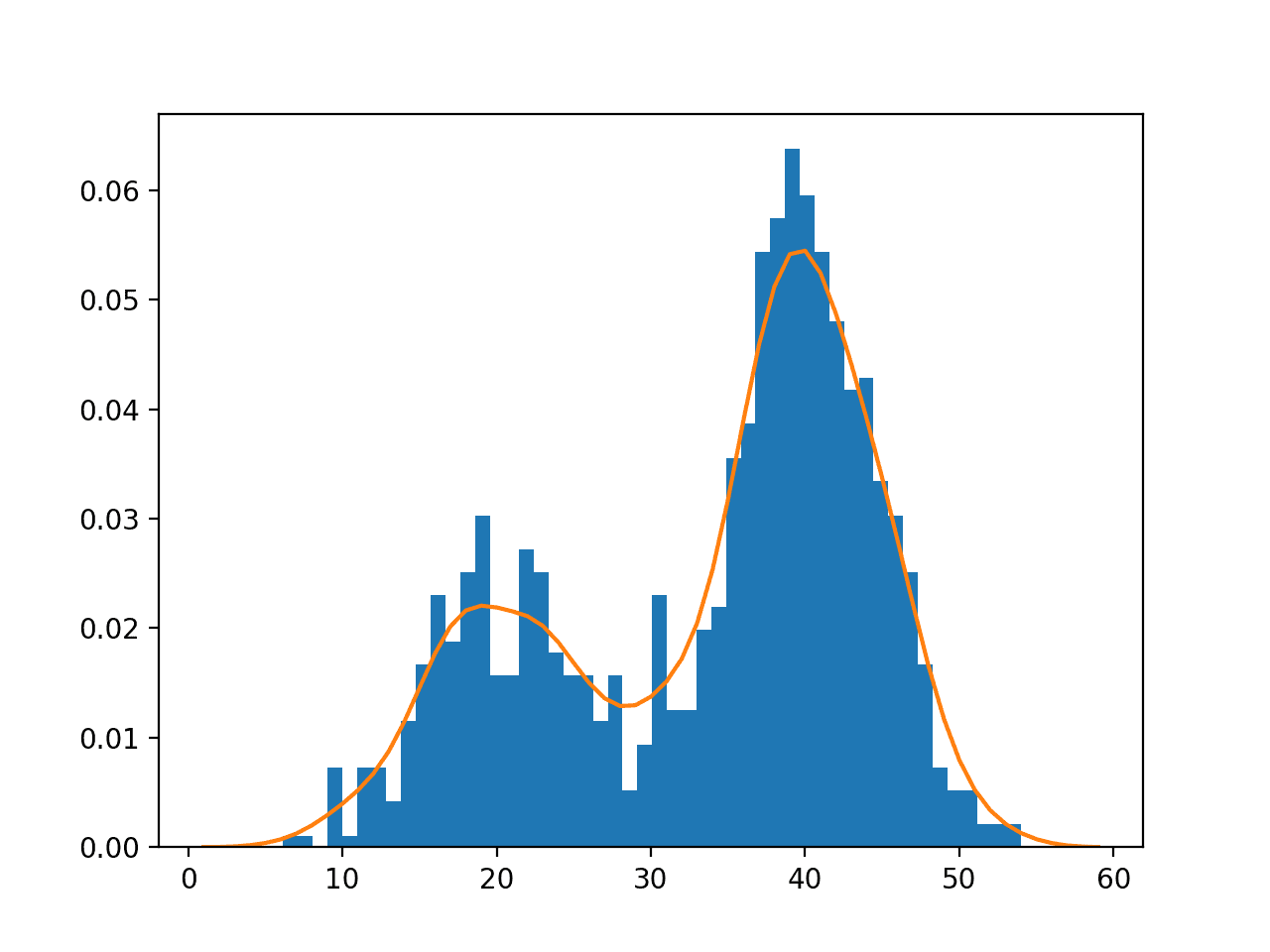

A common modeling problem involves how to estimate a joint probability distribution for a dataset.

For example, consider a sample of observations (X) from a domain (x1, x2, x3, …, xn), where each observation is drawn independently from the domain with the same probability distribution (so-called independent and identically distributed, i.i.d., or close to it).

Density estimation involves selecting a probability distribution function and the parameters of that distribution that best explain the joint probability distribution of the observed data (X).

- How do you choose the probability distribution function?

- How do you choose the parameters for the probability distribution function?

This problem is made more challenging because the sample (X) drawn from the population is often small and noisy, meaning that any evaluation of an estimated probability density function and its parameters will have some error.

There are many techniques for solving this problem, although two common approaches are:

- Maximum a Posteriori (MAP), a Bayesian method.

- Maximum Likelihood Estimation (MLE), a frequentist method.

The main difference is that MLE uses no prior over the parameters, which is equivalent to assuming that all solutions are equally likely beforehand, whereas MAP allows prior information about the form of the solution to be harnessed.
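To make the difference concrete, here is a minimal sketch using a coin-flip (Bernoulli) model. The data and the Beta prior values are made up for illustration and are not from any real dataset. The MAP estimate is the mode of the Beta posterior.

```python
# Hypothetical data: 7 heads observed in 10 coin flips.
heads, flips = 7, 10

# MLE for a Bernoulli parameter is simply the sample proportion.
p_mle = heads / flips

# MAP with an assumed Beta(a, b) prior: the mode of the Beta posterior.
a, b = 5.0, 5.0  # illustrative prior that favours a fair coin
p_map = (heads + a - 1) / (flips + a + b - 2)

# With a flat Beta(1, 1) prior, the MAP estimate reduces to the MLE.
p_map_flat = (heads + 1 - 1) / (flips + 1 + 1 - 2)

print(p_mle, p_map, p_map_flat)
```

With these assumed numbers, the MLE is 0.7, the MAP estimate is pulled toward 0.5 by the prior (11/18, about 0.611), and the flat-prior MAP matches the MLE.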

In this post, we will take a closer look at the MLE method and its relationship to applied machine learning.


Maximum Likelihood Estimation

One solution to probability density estimation is referred to as Maximum Likelihood Estimation, or MLE for short.

Maximum Likelihood Estimation involves treating the problem as an optimization or search problem, where we seek a set of parameters that results in the best fit for the joint probability of the data sample (X).

First, it involves defining a parameter called theta that defines both the choice of the probability density function and the parameters of that distribution. It may be a vector of numerical values whose values change smoothly and map to different probability distributions and their parameters.

In Maximum Likelihood Estimation, we wish to maximize the probability of observing the data from the joint probability distribution given a specific probability distribution and its parameters, stated formally as:

- P(X | theta)

This conditional probability is often stated using the semicolon (;) notation instead of the bar notation (|) because theta is not a random variable, but instead an unknown parameter. For example:

- P(X ; theta)

or

- P(x1, x2, x3, …, xn ; theta)

This resulting conditional probability is referred to as the likelihood of observing the data given the model parameters and written using the notation L() to denote the likelihood function. For example:

- L(X ; theta)

The objective of Maximum Likelihood Estimation is to find the set of parameters (theta) that maximizes the likelihood function, i.e. results in the largest likelihood value.

- maximize L(X ; theta)

We can unpack the conditional probability calculated by the likelihood function.

Given that the sample is comprised of n examples, we can frame this as the joint probability of the observed data samples x1, x2, x3, …, xn in X given the probability distribution parameters (theta).

- L(x1, x2, x3, …, xn ; theta)

Because the observations are assumed to be i.i.d., the joint probability distribution can be restated as the multiplication of the conditional probability for observing each example given the distribution parameters.

- product i to n P(xi ; theta)
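As a rough sketch of this product form, the snippet below evaluates the likelihood of a small made-up sample under a Gaussian distribution for two candidate settings of theta = (mu, sigma). The Gaussian choice, the sample values, and the function names are assumptions for illustration only.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def likelihood(sample, mu, sigma):
    """L(X ; theta) as the product of per-observation densities (i.i.d. assumption)."""
    result = 1.0
    for x in sample:
        result *= gaussian_pdf(x, mu, sigma)
    return result

sample = [4.8, 5.1, 5.3, 4.9, 5.4]  # made-up observations

# Compare two candidate parameter settings.
near = likelihood(sample, mu=5.0, sigma=0.5)
far = likelihood(sample, mu=7.0, sigma=0.5)
print(near, far)
```

The candidate with a mean close to the data yields the larger likelihood, which is the quantity MLE seeks to maximize.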

Multiplying many small probabilities together can be numerically unstable in practice. Therefore, it is common to restate this problem as the sum of the log conditional probabilities of observing each example given the model parameters.

- sum i to n log(P(xi ; theta))

Here, the logarithm with base e, called the natural logarithm, is commonly used.
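The numerical issue is easy to reproduce. The sketch below uses a hypothetical list of 2,000 per-example probabilities, each equal to 0.01, and compares the raw product with the sum of logs.

```python
import math

probs = [0.01] * 2000  # hypothetical per-example probabilities

# Direct product: the true value (1e-4000) is too small for a 64-bit float.
product = 1.0
for p in probs:
    product *= p

# Sum of natural logs: the same information, but numerically stable.
log_sum = sum(math.log(p) for p in probs)

print(product)  # underflows to 0.0
print(log_sum)
```

The product collapses to zero, so every candidate theta would look equally bad, whereas the log-sum remains a usable finite number.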

This product over many probabilities can be inconvenient […] it is prone to numerical underflow. To obtain a more convenient but equivalent optimization problem, we observe that taking the logarithm of the likelihood does not change its arg max but does conveniently transform a product into a sum

— Page 132, Deep Learning, 2016.

Given the frequent use of log in the likelihood function, it is commonly referred to as a log-likelihood function.

It is common in optimization problems to prefer to minimize the cost function, rather than to maximize it. Therefore, the negative of the log-likelihood function is used, referred to generally as a Negative Log-Likelihood (NLL) function.

- minimize -sum i to n log(P(xi ; theta))
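As a small illustration, assume the data is modeled with a Gaussian distribution. In that case the minimizer of the NLL is known in closed form (the sample mean and the square root of the average squared deviation), and we can check that nearby parameter values give a larger NLL. The sample and function names below are made up for the sketch.

```python
import math

def gaussian_nll(sample, mu, sigma):
    """Negative log-likelihood of an i.i.d. sample under a Gaussian(mu, sigma)."""
    n = len(sample)
    squared = sum((x - mu) ** 2 for x in sample)
    return n * math.log(sigma) + 0.5 * n * math.log(2.0 * math.pi) + squared / (2.0 * sigma ** 2)

sample = [4.8, 5.1, 5.3, 4.9, 5.4]  # made-up observations
n = len(sample)

# Closed-form maximum likelihood estimates for a Gaussian.
mu_hat = sum(sample) / n
sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in sample) / n)

best = gaussian_nll(sample, mu_hat, sigma_hat)

# Perturbing either parameter should only increase the NLL.
perturbed = [
    gaussian_nll(sample, mu_hat + d_mu, sigma_hat + d_sigma)
    for d_mu, d_sigma in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
]
print(mu_hat, sigma_hat, best, perturbed)
```

For distributions without a closed-form solution, the same NLL function would instead be handed to a numerical optimizer.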

In software, we often phrase both as minimizing a cost function. Maximum likelihood thus becomes minimization of the negative log-likelihood (NLL) …

— Page 133, Deep Learning, 2016.

Relationship to Machine Learning

This problem of density estimation is directly related to applied machine learning.

We can frame the problem of fitting a machine learning model as the problem of probability density estimation. Specifically, the choice of model and model parameters is referred to as a modeling hypothesis h, and the problem involves finding h that best explains the data X.

- P(X ; h)

We can, therefore, find the modeling hypothesis that maximizes the likelihood function.

- maximize L(X ; h)

Or, more fully:

- maximize sum i to n log(P(xi ; h))

This provides the basis for estimating the probability density of a dataset, typically used in unsupervised machine learning algorithms; for example:

- Clustering algorithms.

Using the expected log joint probability as a key quantity for learning in a probability model with hidden variables is better known in the context of the celebrated “expectation maximization” or EM algorithm.

— Page 365, Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

The Maximum Likelihood Estimation framework is also a useful tool for supervised machine learning.

This applies to data where we have input and output variables, where the output variable may be a numerical value or a class label in the case of regression and classification predictive modeling respectively.

We can state this as the conditional probability of the output (y) given the input (X) given the modeling hypothesis (h).

- maximize L(y|X ; h)

Or, more fully:

- maximize sum i to n log(P(yi|xi ; h))

The maximum likelihood estimator can readily be generalized to the case where our goal is to estimate a conditional probability P(y | x ; theta) in order to predict y given x. This is actually the most common situation because it forms the basis for most supervised learning.

— Page 133, Deep Learning, 2016.

This means that the same Maximum Likelihood Estimation framework that is generally used for density estimation can be used to find a supervised learning model and parameters.

This provides the basis for foundational linear modeling techniques, such as:

- Linear Regression, for predicting a numerical value.

- Logistic Regression, for binary classification.

In the case of linear regression, the model is constrained to a line and involves finding a set of coefficients for the line that best fits the observed data. Fortunately, this problem can be solved analytically (e.g. directly using linear algebra).
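Here is a minimal sketch of the analytical solution for a single input variable. It assumes Gaussian noise with constant variance, in which case maximizing the conditional log-likelihood is equivalent to minimizing the sum of squared errors. The data values are made up.

```python
# Made-up data, roughly following y = 2x + 1 with some noise.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

n = len(xs)
x_mean = sum(xs) / n
y_mean = sum(ys) / n

# Closed-form least squares (and Gaussian maximum likelihood) coefficients.
slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
         / sum((x - x_mean) ** 2 for x in xs))
intercept = y_mean - slope * x_mean

def sse(a, c):
    """Sum of squared errors for the line y = a * x + c."""
    return sum((y - (a * x + c)) ** 2 for x, y in zip(xs, ys))

print(slope, intercept, sse(slope, intercept))
```

Any other choice of slope or intercept gives a larger sum of squared errors, and therefore a lower likelihood under the assumed noise model.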

In the case of logistic regression, the model defines a line and involves finding a set of coefficients for the line that best separates the classes. This cannot be solved analytically and is often solved by searching the space of possible coefficient values using an efficient optimization algorithm such as the BFGS algorithm or variants.

Both methods can also be solved less efficiently using a more general optimization algorithm such as stochastic gradient descent.
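As a rough sketch of the general-purpose route, the snippet below fits a one-input logistic regression by minimizing the average negative log-likelihood with plain batch gradient descent (not BFGS, and not the stochastic variant). The data, learning rate, and iteration count are all assumed values chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean_nll(data, w, b):
    """Average negative log-likelihood of the labels under a logistic model."""
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)

# Made-up, non-separable one-dimensional data: (input, class label).
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 1),
        (2.2, 0), (2.5, 1), (3.0, 1), (3.5, 1)]

w, b = 0.0, 0.0
learning_rate = 0.5  # assumed step size, small enough for this dataset
initial_nll = mean_nll(data, w, b)

for _ in range(2000):
    # Gradient of the average NLL with respect to w and b.
    grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in data) / len(data)
    grad_b = sum((sigmoid(w * x + b) - y) for x, y in data) / len(data)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_nll = mean_nll(data, w, b)
print(initial_nll, final_nll, w, b)
```

The NLL falls from its starting value as the coefficients are updated, which is the same objective a quasi-Newton method such as BFGS would minimize, typically in far fewer iterations.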

In fact, most machine learning models can be framed under the maximum likelihood estimation framework, providing a useful and consistent way to approach predictive modeling as an optimization problem.

An important benefit of the maximum likelihood estimator in machine learning is that as the size of the dataset increases, the quality of the estimator continues to improve.
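This behaviour can be illustrated with a small simulation. The sketch below draws samples of increasing size from a Gaussian with an assumed true mean and standard deviation, and reports how far the maximum likelihood estimate of the mean is from the true value. The seed and parameter values are arbitrary.

```python
import random

random.seed(1)
true_mu, true_sigma = 5.0, 2.0  # assumed ground truth for the simulation

def mle_mean(n):
    """Maximum likelihood estimate of a Gaussian mean from n simulated draws."""
    sample = [random.gauss(true_mu, true_sigma) for _ in range(n)]
    return sum(sample) / n

for n in (10, 1000, 100000):
    print(n, abs(mle_mean(n) - true_mu))
```

The error for any single run is random, but it tends to shrink as n grows, roughly in proportion to one over the square root of n for this estimator.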

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Chapter 5 Machine Learning Basics, Deep Learning, 2016.

- Chapter 2 Probability Distributions, Pattern Recognition and Machine Learning, 2006.

- Chapter 8 Model Inference and Averaging, The Elements of Statistical Learning, 2016.

- Chapter 9 Probabilistic methods, Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

- Chapter 22 Maximum Likelihood and Clustering, Information Theory, Inference and Learning Algorithms, 2003.

- Chapter 8 Learning distributions, Bayesian Reasoning and Machine Learning, 2011.

Articles

- Maximum likelihood estimation, Wikipedia.

- Maximum Likelihood, Wolfram MathWorld.

- Likelihood function, Wikipedia.

- Some problems understanding the definition of a function in a maximum likelihood method, CrossValidated.

Summary

In this post, you discovered a gentle introduction to maximum likelihood estimation.

Specifically, you learned:

- Maximum Likelihood Estimation is a probabilistic framework for solving the problem of density estimation.

- It involves maximizing a likelihood function in order to find the probability distribution and parameters that best explain the observed data.

- It provides a framework for predictive modeling in machine learning where finding model parameters can be framed as an optimization problem.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thanks for your explanation. Highly insightful.

I want to ask: in your practical experience, does using MLE as an unsupervised learning step to first produce a better estimate of the observed data, and then using the estimated data as input for supervised learning, help improve the generalisation capability of a model?

Thanks.

It is not a technique, more of a probabilistic framework for framing the optimization problem to solve when fitting a model.

Such as linear regression:

https://machinelearningmastery.com/linear-regression-with-maximum-likelihood-estimation/

This product over many probabilities can be inconvenient […] it is prone to numerical underflow. To obtain a more convenient but equivalent optimization problem, we observe that taking the logarithm of the likelihood does not change its arg max but does conveniently transform a product into a sum

— Page 132, Deep Learning, 2016.

This quote is from Page 128 – based on the edition of the book in the link

Thanks George.

“We can state this as the conditional probability of the output X given the input (y) given the modeling hypothesis (h).”

Shouldn’t this be “the output (y) given the input (X) given the modeling hypothesis (h)”?

Given that we are trying to maximize the probability that given the input and parameters would give us the output.

It would be consistent with maximize L(y|X ; h)

Yes, that’s a typo.

Fixed. Thanks for pointing it out!

How can we know the likelihood function from the data given?

It is for an algorithm, not for data.

Good explanation. I like how you link the same technique across different fields like deep learning and unsupervised learning. Ultimately, if you understand it, you will see that the underlying mechanism is the same. Thanks for the article.

You’re welcome.

Dear Jason,

I have a question about ‘MLE applied to solve problem of density function’ and hope to get some help from you.

———————————

For the definition of MLE,

– it is used to estimate ‘Parameters’ that can maximize the likelihood of an event happened.

– For example, Likelihood (Height > 170 |mean = 10, standard devi. = 1.5). The MLE is trying to change two parameters ( which are mean and standard deviation), and find the value of two parameters that can result in the maximum likelihood for Height > 170 happened.

———————————

When we use MLE to solve the problem of density function, basically we just

(1) change the ‘mean = 10, standard devi. = 1.5’ into –> ‘theta (θ) that defines both the choice of the probability density function and the parameters of that distribution.’

(2) change the ‘Height > 170’ into –> sample of observation (X) from a domain (x1, x2, x3, · · · , xn),

Simply, we just use the logic/idea/framework of MLE to solve the problem of density function (Just change some elements of MLE framework).

After modifying the framework of MLE, the parameters (associated with the maximum likelihood or peak value) represents the parameters of probability density function (PDF) that can best fit for probability distribution of the observed data.

Is that correct?

Sincerely,

Yunhao

Hi Jason,

In the book, you write ‘MLE is a probabilistic framework for estimating the parameters of a model. We wish to maximize the conditional probability of observing the data (X) given a specific probability distribution and its parameters’

May I ask why ‘ parameters that maximize the conditional probability of observing the data’ are parameters that result in/belong to the best-fit Probability Density (PDF)?

I cannot understand how to figure out the relationship between maximum likelihood and best-fit.

Hi Jason,

Firstly, thank you for the detailed explanation; it really helped clarify the topic. However, I find myself a bit confused about the notation used for likelihood. In your post, you denoted likelihood as L(X | theta), implying that we aim to find theta parameters that maximize the likelihood of observing the given data. Therefore, wouldn’t it be more appropriate to use L(theta|X) instead? I also find this sort of notation more commonly used across different articles and blog posts.

Thanks

Hi Suraj… Your question brings up a common point of confusion in statistics regarding the notation and interpretation of likelihood and probability. Let’s clarify this.

### Probability vs. Likelihood

– **Probability** of observing data \(X\) given parameters \(\theta\), denoted as \(P(X | \theta)\), quantifies the probability of seeing the data \(X\) if the parameters \(\theta\) are known. This is what you’d use in a probabilistic model to predict data outcomes based on known parameters.

– **Likelihood** of parameters \(\theta\) given observed data \(X\), denoted as \(L(\theta | X)\) or sometimes simply \(L(\theta)\), is a function of \(\theta\) for a fixed \(X\). It represents how “likely” different parameter values are given the data you have observed. Unlike a probability, likelihood is not constrained to sum up to 1 over \(\theta\).

### Why \(L(\theta | X)\) and not \(L(X | \theta)\)?

The notation \(L(\theta | X)\) is indeed used to represent the likelihood of parameters given the data, but it’s crucial to understand that this is not the same as conditional probability notation even though it looks similar. The likelihood function \(L(\theta | X)\) is a function of \(\theta\) with \(X\) held fixed, essentially flipping the conditioning found in probability notation.

### The Essence of the Confusion

The confusion often arises because the likelihood function is mathematically equivalent to the probability of observing the data given the parameters, i.e., \(L(\theta | X) = P(X | \theta)\), but with a different interpretation. When we talk about likelihood, we’re focusing on how the parameters \(\theta\) fit the observed data \(X\), not on the probability of the data per se.

– **In probability:** We know \(\theta\) and ask, “What’s the probability of seeing \(X\)?”

– **In likelihood:** We know \(X\) and ask, “How likely are different values of \(\theta\)?”

### Maximizing Likelihood

When we say we aim to find the parameters \(\theta\) that maximize the likelihood of observing the given data \(X\), we’re seeking the parameter values under which the observed data would be most probable. This process is central to many statistical estimation techniques, including maximum likelihood estimation (MLE).

In summary, while the notation might suggest a probability statement, it’s essential to recognize the distinct interpretation and purpose of likelihood in statistical analysis. The use of \(L(\theta | X)\) is correct in the context of estimating parameters that make the observed data most likely.