Convolutional neural networks are now capable of outperforming humans on some computer vision tasks, such as classifying images.

That is, given a photograph of an object, answer the question as to which of 1,000 specific objects the photograph shows.

A competition-winning model for this task is the VGG model by researchers at Oxford. What is important about this model, besides its capability of classifying objects in photographs, is that the model weights are freely available and can be loaded and used in your own models and applications.

In this tutorial, you will discover the VGG convolutional neural network models for image classification.

After completing this tutorial, you will know:

- About the ImageNet dataset and competition and the VGG winning models.

- How to load the VGG model in Keras and summarize its structure.

- How to use the loaded VGG model to classifying objects in ad hoc photographs.

Kick-start your project with my new book Deep Learning With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

Tutorial Overview

This tutorial is divided into 4 parts; they are:

- ImageNet

- The Oxford VGG Models

- Load the VGG Model in Keras

- Develop a Simple Photo Classifier

ImageNet

ImageNet is a research project to develop a large database of images with annotations, e.g. images and their descriptions.

The images and their annotations have been the basis for an image classification challenge called the ImageNet Large Scale Visual Recognition Challenge or ILSVRC since 2010. The result is that research organizations battle it out on pre-defined datasets to see who has the best model for classifying the objects in images.

The ImageNet Large Scale Visual Recognition Challenge is a benchmark in object category classification and detection on hundreds of object categories and millions of images. The challenge has been run annually from 2010 to present, attracting participation from more than fifty institutions.

— ImageNet Large Scale Visual Recognition Challenge, 2015.

For the classification task, images must be classified into one of 1,000 different categories.

For the last few years very deep convolutional neural network models have been used to win these challenges and results on the tasks have exceeded human performance.

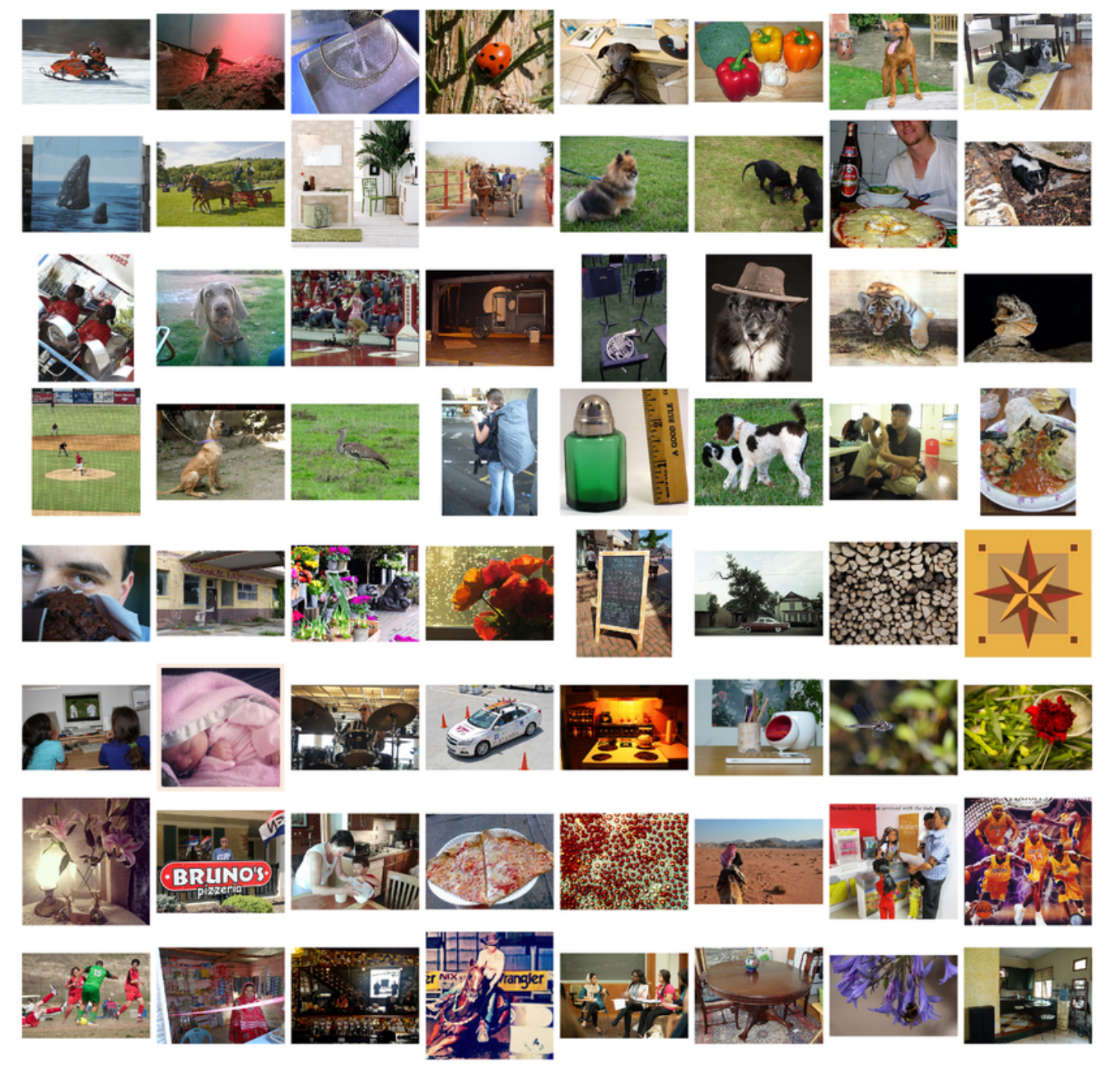

Sample of Images from the ImageNet Dataset used in the ILSVRC Challenge

Taken From “ImageNet Large Scale Visual Recognition Challenge”, 2015.

The Oxford VGG Models

Researchers from the Oxford Visual Geometry Group, or VGG for short, participate in the ILSVRC challenge.

In 2014, convolutional neural network models (CNN) developed by the VGG won the image classification tasks.

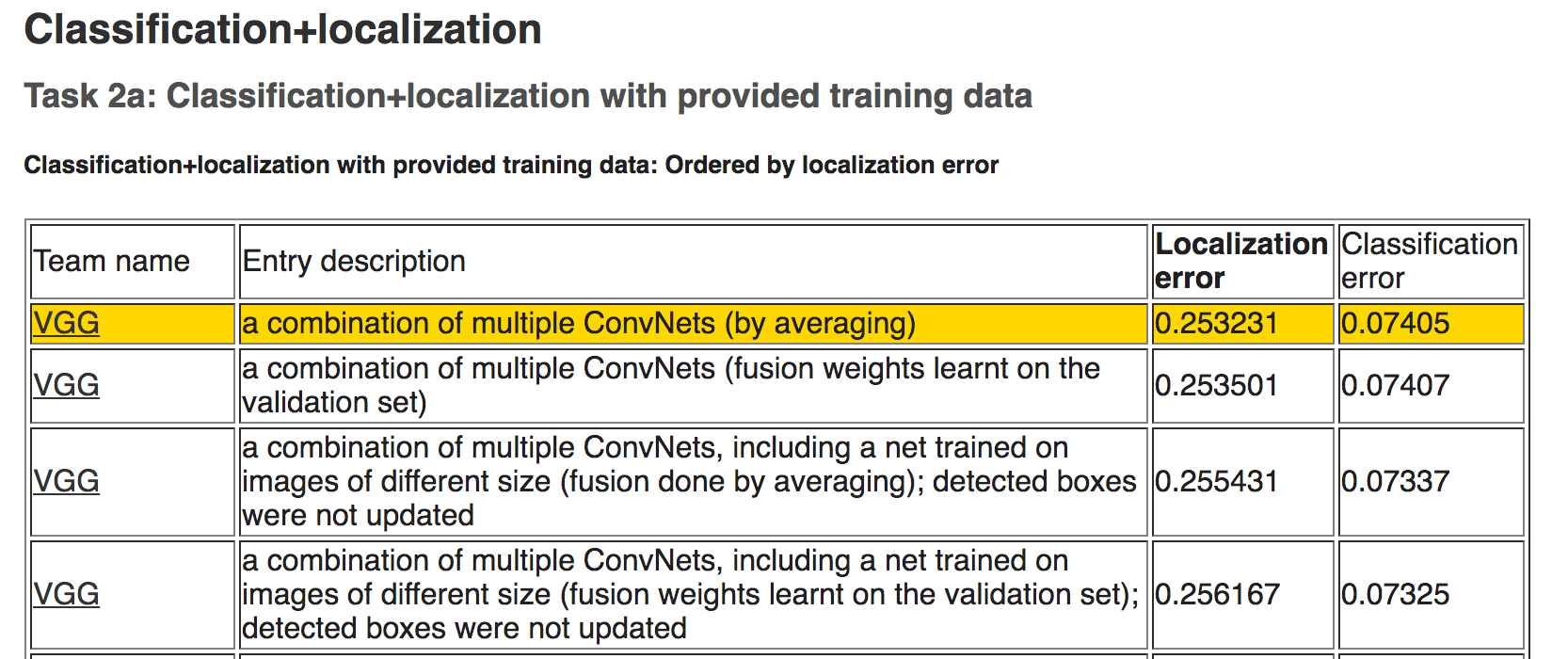

ILSVRC Results in 2014 for the Classification task

After the competition, the participants wrote up their findings in the paper:

They also made their models and learned weights available online.

This allowed other researchers and developers to use a state-of-the-art image classification model in their own work and programs.

This helped to fuel a rash of transfer learning work where pre-trained models are used with minor modification on wholly new predictive modeling tasks, harnessing the state-of-the-art feature extraction capabilities of proven models.

… we come up with significantly more accurate ConvNet architectures, which not only achieve the state-of-the-art accuracy on ILSVRC classification and localisation tasks, but are also applicable to other image recognition datasets, where they achieve excellent performance even when used as a part of a relatively simple pipelines (e.g. deep features classified by a linear SVM without fine-tuning). We have released our two best-performing models to facilitate further research.

— Very Deep Convolutional Networks for Large-Scale Image Recognition, 2014.

VGG released two different CNN models, specifically a 16-layer model and a 19-layer model.

Refer to the paper for the full details of these models.

The VGG models are not longer state-of-the-art by only a few percentage points. Nevertheless, they are very powerful models and useful both as image classifiers and as the basis for new models that use image inputs.

In the next section, we will see how we can use the VGG model directly in Keras.

Load the VGG Model in Keras

The VGG model can be loaded and used in the Keras deep learning library.

Keras provides an Applications interface for loading and using pre-trained models.

Using this interface, you can create a VGG model using the pre-trained weights provided by the Oxford group and use it as a starting point in your own model, or use it as a model directly for classifying images.

In this tutorial, we will focus on the use case of classifying new images using the VGG model.

Keras provides both the 16-layer and 19-layer version via the VGG16 and VGG19 classes. Let’s focus on the VGG16 model.

The model can be created as follows:

|

1 2 |

from keras.applications.vgg16 import VGG16 model = VGG16() |

That’s it.

The first time you run this example, Keras will download the weight files from the Internet and store them in the ~/.keras/models directory.

Note that the weights are about 528 megabytes, so the download may take a few minutes depending on the speed of your Internet connection.

The weights are only downloaded once. The next time you run the example, the weights are loaded locally and the model should be ready to use in seconds.

We can use the standard Keras tools for inspecting the model structure.

For example, you can print a summary of the network layers as follows:

|

1 2 3 |

from keras.applications.vgg16 import VGG16 model = VGG16() print(model.summary()) |

You can see that the model is huge.

You can also see that, by default, the model expects images as input with the size 224 x 224 pixels with 3 channels (e.g. color).

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 |

_________________________________________________________________ Layer (type) Output Shape Param # ================================================================= input_1 (InputLayer) (None, 224, 224, 3) 0 _________________________________________________________________ block1_conv1 (Conv2D) (None, 224, 224, 64) 1792 _________________________________________________________________ block1_conv2 (Conv2D) (None, 224, 224, 64) 36928 _________________________________________________________________ block1_pool (MaxPooling2D) (None, 112, 112, 64) 0 _________________________________________________________________ block2_conv1 (Conv2D) (None, 112, 112, 128) 73856 _________________________________________________________________ block2_conv2 (Conv2D) (None, 112, 112, 128) 147584 _________________________________________________________________ block2_pool (MaxPooling2D) (None, 56, 56, 128) 0 _________________________________________________________________ block3_conv1 (Conv2D) (None, 56, 56, 256) 295168 _________________________________________________________________ block3_conv2 (Conv2D) (None, 56, 56, 256) 590080 _________________________________________________________________ block3_conv3 (Conv2D) (None, 56, 56, 256) 590080 _________________________________________________________________ block3_pool (MaxPooling2D) (None, 28, 28, 256) 0 _________________________________________________________________ block4_conv1 (Conv2D) (None, 28, 28, 512) 1180160 _________________________________________________________________ block4_conv2 (Conv2D) (None, 28, 28, 512) 2359808 _________________________________________________________________ block4_conv3 (Conv2D) (None, 28, 28, 512) 2359808 _________________________________________________________________ block4_pool (MaxPooling2D) (None, 14, 14, 512) 0 _________________________________________________________________ block5_conv1 (Conv2D) (None, 14, 14, 512) 2359808 _________________________________________________________________ block5_conv2 (Conv2D) (None, 14, 14, 512) 2359808 _________________________________________________________________ block5_conv3 (Conv2D) (None, 14, 14, 512) 2359808 _________________________________________________________________ block5_pool (MaxPooling2D) (None, 7, 7, 512) 0 _________________________________________________________________ flatten (Flatten) (None, 25088) 0 _________________________________________________________________ fc1 (Dense) (None, 4096) 102764544 _________________________________________________________________ fc2 (Dense) (None, 4096) 16781312 _________________________________________________________________ predictions (Dense) (None, 1000) 4097000 ================================================================= Total params: 138,357,544 Trainable params: 138,357,544 Non-trainable params: 0 _________________________________________________________________ |

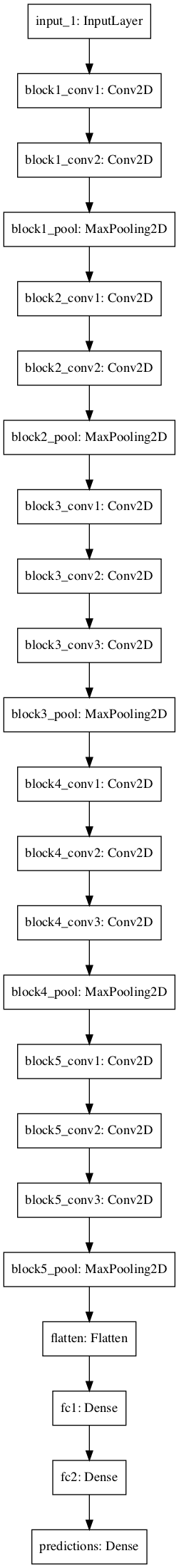

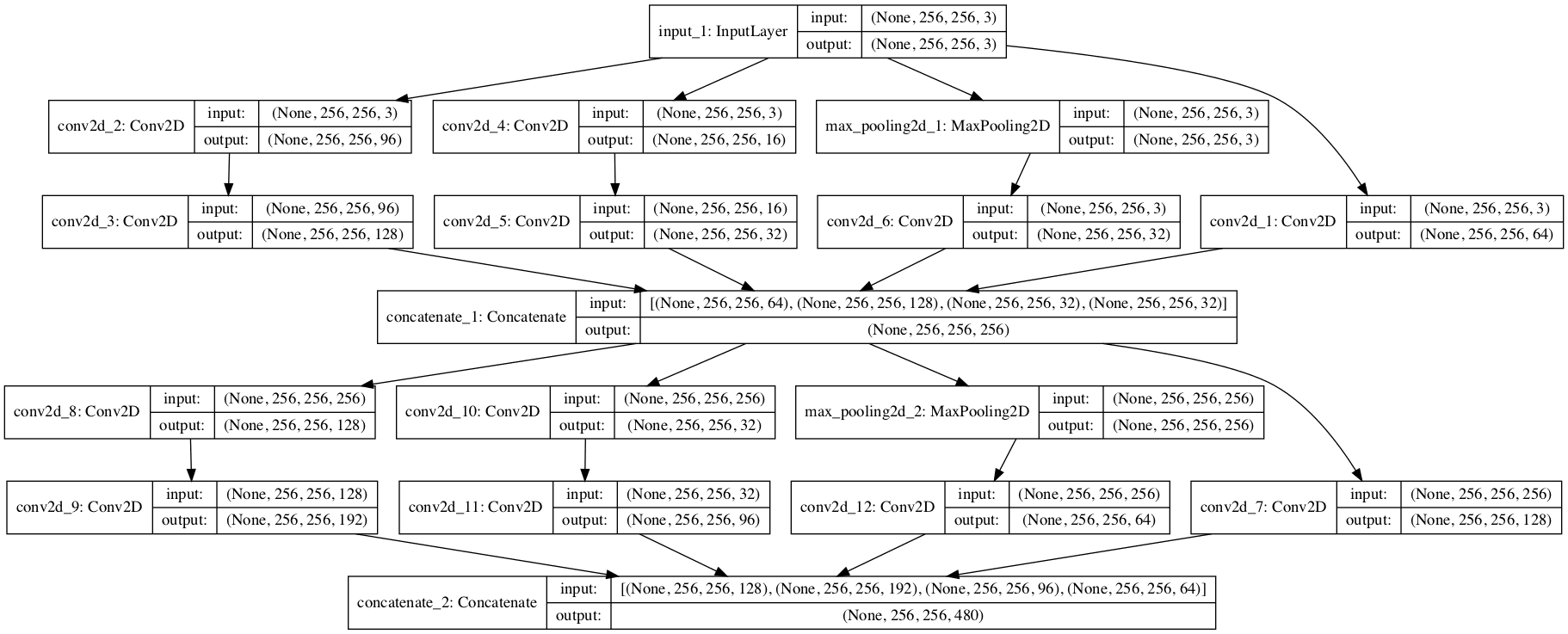

We can also create a plot of the layers in the VGG model, as follows:

|

1 2 3 4 |

from keras.applications.vgg16 import VGG16 from keras.utils.vis_utils import plot_model model = VGG16() plot_model(model, to_file='vgg.png') |

Again, because the model is large, the plot is a little too large and perhaps unreadable. Nevertheless, it is provided below.

Plot of Layers in the VGG Model

The VGG() class takes a few arguments that may only interest you if you are looking to use the model in your own project, e.g. for transfer learning.

For example:

- include_top (True): Whether or not to include the output layers for the model. You don’t need these if you are fitting the model on your own problem.

- weights (‘imagenet‘): What weights to load. You can specify None to not load pre-trained weights if you are interested in training the model yourself from scratch.

- input_tensor (None): A new input layer if you intend to fit the model on new data of a different size.

- input_shape (None): The size of images that the model is expected to take if you change the input layer.

- pooling (None): The type of pooling to use when you are training a new set of output layers.

- classes (1000): The number of classes (e.g. size of output vector) for the model.

Next, let’s look at using the loaded VGG model to classify ad hoc photographs.

Develop a Simple Photo Classifier

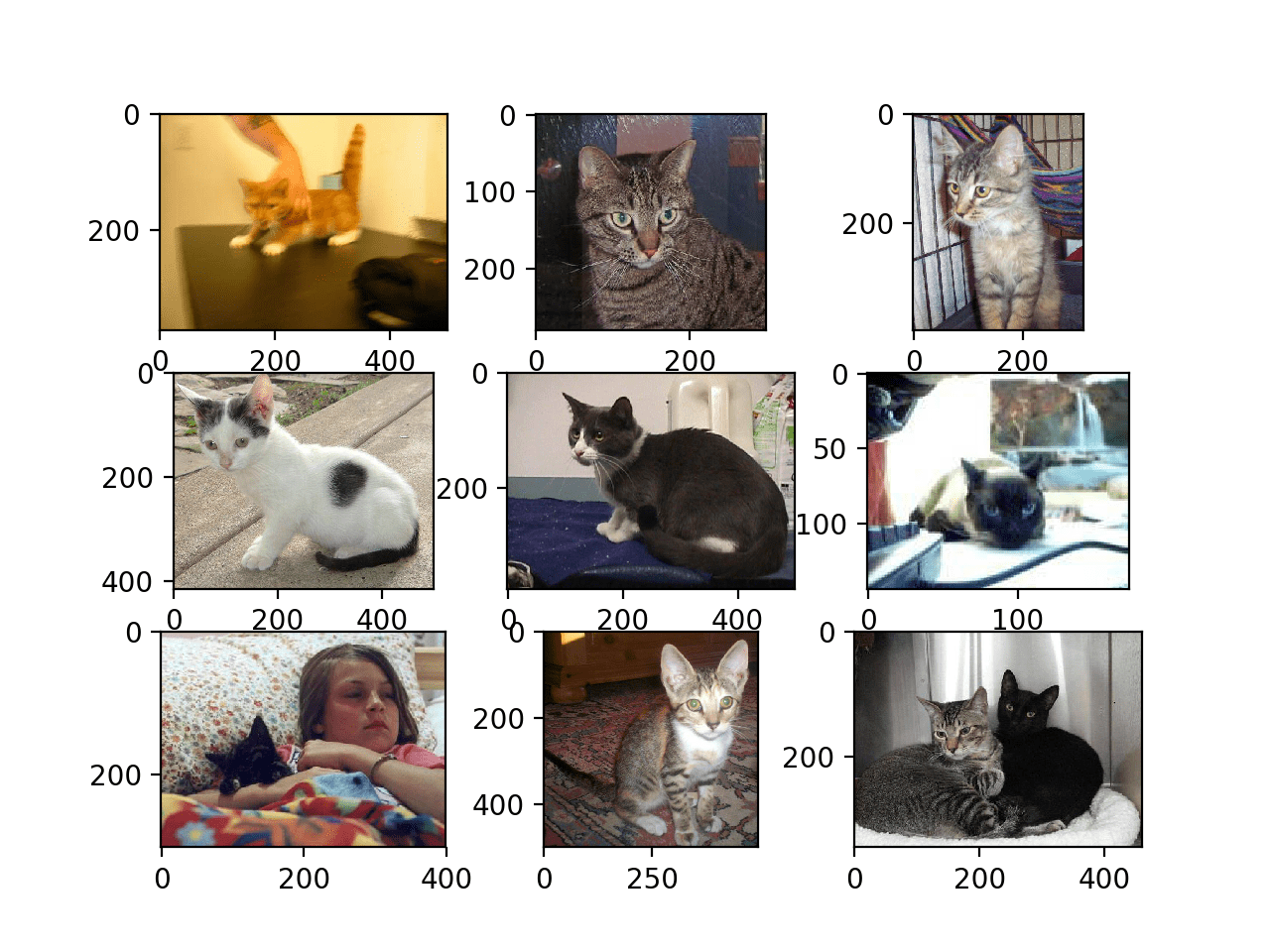

Let’s develop a simple image classification script.

1. Get a Sample Image

First, we need an image we can classify.

You can download a random photograph of a coffee mug from Flickr here.

Coffee Mug

Photo by jfanaian, some rights reserved.

Download the image and save it to your current working directory with the filename ‘mug.jpg‘.

2. Load the VGG Model

Load the weights for the VGG-16 model, as we did in the previous section.

|

1 2 3 |

from keras.applications.vgg16 import VGG16 # load the model model = VGG16() |

3. Load and Prepare Image

Next, we can load the image as pixel data and prepare it to be presented to the network.

Keras provides some tools to help with this step.

First, we can use the load_img() function to load the image and resize it to the required size of 224×224 pixels.

|

1 2 3 |

from keras.preprocessing.image import load_img # load an image from file image = load_img('mug.jpg', target_size=(224, 224)) |

Next, we can convert the pixels to a NumPy array so that we can work with it in Keras. We can use the img_to_array() function for this.

|

1 2 3 |

from keras.preprocessing.image import img_to_array # convert the image pixels to a numpy array image = img_to_array(image) |

The network expects one or more images as input; that means the input array will need to be 4-dimensional: samples, rows, columns, and channels.

We only have one sample (one image). We can reshape the array by calling reshape() and adding the extra dimension.

|

1 2 |

# reshape data for the model image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2])) |

Next, the image pixels need to be prepared in the same way as the ImageNet training data was prepared. Specifically, from the paper:

The only preprocessing we do is subtracting the mean RGB value, computed on the training set, from each pixel.

— Very Deep Convolutional Networks for Large-Scale Image Recognition, 2014.

Keras provides a function called preprocess_input() to prepare new input for the network.

|

1 2 3 |

from keras.applications.vgg16 import preprocess_input # prepare the image for the VGG model image = preprocess_input(image) |

We are now ready to make a prediction for our loaded and prepared image.

4. Make a Prediction

We can call the predict() function on the model in order to get a prediction of the probability of the image belonging to each of the 1000 known object types.

|

1 2 |

# predict the probability across all output classes yhat = model.predict(image) |

Nearly there, now we need to interpret the probabilities.

5. Interpret Prediction

Keras provides a function to interpret the probabilities called decode_predictions().

It can return a list of classes and their probabilities in case you would like to present the top 3 objects that may be in the photo.

We will just report the first most likely object.

|

1 2 3 4 5 6 7 |

from keras.applications.vgg16 import decode_predictions # convert the probabilities to class labels label = decode_predictions(yhat) # retrieve the most likely result, e.g. highest probability label = label[0][0] # print the classification print('%s (%.2f%%)' % (label[1], label[2]*100)) |

And that’s it.

Complete Example

Tying all of this together, the complete example is listed below:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

from keras.preprocessing.image import load_img from keras.preprocessing.image import img_to_array from keras.applications.vgg16 import preprocess_input from keras.applications.vgg16 import decode_predictions from keras.applications.vgg16 import VGG16 # load the model model = VGG16() # load an image from file image = load_img('mug.jpg', target_size=(224, 224)) # convert the image pixels to a numpy array image = img_to_array(image) # reshape data for the model image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2])) # prepare the image for the VGG model image = preprocess_input(image) # predict the probability across all output classes yhat = model.predict(image) # convert the probabilities to class labels label = decode_predictions(yhat) # retrieve the most likely result, e.g. highest probability label = label[0][0] # print the classification print('%s (%.2f%%)' % (label[1], label[2]*100)) |

Running the example, we can see that the image is correctly classified as a “coffee mug” with a 75% likelihood.

|

1 |

coffee_mug (75.27%) |

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Create a Function. Update the example and add a function that given an image filename and the loaded model will return the classification result.

- Command Line Tool. Update the example so that given an image filename on the command line, the program will report the classification for the image.

- Report Multiple Classes. Update the example to report the top 5 most likely classes for a given image and their probabilities.

Further Reading

This section provides more resources on the topic if you are looking go deeper.

- ImageNet

- ImageNet on Wikipedia

- Very Deep Convolutional Networks for Large-Scale Image Recognition, 2015.

- Very Deep Convolutional Networks for Large-Scale Visual Recognition, at Oxford.

- Building powerful image classification models using very little data, 2016.

- Keras Applications API

- Keras weight files files

Summary

In this tutorial, you discovered the VGG convolutional neural network models for image classification.

Specifically, you learned:

- About the ImageNet dataset and competition and the VGG winning models.

- How to load the VGG model in Keras and summarize its structure.

- How to use the loaded VGG model to classifying objects in ad hoc photographs.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thank you Jason !

You’re welcome.

hi, i’m a PHD researcher, i want to applay this method on a desertic zone to detect dunes, is it possible?

Perhaps. Use the model as a starting point (transfer learning) and re-train the classification backend of the model for your specific problem.

More about this here:

https://machinelearningmastery.com/transfer-learning-for-deep-learning/

Hello, I am new in this area and for my master thesis I need to work on plant leaf diseases detection using a new neural network architecture without enough code that is what my supervisor told me so if you can give an hint because I am a bit loss. thanks

What does “without enough code” mean?

Hey Jason Brownlee could you help me with a deep learning project on explicit content detection?

this is my twitter handle: wilson_exex

i really need help to do this project

I don’t have the capacity to join your project, sorry.

Thank you, Jason. Very interest work.

From this point we continue our journey toward Computer vision?

If it is possible, tell us please in future works about Region of interest technique. It is difficult to understand for beginner, but very useful in practice.

Great suggestion, thanks Alexander.

For the next few months the focus will be NLP with posts related to my new book on the topic.

I don’t want to crash your party , but…

http://www.bbc.com/news/technology-41845878

Yes I saw that.

We are still making impressive progress and achieving amazing results we could not dream of 10 years ago.

Thank you Jason for the wonderful article can you please suggest which pretrained model can be used for for recognizing individual alphabets and digits.

Good question, I am not sure off the cuff, perhaps try a google search. I expect there are such models available.

If you discover some, please let me know.

Thank you Jason,

Someday can you take time to write about training VGG for objects not belonging to original 1000 classes (Imagenet dataset) but completely new 2000 classes. I am specially interested in training times for starting from scratch and training times for fine-tuning. Do the no_top weights reduce training time much?

Once again thank you for the post

Great suggestion, thanks Sam. I hope to.

Yes, the layers just before the output layer do contain valuable info! I have tested this on some image captioning examples.

Thank you Jason for the wonderful article. We really hope you a post on Object Detection stuff like SSD (Single Shot Multibox Detector ) for standard data and custome data or semantic segmentation stuff like FCN or U-Net that will be very cool.

Thanks Adel!

Many thanks for That.

You’re welcome.

i’m still confused , can i change the image dataset and train it with VGG ?

thanks

Sorry, I don’t follow, perhaps you can restate your question?

Hello Jason,

I am now learning Deep learning and your Website is a treasure trove for that.

Thank you so much.

I just finished „How to use pre-trained VGG model to Classify objects in Photographs which was very useful.

Keras + VGG16 are really super helpful at classifying Images.

Your write-up makes it easy to learn. A world of thanks.

I would like to know what tool I can use to perform Medical Image Analysis.

Any specific library that would help me to Analyse Medical Images? VGG could not.

Your Response would be highly appreciated.

Sorry, I don’t have experience in that domain. I cannot give you specific advice.

Can you help me with where to save the “mug.jpg”.

I’ve tried to save it in some directory but it always returns the following error.

FileNotFoundError: [Errno 2] No such file or directory: ‘mug.jpg’

Thank you very much!!

In the same directory as the code file, and run the code from the command line to avoid any issues from IDEs and notebooks.

Hi Jason,

Thanks for the sharing. I want to know if VGG16 model can identify different objects in an image and then extract features of each object, or is there any way to do this through Keras library?

It could be re-purposed on an application like this.

See this example which is close:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hello Jason,

Is there a similar package in R language?

There may be, I’m not across it sorry.

Is there a way to use a format different than 224×224 ?

The only example I found is here: https://github.com/fchollet/deep-learning-with-python-notebooks/blob/master/5.3-using-a-pretrained-convnet.ipynb

Where basically we need to add another level on top of the model and use a custom classifier.

I guess that since the model was trained for 224×224 image it would not work as it is with different size, am I right ?

Yes, you would need to train a new front end.

Hi Jason, I was trying to use the VGG16 model from kera, but I have a serious problem with that. Whenever I do

Vgg_model = VGG16() my computer just freezes with this warning

tensorflow/core/framework/allocator.cc.124 allocation of 449576960 exceeds 10% of system memory

I am currently using a 64 bit, 4gb ram linux mint 18 os.

I don’t have access to any king of GPU.

I think this problem has to do something with my limited ram?

Regards, aditya

It might be because of limited RAM.

Perhaps try on another machine or on an EC2 instance?

Simple yet works well with the 20 test image files I provided to this program! Great job! Thank you !

Well done!

Grateful if you could also point out how to expand the VGG16 into actual Keras or Tensorflow code so learner can modify the code on their own for training, inference, or transfer learning purpose.

Great suggestion, thanks.

Hello

I need a suggestion on

CSV file data set with some image dataset.

It has 6 columns each columns have value like (1,0,-1)

I want to use VGG16 and get a multilevel classification.

How to deal with the problem, any idea or suggestions or paper will be much helpful.

Thanks in Advance.

Generally images are not stored in CSV format, they are stored in a binary image format like JPEG or PNG.

What all classes of images are feed into the VGG model which is predicting objects?

How can we see that?

Good question, there may be a way.

Off the cuff, one way would be to enumerate all inputs to decode_predictions()

Hi Jason,

I have a similar question like Namrata, if i want to train my VGG model with some new classes, how can i do that?

ere are some solutions from a google search:

https://stackoverflow.com/questions/47474869/getting-a-list-of-all-known-classes-of-vgg-16-in-keras

@Jason,

The link you have given shows the list of classes being trained in the VGG model, my question was, can we write our own VGG model and provide the classes?

If there’s any link or a way to do it, please let me know

I do not have a link for this.

Perhaps you can look at the Keras code and adapt an existing example in the API for your use case?

Thanks for all efforts. U make dreams come true for researchers 🙂

Thanks.

Thanks for this great post.

I am new on deep learning. I have a question that can the model provide exact position of the object so we can put a bunding box on it? And can vgg16 model detect several objects in one image and give thier positions?

It can, it is called object localization and requires more than just a VGG type model. Sorry, I don’t have a worked example.

Hello Yuri,

I am dealing with the same question than you, did you make progresses on your research?

Thank you for all your kind demonstration. However, I wonder how to use pre-trained VGG net to classify my grayscale images, because number of channels of images for VGG net is 3, not 1. Can I change the number of channels of images for VGG net? for example, 2?

Great question!

Perhaps cut off the input layers for the model and train new input layers that expect 1 channel.

Awsome , Superb Work! Appreciate that.

Thanks. I’m glad it helped.

Thanks sir for this tutorial, please can i use the vgg16 to classify some images belonging to a specific domain and does not exists in the ImageNet database.

Sure, try it.

Sir,

I am a regular reader of your blog. I have read your work, like it. Furthur, in this example of your’s I could see you fed the picture to the network. I am also a fan of Dr.Adrian’s work, I was reading about transfer learning, where we removed the FC layers at the end and passed in a logistic regression there to classify a dataset (say Caltech 101) where we could get 98% accuracy. The vgg16 is trained on Imagenet but transfer learning allows us to use it on Caltech 101.

Thank you guys are teaching incredible things to us mortals. One request can you please show a similar example of transfer learning using pre trained word embedding like GloVe or wordnet to detect sentiment in a movie review.

Thanks.

I give examples of reusing word embeddings, search the blog. or Check my NLP book.

Yes, I know you have included them in your book on NLP, using a CNN and word embedding to classify the sentiments, I have implemented it too. Anyways thanks for replying.

Regards,

Anirban Ghosh.

Hey Hi,

thanks for the article but I have a doubt,

The last layer in the network is a softmax layer and we have 1000 neurons in the fully connected layer before this layer right? so we can use this for classification of 1000 objects.

What my doubt is that, is this 1000 fixed for all vgg networks even though we are trying to classify only a few say 100( some number less than 1000) or this number (number of neurons in the last fully connected layer) depends on the number of classifications we are trying to address.

The prediction is a softmax over 1000 neurons.

It is fixed at 1000, but you can re-fit the network on a different dataset with more/less classes if you wish.

Ok, so as I said if we want to predict 100 classes, we still will have 1000 neurons but only 100 of them will be used for classification. Is that what you meant? If so what happens to the other 900 neurons, can softmax layer work that way, using only some neurons out of all the available ones?

sorry if this seems so basic, I just started working with deep learning and these things confuse a bit. thanks

If you have 100 classes, you have 100 nodes in the output layer, not 1000.

got it! Thanks for the reply

Thank you very much Mr. Jason Brownlee ! You are doing a great job ! I have been following some of yours machine learning mastery “How to …” , “Intro..” . I am very impressive how you approach, outreach and advance some of the “hot and trending” topics of Deep Learning…explaining them is plain text (including basic Python concepts, and of course Keras API, tensorflow Library, …)

To me the main issue is your capability to communicate the WHOLE SOLUTIONS covering everything in between of the problem starting, with math or Deep Learning intuitions concepts, following by programming language, operative ideas of libraries modules used, references list , etc. And finally but not least providing an operative code to start experimenting by ourselves all the concepts introduced by you.

Many thanks for your really great mastery work , from JG !!

You’re welcome, I’m glad the material helps.

I wander what I should do if I would like to train my own dataset to get a new weights based on the VGG model, and do prediction on the new weights

Keep the whole VGG model fixed and only train some new output weights to interest the vgg output.

Hi, can you help me localization of an object suppose number plate in an image. I know YOLO and Faster-RCNN can be used for this. But i am facing problem in implementing Region proposals using Anchor boxes. could you please suggest something?

I hope to cover YOLO in the future.

One more time Mr. Jason Brownlee thank you very much for your VGG16 Keras apps introduction, I think your code and explanation it is perfect (at least for my level) before diving into deeper waters, such as building your own models on Keras. I like the way you structure your pieces of codes before running the full system. I appreciate your “free” job for all of us . You do a lot of appreciable things for our Machine Learning community!!. I wish you a long running on these matters !

Thanks, glad it helped.

Hi Jason,

Your blog is the best for machine learning!

I have a question regarding the performance of VGG.

For coffee mug, it is exactly detecting the object.

But I tried a very obvious snake picture (https://reikiserpent.files.wordpress.com/2013/03/snakes-guam.jpg); however the results are not that promising:

[[(‘n01833805’, ‘hummingbird’, 0.22024027),

(‘n01665541’, ‘leatherback_turtle’, 0.10800469),

(‘n01664065’, ‘loggerhead’, 0.088614523),

(‘n02641379’, ‘gar’, 0.083981715),

(‘n01496331’, ‘electric_ray’, 0.061437886)]]

Knowing that VGG is performing very well, is there any way to improve the model results (maybe some fine tuning?) without retraining the model?

Thanks a lot,

Maybe try a model other than the VGG?

I tried ResNet as well, but results are still far from reality.

I guess the test images will have to be much like the images used to train the model, e.g. imagenet.

hi sir thank you for this tutorial

I noticed some places using vgg16 but they input images of different sizes and aspect ratio such as 192×99 or 69×81 and more other and i can’t understand how they get the output, can vgg16 take image with size other than 224×224 without resize it and what is the result will be? Thank you.

Perhaps resize the image?

Perhaps change the input shape of the network to be much larger and zero-pad smaller images?

Hello,

I tried to change the type of vgg16 to sequential, but, after changing it removes the input layer.

I don’t know why. how can I fix it?

thanks

Why change it to Sequential?

Hi Jason,

I like it very much and am wondering any following ups for the fine-tune VGG?

Thanks.

Great question!

Small and decaying learning rate and early stopping would be a good start.

Thank you very much for this great work. I wonder is it possible to use this model (VGG16) in order to be able to classify daily activities.

How so?

Thank you Jason ! I’m speechless

Thanks. I’m glad it helped.

Hi,

I’ve already downloaded the vgg19.npy model. Is it possible to load from this directly instead of downloading again?

Perhaps, I don’t have an example of loading the model manually, sorry.

Thank you so much for this valuable post. Really helpful.

I have question please,

How can I retrieve the index position of top n probabilities

for example, the prediction vector of the mug will produce a vector with 1000* 1 which contains the probabilities values for each class.

lets say that the probabilities are :

[.1

.2

.3 (top 1)

.001

.002

.25(top2)

.24 (top3)

.1

.01

…

…

..

etc}

I want to retrieve the position/index in which the top 3 probabilities are located.

in previous example, I want to retrieve the position of

.3 (top 1)

and

.25(top2)

and .24 (top3)

which is [2,5,6]

Thank you .

Good question, you can code this yourself, or perhaps there is a numpy function.

Maybe argpartition can do it?

https://docs.scipy.org/doc/numpy/reference/generated/numpy.argpartition.html

Thank you so much.

Hi, Guys Thanks for this awesome tutorial. Do You guys have any tutorial on How To train with our own images..(Custom Classifier) with whatever architecture you are following now. So Please let me know. Thanks for the help.

Sure, you can load your images and perhaps use transfer learning with a VGG model as a starting point.

Dear Jason,

Very helpful post.Also,i have a question that i want to use a pretrained model with different input shape.For example the input of pretained model is (None, 3661, 128) and the input shape of new dataset which i am applying on pretrained model is (None, 900, 165).So, i want to know how to set the input shape of pretrained model for the new dataset because i am getting an error:

“ValueError: “input_length” is 3661, but received input has shape (None, 900, 165)”.

Thanx in advance

You can add a new hidden layer after the new input layer and only train the weights of this new layer.

Or resize inputs to meet the old model.

Dear Jason, I want to know that the pre-trained models (used for transfer learning) also contain the testing phase or it only contain the training phase? In other words is the pretrained model contain both the training and testing phase or only the training phase?

Thanks in advance.

They are used like any other model, e.g. fine tuning/training then testing/evaluation.

I can’t understand your point. Kindly can you explain it more. Thanx for your response.

Which part?

Hi Anam, here’s a brief explanation to your question. The network (VGG16) had been trained and tested before being deployed as a model, so, there’s no need talking about training and test sets again. When you feed in an image to be classified, all you’re doing is using a pre-trained model to do your classification. I hope this helps, otherwise, let me know if you need further clarification. @Jason Brownlee is doing a great job!!

Great explanation.

@Busayo Not really. You can use VGG16 for either of following-:

1) Only architecture and not weights. In which case you train the model on your dataset

2) Keep only some of the initial layers along with their weights and train for latter layers using your dataset

3) Use complete VGG16 as a pre-trained model and use your dataset for only testing purposes.

Great summary!

How to train model on my own using my customized training dataset?

I teach how to train models here:

https://machinelearningmastery.com/start-here/#deeplearning

I suppose it is the same principle if I want to use vgg face for facial recognition, rightr?

Perhaps, but face recognition is a very different type of problem than simple classification.

I am currently working on an app using Keras, ImageNet, and VGG16.

I was wondering if it possible to check if an image falls into one of the classes like Plant, Animal, Food, etc…? Instead of it just checking to see what type of plant or food it is?

Yes, perhaps the output or classifier part of the model needs to be re-trained on higher order class labels?

Hi sir thanks for the tutorials, I am using the Pre-Trained VGG 16 Model to finetune Classify Objects in Photographs into 6 classes which do not belong to the imagenet module. when i run my code i go the error: ValueError : ‘decode_predictions’ expect a batch of prediction (ie a 2D array of shape (samples, 1000) found an array of shape a (1, 6). can you please help me resolve this problem

You cannot use decode prediction with your own classes. You will have to map the integers to your class labels yourself.

thanks for the response sir, Can you guide with the process of mapping integers to my own class labels using the above example. Am still new in machine learning

Yes, you can use a label encoder:

https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.LabelEncoder.html

i was read all article of your but i dont understand the where and how to train dataset and how to predict using aboe code please eloborate lil bit . step by step

because i have image data set so shoulde i required to label the every image for classification or not and how to train the dataset and how to predict that please help me

Yes, every image requires a label.

I hope to provide an tutorial of what you’re asking about soon.

plz i want to know that.u applied this algo on one image -‘mug.jpg’ but if I have so many images like image1,image2,image3 then how to code?

Perhaps you can enumerate all images in a directory and make a prediction for each?

Can you give an example, please?

Yes, I have many, perhaps start here:

https://machinelearningmastery.com/start-here/#dlfcv

thanks for this well explained tutorial.

Thanks, I’m glad it helped.

Hi,

Thanks for the tutorial. Is it possible to use VGG pretrained network for time series regression? How should the input and output layers change?

Yes, but it would not make sense to use a model for image classification for time series prediction.

Thank you for your reply. Do you know of any pre-trained RNN that I can use? I have done an extensive search online but cannot find one.

I am not aware of pre-trained models for time series, sorry.

i’m not able to run

from keras.applications.vgg16 import VGG16

model = VGG16()

the following commands in spyder.It shows a lot of errors.

Sorry to hear that.

I recommend running code examples from the command line. I show how here:

https://machinelearningmastery.com/faq/single-faq/how-do-i-run-a-script-from-the-command-line

sir, how can i use this pretrained model with some other dataset?

I show how in the above tutorial.

thank you for answering.

Sorry , but in the above tutorial it is classifying on the pre trained IMAGENET dataset…however i want to use some other dataset to train the VGG model.please share the link or the code for it since i’m stuck on this for quite a number of days.

thank you

This post shows how to fit a model on another dataset:

https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-to-classify-photos-of-dogs-and-cats/

Name = decode_predictions(pre[0])

–>

—————————————————————————

ValueError Traceback (most recent call last)

in

—-> 1 Name = decode_predictions(pre[0])

2 Name = Name[0][0]

~\Anaconda3\lib\site-packages\keras\applications\__init__.py in wrapper(*args, **kwargs)

26 kwargs[‘models’] = models

27 kwargs[‘utils’] = utils

—> 28 return base_fun(*args, **kwargs)

29

30 return wrapper

~\Anaconda3\lib\site-packages\keras\applications\vgg16.py in decode_predictions(*args, **kwargs)

14 @keras_modules_injection

15 def decode_predictions(*args, **kwargs):

—> 16 return vgg16.decode_predictions(*args, **kwargs)

17

18

~\Anaconda3\lib\site-packages\keras_applications\imagenet_utils.py in decode_predictions(preds, top, **kwargs)

220 ‘a batch of predictions ‘

221 ‘(i.e. a 2D array of shape (samples, 1000)). ‘

–> 222 ‘Found array with shape: ‘ + str(preds.shape))

223 if CLASS_INDEX is None:

224 fpath = keras_utils.get_file(

ValueError:

decode_predictionsexpects a batch of predictions (i.e. a 2D array of shape (samples, 1000)). Found array with shape: (2,)I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Can Vgg-16 model use for face recognition problem of 10 person with pre-trained weights

Not directly, the model would have to be tuned on the new data.

It might be better to use facenet or vggface:

https://machinelearningmastery.com/how-to-develop-a-face-recognition-system-using-facenet-in-keras-and-an-svm-classifier/

Thanks for your reply. It really helps me for my work. the model can identify a face of my own dataset if i use embedding and SVC. But i don’t want to use embedding and SVC classifier for identification. If i add a softmax at the last layer of facenet model and fine tune the model’s last layer with my own dataset image, it gives accuracy 100% at training time, but if i test some random image it can’t identify that face. I don’t find why it happens?

Yes, you need the VGGFace model, not the VGG model, see this:

https://machinelearningmastery.com/how-to-perform-face-recognition-with-vggface2-convolutional-neural-network-in-keras/

How do you convert the VGG extract features from conv5_3 layer to input as SVC parameter?

The features are a vector (or can be made a vector via flatten()) that can be used as input to any model you wish.

how to load other trained vgg16 weights other than default ?

I believe you must specify the “weights” argument and the filename, e.g. weights=’/imagenet.h5′

You can learn more about the API here:

https://keras.io/applications/#vgg16

How to load VGG16 pretrained weights into our script and use it as classifier for cats_and_dogs dataset?

Here is an example:

https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-to-classify-photos-of-dogs-and-cats/

Thank you!

You’re welcome.

I am using VGG16 and VGG19 for my own data set. i change the image shape to 32X32. my validation accuracy didn’t change.. what is the problem with my code… I am struck with this…

I don’t want transfer learning method… Please do help..

Epoch 1/30

52/52 [==============================] – 116s 2s/step – loss: nan – acc: 0.2558 – val_loss: nan – val_acc: 0.2540

Epoch 2/30

52/52 [==============================] – 119s 2s/step – loss: nan – acc: 0.2558 – val_loss: nan – val_acc: 0.2540

Epoch 3/30

52/52 [==============================] – 121s 2s/step – loss: nan – acc: 0.2505 – val_loss: nan – val_acc: 0.2540

Epoch 4/30

52/52 [==============================] – 126s 2s/step – loss: nan – acc: 0.2522 – val_loss: nan – val_acc: 0.2540

Epoch 5/30

52/52 [==============================] – 122s 2s/step – loss: nan – acc: 0.2571 – val_loss: nan – val_acc: 0.2540

Epoch 6/30

52/52 [==============================] – 121s 2s/step – loss: nan – acc: 0.2510 – val_loss: nan – val_acc: 0.2540

I have some suggestions here:

https://machinelearningmastery.com/improve-deep-learning-performance/

THANK YOU FOR YOUR REPLY…..

You’re welcome.

Thanks for your great tutorials!

I am interested in the parent category of predictions.

For example, if the model predicts a dog. I would like to have the category animals.

Sounds like a fun project!

Sir, You may think this question is silly but please clear my doubt.

1)The transfer learning(VGG-16) works when we have different classes of data means the model is not trained on new classes or say the new data is not from imagenet dataset?2

2)Sir can you explain IF we OFF the all VGG-16 layers using vgg.trainable = False and we added our custom Conv layers on top of it the how transfer learning works? (The images is not from those 1000 classes)? How we get an information from vgg-16 to custom layers if we off the layers?

You can use the model with the same classes or different classes as imagenet. If you use different classes, you will have to train the new layers on your new classes/dataset.

It works by only training the new layers you add and leaving all other layers untouched. The existing layers will extract features from the photos and your new layer will interpret those features and classify them – it’s still amazing to me!

Jason, wonderful article on pretrained model. Can you tell me which model can i use for EEG signal processing for emotion detection? Thank you

Perhaps compare eMLP, CNN and LSTM and model as time series classification.

You can get started here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Jason,

First of all, thank you very much for the work you are putting on. These are really nice tutorials and I always visit this site whenever I want to search for some particular machine/deep learning concepts. However, I am confused with loading a pre-trained model and predict on the same. I have a VGG trained from scratch saved in .h5 file. I am able to load that using

“””””””from keras.models import load_model

saved_model = load_model(“/content/vgglite.h5″)

saved_model.layers[0].input_shape #(None, 224, 224, 3)””””” but when I tried predicting the test folder is not getting converted to array and I am getting [[[ IsADirectoryError: ]]]

I was using [[[[[ import os

from keras.preprocessing import image

import numpy as np

batch_holder = np.zeros((20, 224, 224, 3))

img_dir=’/content/drive/My Drive/COMPUTER VISION DOCS/imagenette_6class/test/’

for i,img in enumerate(os.listdir(img_dir)):

img = image.load_img(os.path.join(img_dir,img), target_size=(224,224))

batch_holder[i, :] = img]]]]]]]]

Kindly explain how to load a pre-trained model and predict using the test set. Thanks in advance.

You’re welcome.

Perhaps start with the example in the tutorial, confirm it works on your workstation, then slowly adapt it for your project.

Jason, thank you for this awesome work of enlightening people. I really appreciate. I’m working on a research project of developing a system that differentiate fake image from original once. Can I make use of this VGG-16 model in developing it?

You’re welcome.

Perhaps use it as a starting point?

Hi Jason,

Thanks for your great work. I have tried same example with image (cup.jpg).

The image i have snipped from your original image and saved as “cup.jpg”. After i tried with VGG16 model as such same your code, i unable to get accuracy, prediction also wrong.

Model throws output as “mosquito_net” with 1.7% accuracy.

Could you please let me know, why my prediction went wrong with same image ?

Perhaps ensure that you have loaded the image correctly and prepared the pixels in the expected manner – as we do in the tutorial.

Hi , I am getting the below error after executing all the code:

Could not import PIL.Image. The use of

load_imgrequires PIL.This will help you install and test PIL/Pillow:

https://machinelearningmastery.com/how-to-load-and-manipulate-images-for-deep-learning-in-python-with-pil-pillow/

thank you Jason for this tutorial , this code was for one image can you tell me how to prepare a dataset like FER2013 for the VGG16 CNN ?

What do you mean prepare the dataset?

i mean how can i adapt a dataset like FER2013 for the VGG16 cnn in the same way as the ImageNet training data was prepared because i have a project about facial expression recognition

Sorry, I don’t have an example of exactly this.

Dear jason

how could i calculate FP, TP and senstivty from TL model ?

This will help you with calculating other metrics:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

could i implement GAN augmentation instead of normal augmentation in TL and how ?

sorry for this basic question as i am a beginner

Yes, but I expect it will not be as effective as normal image data augmentation.

Hello Jason,

I am a graduate student at the University of Cincinnati. I wanted to know if it is okay for me to use the images in your post as a part of my Master’s Thesis paper while citing the source of the image i.e. this post.

Please let me know.

Thank you

Anusha

As long as you clearly cite and link to the source:

https://machinelearningmastery.com/faq/single-faq/how-do-i-reference-or-cite-a-book-or-blog-post

Hi Jason,

How do i train VGG16 with an image that is not a square matrix, like 640*480? Will i have to change the size of convolution and pooling filters as well?

Thank you.

No.

Hi Jason,

Why VGG16 is more popular and using than Resnet50 with transfer learning and fine-tuning tutorials to train dataset includes more than one class?

Are there any critical differences or reasons for that?

Thank you.

Because it is simple, well understood and good enough for many applications.

Can I use vgg16 for cancer images?Or should I prefer resnet/alexnet/inception_3 or anyother?

I recommend testing a suite of model to try as a starting point for transfer learning and discover what works best for your specific dataset.

Can I use VGG16 for oher image dataset ?

Sure.

Thanks for the wonderful work, but when I use VGG16 dataset I got error for shape of input numpy array

Can you put some light on it?

snippet of code:

def test_on_whole_videos(train_data,train_labels,validation_data,validation_labels):

x = []

y = []

count = 0

output = 0

base_model = load_VGG16_model()

model = train_model(train_data,train_labels,validation_data,validation_labels)

i=0

count = 0

for filename in os.listdir(“./test_im/3”):

img=cv2.imread(“./test_im/3/”+filename,0)

x.append(img)

Error:

x = np.array(x) in test_on_whole_videos(train_data, train_labels, validation_data, validation_labels)

17 x = np.array(x)

18 print(type(x))

—> 19 x_features = base_model.predict(x)

20 answer = model.predict(x_features)

21 print(answer)

ValueError: Input 0 of layer block1_conv1 is incompatible with the layer: expected ndim=4, found ndim=3. Full shape received: [None, 224, 224]

Sorry to hear that you’re having trouble, perhaps some of these tips will help:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hey Jason, looking at this line:

> image = preprocess_input(image)

It seems Keras’ VGG preprocess_input really just calls imagenet_utils.preprocess_input(x, data_format=data_format, mode=’caffe’) according to the source code:

@keras_export(‘keras.applications.vgg16.preprocess_input’)

def preprocess_input(x, data_format=None):

return imagenet_utils.preprocess_input(

x, data_format=data_format, mode=’caffe’)

Source: https://www.tensorflow.org/api_docs/python/tf/keras/applications/vgg16/preprocess_input

I understand this to mean that it defaults to ‘caffe’ mode, which according to the docs:

> caffe: will convert the images from RGB to BGR, then will zero-center each color channel with respect to the ImageNet dataset, without scaling.

Zero-centering makes sense, as it follows the paper’s preprocessing technique. But what about switching the channels from RGB to BGR…

Keras’ load_img() defatuls to ‘rgb’. So my concern is that using Keras’ preprocess_input(image) will mess with the channel ordering.

I tested this:

from tensorflow.keras.applications.vgg16 import preprocess_input

copied_data = np.copy(data)

prep_data = preprocess_input(copied_data)

from matplotlib import pyplot as plt

plt.imshow(data[0].astype(‘int’))

plt.show()

plt.imshow(prep_data[0].astype(‘int’))

plt.show()

And sure enough, the RGB channels were flipped. The yellows/reds in the original image turned into blue-ish hues.

So what’s the best way to combat this? Load the data as BGR from the get-go?

Welp, it seems that asking the question is often the path to enlightenment… I see now that it’s necessary to convert the image from RGB to BGR because the Keras VGG16 model with ‘imagenet’ weights are internally using BGR channel ordering.

> In the keras link to VGG16, it is stated that: “These weights are ported from the ones released by VGG at Oxford.” So the VGG16 and VGG19 models were trained in Caffe and ported to TensorFlow, hence mode == ‘caffe’ here (range from 0 to 255 and then extract the mean [103.939, 116.779, 123.68]).

@ https://stackoverflow.com/questions/53092971/keras-vgg16-preprocess-input-modes

Nice!

Intersting, perhaps Keras got things messed up in the latest version/s.

Perhaps you can implement the data prep manually for your application.

Why does the pre-trained model classify common objects accurately but does a bad job when it comes to facial images though Imagenet has a category called person?

Good question.

The model is good at classifying photos of objects like those in the training data. The model was trained on objects, not faces/people.

Hi Jason,

Thank you for a good article.

Could you please guide me to choose the right TOP-1 accuracy of VGG16 because MobilNet authors write 71.5% top-1 in their paper, keras application table shows 71.3%, and

paperwithcodes shows 74.4% under ImageNet benchmark.

Who is reporting correct accuracy? Could you please guide ?

Thank you!

You’re welcome.

Generally, I recommend testing each model on your dataset and choose the one that performs the best.

If you want to compare reported numbers, perhaps you can check the papers to see if it is an apples to apples comparison, and if not, perhaps evaluate the models yourself under the conditions you expect to use them.

Hi Jason .

I have the following questions:

1. When should one use a pre-trained model like VGG16 with transfer learning Vs train a neural network from scratch ? is this dependent on the classification task ?

2. For a beginner in neural network , should one directly approach pre-trained models ?

Pre-trained models can save time and get good results, if they were trained on a similar problem. Use them when they give better results than a model fit from scratch.

Pre-trained models are an excellent way to get started on most problems.

Thanks Jason.

You’re welcome.

Hi Jason .

I have followed your tutorial above on using VGG16 and tested on few grocery item images like tea , oil , etc . It gave very poor prediction. So now , what options do i have?

Train VGG16 on the images I have and then predict ?

Thanks !

Perhaps you can try an alternate model?

Or, perhaps you can use transfer learning to adapt the model to be better suited to your dataset?

Thanks Jason . What would be the criteria for selecting an alternate pre-trained model ? Could you please share any reference for performing transfer learning with a given pre-trained model .

Thanks !!

Choose a model that performs well or best for your dataset.

There are many examples of transfer learning on the blog, you can use the search box at the top of the page.

Thanks Jason.

Hello jason thanks for the info ! Iam really new to this Deep learning thing I need to do my accident prediction final year project using vgg16 and resnet 50 could you lend me your hand in it please ???????? please help me

You are very welcome! Generally, I recommend that you complete homework and assignments yourself.

You have chosen a course and (perhaps) have even paid money to take the course. You have chosen to invest in yourself via self-education.

In order to get the most out of this investment, you must do the work.

Also, you (may) have paid the teachers, lectures and support staff to teach you. Use that resource and ask for help and clarification about your homework or assignment from them. They work for you in some sense, and no one knows more about your homework or assignment and how it will be assed than them.

Nevertheless, if you are still struggling, perhaps you can boil your difficulty down to one sentence and contact me.

Give me some suggestion about vgg19. how to apply real life?? give some practice project??

Hi Javed…The following may be of interest:

https://medium.com/analytics-vidhya/python-based-project-covid-19-detector-with-vgg-19-convolutional-neural-network-f9602fc40b81