Automatic photo captioning is a problem where a model must generate a human-readable textual description given a photograph.

It is a challenging problem in artificial intelligence that requires both image understanding from the field of computer vision and language generation from the field of natural language processing.

It is now possible to develop your own image caption models using deep learning and freely available datasets of photos and their descriptions.

In this tutorial, you will discover how to prepare photos and textual descriptions for developing a deep learning automatic photo caption generation model.

After completing this tutorial, you will know:

- About the Flickr8K dataset, comprised of more than 8,000 photos with five captions for each photo.

- How to generally load and prepare photo and text data for modeling with deep learning.

- How to specifically encode data for two different types of deep learning models in Keras.


Let’s get started.

- Update Nov/2017: Fixed small typos in the code in the “Whole Description Sequence Model” section. Thanks Moustapha Cheikh and Matthew.

- Update Feb/2019: Provided direct links for the Flickr8k_Dataset dataset, as the official site was taken down.

How to Prepare a Photo Caption Dataset for Training a Deep Learning Model

Photo by beverlyislike, some rights reserved.

Tutorial Overview

This tutorial is divided into 8 parts; they are:

- Download the Flickr8K Dataset

- How to Load Photographs

- Pre-Calculate Photo Features

- How to Load Descriptions

- Prepare Description Text

- Whole Description Sequence Model

- Word-By-Word Model

- Progressive Loading

Python Environment

This tutorial assumes you have a Python 3 SciPy environment installed. You can use Python 2, but you may need to change some of the examples.

You must have Keras (2.0 or higher) installed with either the TensorFlow or Theano backend.

The tutorial also assumes you have scikit-learn, Pandas, NumPy and Matplotlib installed.

If you need help with your environment, see this post:


Download the Flickr8K Dataset

A good dataset to use when getting started with image captioning is the Flickr8K dataset.

It is realistic and relatively small, so you can download it and build models on your workstation using a CPU.

The definitive description of the dataset is in the paper “Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics” from 2013.

The authors describe the dataset as follows:

We introduce a new benchmark collection for sentence-based image description and search, consisting of 8,000 images that are each paired with five different captions which provide clear descriptions of the salient entities and events.

…

The images were chosen from six different Flickr groups, and tend not to contain any well-known people or locations, but were manually selected to depict a variety of scenes and situations.

— Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics, 2013.

The dataset is available for free. You must complete a request form, and the links to the dataset will be emailed to you. I would love to link to them for you, but the email expressly requests: “Please do not redistribute the dataset”.

You can use the link below to request the dataset:

Within a short time, you will receive an email that contains links to two files:

- Flickr8k_Dataset.zip (1 Gigabyte) An archive of all photographs.

- Flickr8k_text.zip (2.2 Megabytes) An archive of all text descriptions for photographs.

UPDATE (Feb/2019): The official site seems to have been taken down (although the form still works). Here are some direct download links from my datasets GitHub repository:

Download the datasets and unzip them into your current working directory. You will have two directories:

- Flicker8k_Dataset: Contains 8092 photographs in jpeg format.

- Flickr8k_text: Contains a number of files containing different sources of descriptions for the photographs.

Next, let’s look at how to load the images.

How to Load Photographs

In this section, we will develop some code to load the photos for use with the Keras deep learning library in Python.

The image file names are unique image identifiers. For example, here is a sample of image file names:

```
990890291_afc72be141.jpg
99171998_7cc800ceef.jpg
99679241_adc853a5c0.jpg
997338199_7343367d7f.jpg
997722733_0cb5439472.jpg
```

Keras provides the load_img() function, which loads an image file directly from disk and returns it as a PIL image.

```python
from keras.preprocessing.image import load_img
image = load_img('990890291_afc72be141.jpg')
```

The pixel data needs to be converted to a NumPy array for use in Keras.

We can use the Keras img_to_array() function to convert the loaded image.

```python
from keras.preprocessing.image import img_to_array
image = img_to_array(image)
```

We may want to use a pre-defined feature extraction model, such as a state-of-the-art deep image classification network trained on ImageNet. The Oxford Visual Geometry Group (VGG) model is popular for this purpose and is available in Keras.

If we decide to use this pre-trained model as a feature extractor in our model, we can preprocess the pixel data for the model by using the preprocess_input() function in Keras, for example:

```python
from keras.applications.vgg16 import preprocess_input
# reshape data into a single sample of an image
image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
# prepare the image for the VGG model
image = preprocess_input(image)
```

We may also want to resize each photo as it is loaded to the input size expected by the VGG model, which is 224 x 224 pixels. We can do that in the call to load_img(), for example:

```python
image = load_img('990890291_afc72be141.jpg', target_size=(224, 224))
```

We may want to extract the unique image identifier from the image filename. We can do that by splitting the filename string on the ‘.’ (period) character and retrieving the first element of the resulting list:

```python
image_id = filename.split('.')[0]
```

We can tie all of this together and develop a function that, given the name of the directory containing the photos, will load and pre-process all of the photos for the VGG model and return them in a dictionary keyed on their unique image identifiers.

```python
from os import listdir
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input

# load and prepare all photos in a directory
def load_photos(directory):
    images = dict()
    for name in listdir(directory):
        # load an image from file
        filename = directory + '/' + name
        image = load_img(filename, target_size=(224, 224))
        # convert the image pixels to a numpy array
        image = img_to_array(image)
        # reshape data for the model
        image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
        # prepare the image for the VGG model
        image = preprocess_input(image)
        # get image id
        image_id = name.split('.')[0]
        images[image_id] = image
    return images

# load images
directory = 'Flicker8k_Dataset'
images = load_photos(directory)
print('Loaded Images: %d' % len(images))
```

Running this example prints the number of loaded images. It takes a few minutes to run.

```
Loaded Images: 8091
```

If you do not have the RAM to hold all images (about 5GB by my estimation), then you can add an if-statement to break the loop early after 100 images have been loaded, for example:

```python
if len(images) >= 100:
    break
```
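The 5GB estimate can be sanity-checked with some quick arithmetic. The sketch below assumes each photo is held as a 224 x 224 x 3 array of 32-bit floats (the default floatx dtype in Keras) and ignores Python object overhead; photo_memory_bytes() is a hypothetical helper, not part of Keras.

```python
import numpy as np

def photo_memory_bytes(num_photos, height=224, width=224, channels=3, dtype=np.float32):
    """Bytes needed to hold num_photos arrays of shape (height, width, channels).

    Assumption: every photo is stored as a dense array of the given dtype;
    dictionary and array object overhead is ignored.
    """
    bytes_per_value = np.dtype(dtype).itemsize  # 4 bytes for float32
    return num_photos * height * width * channels * bytes_per_value

total = photo_memory_bytes(8091)
print('Estimated size: %.2f GB' % (total / 1e9))  # prints "Estimated size: 4.87 GB"
```

Stopping after 100 images reduces this to roughly 60 megabytes.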

Pre-Calculate Photo Features

It is possible to use a pre-trained model to extract the features from photos in the dataset and store the features to file.

This is an efficiency: the language part of the model, which turns features extracted from the photo into textual descriptions, can be trained independently of the feature extraction model. The benefit is that the very large pre-trained model does not need to be loaded, held in memory, and used to process each photo while training the language model.

Later, the feature extraction model and language model can be put back together for making predictions on new photos.

In this section, we will extend the photo loading behavior developed in the previous section to load all photos, extract their features using a pre-trained VGG model, and store the extracted features to a new file that can be loaded and used to train the language model.

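The first step is to load the VGG model. This model is provided directly in Keras and can be loaded as follows. Note that the first time this runs, Keras downloads the pre-trained model weights to your computer, which may take a few minutes (the weights file without the top layers is much smaller than the roughly 500-megabyte file for the full model).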

```python
from keras.applications.vgg16 import VGG16
from keras.layers import Input
# load the model
in_layer = Input(shape=(224, 224, 3))
model = VGG16(include_top=False, input_tensor=in_layer, pooling='avg')
# summary() prints the layer table itself
model.summary()
```

This will load the VGG 16-layer model.

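Setting include_top=False removes the two fully connected (Dense) layers and the classification output layer from the model. With pooling='avg', global average pooling is applied to the output of the last convolutional block, so each photo is summarized as a 512-element feature vector, which is taken as the features extracted from the image.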

Next, we can walk over all images in the directory of images as in the previous section and call the predict() function on the model for each prepared image to get the extracted features. The features can then be stored in a dictionary keyed on the image id.

The complete example is listed below.

```python
from os import listdir
from pickle import dump
from keras.applications.vgg16 import VGG16
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input
from keras.layers import Input

# extract features from each photo in the directory
def extract_features(directory):
    # load the model (same configuration as the snippet above)
    in_layer = Input(shape=(224, 224, 3))
    model = VGG16(include_top=False, input_tensor=in_layer, pooling='avg')
    model.summary()
    # extract features from each photo
    features = dict()
    for name in listdir(directory):
        # load an image from file
        filename = directory + '/' + name
        image = load_img(filename, target_size=(224, 224))
        # convert the image pixels to a numpy array
        image = img_to_array(image)
        # reshape data for the model
        image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
        # prepare the image for the VGG model
        image = preprocess_input(image)
        # get features
        feature = model.predict(image, verbose=0)
        # get image id
        image_id = name.split('.')[0]
        # store feature
        features[image_id] = feature
        print('>%s' % name)
    return features

# extract features from all images
directory = 'Flicker8k_Dataset'
features = extract_features(directory)
print('Extracted Features: %d' % len(features))
# save to file
dump(features, open('features.pkl', 'wb'))
```

The example may take some time to complete, perhaps one hour.

After all features are extracted, the dictionary is stored in the file ‘features.pkl’ in the current working directory.

These features can then be loaded later and used as input for training a language model.
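As a sketch of that later step, the pickled dictionary can be read back with the standard pickle module and filtered to the image ids you need, for example those in a training split. load_photo_features() is a hypothetical helper, and the demo writes a small dummy file rather than the real ‘features.pkl’.

```python
import os
import tempfile
from pickle import dump, load

import numpy as np

def load_photo_features(filename, image_ids):
    """Load a pickled dict of image id -> feature array, keeping only image_ids.

    Assumption: the file holds a dict in the same layout as the one saved by
    the feature extraction example; ids missing from the file are skipped.
    """
    with open(filename, 'rb') as f:
        all_features = load(f)
    return {k: all_features[k] for k in image_ids if k in all_features}

# demo with a tiny dummy feature file (stand-in for features.pkl)
demo = {'img_a': np.zeros((1, 512)), 'img_b': np.ones((1, 512))}
path = os.path.join(tempfile.mkdtemp(), 'features.pkl')
with open(path, 'wb') as f:
    dump(demo, f)

subset = load_photo_features(path, ['img_b', 'img_missing'])
print(list(subset.keys()))  # ['img_b']
```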

You could experiment with other types of pre-trained models in Keras.

How to Load Descriptions

It is worth taking a moment to look at the descriptions, as several description files are available.

The file Flickr8k.token.txt contains a list of image identifiers (used in the image filenames) and tokenized descriptions. Each image has multiple descriptions.

Below is a sample of the descriptions from the file showing 5 different descriptions for a single image.

```
1305564994_00513f9a5b.jpg#0 A man in street racer armor be examine the tire of another racer 's motorbike .
1305564994_00513f9a5b.jpg#1 Two racer drive a white bike down a road .
1305564994_00513f9a5b.jpg#2 Two motorist be ride along on their vehicle that be oddly design and color .
1305564994_00513f9a5b.jpg#3 Two person be in a small race car drive by a green hill .
1305564994_00513f9a5b.jpg#4 Two person in race uniform in a street car .
```

The file ExpertAnnotations.txt indicates which of the descriptions for each image were written by “experts” and which were written by crowdsource workers asked to describe the image.

Finally, the file CrowdFlowerAnnotations.txt provides the frequency with which crowd workers indicated that each caption suits its image. These frequencies can be interpreted probabilistically.

The authors of the paper describe the annotations as follows:

… annotators were asked to write sentences that describe the depicted scenes, situations, events and entities (people, animals, other objects). We collected multiple captions for each image because there is a considerable degree of variance in the way many images can be described.

— Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics, 2013.

There are also lists of the photo identifiers to use in a train/test split so that you can compare your results to those reported in the paper.

The first step is to decide which captions to use. The simplest approach is to use the first description for each photograph.

First, we need a function to load the entire annotations file (‘Flickr8k.token.txt’) into memory. Below is a function to do this called load_doc() that, given a filename, will return the document as a string.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text
```

We can see from the sample of the file above that we need only split each line by white space and take the first element as the image identifier and the rest as the image description. For example:

```python
# split line by white space
tokens = line.split()
# take the first token as the image id, the rest as the description
image_id, image_desc = tokens[0], tokens[1:]
```

We can then clean up the image identifier by removing the filename extension and the description number.

```python
# remove filename from image id
image_id = image_id.split('.')[0]
```

We can also put the description tokens back together into a string for later processing.

```python
# convert description tokens back to string
image_desc = ' '.join(image_desc)
```

We can put all of this together into a function.

Below defines the load_descriptions() function that will take the loaded file, process it line-by-line, and return a dictionary of image identifiers to their first description.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# extract descriptions for images
def load_descriptions(doc):
    mapping = dict()
    # process lines
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        if len(line) < 2:
            continue
        # take the first token as the image id, the rest as the description
        image_id, image_desc = tokens[0], tokens[1:]
        # remove filename from image id
        image_id = image_id.split('.')[0]
        # convert description tokens back to string
        image_desc = ' '.join(image_desc)
        # store the first description for each image
        if image_id not in mapping:
            mapping[image_id] = image_desc
    return mapping

filename = 'Flickr8k_text/Flickr8k.token.txt'
doc = load_doc(filename)
descriptions = load_descriptions(doc)
print('Loaded: %d ' % len(descriptions))
```

Running the example prints the number of loaded image descriptions.

```
Loaded: 8092
```

There are other ways to load descriptions that may turn out to be more accurate for the data.

Use the above example as a starting point and let me know what you come up with.

Post your approach in the comments below.
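For example, one alternative (a sketch, not the approach used in the rest of this tutorial) is to keep every description for each photo rather than only the first. load_all_descriptions() is a hypothetical variant of load_descriptions() that stores a list of descriptions per image id.

```python
def load_all_descriptions(doc):
    """Map each image id to a list of all of its descriptions."""
    mapping = dict()
    for line in doc.split('\n'):
        tokens = line.split()
        # skip blank lines and lines without a description
        if len(tokens) < 2:
            continue
        image_id, image_desc = tokens[0], tokens[1:]
        # drop the '.jpg#N' suffix so all five captions share one key
        image_id = image_id.split('.')[0]
        mapping.setdefault(image_id, list()).append(' '.join(image_desc))
    return mapping

sample = ("1305564994_00513f9a5b.jpg#0 Two racer drive a white bike down a road .\n"
          "1305564994_00513f9a5b.jpg#1 Two person in race uniform in a street car .\n")
descs = load_all_descriptions(sample)
print(len(descs['1305564994_00513f9a5b']))  # 2
```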

Prepare Description Text

The descriptions are already tokenized; this means that the words in each description are separated by white space.

It also means that punctuation is split into separate tokens, such as periods (‘.’) and possessive suffixes (‘s).

It is a good idea to clean up the description text before using it in a model. Some simple data cleaning steps include:

- Normalizing the case of all tokens to lowercase.

- Removing all punctuation from tokens.

- Removing all tokens that contain one or fewer characters (after punctuation is removed), e.g. ‘a’ and hanging ‘s’ characters.

We can implement these simple cleaning operations in a function that cleans each description in the loaded dictionary from the previous section. Below defines the clean_descriptions() function that will clean each loaded description.

```python
import string

# clean description text
def clean_descriptions(descriptions):
    # prepare translation table for removing punctuation
    table = str.maketrans('', '', string.punctuation)
    for key, desc in descriptions.items():
        # tokenize
        desc = desc.split()
        # convert to lower case
        desc = [word.lower() for word in desc]
        # remove punctuation from each token
        desc = [w.translate(table) for w in desc]
        # remove hanging 's' and 'a'
        desc = [word for word in desc if len(word)>1]
        # store as string
        descriptions[key] = ' '.join(desc)
```
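To see the effect of the three cleaning steps, the sketch below applies them to the first sample description shown earlier. clean_text() is a hypothetical single-string version of clean_descriptions(), written only for illustration.

```python
import string

def clean_text(desc):
    """Apply the three cleaning steps to a single description string."""
    table = str.maketrans('', '', string.punctuation)
    words = desc.split()
    # normalize case
    words = [w.lower() for w in words]
    # strip punctuation characters from each token ("'s" becomes "s", "." becomes "")
    words = [w.translate(table) for w in words]
    # drop tokens of one character or fewer (e.g. 'a', a hanging 's', an emptied '.')
    words = [w for w in words if len(w) > 1]
    return ' '.join(words)

raw = "A man in street racer armor be examine the tire of another racer 's motorbike ."
print(clean_text(raw))
# man in street racer armor be examine the tire of another racer motorbike
```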

We can then save the clean text to file for later use by our model.

Each line will contain the image identifier followed by the clean description. Below defines the save_doc() function for saving the cleaned descriptions to file.

```python
# save descriptions to file, one per line
def save_doc(descriptions, filename):
    lines = list()
    for key, desc in descriptions.items():
        lines.append(key + ' ' + desc)
    data = '\n'.join(lines)
    file = open(filename, 'w')
    file.write(data)
    file.close()
```

Putting this all together with the loading of descriptions from the previous section, the complete example is listed below.

```python
import string

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# extract descriptions for images
def load_descriptions(doc):
    mapping = dict()
    # process lines
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        if len(line) < 2:
            continue
        # take the first token as the image id, the rest as the description
        image_id, image_desc = tokens[0], tokens[1:]
        # remove filename from image id
        image_id = image_id.split('.')[0]
        # convert description tokens back to string
        image_desc = ' '.join(image_desc)
        # store the first description for each image
        if image_id not in mapping:
            mapping[image_id] = image_desc
    return mapping

# clean description text
def clean_descriptions(descriptions):
    # prepare translation table for removing punctuation
    table = str.maketrans('', '', string.punctuation)
    for key, desc in descriptions.items():
        # tokenize
        desc = desc.split()
        # convert to lower case
        desc = [word.lower() for word in desc]
        # remove punctuation from each token
        desc = [w.translate(table) for w in desc]
        # remove hanging 's' and 'a'
        desc = [word for word in desc if len(word)>1]
        # store as string
        descriptions[key] = ' '.join(desc)

# save descriptions to file, one per line
def save_doc(descriptions, filename):
    lines = list()
    for key, desc in descriptions.items():
        lines.append(key + ' ' + desc)
    data = '\n'.join(lines)
    file = open(filename, 'w')
    file.write(data)
    file.close()

filename = 'Flickr8k_text/Flickr8k.token.txt'
# load descriptions
doc = load_doc(filename)
# parse descriptions
descriptions = load_descriptions(doc)
print('Loaded: %d ' % len(descriptions))
# clean descriptions
clean_descriptions(descriptions)
# summarize vocabulary
all_tokens = ' '.join(descriptions.values()).split()
vocabulary = set(all_tokens)
print('Vocabulary Size: %d' % len(vocabulary))
# save descriptions
save_doc(descriptions, 'descriptions.txt')
```

Running the example first loads 8,092 descriptions, cleans them, summarizes the vocabulary of 4,484 unique words, then saves them to a new file called ‘descriptions.txt’.

```
Loaded: 8092
Vocabulary Size: 4484
```

Open the new file ‘descriptions.txt’ in a text editor and review the contents. You should see somewhat readable descriptions of photos ready for modeling.

```
...
3139118874_599b30b116 two girls pose for picture at christmastime
2065875490_a46b58c12b person is walking on sidewalk and skeleton is on the left inside of fence
2682382530_f9f8fd1e89 man in black shorts is stretching out his leg
3484019369_354e0b88c0 hockey team in red and white on the side of the ice rink
505955292_026f1489f2 boy rides horse
```

The vocabulary is still relatively large. To make modeling easier, especially the first time around, I would recommend further reducing the vocabulary by removing words that only appear once or twice across all descriptions.
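A minimal sketch of that idea: count how often each word appears across the cleaned descriptions and drop the words below a threshold. filter_rare_words() and its default min_count=3 (which removes words appearing only once or twice) are illustrative choices, not part of the tutorial's code. Note that a description made up entirely of rare words would become an empty string.

```python
from collections import Counter

def filter_rare_words(descriptions, min_count=3):
    """Remove words seen fewer than min_count times across all descriptions.

    Returns the filtered descriptions and the retained vocabulary.
    """
    counts = Counter()
    for desc in descriptions.values():
        counts.update(desc.split())
    vocab = {word for word, count in counts.items() if count >= min_count}
    filtered = {key: ' '.join(w for w in desc.split() if w in vocab)
                for key, desc in descriptions.items()}
    return filtered, vocab

demo = {'img1': 'boy rides horse', 'img2': 'boy rides bike', 'img3': 'girl rides horse'}
filtered, vocab = filter_rare_words(demo, min_count=2)
print(sorted(vocab))     # ['boy', 'horse', 'rides']
print(filtered['img3'])  # rides horse
```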

Whole Description Sequence Model

There are many ways to model the caption generation problem.

One naive way is to create a model that outputs the entire textual description in a one-shot manner.

This is a naive model because it puts a heavy burden on the model to both interpret the meaning of the photograph and generate words, then arrange those words into the correct order.

This is not unlike language translation with an Encoder-Decoder recurrent neural network, where the entire translated sentence is output one word at a time given an encoding of the input sequence. Here we would use an encoding of the image to generate the output sentence instead.

The image may be encoded using a pre-trained model used for image classification, such as the VGG model trained on ImageNet mentioned above.

The output of the model would be a probability distribution over the vocabulary for each word in the output sequence. The sequence would be as long as the longest photo description.

The descriptions would, therefore, need to be first integer encoded where each word in the vocabulary is assigned a unique integer and sequences of words would be replaced with sequences of integers. The integer sequences would then need to be one hot encoded to represent the idealized probability distribution over the vocabulary for each word in the sequence.

We can use tools in Keras to prepare the descriptions for this type of model.

The first step is to load the mapping of image identifiers to clean descriptions stored in ‘descriptions.txt’.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load clean descriptions into memory
def load_clean_descriptions(filename):
    doc = load_doc(filename)
    descriptions = dict()
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # split id from description
        image_id, image_desc = tokens[0], tokens[1:]
        # store
        descriptions[image_id] = ' '.join(image_desc)
    return descriptions

descriptions = load_clean_descriptions('descriptions.txt')
print('Loaded %d' % (len(descriptions)))
```

Running this piece loads the 8,092 photo descriptions into a dictionary keyed on image identifiers. These identifiers can then be used to load each photo file for the corresponding inputs to the model.

```
Loaded 8092
```

Next, we need to extract all of the description text so we can encode it.

```python
# extract all text
desc_text = list(descriptions.values())
```

We can use the Keras Tokenizer class to consistently map each word in the vocabulary to an integer. First, the object is created, then it is fit on the description text. The fit tokenizer can later be saved to file for consistent decoding of the predictions back to vocabulary words.

```python
from keras.preprocessing.text import Tokenizer
# prepare tokenizer
tokenizer = Tokenizer()
tokenizer.fit_on_texts(desc_text)
vocab_size = len(tokenizer.word_index) + 1
print('Vocabulary Size: %d' % vocab_size)
```

Next, we can use the fit tokenizer to encode the photo descriptions into sequences of integers.

```python
# integer encode descriptions
sequences = tokenizer.texts_to_sequences(desc_text)
```

The model will require all output sequences to have the same length for training. We can achieve this by padding all encoded sequences to have the same length as the longest encoded sequence. We can pad the sequences with 0 values after the list of words. Keras provides the pad_sequences() function to pad the sequences.

```python
from keras.preprocessing.sequence import pad_sequences
# pad all sequences to a fixed length
max_length = max(len(s) for s in sequences)
print('Description Length: %d' % max_length)
padded = pad_sequences(sequences, maxlen=max_length, padding='post')
```

Finally, we can one hot encode the padded sequences to have one sparse vector for each word in the sequence. Keras provides the to_categorical() function to perform this operation.

```python
from keras.utils import to_categorical
# one hot encode
y = to_categorical(padded, num_classes=vocab_size)
```

Once encoded, we can ensure that the sequence output data has the right shape for the model.

```python
y = y.reshape((len(descriptions), max_length, vocab_size))
print(y.shape)
```
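To make the resulting shape concrete without Keras, here is a NumPy-only sketch of the same two steps (post-padding with 0, then one hot encoding every position). pad_post() and one_hot() are hypothetical helpers that mimic, but are not, pad_sequences(padding='post') and to_categorical(). The shape also shows why this output is heavy: at 32-bit floats, an array of shape (8092, 28, 4485) needs 8,092 x 28 x 4,485 x 4 bytes, or roughly 4 GB.

```python
import numpy as np

def pad_post(sequences, max_length):
    """Post-pad integer sequences with zeros to max_length.

    Assumes no sequence is longer than max_length (true when max_length is
    the length of the longest sequence, as in the tutorial).
    """
    padded = np.zeros((len(sequences), max_length), dtype=np.int32)
    for row, seq in enumerate(sequences):
        padded[row, :len(seq)] = seq
    return padded

def one_hot(padded, num_classes):
    """One hot encode a 2D integer array to shape (samples, length, num_classes)."""
    return np.eye(num_classes, dtype=np.float32)[padded]

sequences = [[3, 1, 2], [4, 2]]
y = one_hot(pad_post(sequences, max_length=4), num_classes=5)
print(y.shape)  # (2, 4, 5)
```

Padding positions are encoded as a one hot vector for index 0, which is why the vocabulary size is one larger than the number of words.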

Putting all of this together, the complete example is listed below.

```python
from numpy import array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load clean descriptions into memory
def load_clean_descriptions(filename):
    doc = load_doc(filename)
    descriptions = dict()
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # split id from description
        image_id, image_desc = tokens[0], tokens[1:]
        # store
        descriptions[image_id] = ' '.join(image_desc)
    return descriptions

descriptions = load_clean_descriptions('descriptions.txt')
print('Loaded %d' % (len(descriptions)))
# extract all text
desc_text = list(descriptions.values())
# prepare tokenizer
tokenizer = Tokenizer()
tokenizer.fit_on_texts(desc_text)
vocab_size = len(tokenizer.word_index) + 1
print('Vocabulary Size: %d' % vocab_size)
# integer encode descriptions
sequences = tokenizer.texts_to_sequences(desc_text)
# pad all sequences to a fixed length
max_length = max(len(s) for s in sequences)
print('Description Length: %d' % max_length)
padded = pad_sequences(sequences, maxlen=max_length, padding='post')
# one hot encode
y = to_categorical(padded, num_classes=vocab_size)
y = y.reshape((len(descriptions), max_length, vocab_size))
print(y.shape)
```

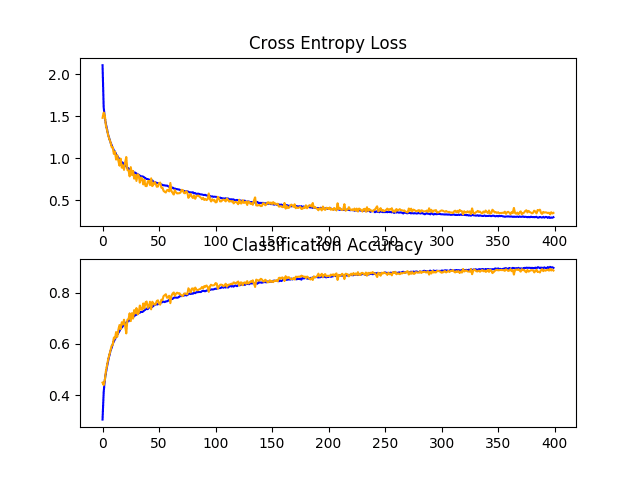

Running the example first prints the number of loaded image descriptions (8,092, one per photo), the vocabulary size (4,485, which is the 4,484 unique words plus one for the integer 0 reserved for padding), the length of the longest description (28 words), and finally the shape of the data for fitting a prediction model in the form [samples, sequence length, features].

```
Loaded 8092
Vocabulary Size: 4485
Description Length: 28
(8092, 28, 4485)
```

As mentioned, outputting the entire sequence may be challenging for the model.

We will look at a simpler model in the next section.

Word-By-Word Model

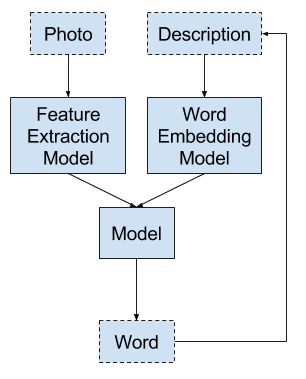

A simpler model for generating a caption for photographs is to generate one word given both the image as input and the last word generated.

This model would then have to be called repeatedly to generate each word in the description, with previous predictions as input.

Using the previous word as input gives the model a forced context for predicting the next word in the sequence.

This is the model used in prior research, such as:

A word embedding layer can be used to represent the input words. Like the feature extraction model for the photos, this too can be pre-trained either on a large corpus or on the dataset of all descriptions.

The model would take the sequence of words generated so far as input, padded to a fixed length equal to the maximum length of descriptions in the dataset.

The model must be started with something. One approach is to surround each photo description with special tags to signal the start and end of the description, such as ‘STARTDESC’ and ‘ENDDESC’.

For example, the description:

```
boy rides horse
```

Would become:

```
STARTDESC boy rides horse ENDDESC
```

This would then be fed to the model, with the same image as input each time, as the following input-output word sequence pairs:

```
Input (X),                       Output (y)
STARTDESC,                       boy
STARTDESC, boy,                  rides
STARTDESC, boy, rides,           horse
STARTDESC, boy, rides, horse     ENDDESC
```
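The expansion in the table can be sketched in plain Python. The make_pairs() helper and the toy word_index below are hypothetical; the zero pre-padding mirrors the default padding='pre' of Keras' pad_sequences(). If you build word_index with the Keras Tokenizer instead, note that it lowercases text by default, so the tags would be stored as ‘startdesc’ and ‘enddesc’.

```python
def make_pairs(description, word_index, max_length):
    """Turn one description into (pre-padded input, next word) integer pairs.

    Assumptions: word_index maps every word, including the STARTDESC and
    ENDDESC tags, to an integer >= 1 (0 is reserved for padding), and
    max_length is at least the number of words plus one.
    """
    words = ['STARTDESC'] + description.split() + ['ENDDESC']
    seq = [word_index[w] for w in words]
    pairs = []
    for i in range(1, len(seq)):
        in_seq, out_word = seq[:i], seq[i]
        # pre-pad with zeros so the most recent word is always last
        padded = [0] * (max_length - len(in_seq)) + in_seq
        pairs.append((padded, out_word))
    return pairs

word_index = {'STARTDESC': 1, 'ENDDESC': 2, 'boy': 3, 'rides': 4, 'horse': 5}
pairs = make_pairs('boy rides horse', word_index, max_length=5)
for x, y in pairs:
    print(x, '->', y)
# [0, 0, 0, 0, 1] -> 3
# [0, 0, 0, 1, 3] -> 4
# [0, 0, 1, 3, 4] -> 5
# [0, 1, 3, 4, 5] -> 2
```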

The data preparation would begin much the same as was described in the previous section.

Each description must be integer encoded. After encoding, the sequences are split into multiple input and output pairs and only the output word (y) is one hot encoded. This is because the model is only required to predict the probability distribution of one word at a time.

The code is the same up to the point where we calculate the maximum length of sequences.

```python
...
descriptions = load_clean_descriptions('descriptions.txt')
print('Loaded %d' % (len(descriptions)))
# extract all text
desc_text = list(descriptions.values())
# prepare tokenizer
tokenizer = Tokenizer()
tokenizer.fit_on_texts(desc_text)
vocab_size = len(tokenizer.word_index) + 1
print('Vocabulary Size: %d' % vocab_size)
# integer encode descriptions
sequences = tokenizer.texts_to_sequences(desc_text)
# determine the maximum sequence length
max_length = max(len(s) for s in sequences)
print('Description Length: %d' % max_length)
```

Next, we split each integer encoded sequence into input and output pairs.

Let’s step through a single sequence called seq at index i in the sequence, where i >= 1.

First, we take the first i words (seq[:i]) as the input sequence and the word at index i (seq[i]) as the output word.

```python
# split into input and output pair
in_seq, out_seq = seq[:i], seq[i]
```

Next, the input sequence is padded to the maximum length of the input sequences. Pre-padding is used (the default) so that new words appear at the end of the sequence, instead of at the beginning of the input.

```python
# pad input sequence
in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
```

The output word is one hot encoded, much like in the previous section.

```python
# encode output sequence
out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
```

We can put all of this together into a complete example to prepare description data for the word-by-word model.

```python
from numpy import array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load clean descriptions into memory
def load_clean_descriptions(filename):
    doc = load_doc(filename)
    descriptions = dict()
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # split id from description
        image_id, image_desc = tokens[0], tokens[1:]
        # store
        descriptions[image_id] = ' '.join(image_desc)
    return descriptions

descriptions = load_clean_descriptions('descriptions.txt')
print('Loaded %d' % (len(descriptions)))
# extract all text
desc_text = list(descriptions.values())
# prepare tokenizer
tokenizer = Tokenizer()
tokenizer.fit_on_texts(desc_text)
vocab_size = len(tokenizer.word_index) + 1
print('Vocabulary Size: %d' % vocab_size)
# integer encode descriptions
sequences = tokenizer.texts_to_sequences(desc_text)
# determine the maximum sequence length
max_length = max(len(s) for s in sequences)
print('Description Length: %d' % max_length)

X, y = list(), list()
for img_no, seq in enumerate(sequences):
    # split one sequence into multiple X,y pairs
    for i in range(1, len(seq)):
        # split into input and output pair
        in_seq, out_seq = seq[:i], seq[i]
        # pad input sequence
        in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
        # encode output sequence
        out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
        # store
        X.append(in_seq)
        y.append(out_seq)

# convert to numpy arrays
X, y = array(X), array(y)
print(X.shape)
print(y.shape)
```

Running the example prints the same statistics as before, as well as the shapes of the resulting encoded input and output sequences.

Note that the image inputs must follow exactly the same ordering, so that the same photo is paired with every example drawn from a single description. One way to do this is to load the photo once and store a copy of it for each example prepared from that description.
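To make the pairing concrete, here is a minimal NumPy-only sketch of the same word-by-word split for a single description. The function name `word_by_word_examples` is hypothetical; it imitates the Keras defaults used above (`pad_sequences()` pads and truncates at the front, `to_categorical()` one-hot encodes) rather than calling them, and it appends one copy of the photo for every input-output pair.

```python
import numpy as np


def word_by_word_examples(seq, photo, max_length, vocab_size):
    """Hypothetical helper: split one integer-encoded description into
    (photo, padded prefix) -> next-word examples, one photo copy per example."""
    X_img, X_seq, y = [], [], []
    for i in range(1, len(seq)):
        prefix, next_word = seq[:i], seq[i]
        # imitate pad_sequences defaults: keep the last max_length tokens
        # and left-pad with zeros ('pre' truncating and padding)
        prefix = prefix[-max_length:]
        padded = np.zeros(max_length, dtype=np.int32)
        padded[max_length - len(prefix):] = prefix
        # imitate to_categorical: one-hot encode the next word
        one_hot = np.zeros(vocab_size, dtype=np.float32)
        one_hot[next_word] = 1.0
        # the same photo is repeated for every example from this description
        X_img.append(photo)
        X_seq.append(padded)
        y.append(one_hot)
    return np.array(X_img), np.array(X_seq), np.array(y)


# a 4-word description yields 3 examples, all paired with the same photo
photo = np.ones(4, dtype=np.float32)  # stand-in for real image data
X_img, X_seq, y = word_by_word_examples([1, 5, 7, 2], photo, max_length=5, vocab_size=8)
print(X_img.shape, X_seq.shape, y.shape)  # (3, 4) (3, 5) (3, 8)
print(X_seq[2], y[2].argmax())            # [0 0 1 5 7] 2
```

Because the photo is appended inside the inner loop, the image array and the word arrays always have the same number of rows, in the same order.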

```
Loaded 8092
Vocabulary Size: 4485
Description Length: 28
(66456, 28)
(66456, 4485)
```

Progressive Loading

The Flickr8K dataset of photos and descriptions can fit into RAM if you have a lot of it (e.g. 8 gigabytes or more), which most modern systems do.

This is fine if you want to fit a deep learning model using the CPU.

Alternatively, if you want to fit a model using a GPU, you will likely not be able to fit the data into the memory of an average GPU video card.
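A rough back-of-the-envelope calculation shows why memory becomes a concern. The sketch below assumes 4-byte float32 values and reuses the shapes printed earlier (66,456 examples and a 4,485-word vocabulary), plus 224x224 RGB photos with one copy of the raw pixels per example, as the generator developed below produces within each batch. It is an estimate, not a measurement, and the helper name `array_bytes` is hypothetical.

```python
from math import prod


def array_bytes(shape, bytes_per_value=4):
    """Hypothetical helper: bytes needed for a dense array (float32 by default)."""
    return prod(shape) * bytes_per_value


n_examples, vocab_size = 66456, 4485
# one-hot encoded output words, one row per example
y_bytes = array_bytes((n_examples, vocab_size))
# raw 224x224x3 pixels, with one copy of the photo per example
pixel_bytes = array_bytes((n_examples, 224, 224, 3))

print(f"one-hot outputs: {y_bytes / 1e9:.2f} GB")               # 1.19 GB
print(f"photo pixels per example: {pixel_bytes / 1e9:.1f} GB")  # 40.0 GB
```

Under these assumptions, the repeated photo pixels dominate the total by far, which is why loading them on demand is attractive.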

One solution is to progressively load the photos and descriptions as-needed by the model.

Keras supports progressively loaded datasets through the fit_generator() function on the model. A generator, in this context, is a function that yields batches of samples for the model to train on. This can be as simple as a standalone generator function; calling it creates a generator object, which is passed to fit_generator() when fitting the model. (In the Keras bundled with TensorFlow 2, fit_generator() is deprecated and fit() accepts a generator directly.)
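As a minimal illustration of the idea (plain Python, no Keras), the hypothetical `batch_generator()` below yields fixed-size batches from a list. Calling the function does not run its body; it returns a generator object, and each call to `next()` resumes execution up to the next `yield`. It is this generator object that a training loop draws batches from.

```python
def batch_generator(samples, batch_size):
    """Hypothetical example: yield successive batches (a single pass only)."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


gen = batch_generator(list(range(10)), batch_size=4)  # creates a generator object
print(next(gen))  # [0, 1, 2, 3]
print(next(gen))  # [4, 5, 6, 7]
print(next(gen))  # [8, 9]
```

Note that the final batch is smaller than `batch_size`, and that this version stops after one pass over the samples.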

As a reminder, a model is fit for multiple epochs, where one epoch is one pass through the entire training dataset, such as all photos. Each epoch consists of multiple batches of examples, and the model weights are updated at the end of each batch.

A generator must create and yield one batch of examples at a time. For example, the average sentence length in the dataset is about 11 words, so each photo will yield roughly 10 examples for fitting the model (a description of n words produces n-1 input-output pairs), and two photos will yield about 20 examples on average. A good default batch size for modern hardware may be 32 examples, which is about 3 photos' worth of examples.
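The batch arithmetic can be written down as a few small, hypothetical helpers. They assume, as in the word-by-word code earlier, that a description of n words produces n - 1 input-output pairs, and that the last batch of an epoch may be smaller than the batch size.

```python
import math


def examples_per_description(n_words):
    # a description of n words yields n - 1 (prefix, next word) pairs
    return max(n_words - 1, 0)


def photos_per_batch(batch_size, avg_words):
    # how many photos' worth of examples fit in one batch
    return batch_size / examples_per_description(avg_words)


def batches_per_epoch(n_examples, batch_size):
    # one epoch is one pass over all examples; round up for the last partial batch
    return math.ceil(n_examples / batch_size)


print(examples_per_description(11))  # 10
print(photos_per_batch(32, 11))      # 3.2
print(batches_per_epoch(66456, 32))  # 2077
```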

We can write a custom generator to load a few photos and return the samples as a single batch.

Let’s assume we are working with the word-by-word model described in the previous section, which expects a sequence of words and a prepared image as input and predicts a single word.

Let’s design a data generator that, given a dictionary mapping image identifiers to clean descriptions, a fitted tokenizer, and a maximum sequence length, yields one image’s worth of examples for each batch.

A generator must loop forever and yield each batch of samples. If generators and yield are new concepts for you, consider reading an introduction to Python generators first.

We can loop forever with a while loop and within this, loop over each image in the image directory. For each image filename, we can load the image and create all of the input-output sequence pairs from the image’s description.
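The reason for the outer while loop can be seen in a small, self-contained comparison (the function names are hypothetical). A generator that makes a single pass is exhausted after the last item, and any further `next()` call raises `StopIteration`, leaving the training loop with no more batches. Wrapping the pass in an infinite loop lets the caller keep drawing batches for as many epochs as it requests.

```python
def single_pass(items):
    # stops after one pass: a further next() raises StopIteration
    for item in items:
        yield item


def loop_forever(items):
    # restarts from the first item after every pass, like data_generator()
    while True:
        for item in items:
            yield item


photos = ["a.jpg", "b.jpg"]
endless = loop_forever(photos)
print([next(endless) for _ in range(5)])  # ['a.jpg', 'b.jpg', 'a.jpg', 'b.jpg', 'a.jpg']

once = single_pass(photos)
print([next(once) for _ in range(2)])     # ['a.jpg', 'b.jpg']
try:
    next(once)
except StopIteration:
    print("single_pass is exhausted")
```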

Below is the data generator function.

```python
from os import listdir

def data_generator(mapping, tokenizer, max_length):
    # loop for ever over images
    directory = 'Flicker8k_Dataset'
    while 1:
        for name in listdir(directory):
            # load an image from file
            filename = directory + '/' + name
            image, image_id = load_photo(filename)
            # create word sequences
            desc = mapping[image_id]
            in_img, in_seq, out_word = create_sequences(tokenizer, max_length, desc, image)
            yield [[in_img, in_seq], out_word]
```

You could extend it to take the name of the dataset directory as a parameter.

The generator yields the inputs (X) and outputs (y) for the model as a pair. The input is a list of two items: the input images and the encoded word sequences. The outputs are one-hot encoded words.

You can see that it calls a function called load_photo() to load a single photo and return the pixels and image identifier. This is a simplified version of the photo loading function developed at the beginning of this tutorial.

```python
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input

# load a single photo intended as input for the VGG feature extractor model
def load_photo(filename):
    image = load_img(filename, target_size=(224, 224))
    # convert the image pixels to a numpy array
    image = img_to_array(image)
    # reshape data for the model
    image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
    # prepare the image for the VGG model
    image = preprocess_input(image)[0]
    # get image id
    image_id = filename.split('/')[-1].split('.')[0]
    return image, image_id
```
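For intuition about the `preprocess_input()` step, the NumPy sketch below approximates what Keras does for VGG16 (its 'caffe' preprocessing mode) on a channels-last image: reorder the channels from RGB to BGR and subtract the per-channel ImageNet mean pixel, with no scaling. The function name `vgg16_caffe_preprocess` is hypothetical; in practice, call `preprocess_input()` itself.

```python
import numpy as np

# ImageNet mean pixel in BGR order, as used by Keras' 'caffe' preprocessing
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg16_caffe_preprocess(rgb_image):
    """Hypothetical approximation of VGG16 preprocess_input for a
    channels-last float array of shape (height, width, 3)."""
    bgr = rgb_image[..., ::-1]      # RGB -> BGR
    return bgr - IMAGENET_MEAN_BGR  # zero-centre each channel, no scaling


pixel = np.array([[[255.0, 128.0, 0.0]]], dtype=np.float32)  # one RGB pixel
out = vgg16_caffe_preprocess(pixel)
# values: B = 0 - 103.939, G = 128 - 116.779, R = 255 - 123.68
print(out)
```

This is why prepared photos contain negative and non-integer values rather than raw 0-255 pixels.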

Another function named create_sequences() is called to create sequences of images, input sequences of words, and output words that we then yield to the caller. This is a function that includes everything discussed in the previous section, and also creates copies of the image pixels, one for each input-output pair created from the photo’s description.

```python
from numpy import array
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical

# create sequences of images, input sequences and output words for an image
def create_sequences(tokenizer, max_length, desc, image):
    Ximages, XSeq, y = list(), list(), list()
    vocab_size = len(tokenizer.word_index) + 1
    # integer encode the description
    seq = tokenizer.texts_to_sequences([desc])[0]
    # split one sequence into multiple X,y pairs
    for i in range(1, len(seq)):
        # select
        in_seq, out_seq = seq[:i], seq[i]
        # pad input sequence
        in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
        # encode output sequence
        out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
        # store one copy of the image per input-output pair
        Ximages.append(image)
        XSeq.append(in_seq)
        y.append(out_seq)
    Ximages, XSeq, y = array(Ximages), array(XSeq), array(y)
    return Ximages, XSeq, y
```

Prior to preparing the model that uses the data generator, we must load the clean descriptions, prepare the tokenizer, and calculate the maximum sequence length. All three must be passed to data_generator() as parameters.

We use the same load_clean_descriptions() function developed previously and a new create_tokenizer() function that simplifies the creation of the tokenizer.
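To see why the vocabulary size is computed as `len(tokenizer.word_index) + 1`, here is a deliberately simplified, pure-Python stand-in for the Keras `Tokenizer` (the function names are hypothetical, and it skips Keras' punctuation filtering and other options). Like Keras, it assigns indices starting at 1 with the most frequent words first, which leaves index 0 free for the padding added by `pad_sequences()`.

```python
from collections import Counter


def fit_word_index(texts):
    """Hypothetical simplified tokenizer: map words to 1-based indices,
    most frequent first (ties keep first-seen order)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    ordered = sorted(counts, key=lambda w: counts[w], reverse=True)
    return {word: i for i, word in enumerate(ordered, start=1)}


def texts_to_sequences(word_index, texts):
    # words missing from the index are dropped
    return [[word_index[w] for w in t.lower().split() if w in word_index] for t in texts]


captions = ["a dog runs on grass", "a dog jumps"]
word_index = fit_word_index(captions)
vocab_size = len(word_index) + 1  # +1 because index 0 is reserved for padding
print(word_index)  # {'a': 1, 'dog': 2, 'runs': 3, 'on': 4, 'grass': 5, 'jumps': 6}
print(vocab_size)  # 7
print(texts_to_sequences(word_index, ["a dog jumps"]))  # [[1, 2, 6]]
```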

Tying all of this together, the complete data generator is listed below, ready to be used to train a model.

```python
from os import listdir
from numpy import array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load clean descriptions into memory
def load_clean_descriptions(filename):
    doc = load_doc(filename)
    descriptions = dict()
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # split id from description
        image_id, image_desc = tokens[0], tokens[1:]
        # store
        descriptions[image_id] = ' '.join(image_desc)
    return descriptions

# fit a tokenizer given caption descriptions
def create_tokenizer(descriptions):
    lines = list(descriptions.values())
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(lines)
    return tokenizer

# load a single photo intended as input for the VGG feature extractor model
def load_photo(filename):
    image = load_img(filename, target_size=(224, 224))
    # convert the image pixels to a numpy array
    image = img_to_array(image)
    # reshape data for the model
    image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
    # prepare the image for the VGG model
    image = preprocess_input(image)[0]
    # get image id
    image_id = filename.split('/')[-1].split('.')[0]
    return image, image_id

# create sequences of images, input sequences and output words for an image
def create_sequences(tokenizer, max_length, desc, image):
    Ximages, XSeq, y = list(), list(),list()
    vocab_size = len(tokenizer.word_index) + 1
    # integer encode the description
    seq = tokenizer.texts_to_sequences([desc])[0]
    # split one sequence into multiple X,y pairs
    for i in range(1, len(seq)):
        # select
        in_seq, out_seq = seq[:i], seq[i]
        # pad input sequence
        in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
        # encode output sequence
        out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
        # store
        Ximages.append(image)
        XSeq.append(in_seq)
        y.append(out_seq)
    Ximages, XSeq, y = array(Ximages), array(XSeq), array(y)
    return [Ximages, XSeq, y]

# data generator, intended to be used in a call to model.fit_generator()
def data_generator(descriptions, tokenizer, max_length):
    # loop for ever over images
    directory = 'Flicker8k_Dataset'
    while 1:
        for name in listdir(directory):
            # load an image from file
            filename = directory + '/' + name
            image, image_id = load_photo(filename)
            # create word sequences
            desc = descriptions[image_id]
            in_img, in_seq, out_word = create_sequences(tokenizer, max_length, desc, image)
            yield [[in_img, in_seq], out_word]

# load mapping of ids to descriptions
descriptions = load_clean_descriptions('descriptions.txt')
# integer encode sequences of words
tokenizer = create_tokenizer(descriptions)
# pad to fixed length
max_length = max(len(s.split()) for s in list(descriptions.values()))
print('Description Length: %d' % max_length)

# test the data generator
generator = data_generator(descriptions, tokenizer, max_length)
inputs, outputs = next(generator)
print(inputs[0].shape)
print(inputs[1].shape)
print(outputs.shape)
```

A data generator can be tested by calling the next() function.

We can test the generator as follows.

```python
# test the data generator
generator = data_generator(descriptions, tokenizer, max_length)
inputs, outputs = next(generator)
print(inputs[0].shape)
print(inputs[1].shape)
print(outputs.shape)
```

Running the example prints the shape of the input and output example for a single batch (e.g. 13 input-output pairs):

```
(13, 224, 224, 3)
(13, 28)
(13, 4485)
```

The generator can be used to fit a model by calling the fit_generator() function on the model (instead of fit()) and passing in the generator.

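We must also specify the number of steps (batches) per epoch. We could estimate this as (10 x training dataset size), perhaps 70,000 if 7,000 images are used for training. Keep in mind that because this generator yields one photo's worth of examples per batch, a single pass over 7,000 training photos takes 7,000 steps, so 70,000 steps amounts to roughly ten passes over the photos per epoch (about the number of word-level examples, at roughly 10 per photo).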
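Because each batch from `data_generator()` holds exactly one photo's worth of examples, steps and examples per epoch can be related with a small hypothetical helper. The per-photo example counts below are illustrative values (10 per photo), not statistics measured on the dataset.

```python
def epoch_plan(examples_per_photo, passes=1):
    """Hypothetical helper: (steps, examples) per epoch when each
    generator batch contains all examples for exactly one photo."""
    steps = len(examples_per_photo) * passes
    examples = sum(examples_per_photo) * passes
    return steps, examples


counts = [10] * 7000  # illustrative: 7,000 training photos, ~10 examples each
print(epoch_plan(counts))             # (7000, 70000): one pass over the photos
print(epoch_plan(counts, passes=10))  # (70000, 700000): steps_per_epoch=70000
```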

```python
# define model
# ...
# fit model
model.fit_generator(data_generator(descriptions, tokenizer, max_length), steps_per_epoch=70000, ...)
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Flickr8K Dataset

- Framing image description as a ranking task: data, models and evaluation metrics (Homepage)

- Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics, (PDF) 2013.

- Dataset Request Form

- Old Flickr8K Homepage

API

Summary

In this tutorial, you discovered how to prepare photos and textual descriptions ready for developing an automatic photo caption generation model.

Specifically, you learned:

- About the Flickr8K dataset comprised of more than 8,000 photos and up to 5 captions for each photo.

- How to generally load and prepare photo and text data for modeling with deep learning.

- How to specifically encode data for two different types of deep learning models in Keras.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Is this topic included in your new book?

Yes, I have a suite of chapters on developing a caption generation model.

Hi Jason, awesome content, but I am not able to understand why you used while(1):

on line number 78 of the full code.

Wouldn’t it work the same way without using while(1)?

Thanks!

def data_generator(descriptions, tokenizer, max_length):
    # loop for ever over images
    directory = 'Flicker8k_Dataset'
    while 1:  # <-- line of doubt
        for name in listdir(directory):
            # load an image from file
            filename = directory + '/' + name
            image, image_id = load_photo(filename)
            # create word sequences
            desc = descriptions[image_id]
            in_img, in_seq, out_word = create_sequences(tokenizer, max_length, desc, image)
            yield [[in_img, in_seq], out_word]

It is a Python generator, you can learn more about generators here:

https://wiki.python.org/moin/Generators

This is brilliant!!! Thanks for putting this together – thoroughly appreciated!

You’re welcome, I’m glad it helped!

Hi Jason, I find your work very helpful. Have you also implemented the bottom-up approach (dense captioning) to generating image captions?

Does this help:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hi Jason, this is the top-down approach. The bottom-up approach is different, also called dense captioning, where we identify objects in an image and then combine them to form a description.

Thanks.

Hi Jason, isn’t the data generator function supposed to call load_photo() instead of load_image()?

In the full example, the data generator does call load_photo() on line 82.

Hi Jason, I am a newbie in Python and CNN. Can I have testing source code in which I input an image and it gives output with the caption?

Yes, I will have some on the blog soon and in my new book on deep learning for NLP to be released soon.

Enjoyed reading your articles; you really explain everything in detail. Couldn’t be written much better! The article is very interesting and effective.

Note: there is a typo the first time you mention load_clean_descriptions:

mapping[image_id] = ' '.join(image_desc)

should be

descriptions[image_id] = ' '.join(image_desc)

Thanks for sharing such interesting blog.

Fixed, thanks!

Hi,

enjoy following your blog.

I’m seeing an error here:

def save_doc(descriptions, filename):
    lines = list()
    for key, desc in mapping.items():

This threw me for a bit until I saw that mapping is returned from

def load_descriptions(doc)

Fix below:

def save_doc(descriptions, filename):
    lines = list()
    for key, desc in descriptions.items():

replaces

for key, desc in mapping.items():

Ouch, I’ve fixed that example too, cheers.

Hi Jason, when I run the descriptions, I get the following error:

FileNotFoundError: [Errno 2] No such file or directory: 'Flickr8k_text/Flickr8k.token.txt'. Can you please help me with this? I am very new to deep learning.

You must download the dataset and place it in the same directory as the code.

Try running from the command line, sometimes IDEs and Notebooks can mask or introduce errors.

Hi Jason, thanks for this awesome post, I really enjoyed reading it. By the way, I think there is a typo when you talk about the number of steps per epoch. I think it should read “perhaps 70,000 if 7,000 images are used for training.”.

Thanks, fixed.

Hi Jason,

When I run dump(features, open('features.pkl', 'wb')), I get the following error: “feature.pkl is not UTF-8 encoded”

I also tried to dump the output of the predict function using only the first image.

It was like this:

{'667626_18933d713e': array([[[[ 0. , 0. , 0. , …, 0. , 10.62594891, 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
…,
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 9.41605377, 0. ]],
[[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 5.36805296],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
…,
[ 0. , 0. , 0. , …, 1.45877278, 0. , 39.37923431],
[ 0. , 0. , 0. , …, 0. , 0. , 1.39090693],
[ 0. , 0. , 0. , …, 0. , 3.93747687, 0. ]],
[[ 0. , 0. , 0. , …, 0. , 18.81423187, 0. ],
[ 0. , 0. , 0. , …, 7.79979277, 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 9.14055347],
…,
[ 0. , 0. , 0. , …, 48.84911346, 0. , 12.12792015],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 2.0710113 , 0. ]],
…,
[[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 3.75439334, 0. , …, 0. , 0. , 0. ],
[ 3.71412587, 0. , 0. , …, 0. , 0. , 0. ],
…,
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 18.80825424, 0. ],
[ 0. , 0. , 0. , …, 0. , 13.0358696 , 0. ]],
[[ 0. , 0. , 0. , …, 0. , 4.03412676, 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
…,
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0. , 7.99308109, 0. ],
[ 0. , 0. , 0. , …, 0. , 32.52854919, 0. ]],
[[ 0. , 0. , 0. , …, 0. , 33.73991013, 0. ],
[ 0. , 0. , 0. , …, 0. , 14.52160454, 0. ],
[ 0. , 0. , 0. , …, 0. , 4.05761242, 0. ],
…,
[ 0. , 0. , 0. , …, 0. , 0. , 0. ],
[ 0. , 0. , 0. , …, 0.90452403, 0. , 0. ],
[ 0. , 0. , 0. , …, 29.89839745, 38.23991394, 0. ]]]], dtype=float32)}

And I was confused about whether this result is correct or not.

Could you help me with this?

I really don’t know why my feature.pkl can save successfully.

Thank you so much.


Sorry, I have not seen that error before. Perhaps post the full error message to Stack Overflow?

Thank you for the prompt reply. I’ll try.

Hello Jason,

I’m able to download dataset, the link is unavailable. Could you please help me here.

Thanks,

Karthik

*not able to

Jason, I was able to download it now. Please ignore my comments above.

Thanks, Karthik

No problem.

You must fill out this form:

https://forms.illinois.edu/sec/1713398

Jason,

I got model-ep005-loss3.517-val_loss4.012 .

Another good article.

Thanks ,

Karthik

Very Nice!

karthik can u send me a link to download model file

Karthik can you provide link to download model file ?

Hi, Jason

I am using GPU to fit the model, but it takes too loooooooooooooong time!

About 9,300 seconds for each epoch.

My hardware: NVIDIA GTX 850M (compute capability 5.0), GPU memory 4 GiB

and my computer memory is 8 GiB

OS: Ubuntu 16.04

If I use CPU mode, I get a MemoryError:

================= Error ===============
Traceback (most recent call last):
  File "ICmodel.py", line 217, in
    X1train, X2train, ytrain = create_sequences(tokenizer, max_length, train_descriptions,train_features)
  File "ICmodel.py", line 162, in create_sequences
    return array(X1),array(X2),array(y)
MemoryError
===============End of Error==============

So I have to use my GPU to run the training program. Here is my code, modified from yours above. Is there anything wrong with my modifications?

==================== Code ====================
def data_generator(mapping, tokenizer, max_length, features):
    # loop for ever over images
    directory = 'Flickr8k_Dataset'
    while 1:
        for name in listdir(directory):
            # load an image from file
            filename = directory + '/' + name
            image_id = name.split('.')[0]
            # create word sequences
            if image_id not in mapping:
                continue
            desc_list = mapping[image_id]
            img_feature = features[image_id][0]
            in_img, in_seq, out_word = create_sequences4list(tokenizer,max_length, desc_list, img_feature)
            yield [[in_img,in_seq], out_word]

# create sequences of feature, input sequences and output words for an image
def create_sequences4list(tokenizer, max_length, desc_list, photo):
    Xfe, XSeq, y = list(), list(),list()
    vocab_size = len(tokenizer.word_index) + 1
    # integer encode the description
    for desc in desc_list:
        seq = tokenizer.texts_to_sequences([desc])[0]
        # split one sequence into multiple X,y pairs
        for i in range(1, len(seq)):
            # select
            in_seq, out_seq = seq[:i], seq[i]
            # pad input sequence
            in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
            # encode output sequence
            out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
            # store
            Xfe.append(photo)
            XSeq.append(in_seq)
            y.append(out_seq)
    Xfe, XSeq, y = array(Xfe), array(XSeq), array(y)
    return [Xfe, XSeq, y]
======================End of Code =================

Such time-consuming training is a disaster; could you give me some advice? Thanks.

You might need more RAM. Perhaps change the code to use progressive loading?

Thank you for your reply.

Is progressive loading the same as using a Python generator? What I posted above are exactly the generator function and the create_sequences function adapted for the generator. Sorry for the missing indents…

What I am confused about is whether I need to yield one description at a time or all five descriptions for one photo at once.

Good question, I think you could yield every few descriptions. Even experiment a little to see what your hardware can handle.

Hey Jason Brownlee, I used this progressive Loading with this https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/#comment-429456 tutorial.

and i’m getting this error. Can you please tell me how to define model for this particular generator ?

ValueError: Error when checking input: expected input_1 to have 2 dimensions, but got array with shape (13, 224, 224, 3)

I’m new to machine learning, Thanks for your wonderful tutorial !

I’ve managed to fix that one by adding inputs1 = Input(shape=(224, 224, 3)) and now have different error. Please help

ValueError: Error when checking target: expected dense_3 to have 4 dimensions, but got array with shape (13, 4485)

Please help on the model part. I am unable to run this. And I don’t yet have the understanding required to calculate the numbers myself

were you able to solve the issue? I am stuck with the same error

ConnectionResetError: [WinError 10054] An existing connection was forcibly closed by the remote host

Error in downloading VGG16 Model.

Can you please help me fix it?

Sorry to hear that, sounds like an internet connection issue. Perhaps try again?

Can anyone show me how to compile the VGG 16 model for the progressive loading in this example?

Thanx in advance.

Please help me define the model. I have used the data generator, which is working fine, but I am having trouble defining the model.

Perhaps you can summarize your problem in a few lines?

I need code to define the model that is used before model fitting in this code:

# define model
# …
# fit model
model.fit_generator(data_generator(descriptions, tokenizer, max_length), steps_per_epoch=70000, …)

Same here, Jason. I have been going over your ebooks to find a solution but am getting nowhere… Could you please give the code to define the model used in the progressive loading example, so that we can use it with this:

model.fit_generator(data_generator(descriptions, tokenizer, max_length), steps_per_epoch=70000, …)

same problem here please help

Have you figured it out? If yes, please can you explain!

Thanx in advance.

After progressive loading, how do I evaluate the model, and how do I generate captions for new images?

See this post:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hello Jason,

I have one question regarding your discussion. As you said, steps_per_epoch will be 10 x the training data size, i.e. 70,000. What will happen if I set steps_per_epoch to 70 instead of 70,000?

Does increasing the number of steps_per_epoch result in a better model?

Slower training. Perhaps worse model skill given the large increase in weight update frequency.

Would you say that the Whole Description Sequence Model and Word-By-Word model are RNN based?

Sure.

Hello Jason ,

I am trying to run the code you provided for extracting features from the photos in the Flickr dataset, but it shows the following error:

AttributeError: 'InputLayer' object has no attribute 'outbound_nodes'

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Have you written a tutorial on VQA? Can you suggest any Python resources where we can learn this?

What is VQA?

anyone know how to solve this error ?

ValueError: Error when checking input: expected input_1 to have 2 dimensions, but got array with shape (61317, 7, 7, 512)

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Can this code be used to prepare the image data for keras when ever I am using transfer learning?

Yes, somewhat.

Respected Sir,

I am facing the following error:

python3.7/site-packages/keras/engine/training_utils.py", line 102, in standardize_input_data
    str(len(data)) + ' arrays: ' + str(data)[:200] + '…')
ValueError: Error when checking model input: the list of Numpy arrays that you are passing to your model is not the size the model expected. Expected to see 1 array(s), but instead got the following list of 2 arrays: [array([[[[ 66.061 , 106.221 , 112.32 ],
[ 63.060997 , 97.221 , 111.32 ],
[ 57.060997 , 96.221 , 105.32 ],
…,
[ 43.060997 , 92.221 ,…

Kindly guide me…

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason, I have collected the Flickr8k dataset and done some translation into local languages, but now I want to expand my dataset. Is there any overlap between the Flickr8k and Flickr30k datasets, e.g. is 8k a subset of 30k? As can be seen, the file naming in 8k and 30k is different. Do you have any idea about that?

Sorry. I don’t know.

Hi Jason, I want to do image captioning for clothes and need a dataset for it.

If you have a dataset for this, please share it, or I would be glad if you could help me create a dataset with captions for it.

Thanks

This may help:

https://machinelearningmastery.com/faq/single-faq/where-can-i-get-a-dataset-on-___

Should I insert the Flickr8k dataset into a Jupyter notebook?

I recommend not using a notebook:

https://machinelearningmastery.com/faq/single-faq/why-dont-use-or-recommend-notebooks

Hi Jason.

I’m trying to fit the model using data generator, but getting this error:

ValueError: in user code:

    /usr/local/lib/python3.7/dist-packages/keras/engine/training.py:830 train_function *
        return step_function(self, iterator)
    /usr/local/lib/python3.7/dist-packages/keras/engine/training.py:813 run_step *
        outputs = model.train_step(data)
    /usr/local/lib/python3.7/dist-packages/keras/engine/training.py:770 train_step *
        y_pred = self(x, training=True)
    /usr/local/lib/python3.7/dist-packages/keras/engine/base_layer.py:989 __call__ *
        input_spec.assert_input_compatibility(self.input_spec, inputs, self.name)
    /usr/local/lib/python3.7/dist-packages/keras/engine/input_spec.py:197 assert_input_compatibility *
        raise ValueError('Layer ' + layer_name + ' expects ' +

    ValueError: Layer model_15 expects 2 input(s), but it received 3 input tensors. Inputs received: [, , ]

Can you please help me in this regard?

Sorry to hear that, these tips may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

How can we evaluate the image captioning model in one go?

i.e. with all metric scores calculated in one go.

Hi Pranav…The following may be of interest to you:

https://machinelearningmastery.com/how-to-evaluate-pixel-scaling-methods-for-image-classification/