32 Tips, Tricks and Hacks That You Can Use To Make Better Predictions.

The most valuable part of machine learning is predictive modeling.

This is the development of models that are trained on historical data and make predictions on new data.

And the number one question when it comes to predictive modeling is:

How can I get better results?

This cheat sheet contains my best advice distilled from years of my own application and studying top machine learning practitioners and competition winners.

With this guide, you will not only get unstuck and lift performance, you might even achieve world-class results on your prediction problems.

Let’s dive in.

Note, the structure of this guide is based on an earlier guide on improving performance for deep learning that you might find useful, titled: How To Improve Deep Learning Performance.

Machine Learning Performance Improvement Cheat Sheet

Photo by NASA, some rights reserved.

Overview

This cheat sheet is designed to give you ideas to lift performance on your machine learning problem.

All it takes is one good idea to get a breakthrough.

Find that one idea, then come back and find another.

I have divided the list into 4 sub-topics:

- Improve Performance With Data.

- Improve Performance With Algorithms.

- Improve Performance With Algorithm Tuning.

- Improve Performance With Ensembles.

The gains often get smaller the further you go down the list.

For example, a new framing of your problem or more data is often going to give you more payoff than tuning the parameters of your best performing algorithm. Not always, but in general.

1. Improve Performance With Data

You can get big wins with changes to your training data and problem definition. Perhaps even the biggest wins.

Strategy: Create new and different perspectives on your data in order to best expose the structure of the underlying problem to the learning algorithms.

Data Tactics

- Get More Data. Can you get more or better quality data? Modern nonlinear machine learning techniques like deep learning continue to improve in performance with more data.

- Invent More Data. If you can’t get more data, can you generate new data? Perhaps you can augment or permute existing data or use a probabilistic model to generate new data.

- Clean Your Data. Can you improve the signal in your data? Perhaps there are missing or corrupt observations that can be fixed or removed, or outlier values outside of reasonable ranges that can be fixed or removed in order to lift the quality of your data.

- Resample Data. Can you resample data to change the size or distribution? Perhaps you can use a much smaller sample of data for your experiments to speed things up or over-sample or under-sample observations of a specific type to better represent them in your dataset.

- Reframe Your Problem. Can you change the type of prediction problem you are solving? Reframe your data as a regression, binary or multiclass classification, time series, anomaly detection, rating, recommender, etc. type problem.

- Rescale Your Data. Can you rescale numeric input variables? Normalization and standardization of input data can result in a lift in performance on algorithms that use weighted inputs or distance measures.

- Transform Your Data. Can you reshape your data distribution? Making input data more Gaussian or passing it through an exponential function may better expose features in the data to a learning algorithm.

- Project Your Data. Can you project your data into a lower dimensional space? You can use an unsupervised clustering or projection method to create an entirely new compressed representation of your dataset.

- Feature Selection. Are all input variables equally important? Use feature selection and feature importance methods to create new views of your data to explore with modeling algorithms.

- Feature Engineering. Can you create and add new data features? Perhaps there are attributes that can be decomposed into multiple new values (like categories, dates or strings) or attributes that can be aggregated to signify an event (like a count, binary flag or statistical summary).
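As a rough illustration of the Rescale Your Data tactic, the sketch below normalizes and standardizes a single numeric column in plain Python. The function names and the example column are made up for illustration. In practice you would likely use a library implementation, and you would compute the minimum, maximum, mean and standard deviation on the training data only.

```python
from statistics import mean, pstdev

def normalize(values):
    """Rescale values to the range [0, 1] (min-max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to rescale
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Rescale values to zero mean and unit standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant column: nothing to rescale
    return [(v - mu) / sigma for v in values]

# Hypothetical input column (for example, a size measurement).
column = [50.0, 80.0, 120.0, 200.0]
print(normalize(column))
print(standardize(column))
```

Each rescaled column is a new view of the data that can be evaluated alongside the raw values.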

Outcome: You should now have a suite of new views and versions of your dataset.

Next: You can evaluate the value of each with predictive modeling algorithms.

2. Improve Performance With Algorithms

Machine learning is all about algorithms.

Strategy: Identify the algorithms and data representations that perform above a baseline of performance and better than average. Remain skeptical of results and design experiments that make it hard to fool yourself.

Algorithm Tactics

- Resampling Method. What resampling method is used to estimate skill on new data? Use a method and configuration that makes the best use of available data. The k-fold cross-validation method with a hold out validation dataset might be a best practice.

- Evaluation Metric. What metric is used to evaluate the skill of predictions? Use a metric that best captures the requirements of the problem and the domain. It probably isn’t classification accuracy.

- Baseline Performance. What is the baseline performance for comparing algorithms? Use a random algorithm or a zero rule algorithm (predict mean or mode) to establish a baseline by which to rank all evaluated algorithms.

- Spot Check Linear Algorithms. What linear algorithms work well? Linear methods are often more biased, are easy to understand and are fast to train. They are preferred if you can achieve good results. Evaluate a diverse suite of linear methods.

- Spot Check Nonlinear Algorithms. What nonlinear algorithms work well? Nonlinear algorithms often require more data, have greater complexity but can achieve better performance. Evaluate a diverse suite of nonlinear methods.

- Steal from Literature. What algorithms are reported in the literature to work well on your problem? Perhaps you can get ideas of algorithm types or extensions of classical methods to explore on your problem.

- Standard Configurations. What are the standard configurations for the algorithms being evaluated? Each algorithm needs an opportunity to do well on your problem. This does not mean tune the parameters (yet) but it does mean to investigate how to configure each algorithm well and give it a fighting chance in the algorithm bake-off.
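The resampling, metric and baseline tactics above can be sketched together in a few lines. This is a minimal example, assuming a classification problem with made-up labels, classification accuracy as the metric, and a zero rule baseline that always predicts the most common class. All function names are hypothetical helpers, not a library API.

```python
import random
from collections import Counter

def k_fold_indices(n_rows, k, seed=1):
    """Shuffle row indices and split them into k nearly equal folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def zero_rule_classifier(train_labels):
    """Return the most common training label; it is predicted for every row."""
    return Counter(train_labels).most_common(1)[0][0]

def accuracy(actual, predicted):
    """Fraction of predictions that match the actual labels."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)

def evaluate_baseline(labels, k=5):
    """Estimate zero rule accuracy with k-fold cross-validation."""
    scores = []
    for fold in k_fold_indices(len(labels), k):
        held_out = set(fold)
        train = [y for i, y in enumerate(labels) if i not in held_out]
        test = [labels[i] for i in fold]
        majority = zero_rule_classifier(train)
        scores.append(accuracy(test, [majority] * len(test)))
    return scores

# Made-up imbalanced labels: 80 rows of class 0 and 20 rows of class 1.
labels = [0] * 80 + [1] * 20
scores = evaluate_baseline(labels, k=5)
print("Baseline accuracy per fold:", scores)
print("Mean baseline accuracy: %.3f" % (sum(scores) / len(scores)))
```

On this made-up data the baseline already scores high on accuracy without learning anything, which is one reason accuracy is often the wrong metric for imbalanced problems. Any algorithm you spot check should beat the baseline on the metric you choose.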

Outcome: You should now have a short list of well-performing algorithms and data representations.

Next: The next step is to improve performance with algorithm tuning.

3. Improve Performance With Algorithm Tuning

Algorithm tuning might be where you spend most of your time. It can be very time-consuming. You can often unearth one or two well-performing algorithms quickly from spot-checking. Getting the most from those algorithms can take days, weeks or months.

Strategy: Get the most out of well-performing machine learning algorithms.

Tuning Tactics

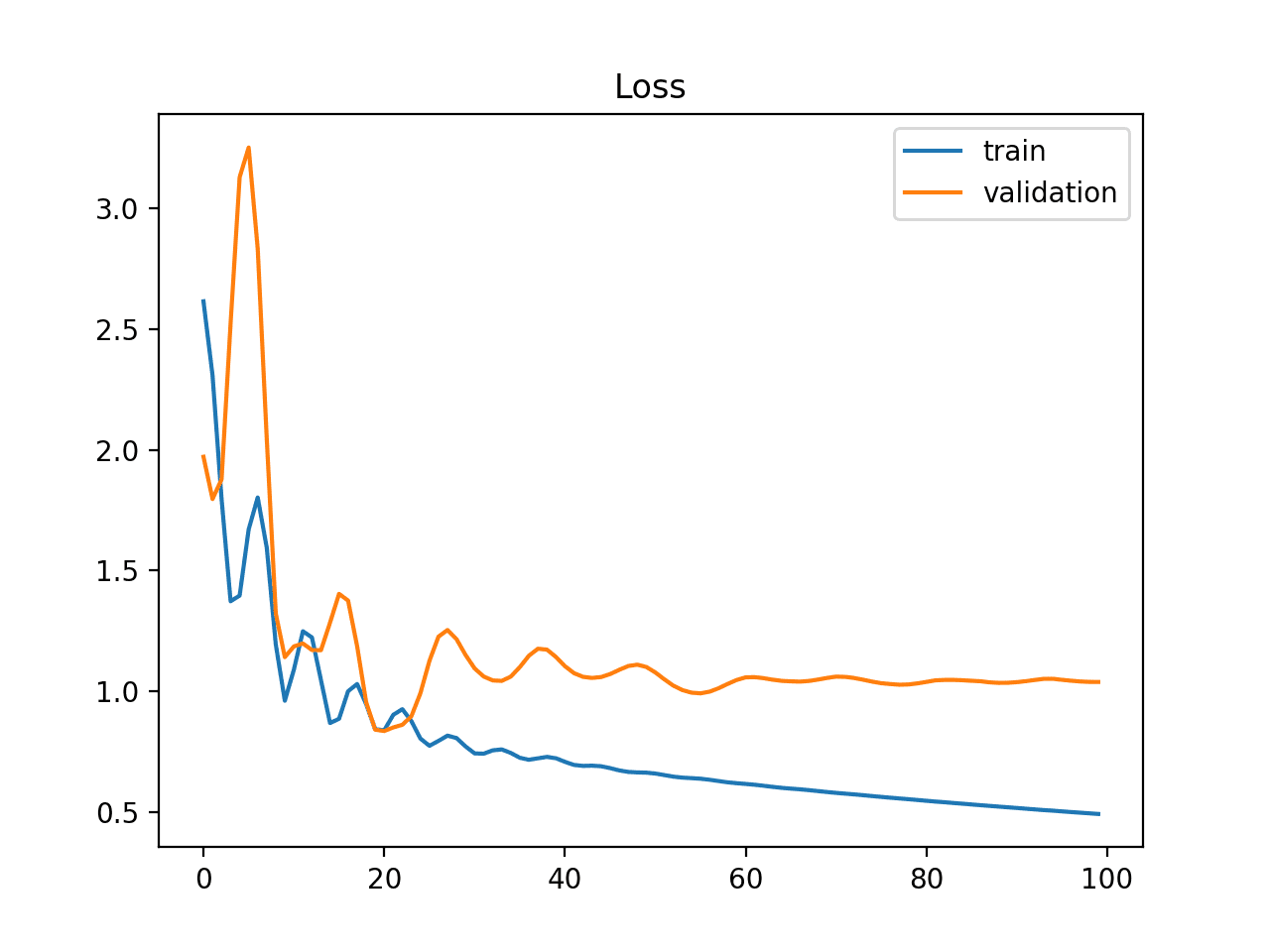

- Diagnostics. What diagnostics can you review about your algorithm? Perhaps you can review learning curves to understand whether the method is overfitting or underfitting the problem, and then correct. Different algorithms may offer different visualizations and diagnostics. Review what the algorithm is predicting right and wrong.

- Try Intuition. What does your gut tell you? If you fiddle with parameters for long enough and the feedback cycle is short, you can develop an intuition for how to configure an algorithm on a problem. Try this out and see if you can come up with new parameter configurations to try on your larger test harness.

- Steal from Literature. What parameters or parameter ranges are used in the literature? Evaluating the performance of standard parameters is a great place to start any tuning activity.

- Random Search. What parameters can use random search? Perhaps you can use random search of algorithm hyperparameters to expose configurations that you would never think to try.

- Grid Search. What parameters can use grid search? Perhaps there are grids of standard hyperparameter values that you can enumerate to find good configurations, then repeat the process with finer and finer grids.

- Optimize. What parameters can you optimize? Perhaps there are parameters like structure or learning rate that can be tuned using a direct search procedure (like pattern search) or stochastic optimization (like a genetic algorithm).

- Alternate Implementations. What other implementations of the algorithm are available? Perhaps an alternate implementation of the method can achieve better results on the same data. Each algorithm has a myriad of micro-decisions that must be made by the algorithm implementor. Some of these decisions may affect skill on your problem.

- Algorithm Extensions. What are common extensions to the algorithm? Perhaps you can lift performance by evaluating common or standard extensions to the method. This may require implementation work.

- Algorithm Customizations. What customizations can be made to the algorithm for your specific case? Perhaps there are modifications that you can make to the algorithm for your data, from loss function, internal optimization methods to algorithm specific decisions.

- Contact Experts. What do algorithm experts recommend in your case? Write a short email summarizing your prediction problem and what you have tried to one or more expert academics on the algorithm. This may reveal leading edge work or academic work previously unknown to you with new or fresh ideas.
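To make the Grid Search and Random Search tactics concrete, here is a small sketch in plain Python. The `score` function is a stand-in that I made up so the example runs on its own; in a real test harness it would train a model with the given hyperparameters and return its estimated skill. The parameter names and ranges are also assumptions chosen for illustration.

```python
import itertools
import random

def score(learning_rate, depth):
    """Stand-in for model skill; a real harness would train and evaluate a model."""
    return -(learning_rate - 0.1) ** 2 - 0.01 * (depth - 6) ** 2

def grid_search(grid):
    """Evaluate every combination in the grid and return (best score, best params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        result = score(**params)
        if best is None or result > best[0]:
            best = (result, params)
    return best

def random_search(n_trials, seed=1):
    """Sample configurations at random from assumed ranges and keep the best."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, 0),  # log-uniform between 1e-4 and 1
            "depth": rng.randint(1, 12),
        }
        result = score(**params)
        if best is None or result > best[0]:
            best = (result, params)
    return best

grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "depth": [2, 4, 6, 8]}
print("Grid search best:", grid_search(grid))
print("Random search best:", random_search(n_trials=50))
```

Grid search costs one evaluation per combination, so it grows quickly with the number of parameters. Random search lets you fix the budget up front with `n_trials`.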

Outcome: You should now have a short list of highly tuned algorithms on your machine learning problem, maybe even just one.

Next: One or more models could be finalized at this point and used to make predictions or put into production. Further lifts in performance can be gained by combining the predictions from multiple models.

4. Improve Performance With Ensembles

You can combine the predictions from multiple models. After algorithm tuning, this is the next big area for improvement. In fact, you can often get good performance from combining the predictions from multiple “good enough” models rather than from multiple highly tuned (and fragile) models.

Strategy: Combine the predictions of multiple well-performing models.

Ensemble Tactics

- Blend Model Predictions. Can you combine the predictions from multiple models directly? Perhaps you could use the same or different algorithms to make multiple models. Take the mean or mode from the predictions of multiple well-performing models.

- Blend Data Representations. Can you combine predictions from models trained on different data representations? You may have many different projections of your problem which can be used to train well-performing algorithms, whose predictions can then be combined.

- Blend Data Samples. Can you combine models trained on different views of your data? Perhaps you can create multiple subsamples of your training data and train a well-performing algorithm, then combine predictions. This is called bootstrap aggregation or bagging and works best when the predictions from each model are skillful but in different ways (uncorrelated).

- Correct Predictions. Can you correct the predictions of well-performing models? Perhaps you can explicitly correct predictions or use a method like boosting to learn how to correct prediction errors.

- Learn to Combine. Can you use a new model to learn how to best combine the predictions from multiple well-performing models? This is called stacked generalization or stacking and often works well when the submodels are skillful but in different ways and the aggregator model is a simple linear weighting of the predictions. This process can be repeated multiple layers deep.
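The simplest of these tactics, Blend Model Predictions, can be sketched as follows. This is a minimal example with made-up predictions from three hypothetical models: the mean is used for regression and the mode (majority vote) for classification. The function names are my own, not a library API.

```python
from collections import Counter
from statistics import mean

def blend_regression(predictions_per_model):
    """Average the numeric predictions of several models, row by row."""
    return [mean(row) for row in zip(*predictions_per_model)]

def blend_classification(predictions_per_model):
    """Take the most common predicted label (the mode) for each row."""
    return [Counter(row).most_common(1)[0][0] for row in zip(*predictions_per_model)]

# Made-up predictions from three hypothetical models on four rows.
regression_preds = [
    [10.0, 20.0, 30.0, 40.0],
    [12.0, 18.0, 33.0, 38.0],
    [11.0, 22.0, 27.0, 42.0],
]
class_preds = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [1, 1, 1, 0],
]
print(blend_regression(regression_preds))
print(blend_classification(class_preds))
```

With an even number of models a vote can tie, so you may prefer an odd number of models or a blend of predicted probabilities instead of labels.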

Outcome: You should have one or more ensembles of well-performing models that outperform any single model.

Next: One or more ensembles could be finalized at this point and used to make predictions or put into production.

Final Word

This cheat sheet is jam-packed with ideas to try to improve performance on your problem.

How To Get Started

You do not need to do everything. You just need one good idea to get a lift in performance.

Here’s how to handle the overwhelm:

- Pick one group:

  - Data.

  - Algorithms.

  - Tuning.

  - Ensembles.

- Pick one method from the group.

- Pick one thing to try from the chosen method.

- Compare the results, keep if there was an improvement.

- Repeat.

Share Your Results

Did you find this post useful?

Did you get that one idea or method that made a difference?

Let me know, leave a comment! I would love to hear about it.

very useful, as always!

Very Helpful Tips!

Glad to hear it Mellon.

What tactics could be used for Multi-Class classification problems?

Most classification machine learning algorithms support multi-class classification.

Perhaps start with a decision tree?

Very useful. Many thanks Jason.

As a person who is always in search of learning something new I am only just starting on Python/Machine Learning/Analytics.

I am currently looking for a basic discussion as I am trying to get a clearer basic understanding of what is involved. I have done a bit of reading, but could do with a demystifying conversation.

If it is OK with you, could you kindly explain how machine learning fits in with statistics, and where Python algorithms and Python-related libraries come into play?

Great question Benson,

Machine learning could be thought of as applied statistics with computers and much larger datasets. The useful part of machine learning is predictive modeling, as distinct from descriptive modeling often performed in statistics.

We do not want to implement methods every time a new model is needed or new project is started. Therefore we use robust standard implementations. The Python library scikit-learn provides industry standard implementations that we can use for R&D and operational models.

I hope that helps?

Excellent post as usual. I really like how concise, clear and precise your articles are, they really help getting the big picture that I missed while I was a Master student. Keep up the good work!

Thanks Mathieu, kind of you to say.

I’m here to help if you have any questions.

Danke.

I’m glad it helped.

This is amazing info! Your blog is the best machine learning resource I have ever seen, congratulations. I will buy your book next month, it must be really useful. Thanks!

Thanks Ivan. Kind of you to say!

Hi Jason,

I’m playing around with soil spectroscopy and trying to correlate the spectra with reference method data. When I had few samples (less than 100) I got an r2 over 70, now with over 4000 samples it's down to 60… weird huh? To me this means that the property I’m trying to measure is not really correlated with the signal, so increasing the number of samples would not improve the results, what do you think?

Sincerely

Grandonia

It is possible that there are non-linear interactions of the variable with other input variables that may influence the output variable. These are hard to impossible to measure.

I recommend developing models with different framings of the problem to see what works best.

Thanks for your insights Jason. Could you explain what you mean with framings? I’m pretty sure that there is no direct relationship with the elements I wanna measure (like Phosphorous) and the spectra, only indirect (P bound to some organic molecules that have a signal in the infrared region).

So do you really think that in this cases more data does not mean better results, since the interactions are so non-linear?

By framings, I mean the choice of the inputs and outputs to the mapping function that you are trying to approximate. This is not just feature selection, but the structure/nature of what is being predicted and from what.

There are many ways to frame a given predictive modeling problem and improved results can come from exploring variations, and even combining predictions from models developed from different framings.

Generally, neural nets do require more data than linear methods. Yes:

https://machinelearningmastery.com/much-training-data-required-machine-learning/

Thanks!

Very helpful. Thanks Jason!

Thanks, I’m glad to hear that.

Hi Jason, Is there more scope for improving performance with ensembling if I’m already using an ensemble model like gradient boosting regressor which has been tuned?

Yes, but you will need a model that is quite different with regard to features or modeling approach (e.g. uncorrelated errors).

any suggestions as to appropriate algorithm to use to improve lottery predictions?

The lottery is random, not predictable.

Can you suggest some good links for generating new features or doing feature engineering using statistics?

Thanks in Advance!!

Perhaps start here:

https://machinelearningmastery.com/discover-feature-engineering-how-to-engineer-features-and-how-to-get-good-at-it/

Hi Jason great pathway for the thought process.

Do ensemble models tend to overfit quickly? I mean when combining predictions on, say, a binary classification task with an output of 0 or 1.

I have tried ensembling using gradient boosting and achieved an accuracy of 0.99-1. Do model predictions need to be uncorrelated in order to be combined?

How should we choose which models to combine?

Not generally. It can be easy to overfit due to using information from the test set in creating the ensemble though. Be careful.

Hi Jason,

Do you have any suggestions for imbalanced sequence labeling with LSTM?

Sequence of length N to sequence of length N.

Binary classification.

One class is highly imbalanced and the model is overfitting badly!

A good start would be to carefully choose a performance measure, such as AUC or ROC Curves.
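To show why the choice of measure matters here, the sketch below computes ROC AUC directly from its definition (the chance that a random positive row is scored above a random negative row) and compares it with accuracy. The labels and the constant-score "model" are made up for illustration, and `roc_auc` is a hypothetical helper rather than a library function.

```python
def roc_auc(labels, scores):
    """Probability that a random positive row is scored above a random negative row."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("ROC AUC needs at least one row of each class")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))

# Made-up data: 2 positives among 10 rows, and a model that scores every row 0.1.
labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
constant_scores = [0.1] * 10

# Threshold the scores at 0.5 to get class predictions, then measure accuracy.
predicted = [1 if s >= 0.5 else 0 for s in constant_scores]
accuracy = sum(1 for y, p in zip(labels, predicted) if y == p) / len(labels)

print("Accuracy:", accuracy)
print("ROC AUC:", roc_auc(labels, constant_scores))
```

A model that ignores the minority class can still look good on accuracy, while its ROC AUC stays at the no-skill level of 0.5.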

Hi Jason,

Awesome pointers. I will try to work with some of them. I hope they work for me, I am struggling with the exact same performance levels, although I have changed data views and altered some algorithm parameters as well.

Thanks.

Hi,

Thank you for your information. I have a question and hope you can help me.

In total, I have 55 data points, which I divided 70:30 into training and testing data.

However, the training accuracy is high, while the testing accuracy is very poor.

I have 13 factors as input and 1 target for model (I use machine learning)

What is my problem here? How I can fix it?

Thank you very much

Perhaps try using k-fold cross validation or leave one out cross validation to get a more robust estimate of model performance.

Generally, I recommend following this process to get the best performance:

https://machinelearningmastery.com/start-here/#process

Thanks for such a nice information.

Thanks.

Using grid search for hyperparameter optimization takes a long time. I ran 2048 combinations and it took nearly 120 hours to find the best fit for a Keras RNN model with an LSTM layer.

Can you please suggest how I can speed up grid search, or is there another approach?

I have some ideas:

Perhaps try running on a faster machine (e.g. AWS EC2)?

Perhaps try fewer combinations of hyperparameters?

Perhaps try a smaller model?

Perhaps try a smaller dataset?

Perhaps evaluate different grids of hyperparameters on different machines?

I hope that helps as a start.

thanks a lot, it is very helpful.

You’re welcome.

Sir, I wish to develop a hybrid classification and regression model for Ebola prediction,

and I have failed to understand how this can be formed. For instance, if 4 algorithms are selected for this hybrid, how can the model work for categorical and sometimes numerical outcomes? Sir, is it possible, and how can it be formed? Your advice is highly welcome.

That is tricky to achieve.

You could have a model that outputs a real value, that is interpreted by another model for a classification outcome?

You could also have a multi-output model, like a neural net, that outputs a real value and classification prediction.

Let me know how you go.

I have a problem which I think can be related to what you say above as,

“1. Improve Performance With Data: Resample Data: resample data to change the size or distribution”.

My problem is a binary classification (0 over 1 classes). Each row of data is a 3D point having three columns of X,Y,Z point coordinates and the rest of columns are attribute values.

Although the classification only uses columns of attribute (features), I keep X,Y,Z at each row(point), because I need them to eventually visualize the results of classification (for example, colorizing points in class 0 as blue while points in class 1 as red).

The points are 3D coordinates of a building, so when I visualize the points I can randomly cut different parts of the building and label them as train/test data. I have randomly cut different parts of building, so I have several train/test data . For each one I do the process (fit model on train data, test on test data and then predict classes of unseen data e.g., another building).

Therefore, I can have different train/test data with different spatial distributions of two classes. (By spatial distributions of two classes, I mean where the two classes are located in the 3D space.) So, as you say, I am doing resampling to find a sample which gives the best performance and use it as the training dataset.

In train/test data called A, the 3D locations of red and blue classes, are different from those in train/test data called B.

The f1-score of A and B on their test set are different but good (high around 90% for either of classes). However, when I predict unseen data with model fitted to A, the f1-score is awful while when I predict unseen data with model fitted to B, the f1-score is good (and visualizing the building gives meaningful predicted classes).

Can I call this change of f1-score for A and B on unseen data as model variance?

By trial and error, I concluded that when classes 0 and 1 are surrounded by each other (spatial distribution of B) I get a good f1-score on unseen data, while when classes 0 and 1 are away from each other I get an awful f1-score on unseen data. Is there any scientific reason for this?

For all cases, I have almost equal number of 0 and 1 classes.

Many Thanks

Model variance would be a measure of the same model fit on different training datasets and evaluated on different test datasets. Variance is the spread of model skill defined by a chosen metric in this scenario.

This might be a case of requiring sufficiently representative training/test sets when fitting/evaluating a model.

thanks for the information

You’re welcome.

Hi Jason,

I want to develop a model that will do hierarchical multi-class classification. In my case each child node can have more than 10 classes, down to level 4. I tried creating a model at the leaf level but had no luck (since there are more than 1000 classes). I tried classification at each level (fewer classes than the flat structure), still no luck. Is there any other way I can optimize the model, maybe creating a model at each node (too many models)?

What best you can suggest ?

Many Thanks

Sorry, I don’t know much about hierarchical multi-class classification. Perhaps hit the literature to see what the state of the art is?

Thanks for the information

You’re welcome.

Sir, when I am running this code I am getting this error. How can I clear the error? I tried the same code in Python 3.6 and was getting the same error. Please reply. >>>

RESTART: C:\Users\poornima\AppData\Local\Programs\Python\Python37-32\ex2.py

Scores: [0.0, 0.0, 0.0, 0.0, 0.0]

Mean Accuracy: 0.000%

>>>

Looks like your model has no skill. Perhaps try debugging your code?

Hi, I am implementing a model for the financial forecast of a European index based on the data of a systemic risk indicator and I have followed your instructions to prepare the data. I have used LSTM but I have a low loss value and a very low accuracy (0.0014). What could be the problem?

You cannot measure accuracy for a regression task:

https://machinelearningmastery.com/faq/single-faq/how-do-i-calculate-accuracy-for-regression
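As a quick illustration of the point, the sketch below scores some made-up forecasts three ways. Accuracy counts exact matches, which real-valued predictions almost never achieve, while error measures such as mean absolute error (MAE) and root mean squared error (RMSE) report how far off the predictions are. The numbers and function names are invented for this example.

```python
from math import sqrt

def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def root_mean_squared_error(actual, predicted):
    """Square root of the average squared difference."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Made-up index values and forecasts.
actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]

# "Accuracy" here is the fraction of forecasts that match the actual value exactly.
exact_matches = sum(1 for a, p in zip(actual, predicted) if a == p) / len(actual)

print("Accuracy (exact matches):", exact_matches)
print("MAE:", mean_absolute_error(actual, predicted))
print("RMSE:", root_mean_squared_error(actual, predicted))
```

Both error measures are in the same units as the value being predicted, which makes them easier to interpret than a near-zero accuracy.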

I implemented J48, how can I measure the effect of lowering the number of examples in the training set

Perhaps prepare a separate dataset with fewer rows, then evaluate the model on the new dataset in Weka.

Do we evaluate the model by considering “precision and recall” or by corrected instance percentage?

You must choose a metric for your project that best captures the goals of your project.

This can help:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Very good article, thank you for sharing.

I have a question and I hope you can help me: Is there any specific method or algorithm for “Invent More Data”? I want to focus on ordinary algorithms, rather than data expansion methods similar to methods such as inverting and mirroring pictures in deep learning. I searched related content, but did not find the ideal result.

Looking forward for your response, thank you!

Thanks!

Yes, it is called oversampling and there are many approaches for this. Perhaps start here:

https://machinelearningmastery.com/smote-oversampling-for-imbalanced-classification/

Supplementary explanation following the above content:

What I have learned about data expansion is as follows:

* Regarding deep learning, such as image processing, data can be increased and expanded through image rotation;

* Regarding other machine learning: 1. Classification problems (unbalanced samples), data can be expanded by algorithms similar to SMOTE; 2. Regression problems? I hope you can help me.

I want to know if there are some methods or algorithms that are sufficient for data expansion, not only to deal with the imbalance of samples, but also to be applicable to more general situations and to increase training data.

Looking forward to your reply, thank you.

Thanks.

The methods for imbalanced classification can be used for any tabular data (e.g. random oversampling).

Also, simply adding gaussian noise to examples can be used to expand the dataset.
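Both ideas can be sketched in plain Python. This is a minimal example under stated assumptions: the rows, labels, noise level (`sigma`) and function names are all made up, and both steps should only ever be applied to the training data, never to the data used to evaluate the model.

```python
import random

def random_oversample(rows, labels, seed=1):
    """Duplicate randomly chosen minority rows until every class has the same count."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    target = max(len(group) for group in by_class.values())
    out_rows, out_labels = [], []
    for label, group in by_class.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        out_rows.extend(group + extra)
        out_labels.extend([label] * target)
    return out_rows, out_labels

def add_gaussian_noise(rows, targets, sigma, copies=1, seed=1):
    """Return the original rows plus noisy copies; each copy keeps its original target."""
    rng = random.Random(seed)
    new_rows, new_targets = list(rows), list(targets)
    for _ in range(copies):
        for row, target in zip(rows, targets):
            new_rows.append([value + rng.gauss(0.0, sigma) for value in row])
            new_targets.append(target)
    return new_rows, new_targets

# Made-up tabular data: three rows of class 0 and one row of class 1.
rows = [[1.0, 2.0], [1.5, 1.8], [5.0, 8.0], [1.2, 2.1]]
labels = [0, 0, 1, 0]

balanced_rows, balanced_labels = random_oversample(rows, labels)
expanded_rows, expanded_targets = add_gaussian_noise(rows, labels, sigma=0.05, copies=2)
print(len(balanced_rows), balanced_labels.count(0), balanced_labels.count(1))
print(len(expanded_rows), len(expanded_targets))
```

The noise level needs to be small relative to the scale of each input variable, so it often makes sense to rescale the inputs first. The same noise function works for regression, because the target is copied unchanged.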

Dear Jason Brownlee, kindly suggest me how to make an expert system in diabetes using the advanced features of WEKA .Is it possible ?

Hi ARKarthikeyan…Thank you for the recommendation for our consideration.