Applied machine learning is typically focused on finding a single model that performs well or best on a given dataset.

Effective use of the model will require appropriate preparation of the input data and hyperparameter tuning of the model.

Collectively, the linear sequence of steps required to prepare the data, tune the model, and transform the predictions is called the modeling pipeline. Modern machine learning libraries like the scikit-learn Python library allow this sequence of steps to be defined and used correctly (without data leakage) and consistently (during evaluation and prediction).

Nevertheless, working with modeling pipelines can be confusing to beginners as it requires a shift in perspective of the applied machine learning process.

In this tutorial, you will discover modeling pipelines for applied machine learning.

After completing this tutorial, you will know:

- Applied machine learning is concerned with more than finding a well-performing model; it also requires finding an appropriate sequence of data preparation steps and steps for the post-processing of predictions.

- Collectively, the operations required to address a predictive modeling problem can be considered an atomic unit called a modeling pipeline.

- Approaching applied machine learning through the lens of modeling pipelines requires a change in thinking from evaluating specific model configurations to sequences of transforms and algorithms.

Let’s get started.

A Gentle Introduction to Machine Learning Modeling Pipelines

Photo by Jay Huang, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Finding a Skillful Model Is Not Enough

- What Is a Modeling Pipeline?

- Implications of a Modeling Pipeline

Finding a Skillful Model Is Not Enough

Applied machine learning is the process of discovering the model that performs best for a given predictive modeling dataset.

In fact, it’s more than this.

In addition to discovering which model performs the best on your dataset, you must also discover:

- Data transforms that best expose the unknown underlying structure of the problem to the learning algorithms.

- Model hyperparameters that result in a good or best configuration of a chosen model.

There may also be additional considerations such as techniques that transform the predictions made by the model, like threshold moving or model calibration for predicted probabilities.

As such, it is common to think of applied machine learning as a large combinatorial search problem across data transforms, models, and model configurations.

This can be quite challenging in practice, as it requires that the sequence of one or more data preparation schemes, the model, the model configuration, and any prediction transform schemes be evaluated consistently and correctly on a given test harness.

Although tricky, it may be manageable with a simple train-test split but becomes quite unmanageable when using k-fold cross-validation or even repeated k-fold cross-validation.

The solution is to use a modeling pipeline to keep everything straight.

What Is a Modeling Pipeline?

A pipeline is a linear sequence of data preparation operations, modeling operations, and prediction transform operations.

It allows the sequence of steps to be specified, evaluated, and used as an atomic unit.

- Pipeline: A linear sequence of data preparation and modeling steps that can be treated as an atomic unit.

To make the idea clear, let’s look at two simple examples:

The first example uses data normalization for the input variables and fits a logistic regression model:

- [Input], [Normalization], [Logistic Regression], [Predictions]

The second example standardizes the input variables, applies RFE feature selection, and fits a support vector machine.

- [Input], [Standardization], [RFE], [SVM], [Predictions]
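As a rough sketch, the two examples above might be written with the scikit-learn Pipeline class as follows. The synthetic dataset from make_classification, the step names, and the choice of logistic regression as the estimator inside RFE (keeping five features) are assumptions made for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler
from sklearn.svm import SVC

# synthetic dataset, used for illustration only (an assumption, not from the tutorial)
X, y = make_classification(n_samples=200, n_features=10, n_informative=5, random_state=1)

# [Input] -> [Normalization] -> [Logistic Regression] -> [Predictions]
pipeline_a = Pipeline(steps=[
    ("normalize", MinMaxScaler()),
    ("model", LogisticRegression()),
])

# [Input] -> [Standardization] -> [RFE] -> [SVM] -> [Predictions]
# RFE needs an estimator that exposes coefficients or importances,
# so logistic regression is assumed here to rank the features
pipeline_b = Pipeline(steps=[
    ("standardize", StandardScaler()),
    ("rfe", RFE(estimator=LogisticRegression(), n_features_to_select=5)),
    ("model", SVC()),
])

# each pipeline is fit and used like a single model
for name, pipeline in [("A", pipeline_a), ("B", pipeline_b)]:
    pipeline.fit(X, y)
    print(name, pipeline.predict(X[:5]))
```

Calling fit() on a pipeline fits each transform in order and then the model; calling predict() applies the already-fit transforms to the new rows before the model makes its predictions.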

You can imagine other examples of modeling pipelines.

As an atomic unit, the pipeline can be evaluated using a preferred resampling scheme such as a train-test split or k-fold cross-validation.

This is important for two main reasons:

- Avoid data leakage.

- Consistency and reproducibility.

A modeling pipeline avoids the most common type of data leakage, where data preparation techniques, such as scaling input values, are fit on the entire dataset before it is split. This is data leakage because it shares knowledge of the test dataset (such as observations that contribute to a mean or maximum known value) with the training dataset, and in turn, may result in overly optimistic estimates of model performance.

Instead, data transforms must be prepared on the training dataset only, then applied to the training dataset, test dataset, validation dataset, and any other datasets that require the transform prior to being used with the model.
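To make the rule concrete, here is a minimal sketch of what "prepared on the training dataset only" looks like when done by hand. The synthetic dataset, the split size, and the choice of MinMaxScaler are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=5, random_state=1)

# split first, so the test rows never influence the transform
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=1)

# learn the per-column minimum and maximum from the training rows only
scaler = MinMaxScaler()
scaler.fit(X_train)

# apply the same fitted transform to both datasets
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)

# the test data may fall slightly outside [0, 1]; that is expected
print(X_train_scaled.min(), X_train_scaled.max())
print(X_test_scaled.min(), X_test_scaled.max())
```

A pipeline performs this fit-on-train, apply-everywhere bookkeeping automatically for every split of a resampling procedure.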

A modeling pipeline ensures that the sequence of data preparation operations performed is reproducible.

Without a modeling pipeline, the data preparation steps may be performed manually twice: once for evaluating the model and once for making predictions. Any changes to the sequence must be kept consistent in both cases, otherwise differences will impact the capability and skill of the model.

A pipeline ensures that the sequence of operations is defined once and is consistent when used for model evaluation or making predictions.
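The sketch below shows one way this might look in practice: the same pipeline object is first evaluated with repeated k-fold cross-validation and then fit on all available data for making predictions. The dataset, the step names, and the number of folds and repeats are assumptions chosen for illustration.

```python
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

# the sequence of operations is defined once
pipeline = Pipeline(steps=[
    ("standardize", StandardScaler()),
    ("model", LogisticRegression()),
])

# use 1: evaluate the pipeline; the scaler is re-fit on the training folds of each split
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring="accuracy", cv=cv)
print("Accuracy: %.3f (%.3f)" % (mean(scores), std(scores)))

# use 2: fit the same pipeline on all data and predict a new row
pipeline.fit(X, y)
row = X[:1]  # stands in for a new, unseen observation
print("Predicted class:", pipeline.predict(row)[0])
```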

The Python scikit-learn machine learning library provides a machine learning modeling pipeline via the Pipeline class.

Implications of a Modeling Pipeline

The modeling pipeline is an important tool for machine learning practitioners.

Nevertheless, there are important implications that must be considered when using it.

The main confusion for beginners when using pipelines comes in understanding what the pipeline has learned or the specific configuration discovered by the pipeline.

For example, a pipeline may use a data transform that configures itself automatically, such as the RFECV technique for feature selection.

- When evaluating a pipeline that uses an automatically configured data transform, what configuration does it choose? Or, when fitting this pipeline as a final model for making predictions, what configuration did it choose?

The answer is, it doesn’t matter.
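A minimal sketch of such a pipeline is shown below, assuming a synthetic dataset and a decision tree both inside RFECV and as the final model. Each cross-validation fold may select a different number of features, and the evaluation only reports the skill of the pipeline as a whole.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFECV
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.tree import DecisionTreeClassifier

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=10, n_informative=5, random_state=1)

# RFECV chooses the number of features itself using an internal cross-validation
pipeline = Pipeline(steps=[
    ("select", RFECV(estimator=DecisionTreeClassifier(random_state=1), cv=3)),
    ("model", DecisionTreeClassifier(random_state=1)),
])

# the pipeline is evaluated as a unit; we never look at what each fold selected
scores = cross_val_score(pipeline, X, y, scoring="accuracy", cv=5)
print("Mean accuracy: %.3f" % scores.mean())
```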

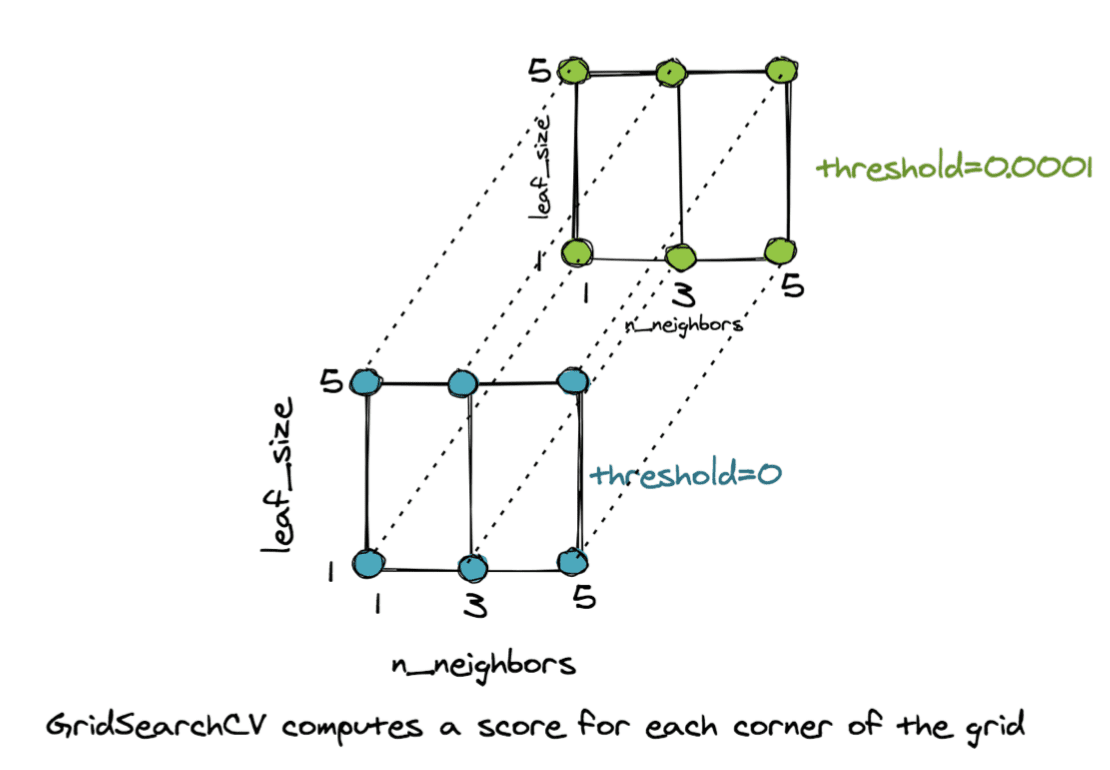

Another example is the use of hyperparameter tuning as the final step of the pipeline.

The grid search will be performed on the data provided by any prior transform steps in the pipeline and will then search for the best combination of hyperparameters for the model using that data, then fit a model with those hyperparameters on the data.

- When evaluating a pipeline that grid searches model hyperparameters, what configuration does it choose? Or, when fitting this pipeline as a final model for making predictions, what configuration did it choose?

The answer again is, it doesn’t matter.
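As an illustration, a grid search can be placed as the last step of a pipeline as sketched below. The SVM model, the small grid of C values, and the synthetic dataset are assumptions chosen to keep the example short.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

# the grid search is the final step and receives the standardized data
pipeline = Pipeline(steps=[
    ("standardize", StandardScaler()),
    ("search", GridSearchCV(SVC(), param_grid={"C": [0.1, 1.0, 10.0]}, cv=3)),
])

# evaluation: the search is repeated inside each outer fold (nested cross-validation)
scores = cross_val_score(pipeline, X, y, scoring="accuracy", cv=5)
print("Mean accuracy: %.3f" % scores.mean())

# final model: the search runs once more on all data, then the best model is refit
pipeline.fit(X, y)

# the chosen value can be inspected out of curiosity, but it is not needed to use the pipeline
print(pipeline.named_steps["search"].best_params_)
```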

The same answer applies when using a threshold moving or probability calibration step at the end of the pipeline.
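For probability calibration, one possible sketch wraps the model in scikit-learn's CalibratedClassifierCV and uses the wrapper as the final pipeline step. The SVM model, the sigmoid method, the Brier score metric, and the synthetic dataset are assumptions for illustration; threshold moving is not shown here.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

# the calibration wrapper fits the SVM and learns a mapping from its scores to probabilities
pipeline = Pipeline(steps=[
    ("standardize", StandardScaler()),
    ("model", CalibratedClassifierCV(SVC(), method="sigmoid", cv=3)),
])

# evaluate the calibrated probabilities; scores are negated Brier scores (closer to 0 is better)
scores = cross_val_score(pipeline, X, y, scoring="neg_brier_score", cv=5)
print("Mean negative Brier score: %.3f" % scores.mean())
```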

The reason is the same reason that we are not concerned about the specific internal structure or coefficients of the chosen model.

For example, when evaluating a logistic regression model, we don’t need to inspect the coefficients chosen on each k-fold cross-validation round in order to choose the model. Instead, we focus on its out-of-fold predictive skill.

Similarly, when using a logistic regression model as the final model for making predictions on new data, we do not need to inspect the coefficients chosen when fitting the model on the entire dataset before making predictions.

We can inspect and discover the coefficients used by the model as an exercise in analysis, but it does not impact the selection and use of the model.
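If you do want to look inside as an exercise in analysis, a fitted scikit-learn pipeline exposes its steps by name. The sketch below assumes a synthetic dataset and hypothetical step names.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# synthetic dataset, used for illustration only
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

pipeline = Pipeline(steps=[
    ("standardize", StandardScaler()),
    ("model", LogisticRegression()),
])
pipeline.fit(X, y)

# retrieve the fitted model by its step name and report its coefficients
coefficients = pipeline.named_steps["model"].coef_[0]
for i, c in enumerate(coefficients):
    print("feature %d: %.3f" % (i, c))
```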

This same answer generalizes when considering a modeling pipeline.

We are not concerned about which features may have been automatically selected by a data transform in the pipeline. We are also not concerned about which hyperparameters were chosen for the model when using a grid search as the final step in the modeling pipeline.

In all three cases: the single model, the pipeline with automatic feature selection, and the pipeline with a grid search, we are evaluating the “model” or “modeling pipeline” as an atomic unit.

The pipeline allows us as machine learning practitioners to move up one level of abstraction and be less concerned with the specific outcomes of the algorithms and more concerned with the capability of a sequence of procedures.

As such, we can focus on evaluating the capability of the algorithms on the dataset, not the product of the algorithms, i.e. the model. Once we have an estimate of the pipeline’s performance, we can apply the pipeline and be confident that we will get similar performance, on average.

It is a shift in thinking and may take some time to get used to.

It is also the philosophy behind modern AutoML (automatic machine learning) techniques that treat applied machine learning as a large combinatorial search problem.

Summary

In this tutorial, you discovered modeling pipelines for applied machine learning.

Specifically, you learned:

- Applied machine learning is concerned with more than finding a well-performing model; it also requires finding an appropriate sequence of data preparation steps and steps for the post-processing of predictions.

- Collectively, the operations required to address a predictive modeling problem can be considered an atomic unit called a modeling pipeline.

- Approaching applied machine learning through the lens of modeling pipelines requires a change in thinking from evaluating specific model configurations to sequences of transforms and algorithms.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Very nice explanation of the least popular topic. I am happy that we are already doing the majority of the steps, but I also got to know some new concepts.

Thanks.

I really appreciate everything that you share. Machine learning plays an integral part in solving the mystery of consumer preferences. Very Useful post.

Thank you.

I really appreciate this excellent article, which is relevant to my work. It gives a deep description of the subject, which helped me a lot.

Thanks, I’m happy to hear that.

Thanks for the great series.

I am using make_pipeline in part because I want to use the scalers that are available in make_column_transformer. This has worked for me when I use TransformedTargetRegressor inside the pipeline, but my goal is to use RNN.

I have tried adding LSTM, RNN, and SimpleRNN layers into my pipeline but they seem to fail because they do not have both fit() and transform() functions available. I get a “Last step of Pipeline should implement fit” error.

Is there a way to get RNN to work within a pipeline? Or alternatively, is there a way to use make_column_transformer outside of a pipeline?

It might be easier to perform the transforms with each data prep object manually.
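As a rough sketch of that suggestion, a column transformer created with make_column_transformer can be fit and applied on its own, outside of a pipeline. The random data, the column indices, and the choice of scalers below are assumptions for illustration; the prepared arrays would then be reshaped and passed to the network separately.

```python
import numpy as np
from sklearn.compose import make_column_transformer
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# random data standing in for real training and test sets (illustration only)
rng = np.random.default_rng(1)
X_train = rng.normal(size=(100, 4))
X_test = rng.normal(size=(20, 4))

# standardize the first two columns and normalize the last two
ct = make_column_transformer(
    (StandardScaler(), [0, 1]),
    (MinMaxScaler(), [2, 3]),
)

# fit on the training data only, then apply the same transform to the test data
X_train_prepared = ct.fit_transform(X_train)
X_test_prepared = ct.transform(X_test)
print(X_train_prepared.shape, X_test_prepared.shape)
```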

Thank you for the great article with a good explanation of the concepts.

You’re welcome!

As always, thank you for the article, sir.

I’m always confused any time you mention pipelines in your old articles. Usually you give a reference when talking about something (say, the article mentions cross-validation, then you give a link to your article about it). Using the search bar, I found out that this article about pipelines is quite new. If possible, in the future when you update your articles that mention pipelines, you could link this article as a reference. Hopefully that will help others avoid the confusion I had.

Once again, thank you. Your blog has been very helpful for me to learn about machine learning.

Thanks for the suggestion!

Please, I am a student carrying out research on the evaluation of machine learning classifiers for mobile malware detection, and from the research I have found that dealing with such a large amount of training data calls for a guide on how to use a modeling pipeline to ease the work. Thanks.

Thank you Ahmadu for your feedback!

I generally recommend working with a small sample of your dataset that will fit in memory.

I recommend this because it will accelerate the pace at which you learn about your problem:

- It is fast to test different framings of the problem.

- It is fast to summarize and plot the data.

- It is fast to test different data preparation methods.

- It is fast to test different types of models.

- It is fast to test different model configurations.

The lessons that you learn with a smaller sample often (but not always) translate to modeling with the larger dataset.

You can then scale up your model later to use the entire dataset, perhaps trained on cloud infrastructure such as Amazon EC2.

There are also many other options if you are interested in training with a large dataset. I list 7 ideas in this post:

7 Ways to Handle Large Data Files for Machine Learning

Hi, I wanted to make sure if I understand this correctly.

- To make a final model (without using a pipeline), we fit the whole data into the model with the determined hyperparameter configurations.

- To make a final pipeline model, we fit the whole data into the pipeline and search for the best hyperparameter configurations again by using GridSearchCV.

Hi Timotius…Your understanding is correct! I would recommend that you proceed with your suggestion and let us know what you find.